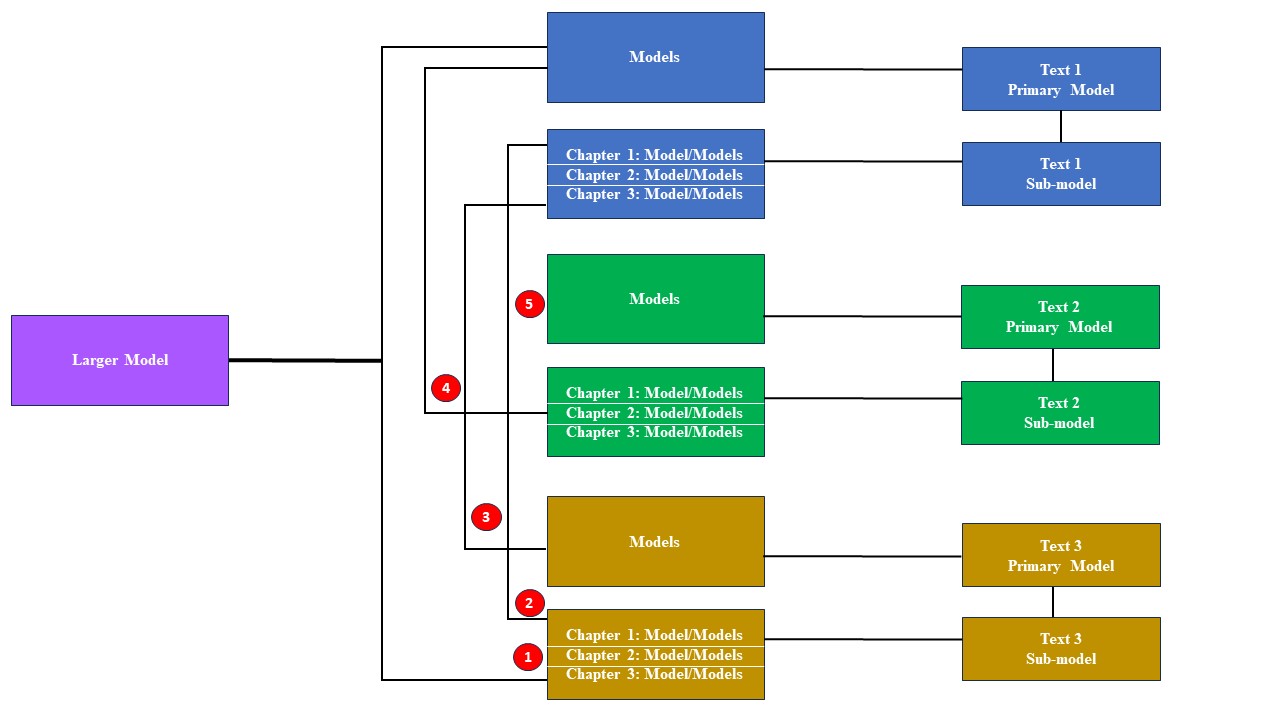

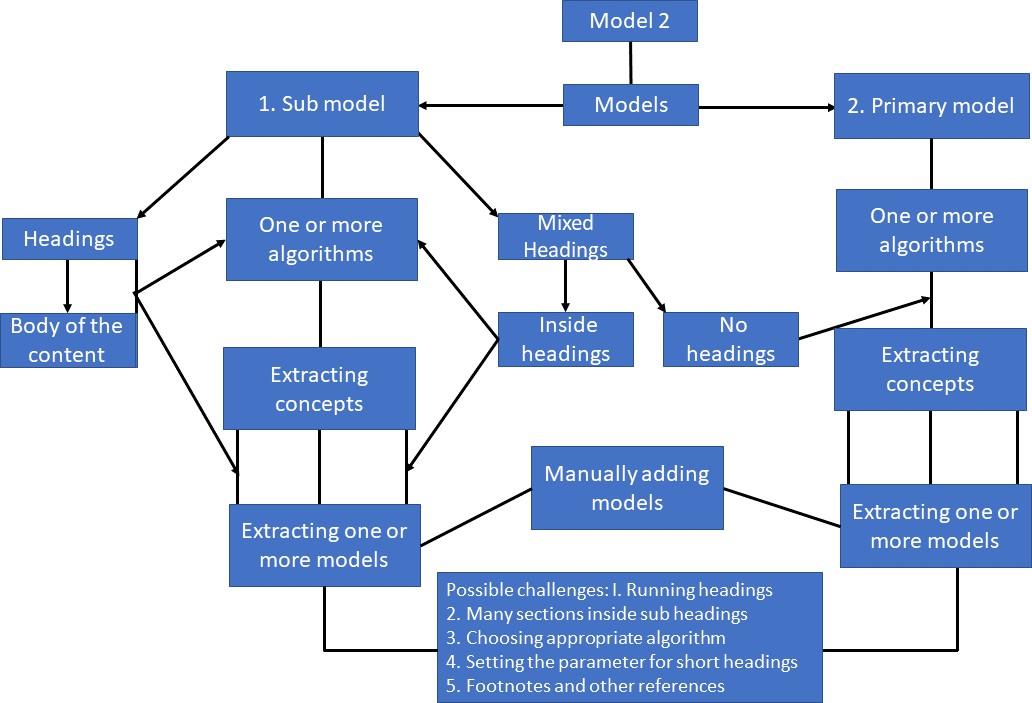

Distribution of concepts in sub-model and primary model

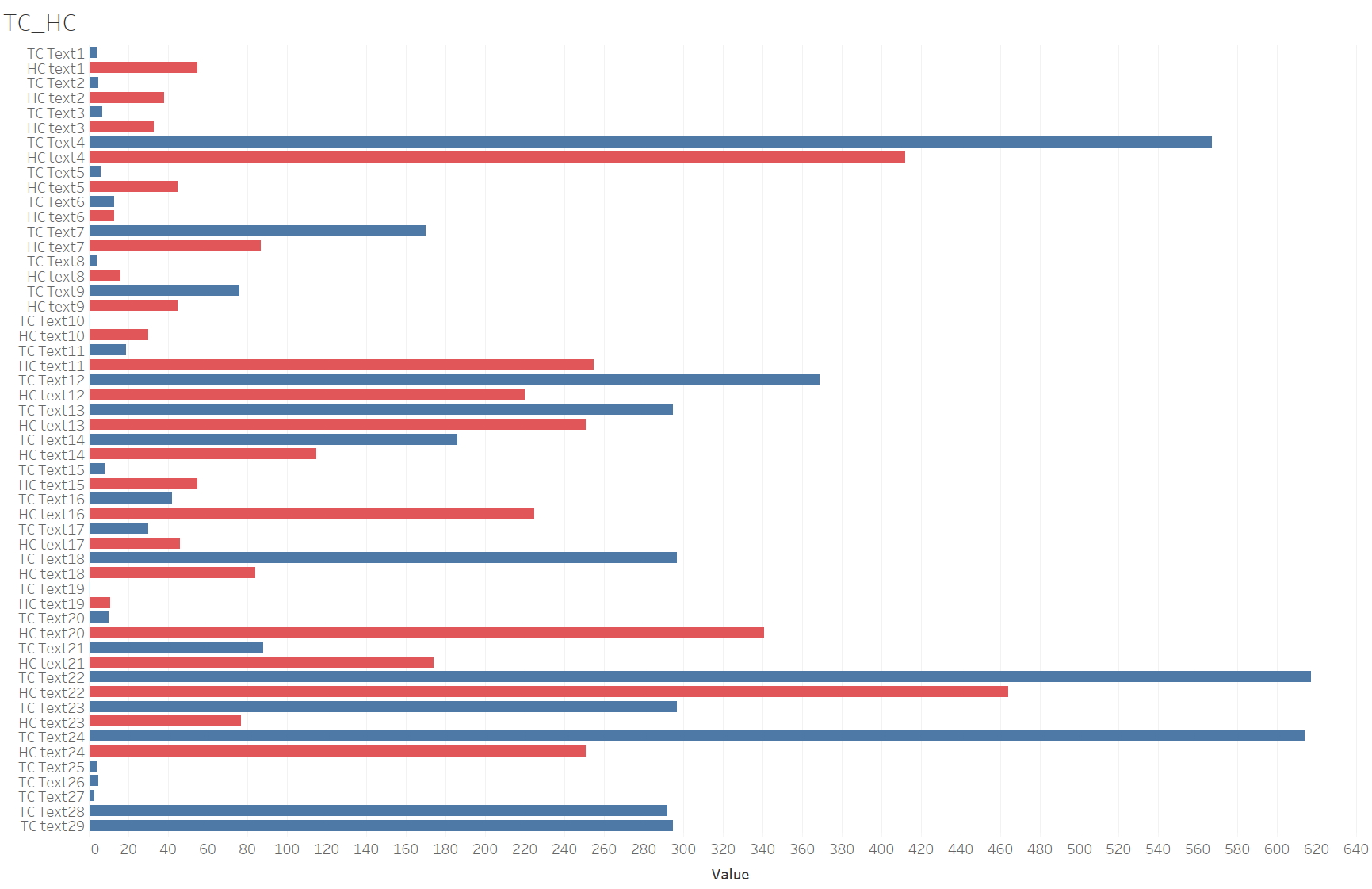

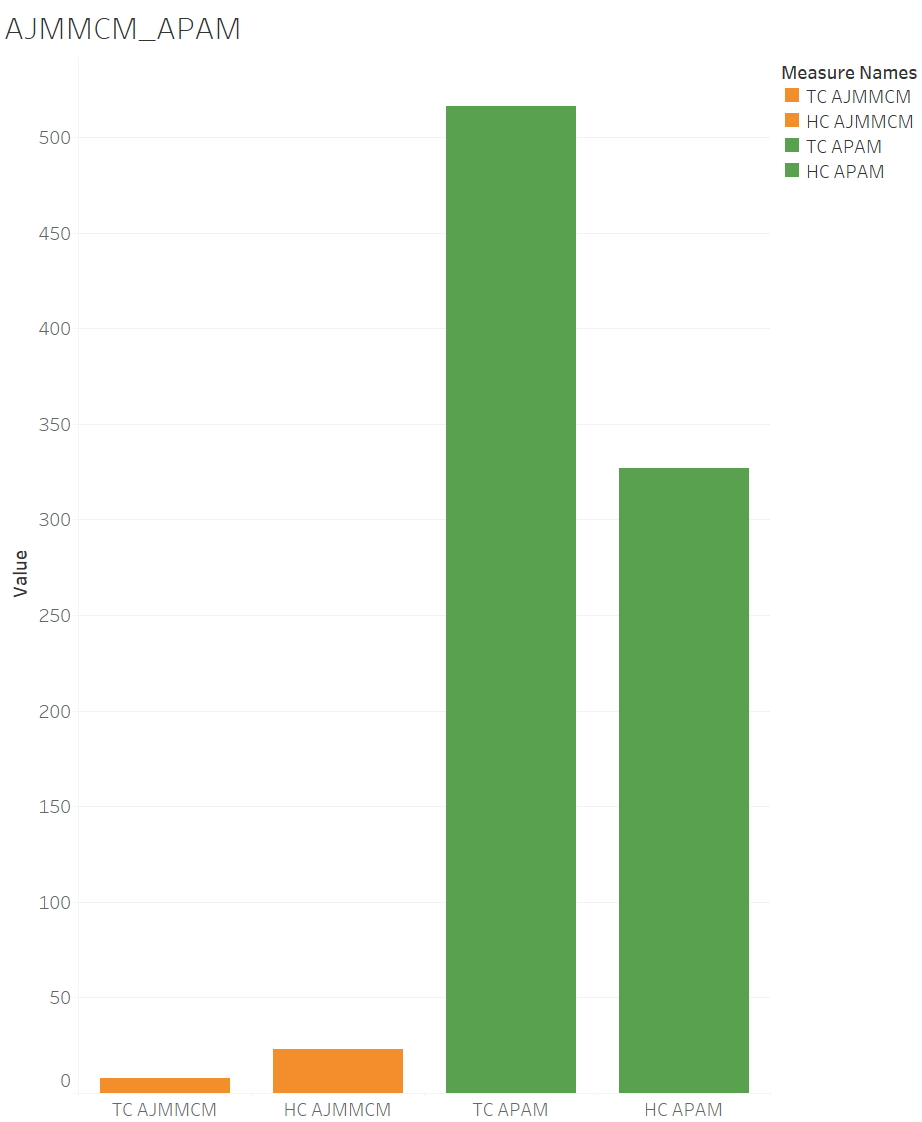

Investigating the number of clusters the models obtain for both heading clusters (hereafter HC) and text clusters (hereafter TC) helps us discern the complexity and nature of the corpus before we study the cluster labels. Although the concepts presented in the headings are pertinent to the text, the headings often represent unique concepts that may not recur throughout the text. When the model identifies unique words and their similarities based on distance, the number of clusters for each heading could therefore be greater, since those concepts may be unique and dense only within their respective headings rather than across the entire text. Accordingly, Figure 4, which depicts all the HC in red and the TC in blue, reveals that the former outnumber the latter. To understand this difference, however, we will discuss how the concepts distributed across the headings and the text vary, using selected texts from the corpus.

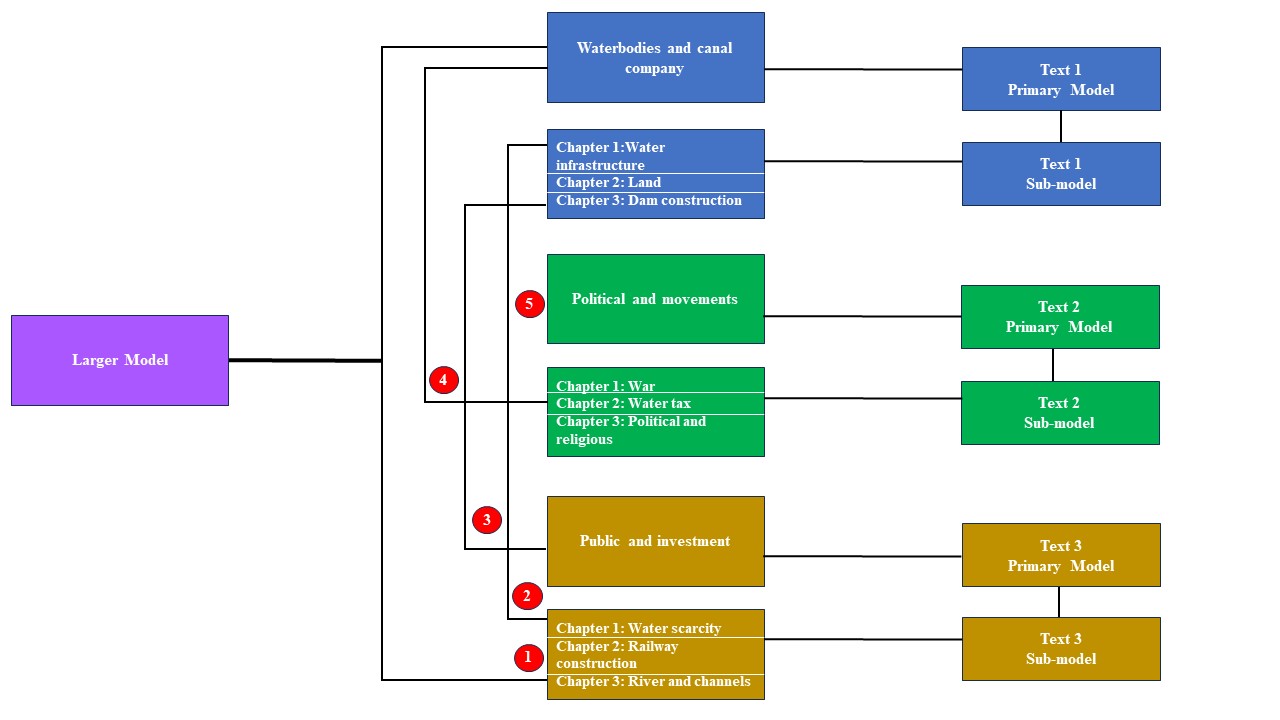

Figure 5 shows the HC and TC of Francis Buchanan’s A Journey from Madras Through the Countries of Mysore, Canara, and Malabar (1807) (hereafter AJMMCM), Volume 1, and the Annual Report On The Administration Of The Madras Presidency 1862–63[3] (hereafter APAM). AJMMCM has more HC than TC, whereas APAM has more TC than HC. Bernard Cohn states,

For many British officials, India was a vast collection of numbers. This mentality began in the early seventeenth century with the arrival of British merchants who compiled and transmitted lists of products, prices, customs and duties, weights and measures, and the values of various coins.

[Cohn 1996, 8]

As he rightly notes, such numbers were used to govern and control the colonized people and their land. Nicholas B. Dirks, in his Foreword to Cohn’s Colonialism and Its Forms of Knowledge: The British in India, observes that “[c]olonial knowledge both enabled conquest and was produced by it; in certain important ways, knowledge was what colonialism was all about” [Cohn 1996, ix]. As Dirks suggests, British officials were keen on surveying and documenting every aspect of Indian society.

In the selected text AJMMCM, the Scottish surgeon and botanist Buchanan surveyed the recently annexed regions of Mysore, Canara, and Malabar in southern India at the beginning of the nineteenth century. The survey covers the physical and human geography of the region, commerce, agriculture, arts, culture, indigenous religions, society, customs, and natural history. In Volume 1, he emphasizes agricultural aspects, including irrigation systems, the variety of crops and their cultivation, the condition of the soil, and more. AJMMCM is divided into six long chapters with specific subheadings. APAM, on the other hand, contains thirty-six headings and offers diverse information on legislative, judicial, and criminal justice matters, as well as topics related to forest conservancy, plantations, and irrigation. The aim of AJMMCM is to survey the features of the recently annexed southern regions, and its concepts and themes remain consistent throughout its lengthy descriptive narrative. Hence, APA found only a few crucial concepts to cluster in the primary model, whereas in the sub-models it clustered the unique, heterogeneous concepts of each heading, which might not overlap with other parts of the text. Conversely, APAM contains numerous concepts and much information, presented concisely in an analytical narrative. Therefore, APA could not find many clusters in the headings but, in effect, grouped many diverse concepts that appear throughout the text.

Nevertheless, this helps us understand how the distribution of concepts can vary between individual headings and the entire text. Corpas Pastor and Seghiri rightly point out that “[t]he number of tokens and/or documents a specialized corpus should contain may vary in relation to the languages, domains, and textual genres involved, as well as to the objectives set for a specific analysis (i.e., a corpus should provide enough evidence for the researchers’ purposes and aims)” [Corpas Pastor and Seghiri 2010, 135]. This also draws attention to how the texts for this corpus were selected. As I mentioned in the introduction, my aim is to mine details about water from British colonial India documents, particularly the texts, reports, and surveys of the Madras Presidency. I aggregated texts that might contain any data about water. The two texts discussed above, for instance, differ in rationale and aim, yet both contain much data about water.

Studying the models

To comprehend the semantics of the mined clusters, I explored the labels of clusters and its exemplar feature of APA. According to Frey and Dueck, “[a] common approach is to utilize data to learn a set of centers such that the sum of squared errors between data points and their nearest centers is small. When the centers are chosen from actual data points, they are referred to as ‘exemplars’” [

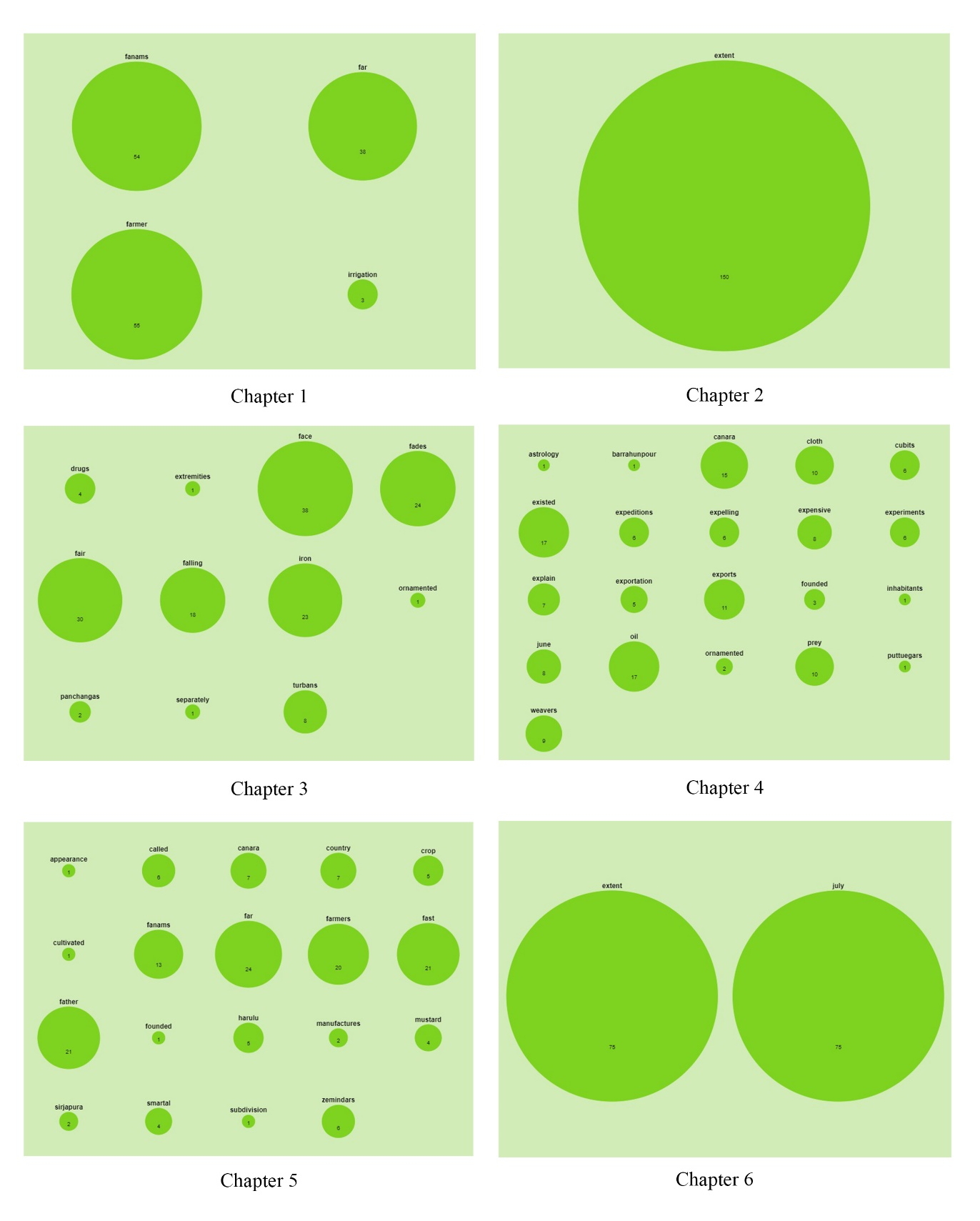

Frey et al. 2007, 972]. Exemplars serve as representatives of their respective clusters and also help us to build the semantic model of clusters. However, a comprehensive exploration of the entire labels of clusters and exemplars extends beyond the scope of this article; therefore, I will closely study AJMMCM. The potential sub-models derived from the six chapters of AJMMCM encompass fanams, farmer, irrigation, drugs, fades, fair, iron, turban, canara, cloth, cubits, oil, prey, weavers, crop, zemindars, extent, and july (see

Figure 6). These exemplars and their clusters succinctly encapsulate the distinct concepts of AJMMCM. For instance, the exemplars fanams, fades, cloth, turbans, and weaver and their significant clustered terms such as families, brahmans, devangas, villages, natives, strata, customs, cotton, silver

[4] etc. from Chapters 1, 3 and 4 signify Buchanan’s survey of social and cultural milieus of southern India. These models hold relevance for research inquiries concerning socio-cultural settings in southern India. Similarly, the exemplars irrigation, july, crop, harulu, farmers, cultivation, extent, country, and zemindars and their clustered terms such as ragy, casts, seed, rice, corn, buffalo, water, field, straw, plough, soil, barugu, weights, grain, sugar, bees, tobacco

[5] etc. from all six chapters convey Buchanan’s detailed study of the agricultural system in the southern regions. These models offer valuable insights for research related to environmental, agrarian, and economic history.
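To make the notion of an exemplar concrete, the following minimal sketch runs scikit-learn’s `AffinityPropagation` on a handful of term vectors. The six terms and their three-dimensional vectors are invented stand-ins, since the way the study’s term vectors were built is not specified here; the sketch illustrates the exemplar mechanism, not the author’s pipeline. Each exemplar it reports is an actual data point (a term) chosen to represent its cluster, in line with Frey and Dueck’s definition.

```python
import numpy as np
from sklearn.cluster import AffinityPropagation

# Hypothetical terms and toy vectors; real term vectors would come from the corpus.
terms = ["irrigation", "water", "tank", "cloth", "cotton", "weavers"]
vectors = np.array([
    [1.0, 0.9, 0.0],
    [0.9, 1.0, 0.1],
    [1.0, 0.8, 0.0],
    [0.0, 0.1, 1.0],
    [0.1, 0.0, 0.9],
    [0.0, 0.0, 1.0],
])

# Default affinity is negative squared Euclidean distance; the default
# preference is the median similarity, which governs how many exemplars emerge.
ap = AffinityPropagation(random_state=0).fit(vectors)

# cluster_centers_indices_ holds the row index of each cluster's exemplar.
clusters = {}
for k, idx in enumerate(ap.cluster_centers_indices_):
    members = [t for t, label in zip(terms, ap.labels_) if label == k]
    clusters[terms[idx]] = members

for exemplar, members in clusters.items():
    print(f"{exemplar}: {members}")
```

Raising `preference` toward zero tends to produce more, smaller clusters, and lowering it produces fewer; this is one of the levers that can make a model favour either broad or heading-specific concepts.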

It is crucial to acknowledge that these exemplars should not be relied upon entirely, as they are neither the topic nor the title of their clusters; they merely function as representatives and provide only a glimpse into the clusters. To understand the model, one must examine the terms within the clusters. Moreover, not all exemplars are useful or give an immediate sense of their clusters. For instance, among the exemplars mentioned above, extent, fades, and july contributed nothing meaningful to constructing the concepts. However, a close examination of the terms clustered under these exemplars, including customs, measures, plough, sows, sesame, palm gardens, cultivation, soil, bushes, jola, and barugu, again points to the extended discussion of the agrarian culture of the regions. For example, in the passage below, Buchanan explains the Jola crop, its kinds, and its cultivation.

Of these crops Jola (Holcus sorghum) is the greatest. There are two kinds of it, the white and the red which are sometimes kept separate, and sometimes sown mixed. The red is the most common. Immediately after cutting the Vaisaka crop of rice, plough four times in the course of twenty days.

[Buchanan 1807, 283]

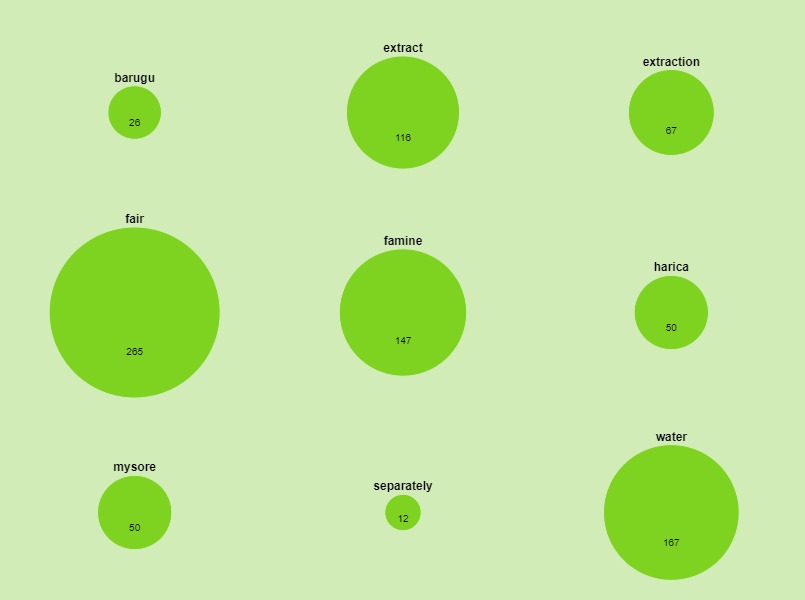

There are nine clusters in the primary model, and the important exemplars include barugu, extract, fair, famine, harica[6], and water (see Figure 7). These exemplars and their terms, such as rice, fanam, sugarcane, cultivation, irrigation, jola, ragy, july, bushes, plough, seed, trade, dry, and land, indeed convey the agricultural facets that form the primary concept of the text. Although a close study of the terms in the model can be associated with the pivotal concept of the text, the nuanced, heterogeneous concepts extracted in the sub-models are disregarded in the primary model.

For example, Buchanan demonstrated a keen interest in surveying autochthonous resources, as manifested in exemplars such as drugs, oil, and iron, and the text features subheadings specifically addressing these resources. The term drug occurs 11 times, oil 111 times, and iron 68 times in the text. In the clustered terms of the primary model, however, oil appears only 5 times and iron once. Remarkably, the term drug does not appear at all, owing to its sparse occurrence.

Drugs. A kind of drug merchants at Bangalore, called Gandhaki, trade to a considerable extent. Some of them are Banijigaru, and others are Ladaru, a kind of Mussulmans. They procure the medicinal plants of the country by means of a set of people called Pacanat Jogalu, who have huts in the woods, and, for leave to collect the drugs, pay a small rent to the Gaudas of the villages. They bring the drugs hither in small caravans of ten or twelve oxen, and sell them to the Gandhaki, who retail them. None of them are exported.

[Buchanan 1807, 204]

In the excerpt above, Buchanan describes how the Gandhaki, drug merchants in Bengaluru, procure drugs from local suppliers, the Pacanat Jogalu, who gather them in the woods. He also explains in detail the manufacture, trade, and uses of various oils, including coconut oil, sesame oil, castor oil, bassia oil, and hoingay oil. Likewise, Buchanan examines natural minerals. In a later passage, quoted below, he narrates how a specific local community acquires material for making iron, and he devotes a substantial portion of Chapter 3 to the complete iron production process.

Iron forges. About two miles from Naiekan Eray, a torrent, in the rainy season, brings down from the hills a quantity of iron ore in the form of black sand, which in the dry season is smelted. The operation is performed by Malawanlu, the Telinga name for the cast called Parriar by the natives of Madras. Each forge pays a certain quantity of iron for permission to carry on the work.

[Buchanan 1807]

Owing to the extensive discussions on these resources within the Chapters, APA has selected drugs, oil, and iron clusters based on their density in the corresponding headings in the sub-model. These diverse concepts were disregarded in the primary model. On the contrary, as detailed in the

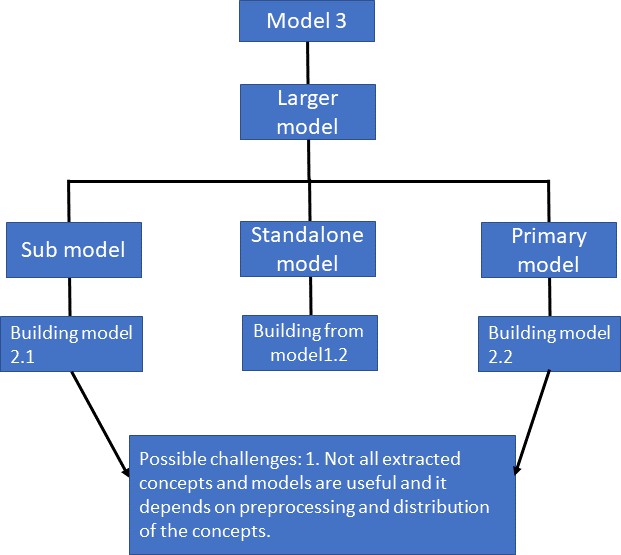

initial subsection of this section, certain texts exhibit more clusters in the primary model than in the sub-model, or in the case of the APAM, wherein crucial concepts like “settlement” have been omitted from its sub-model. Nevertheless, when scrutinizing the British colonial India corpus, the integration of these models demonstrates greater efficacy in formalizing the heterogeneous concepts.
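The gap between how often a word occurs in a text and how often it surfaces among a model’s clustered terms can be checked with simple counting. The sketch below is a hypothetical illustration, not the procedure that produced the figures for drug, oil, and iron reported in this section: the sample sentence and cluster contents are invented, tokenization is a plain lowercase regular expression with no stemming (so “drugs” and “drug” are counted separately), and clustered terms are allowed to be multi-word phrases such as palm gardens.

```python
import re
from collections import Counter


def text_counts(text, targets):
    """Count occurrences of each target word in the running text (no stemming)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter(tokens)
    return {t: counts[t] for t in targets}


def clustered_counts(clusters, targets):
    """Count occurrences of each target word across all clusters' term lists.

    Clustered terms may be multi-word phrases, so each term is split into words.
    """
    counts = Counter(
        word
        for members in clusters.values()
        for term in members
        for word in term.lower().split()
    )
    return {t: counts[t] for t in targets}


# Invented sample data for illustration only.
sample_text = ("The oil mill pressed sesame oil. Iron ore is smelted. "
               "A drug merchant sells one drug.")
sample_clusters = {
    "rice": ["rice", "sesame oil", "jola"],
    "iron": ["iron", "ore", "oil mill"],
}
targets = ["drug", "oil", "iron"]

print(text_counts(sample_text, targets))
print(clustered_counts(sample_clusters, targets))
```

A target that is reasonably frequent in `text_counts` but near zero in `clustered_counts` is a candidate concept that the primary model has overshadowed and that a sub-model may recover.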

The model, in general, is designed to identify overarching patterns in the data. However, it is imperative to incorporate the sub-model in the exploration, and theoretical DH should address these crucial considerations when characterizing formal models for examining a complex corpus. Nevertheless, building computational models to extract primary and sub-models was challenging, because big data models are generally used to discern trends within data and generate novel insights [Bhattacharyya 2017]. Such models may neglect non-trending elements that are nonetheless part of the data. Sayan Bhattacharyya conducted an experiment using the Bookworm tool, which is designed to visualize language usage trends within millions of digitized texts in HathiTrust. He contends that the model, crafted to explore and visualize language usage trends, is limited in identifying “words from less hegemonic languages” [Bhattacharyya 2017, 34]. He illustrates his argument by showcasing underreported words from Global South languages transliterated into English, and he examines the causes of such limitations[7]. Indeed, the issue stems from tools like HathiTrust Bookworm relying on an index that, for performance reasons, excludes entries for low-frequency words. This disproportionately affects the representation of low-frequency words in larger collections.
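The effect of such a frequency cutoff can be illustrated with a toy index. This is not Bookworm’s actual implementation; it is a hypothetical threshold applied to three invented sentences, showing how a transliterated word such as the Tamil eri (an irrigation tank) drops out of the index while its English counterpart, tank, survives simply because it occurs more often.

```python
from collections import Counter


def build_index(documents, min_count):
    """Keep only words whose collection-wide frequency reaches min_count.

    Hypothetical simplification of a performance-driven index: words below
    the threshold are dropped, so they can no longer be queried at all.
    """
    totals = Counter(word for doc in documents for word in doc.lower().split())
    return {word: n for word, n in totals.items() if n >= min_count}


# Invented sentences; "eri" is a Tamil word for an irrigation tank.
docs = [
    "the tank supplies water to the rice fields",
    "water from the tank and the river",
    "the eri near the village holds water",
]
index = build_index(docs, min_count=2)
print(sorted(index))  # ['tank', 'the', 'water']
```

Because the cutoff is global, the larger the collection, the more a word must compete with dominant vocabulary to clear it, which is why rare transliterated terms are disproportionately affected.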

The same problem applies to corpora such as that of British colonial India, where less prominent concepts are overshadowed by dominant ones in both models. Unlike digital tools whose frameworks cannot be altered, computational models can be adjusted to formalize these less prominent concepts in the corpus. Hence, combining the primary and sub-models proves advantageous for studying and formalizing the heterogeneous concepts within the British colonial India corpus, as demonstrated with the selected text AJMMCM. I can then reorganize the texts in the corpus based on the formalized concepts and apply formal models for further investigation.

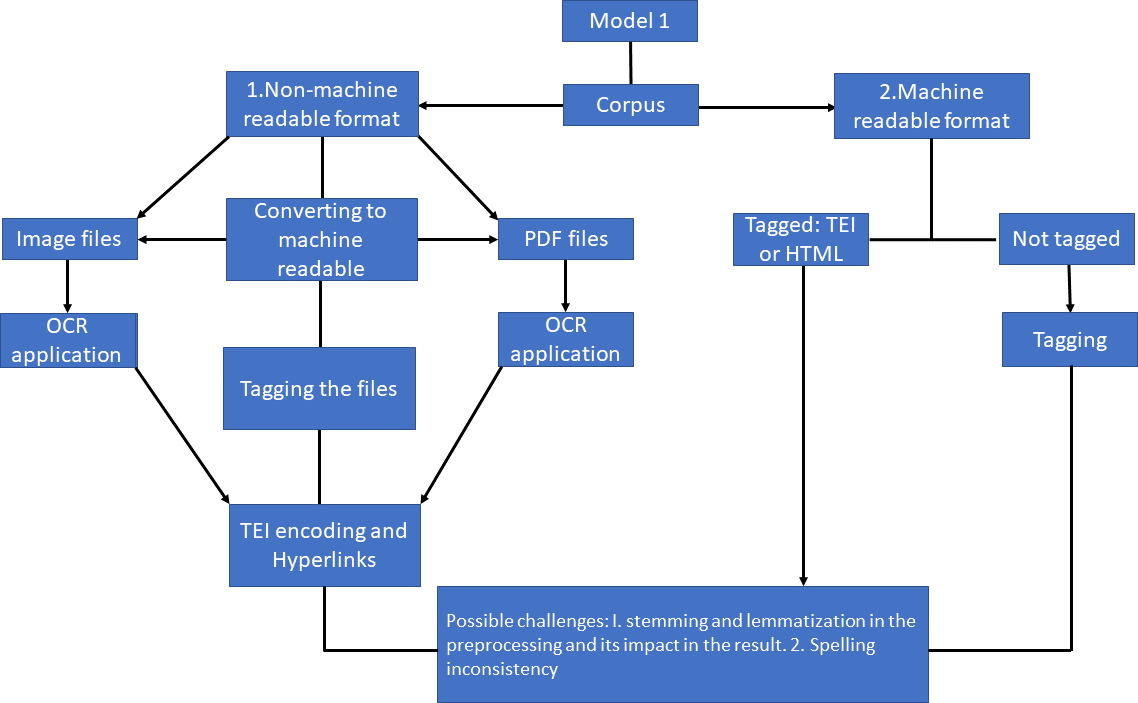

Disadvantages, challenges and future work

Numerous issues were encountered during the experiment, including problems with text format, non-standard text, parameters for cleaning texts, and limitations in the selected algorithm. The first issue arose from tagging the table of contents. Some texts lacked a table of contents but had headings inside the text, while others had neither, so separate algorithms were designed for each case. The second issue was that stemming[8] and lemmatization were not applied, although stop words were removed. These processes could affect clustering, especially for sub-models derived from only a few paragraphs or pages. Lemmatization might reduce counts; in addition, the inconsistent distribution of content across headings, some of which contain only one or two paragraphs, led to an increase in outliers[9]. Spelling variation in Indian personal and place names posed another challenge. For instance, the river name “Noyal” had various spellings, such as “Noyil,” “Noel,” and “Noyl,” which affected frequency and clustering patterns.
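Spelling variants can be merged before counting with a lookup table, alongside stop-word removal and without stemming. The sketch below assumes a hand-compiled variant table and a tiny stop-word list, both hypothetical rather than those used in the study. One design caution: “Noel” is also an English personal name, so a blanket mapping could merge unrelated tokens, and such a table needs manual review against the corpus.

```python
import re
from collections import Counter

# Hypothetical variant table; a real one would be compiled and checked by hand.
VARIANTS = {"noyil": "noyal", "noel": "noyal", "noyl": "noyal"}

# Tiny illustrative stop-word list, not the list used in the study.
STOP_WORDS = {"a", "and", "from", "in", "of", "on", "the"}


def normalize(text):
    """Lowercase, tokenize, merge spelling variants, and drop stop words.

    No stemming or lemmatization is applied, so "tanks" stays distinct from "tank".
    """
    tokens = re.findall(r"[a-z]+", text.lower())
    tokens = [VARIANTS.get(tok, tok) for tok in tokens]
    return [tok for tok in tokens if tok not in STOP_WORDS]


sample = "Tanks on the Noyil; a channel from the Noel; the Noyal in flood."
counts = Counter(normalize(sample))
print(counts.most_common(1))  # [('noyal', 3)]
```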

Another challenge is the inclusion of footnotes and references throughout most texts, from which many terms and exemplars were derived. APA accumulated many outliers, such as “separately,” which did not contribute explicitly to clustering concepts but indicated that numerous tables were attached separately to the content. I subsequently excluded tables, prioritizing the narrative over statistics in the Madras Presidency reports. Surveyors included detailed statistics on crops, revenue, a census of houses, and population categorized by religion, caste, and more. These details are crucial for event-based research questions and should be formalized in future work. Another limitation lies in the chosen algorithm: APA has constraints such as “high time complexity” for larger datasets. On the FAQ page for Affinity Propagation, Frey and his team addressed concerns about dataset size, affirming APA’s reliability for small datasets. For instance, they answered the question “Is affinity propagation only good at finding a large number of quite small clusters?” Their answer is:

It depends on what you mean by “large” and “small”. For example, it beats other methods at finding 100 clusters in 17,770 Netflix movies. While “100” may seem like a large number of clusters, it is not unreasonable to think that there may be 100 distinct types of movies. Also, on average there are 178 points per cluster, which is not “quite small”. However, if you’re looking for just a few clusters (eg, 1 to 5), you’d probably be better off using a simple method [Affinity Propagation FAQ 2009].

In this case, APA was suitable for the sub-models and should also work for the primary models, since I mined the latter per text, which does not amount to a large dataset. However, it did not select potential exemplars for every primary model, owing to the inconsistent distribution of concepts.
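Two practical checks follow from these limitations, sketched here with scikit-learn’s `AffinityPropagation` on invented toy vectors. First, scikit-learn emits a `ConvergenceWarning` when affinity propagation does not converge, in which case it may return degenerate or empty cluster centers; the hypothetical helper `fit_or_none` promotes that warning to an error so a primary model without usable exemplars can be flagged. Second, the algorithm as described by Frey and Dueck maintains similarity, responsibility, and availability values for every pair of points, so memory and per-iteration time grow quadratically; `dense_matrix_bytes` (also hypothetical) gives a rough lower bound assuming three dense float64 matrices, whereas real implementations allocate additional working arrays.

```python
import warnings

import numpy as np
from sklearn.cluster import AffinityPropagation
from sklearn.exceptions import ConvergenceWarning


def fit_or_none(vectors, max_iter=200, damping=0.5, preference=None):
    """Fit affinity propagation; return None if no usable exemplars emerged.

    Hypothetical helper: scikit-learn signals non-convergence with a
    ConvergenceWarning, which is promoted to an error here so it can be caught.
    """
    with warnings.catch_warnings():
        warnings.simplefilter("error", ConvergenceWarning)
        try:
            ap = AffinityPropagation(
                damping=damping,
                max_iter=max_iter,
                preference=preference,
                random_state=0,
            ).fit(vectors)
        except ConvergenceWarning:
            return None
    return ap if len(ap.cluster_centers_indices_) > 0 else None


def dense_matrix_bytes(n_points, n_matrices=3, bytes_per_value=8):
    """Rough lower bound on memory for n_matrices dense n x n float64 arrays
    (similarity, responsibility, availability); grows quadratically with n."""
    return n_matrices * n_points * n_points * bytes_per_value


# Invented toy vectors forming two well-separated groups.
X = np.array([
    [1.0, 0.9, 0.0], [0.9, 1.0, 0.1], [1.0, 0.8, 0.0],
    [0.0, 0.1, 1.0], [0.1, 0.0, 0.9], [0.0, 0.0, 1.0],
])

print(fit_or_none(X) is not None)          # a normal run on separated data
print(fit_or_none(X, max_iter=1) is None)  # one iteration is too few to converge
print(f"{dense_matrix_bytes(17_770) / 1e9:.1f} GB for 17,770 points")
```

For a single text, the number of distinct terms is usually small enough that the quadratic cost is manageable, which is consistent with the point above that per-text primary models are not large datasets; the convergence check addresses the separate problem of runs that yield no exemplars.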