Abstract

With the Maker Movement promoting a refreshing DIY ethic toward creation and epistemology, the time might be ripe for scholars to adopt such techniques in their own research, particularly in the subfield of mobile communication studies. One can now participate relatively easily in building and implementing a variety of digital products, such as mobile apps, that can then be used to study user experiences through interactions with rhetorical forms, including various types of informatics. Our experiences in several projects using both large- and small-scale mobile apps yield a critique and lessons learned directly from engaging in this type of field experimentation, including reflections on observations, survey responses, and other types of data collection made possible through this model. Four larger issues about conducting research through app making are addressed here, outlining potential research paths as well as opportunities and challenges to consider. Perhaps most importantly, this research approach offers the ability to tailor an instrument to specific research needs and then test that instrument in a natural setting, affording a truer sense of how people interact with their environments in real situations and settings.

Just as a scholar studying music might benefit in various ways from having composed and played at least a few tunes, digital humanists might gain broader and deeper knowledge about mobile media by building and testing mobile apps themselves. These integrated processes could lead to richer understandings of the technical backend of the media ecosystem as well as heightened awareness of practical communication issues related to real-world performance and audiences.

Imagine a photography class taught by a person who has never taken a picture. Or think about a painting class led by a person who has never mixed pigments and applied them to canvas. Or, even more poignantly, ponder a writing teacher who reads a lot but has never written.

As those examples illustrate, a certain level of expertise seems to be gained through direct performative experience with media communication technologies, whether they express audio, visual, or textual messages. If such experience is also valued in scholarly work, mobile media researchers are poised right now to transform knowledge about this field. These digital humanists could create new research methods by integrating their everyday practical experience with smartphones, tablet computers, smart watches, and similar devices with the development of new mobile apps that reflect their research interests (not necessarily a third-party company’s profit orientation), guided by their robust training in methodology and theory as well as by their academic ethics.

Normative rhetoric, observations, surveys, and counts of users, dollars, interactions, and the like have helped to build the field to where it is today. But what is the next step in developing our understanding of mobile media? Could direct participation in development processes open up the scope of what we know as scholars? If we hypothesize that digital humanists could benefit from participating more in the mobile medium in various ways, through alternative research approaches, several critical questions follow close behind: What are those ways? What are the appropriate participatory roles for scholars? And what systematic methodological standards could be employed in this pursuit? The following piece – part critique, part reflection on lessons learned, part argument for more active research approaches – intends to prompt such discussions and delve into related issues.

To complicate these matters even more, such active research approaches are not easy. Digital humanists who can single-handedly write code, mark up data, construct databases, design interfaces, write grants, manage projects, and provide expert content, as well as teach, publish, and serve on numerous university committees, are rare [Reed 2014]. Moreover, much of the core content and future of computing will be multidisciplinary, collaborative, and understood through mixed methods [Rosenbloom 2012]. In such a complex and fluid research environment, Porsdam [Porsdam 2013] argued, the “read-only” ethos of the traditional humanities needs to be replaced with a “read/write/rewrite” approach, rooted in making and in integrating processes of design, which could be framed by a broad Action Research ethic.

Action Research, as a process, typically features a researcher (or research team) who works with a group to define a problem, to collect data to determine whether this is indeed the problem, and then to experiment with potential solutions, creating new knowledge [Hughes and Hayhoe 2008]. This insider positionality blends “the experiential, the social, and the communal,” as described by Burdick, Drucker, Lunenfeld, Presner, and Schnapp [Burdick et al. 2012, 83]. In turn, they contended, we are leaving the era of scholarship based upon the individual author’s generation of the “great book” and entering the era of collaborative authoring of the “great project.” These projects today often either involve mobile technologies in some way or are conceptualized and created through the mobile medium, which gives this piece its particular arena and focuses our exploration. But we also hope this work transcends any particular field or subfield and speaks broadly to the limitations placed on a researcher’s mindset. In the increasingly mobile-oriented environment, again as an example, digital humanities scholars can choose to build the cyberinfrastructure they want or be inherently constrained by the ideas, policies, tools, and services bequeathed to them by non-humanist domains. That is the foundation of the social constructivist perspective of this article. That is the position from which we launch. That is the ground we all stand upon and share.

Participating allows for more control of the research environment

This piece argues that by creating our own instrument designs for our own purposes, and building research tools specifically for the research we want to do, we can control our scholarly inquiries more efficiently and therefore create more effective studies than are possible from outsider, uninvolved viewpoints. By plunging deeply into the processes we are studying (and that inherently shape our studies), we as researchers can gain deeper understandings of the phenomena we are investigating. We also can discover new questions that need to be asked in similarly engaged ways. Without an Action Research approach, many facets of a situation would remain cloaked and practically inaccessible to the outsider, who might not see them or even perceive that they are present. Outsider research, from our perspective, has many advantages, but it also imposes distinct constraints and limits on our knowledge. Sometimes researchers need to go past those boundaries and explore new territory in unconventional ways; we can miss much of the richness of our processes by not participating in them directly. So we tried Action Research approaches, and we benefited as researchers. We argue that you, too, could benefit from such research strategies and tactics if you incorporate them into your studies of the digital humanities in pursuit of methodologically appropriate questions.

For example, with new technological affordances emerging regularly and our interactions with various technologies continually increasing, prototyping as a form of inquiry has gained traction in many scholarly contexts. This point gains particular importance because the dizzying speed of information and communication technology development outpaces the academic publishing system. Such hands-on efforts include reexamining the past, such as Jentery Sayers’ [Sayers 2015] remaking of communication technologies that no longer exist. They include exploring the possibilities of the future, such as Bolter, Engberg, and MacIntyre’s [Bolter, Engberg, and MacIntyre 2013] experiments with augmented reality. And they include epistemological examinations and critiques of research methodologies, such as Henze, Pielot, Poppinga, Schinke, and Boll’s [Henze et al. 2011] argument that the creation of their mobile app is the experiment.

Along those lines, this article aims to synthesize the fully engaged ethic of those types of studies and bring them together through a holistic paradigm that values the acts and artistry of “making something.” We anticipate that this approach will become an integral part of the future of research in the digital humanities. From this perspective, we contend that if digital humanists appreciate and value the process of this kind of research sooner rather than later, we in turn will more fully understand the products of our research and the ramifications of our scholarship, creating better academic work as well as a platform from which we can explain that value with greater resonance to audiences outside the academy.

Acknowledging obstacles, then moving onward

Lynch [Lynch 2014] identified a variety of reasons why such work needs to be done and why essential framework-building efforts like this one have been uncommon in mobile-based digital humanities, including a paucity of readily available resources, expertise, and rewards. For such projects, digital humanists typically need to collaborate across disciplines and with industry practitioners. They also usually need to raise significant external funds. Within current academic systems, recognition for collaboration and community engagement can be diffuse, or such work can be disregarded entirely, particularly in tenure and promotion [Bartanen 2014] [Cavanagh 2013] [Cheverie, Boettcher, and Buschman 2009] [Harley et al. 2007]. Most schools grant tenure based on some combination of the “Big Three”: research, teaching, and service. Time spent building and maintaining websites, mobile apps, social media channels, databases, and similar artifacts generally does not fit neatly within those categories.

Takats [Takats 2013], for example, described the amorphous and shifting opinions that senior faculty and administrators held of his digital humanities work throughout his tenure process. The college-level committee decreed that the project management, prototype development, design, and coding he conducted for such major public projects as the research tool Zotero, and for activities funded by the National Endowment for the Humanities, the Institute of Museum and Library Services, and the Andrew W. Mellon Foundation, should be classified as service, typically the least valued of the Big Three (a ruling later overridden by his dean). In such cases, Lynch [Lynch 2014] argued, digital humanists must persevere, overcome the policy and political issues, and lead this type of cyberinfrastructure development, because they alone can judge the usefulness of technologies in the field and guide the related scholarly innovations.

Scholars with such a mindset might ponder how so many people outside the academy have realized and reaped rewards in recent years from the mass societal integration of ubiquitous mobile technologies. That list includes corporations, doctors, spy agencies, artists, teenagers, media companies, personal trainers, criminals, lawyers, farmers, game makers, and many others. These rewards range from capitalizing on the novel mobilities that the devices enable to harnessing the intimate analytics that they track. Scholars also might question why they are not allowed (practically speaking) to join this Maker crowd, experimenting with these tools to see what they can do for the public good by creating and implementing new mobile ideas in authentic settings, without lumping that exploration in with more traditional research practices and receiving academic credit for only a portion of the work, if any.

We use the term “authentic” in the descriptions throughout this piece because of its extensive development in the social sciences as a label for classifying perceptions of human experience. Wang [Wang 1999] traced the term back, via Trilling [Trilling 1972], to verification procedures in museum usage, and MacCannell [MacCannell 1973] began integrating the label into sociological work in tourist settings. Many other scholars have followed that path, including Cohen [Cohen 1988], Bruner [Bruner 1989], Hughes [Hughes 1995], Wang [Wang 1999], and Gilmore and Pine [Gilmore and Pine 2007]. From the social constructivist strain of this perspective, an authentic setting is one within which participants align their self-reflection with the experience they are having and consider whether it matches their expectations of realness. Meanwhile, they feel a self-determined freedom to do what they want, as they would do it, in the situation, while the researcher has been allowed to observe such “authentic” conduct and record data about it.

In seeking the “real” and conducting research in authentic situations, we wanted to open this approach up for deeper discussion. This article, reflecting upon such sentiments and contexts, therefore describes a sample of our recent project-based experiences in mobile app development for the digital humanities, informed by ideas from the Maker Movement and driven by an Action Research ethic. Such reflection, a core stage of the Action Research cycle, is intended to illuminate, from an insider’s perspective, some of the key issues that arise during these kinds of participatory scholarly projects. This piece also identifies the affordances and constraints of the app-maker approach, in terms of related opportunities and obstacles, in an effort to move these ideas past the inertia phase and propel them into a problem-solving phase beneficial to other researchers encountering the same or similar difficulties.

In this vein, our critique of the situation is intended to initiate these important conversations. The lessons-learned portion of this piece, in turn, is intended to connect the criticism to real-world examples and to help carry scholarship across the pervasive gap separating practice and research, a daunting leap but one that seems surmountable. Therefore, after exploring the potential of Action Research as well as the possibilities of research in the wild, we will share some of the experiences we have had in conducting a systematic program of inquiry that involved building several mobile apps for research purposes. Four important concerns and challenges merit consideration here: 1) app building requires broad expertise and extensive resources, so infrastructure needs are not to be taken lightly; 2) audience authenticity creates some issues but solves others; 3) Action Research is not a scholarly magic bullet and entails a certain amount of risk on a variety of fronts; and 4) new ways of collecting data could expand the types of data researchers can collect, as part of a broader approach to research that fuses technological advances with the ideals of the Maker Movement.

Making as a research approach

The larger Maker Movement encompasses the cultural and technological factors that have aligned to entice people into actively participating in, and manipulating, the objects of the world, both physical and digital. A smattering of researchers have adopted this do-it-yourself ethic in mobile contexts, too, and some of these scholars have experimented extensively with what could be considered Maker methodologies, such as Action Research, demonstrating promising and novel results that deserve more documentation and further consideration.

With the introduction of Make magazine in 2005, the Maker Faire concept in 2006, and the opening of the Apple App Store in 2008, the Maker Movement not only broadly made connections – from John Dewey’s progressivism to Seymour Papert’s constructionism, as Resnick and Rosenbaum [Resnick and Rosenbaum 2013] noted – but also brought together all of the learning-by-doing advocates in between. The Maker Movement reached deep into the computing industry’s cultural foundations, recalling the days when old-school hackers experimented with the possibilities of new media forms by building them and playing around with them, just to see what was possible. By creating and refining those formative methodologies over multiple iterations, makers generated new knowledge embodied in the objects they built and shared through public demonstrations. They also created knowledge through discussions about their exploratory activities, as acts of both learning and teaching in a world yearning for new ways to account for – and adapt to – the perpetual uncertainty and rapid change happening across industries, cultures, and societies [Hayes 2011] [Dougherty 2012] [Resnick and Rosenbaum 2013].

In mobile media and communication research, from the late 1970s through the 1980s, many early ideas were born out of abstraction, through theorizing in environment-independent settings. Those initial studies included a mass of normative writings about conceptual frameworks. Mobile telephony in the early 1990s, when cell phones became more accessible and widespread, then generated another wave of interest and methodologies. That period saw the rise of highly controlled laboratory experiments, which tested specific stimuli and behaviors in artificial settings. Many researchers still employ this laboratory approach (e.g., [Kjeldskov and Paay 2012] and [Jones, Karnowski, Ling, and von Pape 2013]).

Yet as mobile research grew and expanded, particularly in the smartphone era, it began to raise other kinds of questions. New paths of inquiry have appeared, diverging and spreading fast, many of them related to the broad social implications of mobilities and ubiquitous computing (e.g., [de Souza e Silva 2013] [Frith 2015] [Jones, Karnowski, Ling, and von Pape 2013]). As different questions have emerged, scholars have also begun to examine the possibilities that mobile technologies might afford for distinct, efficient, and efficacious digital humanities methodologies ([Büscher, Urry, and Witchger 2011] [D'Andrea, Ciolfi, and Gray 2011] [Fincham, McGuiness, and Murray 2010] [Merriman 2014] [Sheller 2015] [Svensson and Goldberg 2015]).

Of particular interest has been the potential for mobile technologies to help reach a holy grail of methodological pursuits: a reliable measure of the events occurring in the stream of consciousness over time [Csikszentmihalyi 1978] [Csikszentmihalyi and Csikszentmihalyi 1988] [Hektner, Schmidt, and Csikszentmihalyi 2007, 6] [Karnowski 2013]. Much research still relies on survey measures rather than direct, in-situ observation and accounting of a rich spectrum of related activities; in user-experience studies, for example, research so far has relied heavily on survey questionnaires. Yet with the sensors and relatively unobtrusive data collection abilities of smartphones and other high-tech trackers, users now can also be observed in a triangulated manner, with their behaviors documented in great detail across time and space, and can even be surveyed in the moment, as experiences shift, rather than in summary at the end of a multifaceted activity [Bouwman, de Reuver, Heerschap, and Verkasalo 2013]. In turn, sampling data from mobile experiences within the “earthy” environment in which they happen allows researchers to avoid the inherent limitations of methodologies that rely solely on memory performance or on reconstructions of respondent behavior through reflective thought and attempts at recollection [Karnowski 2013].
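
The studies above do not specify how in-the-moment surveys are scheduled. As a minimal sketch, assuming a stratified random design of the kind commonly used in experience sampling studies, the following Python divides a participant’s waking day into equal blocks and draws one prompt time at random within each block, so that individual prompts are hard to anticipate yet spread across the whole day. The function name stratified_signal_schedule, the 9:00 to 21:00 window, and the eight daily signals are hypothetical choices for illustration, not parameters taken from the cited work.

```python
import random
from datetime import datetime, timedelta


def stratified_signal_schedule(day_start, day_end, n_signals, seed=None):
    """Hypothetical helper: return n_signals prompt times, one drawn uniformly
    at random inside each of n_signals equal-length blocks of the day."""
    if n_signals <= 0:
        raise ValueError("n_signals must be positive")
    if day_end <= day_start:
        raise ValueError("day_end must be later than day_start")

    rng = random.Random(seed)  # a seeded generator makes a schedule reproducible
    block = (day_end - day_start) / n_signals  # length of each sampling block
    schedule = []
    for i in range(n_signals):
        block_start = day_start + block * i
        # A random offset within the block keeps each prompt hard to anticipate.
        offset = timedelta(seconds=rng.random() * block.total_seconds())
        schedule.append(block_start + offset)
    return schedule


if __name__ == "__main__":
    # Assumed waking window and signal count, for illustration only.
    start = datetime(2015, 6, 1, 9, 0)
    end = datetime(2015, 6, 1, 21, 0)
    for prompt in stratified_signal_schedule(start, end, n_signals=8, seed=42):
        print(prompt.strftime("%H:%M"))
```

Drawing one time per block, rather than drawing every time independently across the whole day, prevents long unsampled stretches while keeping each individual prompt unpredictable.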

So why aren’t more researchers instinctively jumping into this Maker fray to find out what they can do in it?

Into “the wild”

To summarize the history of the issues briefly, Kjeldskov and Graham [Kjeldskov and Graham 2003] decided – well before the iPhone and the mass rise of smartphones in society – that mobile human-computer interaction (HCI) needed a methodological snapshot, which would help identify shortcomings and propose opportunities for future research. Inspired by similar work in Information Systems, they analyzed more than 100 HCI publications. Among the methodological trends they noted was the infrequency of study in natural settings, including Action Research, case studies, and field studies, which at the time was perceived as reflecting a lack of attention to both the design and the use of mobile systems.

Mobile interaction design in the early 2000s, in turn, was dominated by trial-and-error evaluations in laboratories. These studies usually fell into two distinct camps: those who studied people, and those who studied systems. In retrospect, Kjeldskov and Graham warned of three prevalent assumptions in the field at that time: 1) we already know what to build, 2) context is not important, and 3) methodology matters very little. Assumptions such as already knowing which problem to solve made it difficult to set those ideas aside and identify the more fundamental challenges at play. A lack of focus on methodology, in turn, influenced the results subsequently produced [Kjeldskov and Paay 2012]. That issue led to a diffuse research agenda, shared in some streams by relatively small factions but generally without broad consensus on how to address the major issues of the field [Büscher, Urry, and Witchger 2011] [D'Andrea, Ciolfi, and Gray 2011] [Fincham, McGuiness, and Murray 2010] [Merriman 2014] [Sheller 2015] [Svensson and Goldberg 2015].

Kjeldskov and Paay [Kjeldskov and Paay 2012] then compared the evolution of HCI methodologies longitudinally, over a seven-year span, and found much more collaboration between the original group of researchers, primarily in engineering and computer science, and the next wave, which included the social sciences, the humanities, and the arts. The first group, in general, had created the technological possibilities of mobile. The next wave brought with it new ideas about the problem space, the importance and role of context, and the impact and critical nature of methodology. The nearly complete absence of Action Research in the field in the early 2000s, they argued, pointed to both the lack of an established body of knowledge within the discipline and a wariness of failure, in an era when experimentation was expensive and time-consuming.

As Kuutti and Bannon [Kuutti and Bannon 2014] described, HCI has expanded its scope and eclecticism in recent years. As part of that growth, it has developed a “practice” paradigm to accompany its more traditional “interaction” paradigm. When focused on interaction, researchers tend to study the dyadic human-machine relationship in ahistorical situations, often within a highly controlled lab setting and involving predetermined experimental tasks. The practice paradigm takes a longer-term perspective, examining both historical processes and performances and studying actions over the full length of their temporal trajectory. These studies are situated in a specific time and space, and they depend on their surroundings and cultural context.

In short, the practice paradigm locates the origin of the social in practices (rather than in the mind, in discourses, or in interactions). Those practices consist of routines that interweave any number of interconnected and inseparable elements, both physical and mental, affected by the material environment, artifacts and their uses, contexts, human capabilities, affinities, and motivations. Within the practice paradigm, practices are the minimal units of analysis, at the nexus of essential and interesting social issues, and accessible for study in natural and authentic ways. So while the interaction paradigm has been helpful in numerous cases for many years, it also has created blind spots in the field that the practice paradigm – as developed through approaches like the app-maker model – could help to reveal in new and valuable ways.

Because so many of our technologies have become embedded in our everyday lives, Crabtree, Chamberlain, et al. [Crabtree et al. 2013] contended that researchers should seek to understand and shape new interventions “in the wild.” Those interventions include not only novel technologies developed to augment people, places, and settings without being designed for specific user needs but also new technological possibilities that could change or even disrupt behaviors. Yet although the costs of mobile app experimentation have diminished in recent years, and while the field’s body of knowledge and its interest in in-situ experimentation appear to have grown, the number of mobile-related Action Research projects has not suddenly increased. As Crabtree, Chamberlain, et al. [Crabtree et al. 2013] document, a scholarly literature has emerged showing that in-situ studies of user experience report findings different from those predicted in laboratory settings. In other words, what participants tend to do in controlled settings, under instruction and regimentation, can be dramatically different from what they might do of their own volition in an authentic situation. A growing realization of this disconnect suggests that researchers need to develop more paths that are reflexive and focused upon experience, as well as creative, and often serendipitous, inquiries [Adams, Fitzgerald, and Priestnall 2013]. Action Research, in its various forms, has been developing as an approach for decades and provides opportunities to study practices in authentic settings, which could guide researchers as they venture into “the wild.”

Action Research as a base

Unlike in the early days of mobile research, scholars in the field today no longer have to lock themselves into the stereotype of wearing white lab coats, carrying clipboards, and staring at people through one-way glass. They do not necessarily have to remain pure spectators, completely detached from their surroundings and seeking “Truth” as isolated Baconesque figures. Instead, through Action Research methodologies, they can bridge the gap between scholars/science and industry/practice in more directly involved ways [Herr and Anderson 2005]. Action Research goes by many other labels, including participatory action research, practitioner research, action science, collaborative action research, cooperative inquiry, educative research, appreciative inquiry, emancipatory praxis, teacher research, design science, design research, design experiments, design studies, development research, developmental research, and formative research [Design-Based Research Collective 2003] [Herr and Anderson 2005]. But the basic idea is the same: get inside the system, and study it from that viewpoint.

According to Archer [Archer 1995, 11], an inquiry conducted simply “for the purposes of” a particular practitioner activity, having underpinned that activity, should not necessarily earn the label “research.” He stated that the cases that legitimately warrant the “research” designation generally come from research activity carried out through the “medium of practitioner” activity, in which the best way to shed light on “a proposition, a principle, a material, a process, or a function is to attempt to construct something, or to enact something, calculated to explore, embody or test it” and to generate communicable knowledge. Such tests tend to generate spirals of action, starting with planning and implementation, followed by observation of the effects and then reflection as a basis for further planning, action, observation, and so on, in a succession of cycles [Kemmis 1982]. This article, as an illustration of the approach, is part of the reflection stage of our recent Action Research efforts, which will then lead us to further planning, action, and observation. Within the Action Research methodological framework, these techniques of inquiry are practical, cyclical, and problem-solving by nature, meant to generate change and improvement at the local level [Taylor, Wilkie, and Baser 2006].

Also embedded within this type of research is the idea that the builders of a system gain knowledge in ways that an observer cannot, as our earlier examples of musicians, painters, and writers illustrated. The relationship between knowledge and practice is complex and nonlinear, and the knowledge needed to clarify practice relates to context and situation [Campbell and Groundwater-Smith 2007].

The app-maker approach posited here, which often generates interrelated qualitative and quantitative data, provides a depth-seeking route to understanding complicated issues that could otherwise be inaccessible to system outsiders. From this perspective, romanticized non-participatory “objectivity” and personal subjectivity are not polarities but rhetorical constructs, and participation in the research process inherently integrates the researcher [Daston and Galison 2007] [Douglas 2004] [Kusch 2011].

A key question that emerges in this epistemological process, then, is how openly the researcher’s values are described and debated [Douglas 2004].

Another dimension to consider with this type of methodology is the positionality of the researcher, as either an insider or an outsider. The interpretive perspective, from which Action Research emerges, transparently acknowledges the researcher as an insider: part of the fabric of the inquiry and an indivisible element of the environment within which people, including the researcher, are interacting [McNiff and Whitehead 2006].

Research objectives, of course, should dictate the methodology of a study, and not all research questions warrant the use of Action Research. The methodology also poses various practical challenges, which can be substantial barriers to even attempting the approach. Action Research, for example, can be more time-consuming than some other approaches, and objectivity can be difficult to maintain when the researcher is immersed in the environment as a participant and engaged in the project as a stakeholder [Kjeldskov and Graham 2003]. Yet interest in Action Research continues to develop in HCI as well as in other fields that could be of interest to digital humanists, as scholars seek to conduct democratic, collaborative, and Maker-oriented research within – not just about – their communities [Hayes 2011] [Hayes 2012] [Hayes 2014]. This piece attempts to transform principles of the Maker Movement, and similar exploratory scientific practices, into a coherent research paradigm, based on the founding idea that first-hand prototyping plays a critical role in the research process in the field of mobile media and should be valued as a legitimate method of scholarly inquiry in its own right, without the need for a Trojan horse of a more traditional methodology just to gain entry into academic castles.

A longitudinal look at mobile methodologies

When Kjeldskov and Paay [Kjeldskov and Paay 2012] examined a snapshot of mobile methodologies again, the second literature review – in the same 10 outlets – showed that the number of publications on the topic had more than quadrupled. While lab experiments still dominated at 49 percent, field studies grew dramatically, to 35 percent. Both approaches were most often used for evaluation. Field studies meanwhile developed into three distinct subcategories: field ethnography, field experiment, and field survey. Normative writings, in turn, nearly disappeared from the field (2 percent). Case studies (6 percent) and Action Research (less than 1 percent) gained little traction. While the expansion of field studies encouraged Kjeldskov and Paay, the often-incomplete pictures that field studies created could be misleading without triangulation against the more comprehensive accounts that case studies and Action Research could provide.

Around 2010, mobile researchers began to explore the app stores as potential

delivery systems for research instruments, in the guise of mobile apps, which

could be used to conduct large-scale and relatively unobtrusive human subjects

studies [Henze 2012]. Just as in traditional studies, researchers develop an

apparatus for their study. In these cases, however, the apparatus is embedded

in an app, which is then published to the public mobile application market

[Henze and Pielot 2013]. In “the wild,” researchers can gain insights about how

people might fit new systems into their existing practices and contexts of use,

as well as how people might change their contexts and practices to accommodate

or take advantage of new systems [McMillan et al. 2010]. Because such studies

take place within users’ real-life contexts, they can offer high external

validity and allow for findings otherwise impossible to obtain [Henze 2012].

Projects by Henze, Pielot, Poppinga, Schinke, and Boll [Henze et al. 2011]

are examples of such an app-building approach, in which scholars can relatively

easily create and distribute research instruments to the masses through Apple’s

App Store and Google’s Play market. In their case, the research team produced

and distributed five different mobile apps, each with its own research

objectives, to observe what would happen. These apps were downloaded more than

30,000 times. Some of the apps were successful in attracting many participants

and unobtrusively compiling research data quickly and in large quantities. The

app Tap It, for example, a simple game of touching rectangles – devised as a way

to assess users’ touch performance in relation to screen locations and target

sizes – gathered 7,000 users, contributing 7 million data points, in just two

months. Other efforts clearly did not reach their goals, including one app –

called SINLA, an augmented reality viewer of nearby points of interest – that

collected only eight samples over the course of a year, a sample the researchers

deemed worthless. Therefore, merely creating a research app – even if the same

talented people work on it, with the same amount of resources and the same

objectives – does not guarantee the return of a large data set.

In another public app project, using the App-Maker Model, Henze [Henze 2012]

created a simple screen-touching game that allowed him not only to collect basic

device details – such as handset type, time zone, and screen resolution – but

also to log each touch event, in relation to the position and size of the

targets, as a way to determine error rates. The game garnered more than 400,000

downloads, and its data also documented a systematic skew in touch positions

that could be corrected with additional device calibration. Many factors shape

app adoption and use, including the marketing of the project, the perceived

production value of the app, and the realistic nature of the related

activities.
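
To make the error-rate and skew idea concrete, here is a minimal Python sketch of how logged touch events might be summarized. It is not Henze’s analysis: the record fields (touch coordinates, target center, square target size), the square-target hit test, and the single mean-offset correction are all illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class TouchEvent:
    # Hypothetical log record: where the finger landed and where the target was.
    touch_x: float
    touch_y: float
    target_x: float      # target center
    target_y: float
    target_size: float   # side length of a square target, in pixels (assumption)

def is_hit(e: TouchEvent) -> bool:
    """A touch counts as a hit if it falls inside the square target."""
    half = e.target_size / 2
    return abs(e.touch_x - e.target_x) <= half and abs(e.touch_y - e.target_y) <= half

def error_rate(events: list[TouchEvent]) -> float:
    """Share of touches that missed their target."""
    misses = sum(1 for e in events if not is_hit(e))
    return misses / len(events)

def mean_offset(events: list[TouchEvent]) -> tuple[float, float]:
    """Average displacement of touches from target centers (the systematic skew)."""
    return (mean(e.touch_x - e.target_x for e in events),
            mean(e.touch_y - e.target_y for e in events))

def corrected(e: TouchEvent, offset: tuple[float, float]) -> TouchEvent:
    """Shift a touch by the negative of the measured skew (a simple calibration)."""
    dx, dy = offset
    return TouchEvent(e.touch_x - dx, e.touch_y - dy,
                      e.target_x, e.target_y, e.target_size)
```

A single global offset is the simplest possible correction; a real calibration would likely need to vary by screen region and device, and would rest on far more data than a handful of touches.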

On the negative side of this approach, Lew, Nguyen, Messing, and Westwood

[Lew, Nguyen, Messing, and Westwood 2011] contended that the authenticity of the

experience significantly affects internal and external validity. If a mobile

experiment, for example, lacks ecological realism in any of four dimensions –

appearance, content, task, and setting – the study can be compromised, because

test subjects could inadvertently be responding to the artificiality of the test

itself rather than to the research condition being studied. Other significant

data-collection issues include the difficulty of making complementary

observations, in an ethnographic sense: device use is hard to monitor, because

the users’ movement, and the intimate relationship of the device to the user,

make viewing the screens and interactions generally impractical

[McMillan et al. 2010]. Apps also get used in unpredictable ways, such as being

turned on and inadvertently left on when the user leaves the device behind

(giving the false appearance of long use), which creates noise in the data that

needs to be filtered [Henze et al. 2011]. With an insider’s positionality, and

systematic and thorough accounting of the procedures and data collected,

researchers can have access to more material, in more direct ways, often

allowing them to make more informed interpretations of the data generated in the

study. The App-Maker Model might not be a perfect approach, and it does have

disadvantages. But it also has advantages that should not be discounted.

Our experiences in the field

After conducting several traditional user studies, through observations and

surveys, and building a few functional app prototypes in 2010 at the Fort

Vancouver National Historic Site, a National Park Service hub near Portland, OR,

we started to build and release to the public early versions of a location-based

history-learning smartphone app in the spring of 2011. Eventually, after dozens

of iterations, the beta version of the app was released in June 2012

[Oppegaard and Adesope 2013]. As of this writing, significant work continues on

the project, with development of the app planned for as long as resources for it

can be gathered and maintained, as well as for an offshoot tablet app. In the

meantime, we also created, and used in studies, several separate mobile apps

designed specifically to answer particular research questions. These were mobile

apps built for one-time use at public events and designed to gather precise data

from users, in situ, about their responses to mobile media within the authentic

environment of intended use. For the larger projects, we gathered data from the

users’ own devices, through the app, but for the one-time apps, to save time, we

brought our own devices. Participants then simply used the research-instrument

apps as installed, which gave every participant a consistent experience.

The first prototype of this one-time model tested social facilitation and user

engagement at a community festival in 2012 by providing unique historical

background on the festival. This app enabled the delivery of video and audio

files as well as easy sharing of that information to users not present at the

time of use. The second prototype, in 2013, expanded on the idea by also

gathering data about user satisfaction. Both prototypes embedded all of the

research procedures within the app, including the consent letter, the survey,

and the acknowledgements, along with the various shared media forms. Later

prototypes, employed in 2014 and beyond, generally were modeled after the second

version.

Our hope was that this iterative process would create ever-improving models of

mobile apps that could be quickly tailored and deployed to address any research

question we had about mobile app use, in context, including investigating issues

related to interface design, physical-digital interaction, and media-form choice

within the mobile medium. The mobile apps generated a highly detailed log of

user behaviors during the tests. Users were given various media forms in

randomized scenarios, with randomized ordering of questions. They interacted

with the device in situ, and all of the logged information was stored locally

on the device, avoiding complications related to Internet connections, data

transfers, and third-party interference (a lesson we learned after the first

round of data collection, as we discuss later). Because we designed the app

with that transfer in mind, exporting the logs into a data analysis system was

streamlined and easy, and the information was rich, precise, and directly

responsive to our predetermined research questions.
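
The per-session randomization and local logging described above can be sketched in a few lines of Python. This is a hypothetical illustration, not the code of our apps: the condition names, question identifiers, event fields, and JSON Lines log file are assumptions chosen for clarity. Each session draws a media condition and a question order at random, every event is appended to a file on the device (so no network connection is needed), and a separate export step flattens the log into CSV for a data analysis tool.

```python
import csv
import json
import random
import time
from pathlib import Path

# Hypothetical media conditions and survey items, for illustration only.
CONDITIONS = ["video", "audio_only", "text"]
QUESTIONS = ["q_enjoyment", "q_clarity", "q_recall"]

def start_session(session_id: str, rng: random.Random) -> dict:
    """Randomly assign a media condition and a question order to one session."""
    order = QUESTIONS[:]  # copy so the master list stays intact
    rng.shuffle(order)
    return {"session": session_id, "condition": rng.choice(CONDITIONS), "order": order}

def log_event(log_path: Path, session: dict, event: str, **details) -> None:
    """Append one timestamped event to a local JSON Lines file (no network needed)."""
    record = {"session": session["session"], "condition": session["condition"],
              "event": event, "t": time.time(), **details}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")

def export_csv(log_path: Path, csv_path: Path) -> int:
    """Flatten the local log into a CSV file for analysis; returns rows written."""
    lines = log_path.read_text(encoding="utf-8").splitlines()
    rows = [json.loads(line) for line in lines if line]
    fields = sorted({key for row in rows for key in row})
    with csv_path.open("w", newline="", encoding="utf-8") as f:
        # Events lacking a field (e.g., "open" has no "question") get an empty cell.
        writer = csv.DictWriter(f, fieldnames=fields)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

Appending one self-describing record per event keeps the on-device format simple and tolerant of new fields; the flattening into a fixed set of columns is deferred to export time.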

The following remarks therefore draw primarily on the formative experiences we

have had during these processes, either as a summary composite or as specific

incidents that are identified as such. These reflective comments – part of a

Maker cycle intended to prompt further Action Research – aim to corroborate,

strengthen, and add detailed particulars to the theoretical assertions made

here. They also provide an insider’s perspective and could prompt discussion of

some of the most significant methodological issues we encountered during these

Action Research efforts:

Lessons Learned

Issue 1: App building requires broad expertise and extensive resources

Opportunities:

Unlike the apocryphal tales of teenagers developing million-dollar apps

in an evening hackathon, the thought of building your first mobile app

research tool should seem like a daunting task, because it is. Such a

project can overwhelm you and your team, as it has ours at times,

especially if it is not managed within the scope of your expertise and

resources. The larger projects we mentioned earlier were multi-year

endeavors that took dozens of professionals, more than 100 volunteers, and

more than $100,000 in grant money to produce. That said, we did not

need to build such enormous programs to test many of the ideas that we

eventually started focusing on, including most of our recent studies. The

bigger projects had numerous and varied goals, including civic

engagement, service learning, and even some exploratory meandering.

For the most recent studies, though, we simply built spare formulaic apps

that had the functions we wanted, without any aesthetic flourishes. We

then installed those apps onto a set of identical tablet computers

dedicated to this research. That refined approach avoided the

complications we had encountered in developing and installing the other

apps, including various compatibility problems across the many different

handsets that might run them. The smaller, more-focused apps took

roughly a day for a professional coder to complete, and even less when

existing code could be reused. So the hackathon approach can be

fruitful, if it is focused.

Yet that does not mean these more-limited efforts were cost-free. A full

day of paid mid-level programming typically costs about $1,000, and that

does not include pre-planning, post-analysis, or fixes for the errors

that become apparent during the design process. If the app design is

simple enough, a scholar with some programming experience, comfortable

with basic HTML5, CSS3, and JavaScript, probably could make an app of

this nature as a hobbyist in a week or so. The initial design work for

the throw-away app – including how it would function, and precisely what

media would be delivered when – was roughly the same amount of work as

any equivalent research design. Instead of being gathered through

printed surveys handed out in person, for example, the same data was

collected and delivered digitally through the app. In the past 15 years,

collecting survey data online has become the norm, and this approach

merely represents, from our perspective, the next iteration of taking

digital data collection into the field.

Publishing apps to the App Store or Google Play market requires another

level of expertise and more time, but Android APK files (the program

packages installed on the devices) can bypass the markets altogether and

be shared directly via email. Because Android is an open system, making,

testing, and distributing apps on it can be relatively easy (although

this openness creates different sets of issues related to the multitude

of devices that run Android OS). Workarounds exist for Apple devices at

this point, too, such as the third-party service HockeyApp, which allows

distribution outside the App Store. If the goal is to conduct research

on specific devices that you can reach via email, rather than a mass

market reached through the app markets, then much of the hassle of

publishing and sharing apps can be avoided simply by focusing on Android

development or working through services such as HockeyApp.

Challenges:

As digital-media delivery systems become more and more accessible and

open to the general public, mobile app development is an example of an

activity within a ubiquitous system in which many scholars could

directly participate. Though the bar is reachable, it still exists.

Scholars without adequate coding skills or the resources to hire a

programmer could tailor the content of readily available third-party

software and test through that system, but they then would have limited

access to the data collected and to the controls of the overall app

environment, which we consider significant trade-offs. Large-scale

public projects, such as Fort Vancouver Mobile

(www.fortvancouvermobile.net), create several research paths, but they

also have significant drawbacks, including the exertion of enormous

amounts of energy, often without equivalent academic credit in many

institutions, and continual maintenance concerns. Starting small, with

a focused research tool, and taking that mobile app all of the way

through the implementation process, before raising ambitions and stakes

in sustainable increments, is the path we recommend as a lesson learned.

Building a mobile app also means becoming aware of the many

possibilities that mobile technologies offer. Scope creep and feature

creep are ever-present. Focus is difficult to maintain amid so many

available options and easily reachable enticements, along the lines of,

“We can do that, too,” which can easily derail the original intent,

distract from the research purpose, and drain resources that are already

in short supply.

This broader challenge was one we could have anticipated, of course.

Trial and error remains a common element of research and development

procedures, as well as of collecting data in new ways through emerging

protocols. But the details of these difficulties were revealed in the

discovery process, not in the abstract conversations about what could go

wrong. For example, we initially used both mobile phones and tablet

computers for our field tests. After finding no significant differences

between them in how people reacted to our research app (data from our

first collection confirmed this), we used tablet computers exclusively in

ensuing field work. The bigger devices were easier for us to track in

the field, provided a larger interface for the participants, were not as

likely to be pocketed and stolen, and, importantly, were equipment we

already had access to and were familiar with. We encountered a few minor

issues with altering survey items as we planned the study; because we

could not change the items directly and had to work through a developer,

for example, the process bogged down a bit. The value of working with

trusted development partners, though, cannot be overstated. We hired and

worked with a few different developers before and during this project.

The ones who did not deliver sapped our resources – including time,

money, and enthusiasm – but the highly valuable people who did seemed

able to translate our ideas into technology almost perfectly and, in the

process, generate new forms of research instruments.

Issue 2: Audience authenticity exacerbates control issues

Opportunities:

Mobile technologies can gather deep data about a person, doing real

tasks, in real situations, in real settings, but that data – even if it

is considered “authentic” – is not complete, and it

is not highly controlled. Mobile apps can acquire extremely detailed

data from people unobtrusively, in the flow of life, and in situ. With

our apps, for example, we have tracked everything from the exact moment

a user opens a program until the user closes it. These records included

the precise time and location of the person whenever any sensor, such

as the touch screen, was triggered. That geospatial context – directly

connected to specific interactions and users, in particular places at

exact times – offers unprecedented potential for the granularity of data

collection and data-point triangulation.

That insight is arguably the most meaningful addition to our

understandings of the research topic, but problems arise when

researchers, including us, begin to parse that data, or combinations of

that data, into usable units, that is, to determine exactly which

variables are affecting which behaviors. In short, one cannot possibly

control for all of the variables in “the wild.”

Action Research with mobile devices, though, can create fascinating

forms of self-contained triangulation. The devices gather detailed

analytics of use, and they also can push users into more traditional

research instruments with greater methodological certainty, such as

Likert-like scale surveys, which are geolocated and taken in the context

of the other data points. If a researcher observes the test subject

performing the tasks with the app and taking the survey, yet another

perspective is added to the rich mix of app-collected data.

We also have found that people generally enjoy participating in research

that does not feel like research. We typically have kept our

hypermediate interventions, such as pushing a survey to the user, to a

minimal time (ideally 5 to 7 minutes). As a result, we tend to ask just a

few questions at a time, which reduces the amount of traditional survey

data we collect, in exchange for the instrumental transparency of in-flow

data from ordinary use. In return, we gain a high-quality sample composed

not of a homogeneous group (e.g., students, members of an organization),

but of a wide swath of the surrounding community. Too often, in our

opinion, validity gets sacrificed for statistical “purity.” The App-Maker

Model approach allows for both, though the breadth of some statistics is

lost in the pursuit of a more representative sample.

Challenges:

An authentic real-world data sample is one drawn from the complexities of

humanity in action in authentic settings. Laboratory scientists,

reductionists, and those who favor highly controlled experiments, of

course, might be troubled by the inherent messiness of this style of

data gathering (and have told us so in various formal and informal

settings). The App-Maker Model approach means looser levels of control

compared to traditional laboratory experiments. In a lab, we can put

people into evenly divided groups, in the same environment, and examine

their behaviors within a consistent experience. In the field, conditions

are harder to manipulate (though our randomized app conditions, more or

less, address this), environmental factors such as sunlight, noise, and

temperature can impact the results, and the wider swath of humanity

studied requires more adept communication skills and responsiveness to

the situation than, for example, interactions with homogeneous crowds of

college students in a lecture hall.

In addition, the mass of data collected by mobile devices could be

misleading, or could be misinterpreted, without other methodologies to

triangulate and fully comprehend the results. People might not naturally

cooperate in pursuing the goals of the research agenda. They might not

stay on task. They might act in ways that the device cannot account for,

and that seem irrational, such as leaving an app turned on and running, so

it appears to be in use, while the person has instead put the smartphone

into a pocket and forgotten about the app. Neglect could be mistaken for

“constant use.”
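
One simple guard against the left-running-app problem is to count only the time between interactions that are reasonably close together, treating any longer gap as idle. The Python sketch below assumes event timestamps in seconds and a hypothetical 120-second idle threshold; it is not the filtering procedure of Henze et al., and the right threshold would depend on the app and would need to be justified for each study.

```python
def active_duration(timestamps: list[float], idle_gap: float = 120.0) -> float:
    """Sum the time between consecutive interactions, ignoring idle gaps.

    Any gap longer than idle_gap seconds (a hypothetical threshold) is treated
    as the app being left running unattended and adds nothing to active time.
    """
    ts = sorted(timestamps)
    total = 0.0
    for earlier, later in zip(ts, ts[1:]):
        gap = later - earlier
        if gap <= idle_gap:
            total += gap
    return total

def naive_duration(timestamps: list[float]) -> float:
    """First-to-last-event span, which counts idle time as 'use'."""
    return max(timestamps) - min(timestamps) if timestamps else 0.0
```

Comparing the two measures for each session is one way to flag sessions in which most of the apparent use was probably neglect.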

We can anticipate some of these issues, but they also often come in forms

we do not expect and do not discover until we are in the midst of the

situation. To us, those experiences just deepen our understandings of

mobile media. For the most part, as an example, we have had pleasant

weather during our tests, and everything has proceeded according to plan.

On the days we did not (during a couple of early prototype testing

periods), rain turned highly organized and resource-intensive testing

environments into a waste of resources. From those failures, we learned

to check the weather reports constantly. We also learned how

significantly environmental conditions (such as nearly unavoidable screen

glare caused by the sun at high noon) could affect use. In another

testing situation, a train parked next to a collection site less than an

hour into the event and proceeded to backfire at irregular intervals for

the duration of the data collection. Many people had trouble hearing the

multimedia portions of the app (and rated their experiences accordingly).

The noise held constant across conditions, so although the raw numbers

shifted somewhat, the differences between the groups we investigated

likely did not. During another collection, the cold weather impaired

people’s ability to use the touch screen effectively (apparently cold

fingers do not register well on a touch screen), a problem that

potentially could have been mitigated with a stylus, if we had thought

to bring one. Ultimately, these observations constitute a real view of

the environmental factors that people face in the wild and remind us to

take these things into account in the design process.

The last and perhaps most important issue we faced involved connectivity,

as most outdoor spaces do not have accessible Wi-Fi. Our field

experiments at Yellowstone National Park, for example, have been

severely constrained by connectivity issues. During our initial data

collection with the one-time-use app, we relied on a cell phone data

plan, which proved erratic. For ensuing studies, everything was stored on

the device, allowing the media to load quickly, and data remained on the

local device until we downloaded it to a common file. In studies such as

ours (working in a park with limited connectivity), this higher level of

control allowed for a consistent and expedient experience. Distribution

to Apple devices outside the App Store has improved through services such

as HockeyApp, but we found the Android APK approach to have more robust

features and fewer glitches. So we would recommend staying in an Android

development environment for initial pilot explorations, at least at this

point in history.

Issue 3: Action Research through mobile technologies is not a scholarly magic bullet and entails significant risks

Opportunities:

Conducting Action Research within mobile environments offers solid

methodological choices for many situations. The App-Maker Model can

uncover and build knowledge that other approaches simply cannot reach.

It has been potent, in particular, for our studies of the medium, media

forms, interaction design, remediation, and mobile learning. We have

used mobile technologies to track behaviors in traditional HCI contexts,

such as measuring time on task; in design contexts, such as A/B

comparisons of interface features; in learning contexts, such as

measuring error rates; and in broader medium and media studies, such as

comparing knowledge transfer from a brochure versus a mobile app, and

responses to information in video form versus audio-only. Further, we

have made new media forms, such as location-oriented videos, and studied

how audiences reacted to them. We have explored prompts and triggers for

audience interaction, particularly those that might compel users to

make and share media. All of those different approaches have been

enabled by the opportunities afforded when researchers build their own

mobile app instruments, which, in turn, allow for some forms of

experimental control.

By pioneering in this realm of mobile technologies, and actively

participating in its construction, through building in it and seeking

out new areas to explore, a researcher can break ground and set the

foundation for a field or subfield. Such unexplored territory has

significant value, and the findings can feel thrillingly fresh. When we

have built mobile apps, and have seen what they can do, we often have

small moments in which we truly think we might be the first people on

the planet to have encountered a particular scholarly research

situation. New mobile affordances and applications emerge continually.

Digital humanists have to ask digital-humanist questions in these cases,

because no one else is going to do that for us. Generating an original

thought about that sort of situation can be an exciting moment, and it

happens relatively frequently through this kind of formative research.

Those moments, in turn, can open new research paths of all shapes and

sizes. As a research engine, this approach can reveal many otherwise

obscured issues. Ideas for next steps appear everywhere. Those recurring

moments empower and energize, and research feels less like incremental

boulder rolling and more like an adventure.

Challenges:

Action Research through mobile technologies also can be ineffective and

inefficient for some research pursuits. This App-Maker Model approach,

by nature, is time-consuming and involves laborious cycles of planning,

action, reflection, and so on. Combine that pace of research with the

tedious nature of app development (and publishing), and some researchers

simply might not have the patience or perseverance, or the interest, to

see what eventually might come from such long-term efforts.

Many other kinds of research methodologies have been developed over

decades and proven themselves in specific niches for answering certain

kinds of questions about particular kinds of things. They also have

developed a methodological certainty that comforts many researchers,

administrators, and publishers. Frankly, they are less trouble to use.

Action Research, by nature, might be more exploratory and also more

unpredictable than other types of methodologies. The App-Maker Model

approach also integrates the researcher directly into the research,

rather than keeping operations at arm’s length and safe from direct

influence by stakeholders’ desires, even subconscious ones. Sometimes,

such observational distance helps provide clarity. Likewise, such

detachment can reveal flaws that otherwise could be missed, especially by

researchers invested in the production process.

Risks also can lead to failures, sometimes in grand fashion. The research

community generally wants new ideas and research paths to emerge.

Paradoxically, it often has a difficult time dealing with new

paradigms and findings that challenge the status quo, or scholarly

traditions, in terms of fitting those into pre-existing conventions and

forms. Action Research, for example, has been a widely discussed and

supported scholarly approach for decades, yet its messiness and

methodological uncertainties keep it on the fringe of the academic

publishing world. Work with this approach can be unappreciated or

underappreciated, and confusion about its standards and techniques

contributes to its outlier status. When people in power do not understand

this approach, or dismiss it as unscientific, there are ramifications

beyond just social capital. By not following the specific leads of

others, down well-trodden methodological paths, action researchers can

be ostracized or even punished, especially when issues of promotion and

tenure are raised by non-digital-humanists.

In our experience, for example, we have had research grants from the

National Endowment for the Humanities indiscriminately classified as

“service” work because they funded these sorts of Action Research

initiatives. Beyond the various issues related to credit for the work,

formative studies in any new field like this one can get lost in search

of landmarks, because of the novelty of the technologies and the

inexperience of the test subjects (and researchers) with those

technologies. That should neither come as a surprise nor be grounds for

organizational punishment. This is part of the wayfinding, as getting

lost can sometimes lead to interesting, otherwise unknown places. In the

meantime, working with technologies that change and update rapidly

creates a churning of effort and resources needed just to maintain a

place within the research realm. When operating systems are updated,

when hardware innovations appear, and when societal use patterns shift

dramatically as the newness of an idea wears off, action researchers

need to remain nimble and ready to adapt. Otherwise, they quickly get

left behind, and their work could become irrelevant. Those recurring

moments of shifting and reconfiguration can be exhausting.

Issue 4: New ways of data collection help evolve the types of data researchers can collect

Opportunities:

Mobile technologies allow data to be collected in novel ways. During our

recent research, we have combined traditional ideas with new mobile

techniques, such as inserting survey questions into an app, so that they

are interspersed throughout the experience, rather than solely being

collected at the end. While the survey itself is standard (e.g., asking

for basic demographic information, using Likert-like scaled questions to

better understand responses to the mobile intervention), the process is

fundamentally changed by the technology’s affordances. For example, the

questions can be automatically randomized. This gives each user the

items in a different order, helping to counterbalance order effects, such

as fatigue, that a fixed ordering might cause. The data is entered

directly into the app, limiting data entry mistakes to the ones created

by users, and the app exports the data into a common database form. The

surveys also can keep the user from advancing through the app until all

of the questions are answered, which eliminates missed questions,

prevents users from skipping parts, and keeps the survey data complete.

In turn, the data can be collected in ways that simply have not been

readily available to researchers in the past. For example, as soon as

the research app has been opened, if the user has granted the relevant

privacy permissions, the app records all of the test subject’s

interactions with the mobile device while using that app, including a

GPS trail of where those interactions took place and a time and date

stamp for each. The richness of such deep field data is unprecedented.
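
The survey behaviors described above (per-user shuffling of items and refusing to advance until every item is answered) could be sketched in Python as a small state object. The class name, the 1-to-5 Likert-like range, and the optional location tuple are hypothetical; on a real device, location and timestamps would come from the platform’s own APIs and permission prompts, which are not modeled here.

```python
import random
import time
from typing import Optional

class GatedSurvey:
    """Hypothetical in-app survey: shuffled items, no advancing until complete."""

    def __init__(self, items: list[str], rng: random.Random,
                 scale: range = range(1, 6)):
        self.items = items[:]   # copy, then shuffle the order for this participant
        rng.shuffle(self.items)
        self.scale = scale      # Likert-like scale, 1 to 5 by default (assumption)
        self.answers: dict[str, dict] = {}

    def answer(self, item: str, value: int,
               location: Optional[tuple[float, float]] = None) -> None:
        """Record a response with a timestamp and, if permitted, a location."""
        if item not in self.items:
            raise KeyError(f"unknown item: {item}")
        if value not in self.scale:
            raise ValueError(f"{value} is outside the response scale")
        self.answers[item] = {"value": value, "t": time.time(), "location": location}

    def missing(self) -> list[str]:
        """Items still unanswered, in the order this participant sees them."""
        return [i for i in self.items if i not in self.answers]

    def can_advance(self) -> bool:
        """Gate: the app moves on only when every item has an answer."""
        return not self.missing()
```

Shuffling a copy per participant counterbalances order effects across the sample rather than removing them for any one person, and the gate is what keeps the survey data complete.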

Challenges:

Yet the more data collected from a test subject, the more choices have

to be made about how that data is collected, how that collection process

affects the data, and what the data inherently means. In short, what we

can do now dramatically increases the possibilities and therefore greatly

expands the complexity of what can and could be done. We do not know

yet, for example, how survey data collected in a mobile fashion, within

an app, and as part of a mobile experience, affects the ways in which

test subjects answer the questions and approach those surveys. What

would the results be if a paper survey were included in the same

experience, in place of the mobile survey, and given at the same time?

Other data collection techniques are more complicated and need more

consideration, whether they are extensions of traditional methods or

some sort of new methodology emerging from the primordial technological

ooze. Encountering this data, in turn, resembles discovering a new

species of information. We think we know something about it, but we do

not know enough to make many assumptions without carefully measuring and

tempering our leaps. In the process, we also run the risk of becoming

too reliant on the data and the machinery and losing mindfulness about

what we study and what the data reveals.

This freshness of perspective was, in our experience, the most predictable benefit. The different types of data allow for new ways to triangulate findings and provide ways to validate the data analytically beyond statistical p values. The biggest drawback here goes back to Issue 3: collecting data in a new way does entail risk and doesn’t fit into the prescribed patterns most people are trained in graduate school to recognize and evaluate. Combining a humanistic approach with qualitative and/or quantitative data is historically rare, but scholars are beginning to integrate these approaches more frequently [Kinshuk, Sampson, and Chen 2013] [Lynch 2014] [Spiro 2014]. A journal editor, in turn, could face significant challenges finding appropriate reviewers to whom such an article can be sent for feedback, and junior faculty, eager to build publishing credentials, can hardly afford additional obstacles in an often highly challenging and ultra-competitive peer-review environment.

Discussion

Cognition “in the wild” has been a significant topic for anthropologists since the late 1980s [Crabtree et al. 2013]. Lave and Wenger [Lave and Wenger 1991] furthermore detailed how new members are brought into knowledge communities, and how those communities both transform and reproduce themselves. That process starts with peripheral participation by the new members, but their involvement usually grows in engagement and complexity the longer they are part of the community [Adams, Davies, Collins, and Rogers 2010]. The Maker Movement shares this heritage, as a call for people, including scholars, to return to learning by doing.

Calls for action at various academic conferences we have attended in recent years have regularly challenged researchers to actively pursue innovative methodologies that reflect the distinct affordances of the mobile systems studied, along with an integrated view of the users of those systems [Lew, Nguyen, Messing, and Westwood 2011] [Kjeldskov et al. 2012] [Kjeldskov and Paay 2012]. Action Research approaches, in turn, could be worth more exploration, as long as the opportunities are weighed against the inherent challenges. Instead of waiting for someone else to invent research tools, the way to get started, in summary, is to get started: to try, fail, and try again.

One area not addressed in much depth in the academic literature on this topic, and a primary motivation for us to develop our own programs, is the stark reality that digital humanist researchers, whether studying mobile or not, are constrained and contorted by third-party data and third-party systems created for other purposes (e.g., making profits). Invariably, that subservient dynamic leaves projects restricted by the whims, agendas, and benevolence or malfeasance of the developers. Instead of having independent agency and basic control of the core systematic procedures, as in other applications of the scientific method, many digital humanist researchers today have to settle for whatever mobile data they can get and try to make something useful out of it. Sometimes, even under such heavy constraints, significant insights emerge.

We think there are clear benefits for researchers in the digital humanities to have broader procedural control of their projects, especially through direct involvement in design, delivery, and data-output decisions. The only way to ensure such oversight and jurisdiction in the future, though, is for digital humanists to build these data collection systems and data sets themselves, to the designs of academic researchers. Instead of data created by for-profit ventures, or even by non-profit organizations with other goals, the information accumulated in these cases can be exactly what the researchers planned for and needed, governed by established research protocols, and exported into easy-to-process forms for analysis and interpretation.

Natural settings, typified by field studies, also have been shown to offer significant potential, but mobile researchers have tended not to do as much work “in the wild.” Concerns about control and methodological certainty hold some people back. The App-Maker Model approach offers an acknowledgment of the insider’s positionality and full transparency about the techniques incorporated, including the option to share the specific mobile-app code used, allowing easy replication of studies in different settings. Mobile technologies offer researchers many other new possibilities for better research procedures, too, and many other professions already are embracing that potential within their respective fields. In our experience, using mobile devices for research provides a substantial level of control without the artificiality of a lab experiment. Whether the App-Maker Model approach becomes a primary strategy for the field or remains a secondary or tertiary option, some mobile researchers will continue to benefit while others remain on the sidelines, missing their chance to play and explore in this field as it forms.

From our Action Research paradigm, participating through this App-Maker Model approach was an extremely helpful way for us to transform our understandings of mobile technologies and mobile media from an outsider’s perspective to an insider’s perspective, where we began to understand more completely why people acted in particular ways with their smartphones and tablet computers, often for practical reasons. We did not take this approach as a step toward becoming app developers or industry profiteers. We did not take this approach as a way to circumvent scholarly traditions and work outside the boundaries of rigorously established and highly valued conventions. We did not take this approach as a way to reap academic rewards. Instead, we thought that we understood mobile technologies and mobile media to a certain extent from observing them, but that we might be able to learn more about them by participating in them. We just had no idea how much existed below the surface until we were under there.

If such a perspective seems like a common-sense approach – like the sculptor, who

works with the clay rather than only looking at the creations of others – then

we circle back to the fundamental and underlying question, related to mobile

technologies or not: Why aren’t more scholars, especially in the digital

humanities, experimenting with these kinds of action-research approaches?

Academics working in the digital humanities will never be able to – and

shouldn’t aim to – replicate the approaches and perspectives taken by natural

scientists studying non-human behavior. Action Research, such as through the

App-Maker Model approach, offers legitimate alternatives. But we will only know

how valuable and effective these approaches can be (and become) through applying

them to a variety of situations and environments and writing about those

experiences and responding to the discourse circulating about those efforts.

Mobile media and communication research, as illustrated here, might be an ideal

field for such innovative approaches, as long as researchers can adequately

address and overcome biases and control systems and various other pragmatic

obstacles limiting this type of approach and experimentation. Also, they have to

be willing to take chances and give it a try.

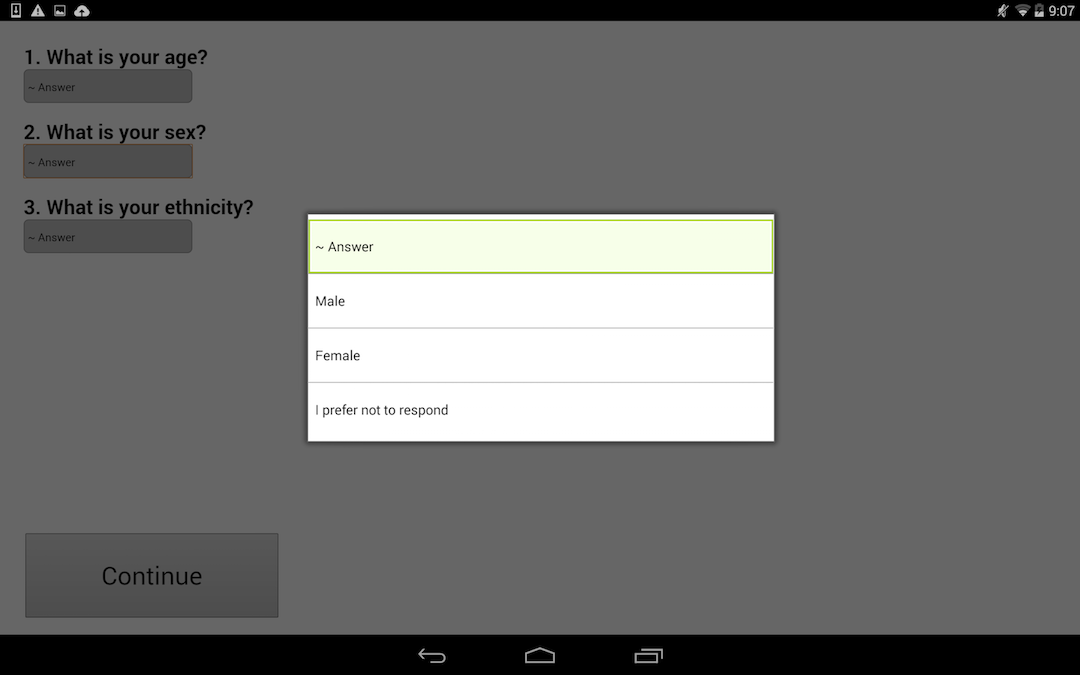

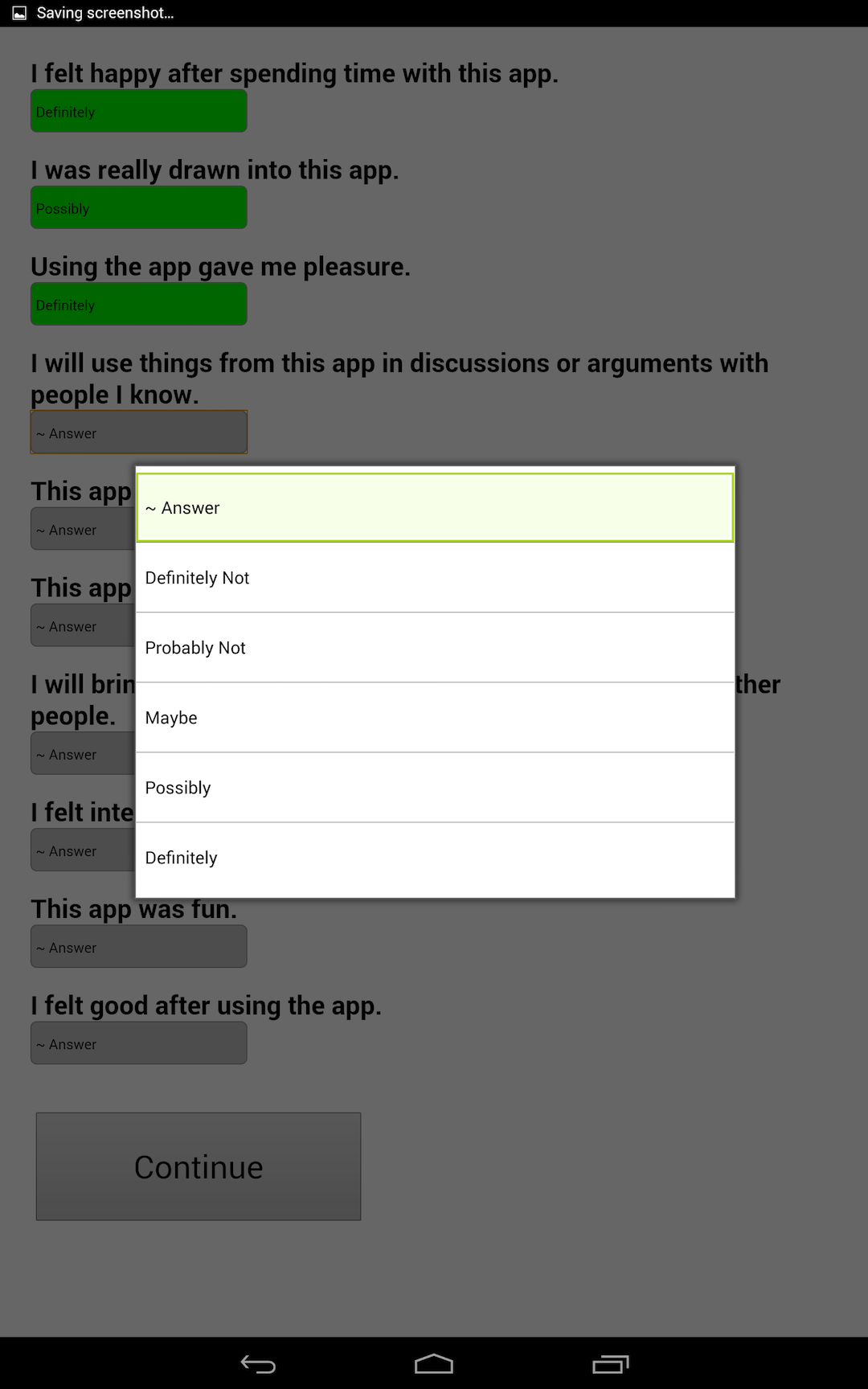

Appendix

Screenshots from the mobile apps mentioned in this paper:

Images showing the research context (Photos courtesy of Michael

Rabby)

Works Cited

Adams, Davies, Collins, and Rogers 2010 Adams, A., Davies, S., Collins, T., and Rogers, Y. (2010). Out there and in here: design for blended scientific inquiry learning. In: 17th Association for Learning Technology Conference ALT-C 2010, 07–09 Sep 2010, Nottingham, UK.

Adams, Fitzgerald, and Priestnall 2013 Adams, A., Fitzgerald, E., and Priestnall, G. (2013). Of catwalk technologies and boundary creatures. ACM Transactions on Computer-Human Interaction (TOCHI), 20(3).

Archer 1995 Archer, B. (1995). The Nature of Research. Co-Design Journal, 2(11), 6–13.

Bolter, Engberg, and MacIntyre 2013 Bolter, J. D., Engberg, M., and MacIntyre, B. (2013). Media studies, mobile augmented reality, and interaction design. interactions, 20(1), 36–45.

Bouwman, de Reuver, Heerschap, and Verkasalo 2013 Bouwman, H., de Reuver, M., Heerschap, N., and Verkasalo, H. (2013). Opportunities and problems with automated data collection via smartphones. Mobile Media and Communication, 1(1), 63–68.

Bruner 1989 Bruner, E. M. (1989). Tourism, Creativity, and Authenticity. Studies in Symbolic Interaction, 10, 109–114.

Burdick et al. 2012 Burdick, A., Drucker, J., Lunenfeld, P., Presner, T., and Schnapp, J. (2012). Digital Humanities. Cambridge, MA: The MIT Press.

Büscher, Urry, and Witchger 2011 Büscher, M., Urry, J., and Witchger, K. (2011). Introduction: Mobile methods. In M. Büscher, J. Urry, and K. Witchger (Eds.), Mobile Methods. New York, NY: Routledge.

Campbell and Groundwater-Smith 2007 Campbell, A. and Groundwater-Smith, S. (2007). An ethical approach to practitioner research: Dealing with issues and dilemmas in action research. New York: Routledge.

Cavanagh 2013 Cavanagh, S. (2013). Living in a Digital World: Rethinking Peer Review, Collaboration, and Open Access. ABO: Interactive Journal for Women in the Arts, 1640-1830, 2(1), 15.

Cheverie, Boettcher, and Buschman 2009 Cheverie,