Works Cited

Agirre et al. 2012 Agirre, E., Barrena, A., De Lacalle, O. L., Soroa, A., Fernando, S., and Stevenson, M. (2012). “Matching Cultural Heritage Items to Wikipedia.” In Proceedings of the 8th International Conference on Language Resources and Evaluation (LREC), pages 1729–1735.

Bingel and Haider 2014 Bingel, J. and Haider, T. (2014). “Named Entity Tagging a Very Large Unbalanced Corpus: Training and Evaluating NE Classifiers.” In Proceedings of the 9th International Conference on Language Resources and Evaluation (LREC), Reykjavik, Iceland.

Bizer et al. 2009 Bizer, C., Lehmann, J., Kobilarov, G., Auer, S., Becker, C., Cyganiak, R., and Hellmann, S. (2009). “DBpedia – A Crystallization Point for the Web of Data.” Web Semantics: Science, Services and Agents on the World Wide Web, 7(3):154–165.

Blanke and Kristel 2013 Blanke, T. and Kristel, C. (2013). “Integrating Holocaust Research.” International Journal of Humanities and Arts Computing, 7(1–2):41–57.

Blei et al. 2003 Blei, D. M., Ng, A. Y., and Jordan, M. I. (2003). “Latent Dirichlet Allocation.” The Journal of Machine Learning Research, 3:993–1022.

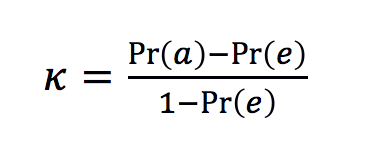

Carletta 1996 Carletta, J. (1996). “Assessing Agreement on Classification Tasks: The Kappa Statistic.” Computational Linguistics, 22(2):249–254.

Cohen 1960 Cohen, J. (1960). “A Coefficient of Agreement for Nominal Scales.” Educational and Psychological Measurement, 20(1):37–46.

Cornolti et al. 2013 Cornolti, M., Ferragina, P., and Ciaramita, M. (2013). “A Framework for Benchmarking Entity-Annotation Systems.” In Proceedings of the 22nd International Conference on the World Wide Web, pages 249–260.

Daiber et al. 2013 Daiber, J., Jakob, M., Hokamp, C., and Mendes, P. N. (2013). “Improving Efficiency and Accuracy in Multilingual Entity Extraction.” In Proceedings of the 9th International Conference on Semantic Systems, pages 121–124. ACM.

DeLozier et al. 2016 DeLozier, G., Wing, B., Baldridge, J., and Nesbit, S. (2016). “Creating a novel geolocation corpus from historical texts.” In Proceedings of LAW X – The 10th Linguistic Annotation Workshop, pages 188–198.

DeWilde 2015 De Wilde, M. (2015). “Improving Retrieval of Historical Content with Entity Linking.” In New Trends in Databases and Information Systems, Volume 539 of Communications in Computer and Information Science, pages 498–504. Springer.

Fernando and Stevenson 2012 Fernando, S. and Stevenson, M. (2012). “Adapting wikification to cultural heritage.” In Proceedings of the 6th Workshop on Language Technology for Cultural Heritage, Social Sciences, and Humanities, pages 101–106. ACL.

Frontini et al. 2015 Frontini, F., Brando, C., and Ganascia, J.-G. (2015). “Semantic Web Based Named Entity Linking for Digital Humanities and Heritage Texts.” In Proceedings of the 1st International Workshop on Semantic Web for Scientific Heritage at the 12th ESWC 2015 Conference, pages 77–88, Portorož, Slovenia.

Han and Sun 2011 Han, X. and Sun, L. (2011). “A Generative Entity-Mention Model for Linking Entities with Knowledge Base.” In Proceedings of the 49th Annual Meeting of the ACL: Human Language Technologies, volume 1, pages 945–954, Portland, OR, USA.

Hengchen et al. 2015 Hengchen, S., van Hooland, S., Verborgh, R., and De Wilde, M. (2015). “L’extraction d’entités nommées: une opportunité pour le secteur culturel?” Information, données & documents, 52(2):70–79.

Hengchen et al. 2016 Hengchen, S., Coeckelbergs, M., van Hooland, S., Verborgh, R., and Steiner, T. (2016). “Exploring archives with probabilistic models: Topic modelling for the valorisation of digitised archives of the European Commission.” In First Workshop “Computational Archival Science: digital records in the age of big data”, Washington DC, volume 1.

Kripke 1982 Kripke, S. (1982). Naming and Necessity. Harvard University Press, Cambridge, MA, USA.

Kupietz et al. 2010 Kupietz, M., Belica, C., Keibel, H., and Witt, A. (2010). “The German Reference Corpus DeReKo: A Primordial Sample for Linguistic Research.” In Proceedings of the 7th International Conference on Language Resources and Evaluation (LREC), Valletta, Malta.

Leidner 2007 Leidner, J. L. (2007). “Toponym resolution in text: annotation, evaluation and applications of spatial grounding.” In ACM SIGIR Forum, volume 41, pages 124–126. ACM.

Lin et al. 2010 Lin, Y., Ahn, J.-W., Brusilovsky, P., He, D., and Real, W. (2010). “ImageSieve: Exploratory Search of Museum Archives with Named Entity-Based Faceted Browsing.” Proceedings of the American Society for Information Science and Technology, 47(1):1–10.

Makhoul et al. 1999 Makhoul, J., Kubala, F., Schwartz, R., and Weischedel, R. (1999). “Performance Measures for Information Extraction.” In Proceedings of the DARPA Broadcast News Transcription and Understanding Workshop, pages 249–252.

Maturana et al. 2013 Maturana, R. A., Ortega, M., Alvarado, M. E., López-Sola, S., and Ibáñez, M. J. (2013). “Mismuseos.net: Art After Technology. Putting Cultural Data to Work in a Linked Data Platform.” LinkedUp Veni Challenge.

Mendes et al. 2011 Mendes, P. N., Jakob, M., García-Silva, A., and Bizer, C. (2011). “DBpedia Spotlight: Shedding Light on the Web of Documents.” In Proceedings of the 7th International Conference on Semantic Systems, pages 1–8, Graz, Austria.

Milne and Witten 2008 Milne, D. and Witten, I. H. (2008). “Learning to Link with Wikipedia.” In Proceedings of the 17th ACM Conference on Information and Knowledge Management, pages 509–518, Napa Valley, CA, USA.

Moro et al. 2014 Moro, A., Raganato, A., and Navigli, R. (2014). “Entity Linking Meets Word Sense Disambiguation: A Unified Approach.” Transactions of the ACL, 2.

Newman et al. 2007 Newman, D., Hagedorn, K., Chemudugunta, C., and Smyth, P. (2007). “Subject metadata enrichment using statistical topic models.” In Proceedings of the 7th ACM/IEEE-CS Joint Conference on Digital Libraries, JCDL ’07, pages 366–375, New York, NY, USA. ACM.

Raimond et al. 2013 Raimond, Y., Smethurst, M., McParland, A., and Lowis, C. (2013). “Using the Past to Explain the Present: Interlinking Current Affairs with Archives via the Semantic Web.” In The Semantic Web – ISWC 2013, pages 146–161. Springer.

Rizzo and Troncy 2011 Rizzo, G. and Troncy, R. (2011). “NERD: Evaluating Named Entity Recognition Tools in the Web of Data.” In Proceedings of the 1st Workshop on Web Scale Knowledge Extraction (WEKEX), Bonn, Germany.

Rizzo and Troncy 2012 Rizzo, G. and Troncy, R. (2012). “NERD: a Framework for Unifying Named Entity Recognition and Disambiguation Extraction Tools.” In Proceedings of the Demonstrations at the 13th Conference of the European Chapter of the ACL, pages 73–76. ACL.

Rodriquez et al. 2012 Rodriquez, K. J., Bryant, M., Blanke, T., and Luszczynska, M. (2012). “Comparison of Named Entity Recognition Tools for Raw OCR Text.” In Proceedings of KONVENS 2012, pages 410–414, Vienna.

Ruiz and Poibeau 2015 Ruiz, P. and Poibeau, T. (2015). “Combining Open Source Annotators for Entity Linking through Weighted Voting.” In Proceedings of the 4th Joint Conference on Lexical and Computational Semantics (*SEM), Denver, CO, USA.

Segers et al. 2011 Segers, R., van Erp, M., van der Meij, L., Aroyo, L., Schreiber, G., Wielinga, B., van Ossenbruggen, J., Oomen, J., and Jacobs, G. (2011). “Hacking History: Automatic Historical Event Extraction for Enriching Cultural Heritage Multimedia Collections.” In Proceedings of the 6th International Conference on Knowledge Capture (K-CAP), Banff, Alberta, Canada.

Speriosu and Baldridge 2013 Speriosu, M. and Baldridge, J. (2013). “Text-driven toponym resolution using indirect supervision.” In Proceedings of the 51st Annual Meeting of the ACL, volume 1, pages 1466–1476.

van Hooland et al. 2015 van Hooland, S., De Wilde, M., Verborgh, R., Steiner, T., and Van de Walle, R. (2015). “Exploring Entity Recognition and Disambiguation for Cultural Heritage Collections.” Digital Scholarship in the Humanities, 30(2):262–279.