What’s Being Done?

To determine what should be done (see the “Recommendations” section), we first need to understand what activities are currently being undertaken to preserve videogames. To do this, two surveys were constructed: one for game developers, and one for game enthusiasts. The surveys were distributed through the International Game Developers Association’s white paper, presented at the Game Developers Conference, announced on the Grand Text Auto blog, and publicized among known developers and gamers, including members of New England Classic Gaming [Montfort 2009].[14] Responses were collected and analyzed using the SurveyMonkey service, and questions were structured as multiple choice, free text, or a combination of the two. Very little demographic data was collected, in order to encourage participation by ensuring anonymity.

Industry Response

- Total respondents: 48

- Completion rate: 33.33% (16 respondents)

- Respondents from companies with 50 or more employees: 26

Forty-eight individuals from the videogame industry responded to the survey, but only

16 answered every question. Of the respondents, 26 were from companies that had 50 or

more employees, and only 6 of these made it beyond the question, “Have you

considered establishing an archives/records management program?” Nine of the 26

responded that they did have a formal program, 15 did not have one, and 2 skipped the

question entirely. Those with formal programs, in both smaller and larger companies,

grouped their records according to project and/or type of asset (e.g. code or

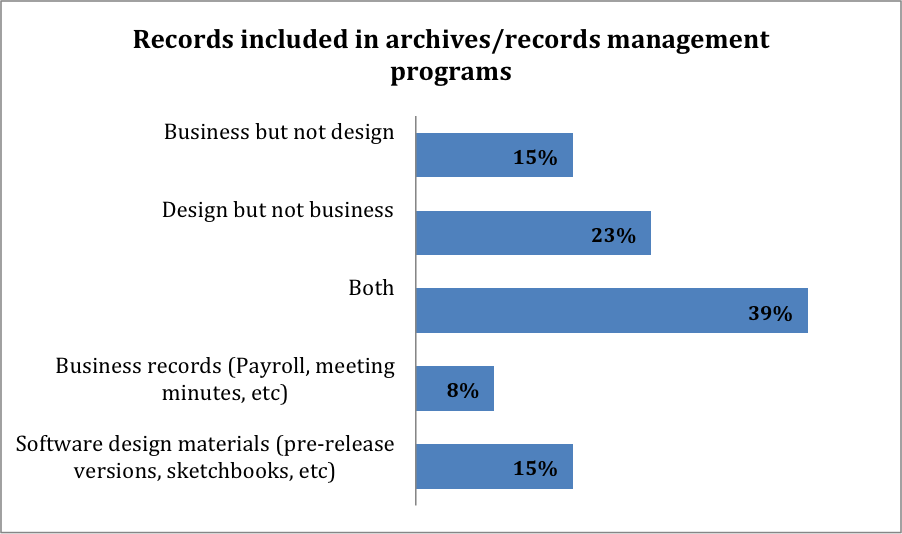

graphic). Figure 1 shows the main divisions of records included

in archives/records management programs.

Comments on this question revealed a range of disorganized preservation activity. Some companies indicated that they used a wiki to manage and preserve their records, many referred to a version control system or code repository, and a few mentioned nightly backup tapes without elaborating on the content of those backups. The most promising comments came from respondents who said they used configuration management (CM) processes and policies for all of their major game builds, though not for individual developer materials.

According to Jessica Keyes, CM “identifies which processes need to be documented” and tracks any changes [Keyes 2004, 3]. Wikipedia expands on this, noting that it “identifies the functional and physical attributes of software at various points in time and performs systematic control of changes to the identified attributes for the purpose of maintaining software integrity and traceability throughout the software development life cycle.”[15]

Unfortunately, in answering the very same question, these respondents admitted that many, if not most, developers have no CM training.

Using a code repository or asset management system is certainly an important part of a good CM plan. And yet, although such systems came up in responses to an earlier question, only 50% of respondents said they used them when asked specifically.

Although 2 respondents said that they did use records schedules, most free-text

responses regarding how permanence is determined displayed a fairly casual attitude

towards preservation. There were many references to item types that were necessary or

reusable being kept, while everything else was tossed, and to materials only being

kept for successful games. Some companies retained related documentation, while

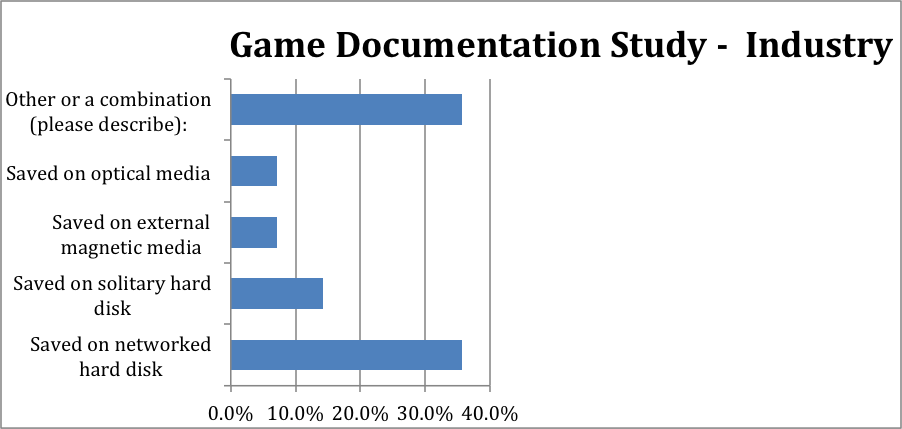

others did not. Storage environments (Figure 3) ranged from

enshrining physical records in an off-limits closet to a hard drive stored under a

developer’s desk. The word “whim” in reference to an individual deciding the

fate of records was used more than once.

In summary, there seems to be a tendency within the game industry to make preservation decisions at the level of the individual developer, with no official guidance. When Donahue put the question of preservation to a GarageGames developer at the Foundations of Digital Games conference, he responded, “[laugh] We don't. Other than the source code of a game that ships, we don't care. I just had someone looking for annotated versions of our tools and we don't have them.” To be fair, this is understandable. Game developers tend to work to a fixed deadline: the majority of their income is made in the holiday season, so they need to get releases out early in the fourth quarter. They also operate on a finite budget, which tends to mean fewer programmers working longer hours, with everyone moving at too breakneck a pace to give any thought to what happens to interim products.

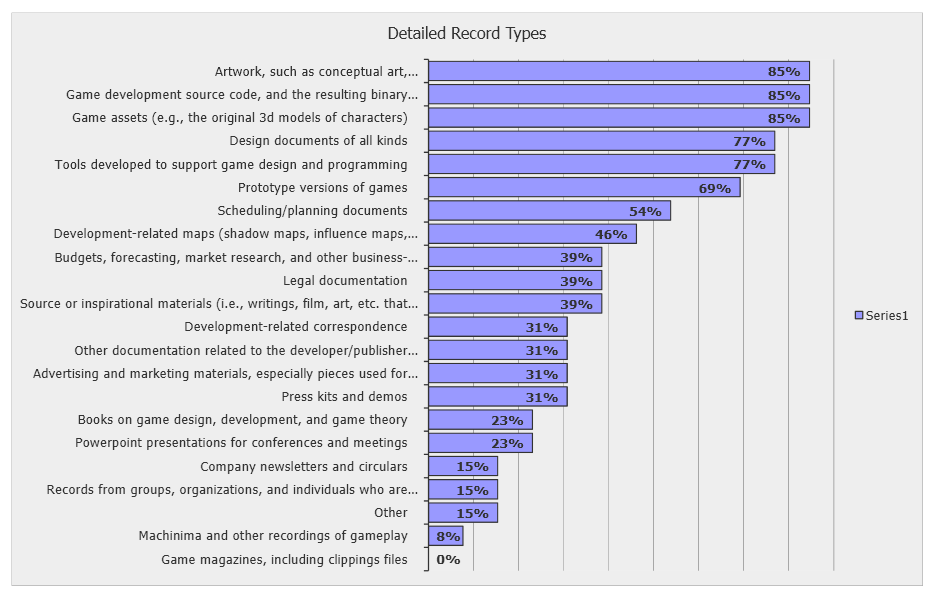

The survey also asked respondents to indicate what kinds of digital and analogue objects they were preserving (Figure 2). Every category of material listed in the questionnaire was saved by at least one company, except for “Game magazines/clippings files.” The most popular categories were source code and compiled binaries, along with game assets and artwork, each preserved by 85% of respondents. Following closely behind, at 77% each, were design documents and tools developed to support game creation.

Only 8% saved recordings of game play or machinima (video productions that use a game engine as the recording/acting mechanism). This finding contrasts sharply with the practice of game players. At the time of this writing, a YouTube search for “speed play game” yields 14,800 videos and “game play demo” yields 73,100 results. One user community project, “C64 Longplays,”[16] offers 270 videos of end-to-end game play and 199 additional, more general videos of “the most popular or obscure” games.

Of the 12 respondents who answered the question about preservation tactics, 7 reported simply saving the files in their original format, 1 migrated them to an unspecified preservation format, and 3 transformed files on demand if the game was needed for some other purpose (such as a re-release) in the future. One respondent went so far as to say that once a game’s platform is gone, the software is, too. Emulation was mentioned only as a test platform during development. Responses to the questions on description revealed that metadata, if it is captured at all, tends to be recorded as unstructured text rather than according to a schema or authority list.

Additional comments support the interpretation that the industry has little interest in preservation. Many game companies are simply not concerned with their games beyond the development lifecycle. If the results of this survey reflect the practices of the industry at large, we are in serious danger of losing large chunks of our cultural heritage. The concept of “benign neglect” can be applied safely to paper records and even some analog audiovisual media, but applied to digital objects, it will almost certainly consign them to the dustbin as soon as they fall out of active use.

Community Response

- Total respondents: 62

- Completion rate: 87% (54 respondents)

- Respondents with preservation experience: 19

Luckily for future historians, gamers, and students, the player community is very active in preserving the software and artifacts of the games they love. If enthusiasm can be inferred from completion rate, the two surveys reveal very different levels of interest. Recall that only 16 (or 33%) of those who started the industry survey completed it, which some would consider a decent response rate. By contrast, 87% of community respondents completed their survey, generating 54 full responses, and numerous people emailed to say they wished they had had a chance to take the survey before it closed.
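The completion rates quoted above follow directly from the reported counts (16 of 48 industry respondents, 54 of 62 community respondents); the ratio on the right is our own arithmetic rather than a figure reported by the surveys:

```latex
\frac{16}{48} \approx 0.333 \;(33.3\%), \qquad
\frac{54}{62} \approx 0.871 \;(87.1\%), \qquad
\frac{54/62}{16/48} = \frac{2592}{992} \approx 2.6
```

In other words, community respondents were roughly two and a half times as likely as industry respondents to finish the survey.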

Illustrating the diversity of the community, respondents were split between those who collected only officially released material and those who collected unreleased material as well. The systems that interested them ranged from the Vectrex (1982–84, a home system with a built-in display that used vector graphics rather than the more common raster graphics) and Pong (1972 and after, a single-game console originally popularized in bars) to the PS3 and Wii. Thirty percent collected ephemera, and about half used emulators to play games for the systems that interested them. The free-text comments on the emulation experience were rich and, because emulation is a primary means of access to obsolete systems, deserve further exploration and analysis in a later study.

The enthusiasts were also asked which websites they regularly visited and/or contributed to; 77% did both. These websites represent a very valuable preservation contribution by the game community. Sites like GameFAQs.com, MobyGames, and Home of the Underdogs (before its unfortunate demise) provide metadata and contextual information at a level many cataloguers would envy. This data is essentially peer-reviewed, since scores of well-informed visitors constantly view and update it, and some sites have a formal moderation system for quality control.

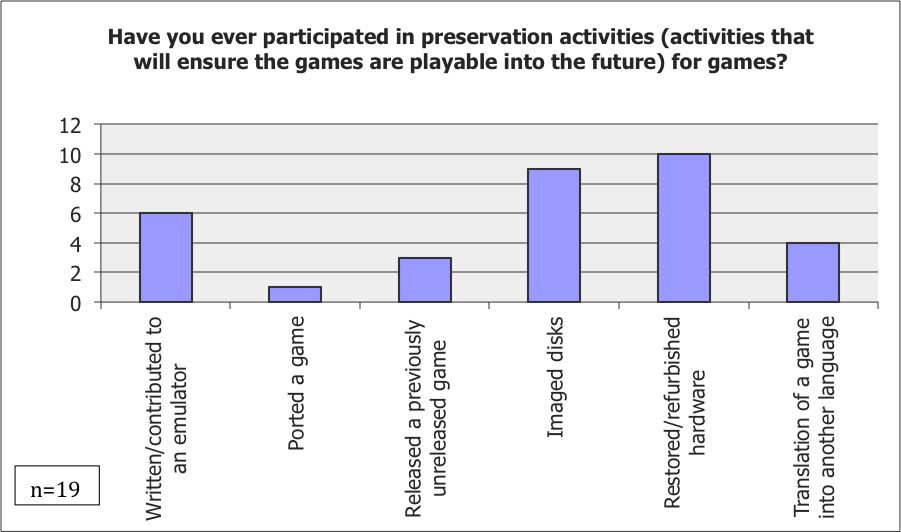

Nineteen of the respondents had engaged in preservation activities beyond website participation. The most common activities were contributing to an emulator, imaging disks, and restoring/refurbishing hardware (Figure 4). More unusual activities included designing a preservation architecture,[17] writing preservation guidelines for developers, and curating game-related exhibits. Additionally, three of the respondents put their collections to good use by releasing previously unreleased games to the public. Whether these were released as ROMs or on physical media is unclear,[18] but both have been known to happen: Mike Tyson’s Intergalactic Punch Out for the NES was released as a ROM,[19] while Star Wars Ewok Adventure for the Atari 2600 was released in a limited run of 30 cartridges.[20]

Information Professionals

The archival literature has, relatively speaking, a dearth of preservation-centered articles on interactive objects. InterPARES (International Research on Permanent Authentic Records in Electronic Systems) is a series of projects investigating problems related to the “authenticity, reliability, and accuracy of electronic records throughout their life cycle.”[21] While InterPARES 2 dealt with “Experiential, Interactive and Dynamic Records,” its focus was on files generated by artists, musicians, and scientists, rather than on software. Nevertheless, a repository looking to preserve videogames would do well to follow the “Preserver Guidelines” laid out in the Project Book [22].

In particular, the oft-repeated mantra of electronic records management, getting involved early in the life cycle, is important to game preservation. The dangers of obsolescence make it extremely useful to have the source code for games of enduring value. Because of the nature of development and the rapidly changing market, raw source code is more likely to be available before a game is officially released, that is, before it has been compiled into the executable programs we run on our systems at home. Taking a disk image of an official release (making a hardware-independent copy) yields only the binary files, and these are difficult to migrate to other formats should that become necessary. The trouble with binary files is that they obscure the underlying code and are difficult, if not impossible, to decompile. With access to the source code, however, an enterprising programmer could change small portions of it to create a source port to a different operating system. The situation is somewhat comparable to having negatives rather than only prints of photographs: you can do a lot more with the negatives, and the same is true for source code.

An example of the utility of source code can be seen in the source ports available at DoomWorld [23], which were made possible by id Software’s public release of the Doom engine source code (later licensed under the GNU General Public License), as well as by the efforts of Doom enthusiasts who ported the game to other operating systems. Because only the engine was released, players still needed a copy of the accompanying game data files in order to play, which helped protect id’s intellectual property rights. The game engine essentially acts as a driver or interpreter for the graphics, sound, and other individual objects that make up a complete game.
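To make the engine/data split concrete, the sketch below reads the lump directory of a Doom WAD file, the container in which Doom’s graphics, sounds, and levels are stored separately from the engine executable. It assumes the standard WAD layout (a 12-byte little-endian header holding a magic string, a lump count, and a directory offset, followed by 16-byte directory entries). The names `read_wad_directory` and `build_wad` are hypothetical, chosen for illustration; neither comes from any DoomWorld source port.

```python
import struct

# Standard Doom WAD layout (all integers little-endian, signed 32-bit):
#   header:    4-byte magic (b"IWAD" or b"PWAD"), lump count, directory offset
#   directory: one 16-byte entry per lump: data offset, data size, 8-byte name
HEADER = struct.Struct("<4sii")
ENTRY = struct.Struct("<ii8s")


def read_wad_directory(data: bytes) -> list[tuple[str, int, int]]:
    """Return (name, offset, size) for each lump listed in a WAD's directory."""
    magic, num_lumps, dir_offset = HEADER.unpack_from(data, 0)
    if magic not in (b"IWAD", b"PWAD"):
        raise ValueError(f"not a WAD file (magic {magic!r})")
    lumps = []
    for i in range(num_lumps):
        offset, size, raw_name = ENTRY.unpack_from(data, dir_offset + i * ENTRY.size)
        # Names are up to 8 ASCII bytes, padded with NULs.
        name = raw_name.split(b"\0", 1)[0].decode("ascii", errors="replace")
        lumps.append((name, offset, size))
    return lumps


def build_wad(lumps: dict[str, bytes], magic: bytes = b"PWAD") -> bytes:
    """Hypothetical helper: pack named lumps into a minimal WAD for testing."""
    body = bytearray()
    entries = []
    offset = HEADER.size  # lump data starts right after the 12-byte header
    for name, payload in lumps.items():
        # struct's "8s" format pads short names with NUL bytes.
        entries.append(ENTRY.pack(offset, len(payload), name.encode("ascii")))
        body += payload
        offset += len(payload)
    # The directory follows the lump data, so its offset is the final `offset`.
    return HEADER.pack(magic, len(entries), offset) + bytes(body) + b"".join(entries)


wad = build_wad({"MAP01": b"\x01\x02", "PLAYPAL": bytes(768)})
print(read_wad_directory(wad))  # [('MAP01', 12, 2), ('PLAYPAL', 14, 768)]
```

A source port only has to understand this data format; the lumps themselves come from the player’s own copy of the game, which is why releasing the engine alone left id’s content protected.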

This discussion of objects other than binary files brings us to another important guideline laid out by InterPARES 2: identify all digital components. One of the challenges of preserving a videogame is that referring to it in the singular is deceptive. A videogame is actually made up of as many as hundreds of different objects, some of which may be hidden, depending on the settings of the operating system used to access it. Making sure that every file is identified and every file type is known is an essential part of keeping an accurate record of the program. This is another area where having the source code helps. Identifying a file merely as a binary or executable is essentially useless, because all information about the file’s structure and programming is obscured.
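A first pass at identifying every component can be automated. The sketch below walks a game’s directory tree, including files the operating system might hide from a casual view, and records each file’s path, size, and a type guessed from its opening bytes (“magic numbers”). The signature table is a tiny illustrative subset chosen for this example; production identification tools such as DROID (which draws on the PRONOM registry) or the Unix `file` utility rely on far larger signature databases. The function names are hypothetical.

```python
import os

# Illustrative subset of file signatures ("magic numbers"); not exhaustive.
SIGNATURES = [
    (b"\x89PNG\r\n\x1a\n", "PNG image"),
    (b"\x7fELF", "ELF executable"),
    (b"IWAD", "Doom IWAD data"),
    (b"PWAD", "Doom PWAD data"),
    (b"RIFF", "RIFF container (e.g. WAV audio)"),
    (b"MZ", "DOS/Windows executable"),
]


def identify(path: str) -> str:
    """Guess a file's type from its first bytes; 'unidentified' flags it for review."""
    with open(path, "rb") as f:
        head = f.read(16)
    for magic, label in SIGNATURES:
        if head.startswith(magic):
            return label
    return "unidentified"


def inventory(root: str) -> list[dict]:
    """List every file under root, hidden ones included, with size and guessed type."""
    records = []
    # os.walk does not skip dot-files or files with a "hidden" attribute.
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # sort in place so the traversal order is deterministic
        for name in sorted(filenames):
            full = os.path.join(dirpath, name)
            records.append({
                "path": os.path.relpath(full, root).replace(os.sep, "/"),
                "size": os.path.getsize(full),
                "type": identify(full),
            })
    return records
```

Anything reported as “unidentified,” or identified only as a generic executable, is exactly the kind of component the guideline asks an archivist to investigate further, ideally with the help of source code or developer documentation.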

Similar to InterPARES is the Variable Media Network’s questionnaire for new media artists.[24] The questionnaire allows a curator to learn from the creator exactly which aspects of his or her work must be presented for an accurate representation to be made in the future. It also helps to clarify any rights issues and provides a rationale for recording the specific technologies used. A similar questionnaire for videogame creators would allow archivists and historians to track the evolution of design platforms, in addition to clarifying technical metadata and intellectual property concerns.

Beyond these two projects, some of the most useful things to come out of the

archival world are standards for metadata and the Open Archival Information System

(OAIS) reference model, which have obvious applications and import for any digital archive.

[25]

Preservation through Adaptation

Having taken a preliminary look at how three different communities of practice approach the problem of video game preservation, we will now consider preservation strategies that involve reprogramming, reimplementation, re-creation, and adaptation. Our contention is that one of the preservation models governing certain categories of virtual worlds, such as non-commercial interactive fiction (IF), operates under the sign of modification rather than reproduction.[26]

Consider, for example, the case of Mystery House, the first graphical work of interactive fiction ever created. Released into the public domain in 1987, Mystery House was later reimplemented in the Inform programming language as Mystery House Taken Over (MHTO) by the Mystery House Taken Over Occupation Force, which consisted of Nick Montfort, Dan Shiovitz, and Emily Short [Montfort et al 2004]. While the reimplemented game hews closely to the original Apple II version, even going so far as to deliberately reproduce a programming error, it also departs from its antecedent in interesting ways: sometimes intentionally (the original requirement to issue commands in upper-case text is pronounced “too dreary to bother reproducing,” for example),[27] and sometimes inadvertently and unavoidably (as is consistent with migration as a preservation strategy more broadly). Moreover, the game’s commented code demonstrates how MHTO oscillates between a preservation project and a remix project: while the comments record a wealth of information about the original game, they also reflect a commitment to getting a younger generation of game enthusiasts interested in early IF by modding and remixing it. As a result, documentary and explanatory comments coexist with generative ones. Players learn how the early graphics were coded, for instance, even as they are encouraged to substitute their own images for the crude line drawings that Ken and Roberta Williams originally included. Suggestions to remove a line or “rip out” a game behavior are interspersed throughout the source code, and a number of programming decisions were made with player customization in mind.

It is clear that the MHTO Occupation Force, which commissioned ten contemporary digital artists to mod the game using a kit designed for the purpose, sees its reimplementation as an intermediary node in the genealogy of Mystery House: one that honors its predecessor and anticipates its successors, poised between what was and what will be. Nick Montfort’s contribution, “Mystery House Kracked by the Flippy Disk,”[28] pays homage to the 1980s-era hackers who broke the copy protection of commercial games and made them available for download on bulletin board systems. Montfort overlays the original Mystery House graphics with fragments of Apple II crack screens, the piratical equivalent of graffiti tags, which include the aliases of the crackers and shout-outs to fellow pirates. By ingesting such materials into the game itself, he inverts the customary relationship between text and context, transforming the game into an exhibition space for a particular class of vintage artifacts that help make first- and second-generation computer adventure games intelligible.

In its conceptualization and design, the MHTO project reflects a view of authenticity that the archives scholar Heather MacNeil ascribes to Heidegger when she argues that cultural objects are “in a continuous state of becoming” [MacNeil and Mak 2007, 33]. From this perspective, it is not stasis or fixity that guarantees authenticity, particularly in the digital realm, but rather change and transformation; authenticity is “an anticipation, a process, and a continuous struggle” to become [MacNeil and Mak 2007, 29]. Paul Eggert neatly encapsulates the ineluctable truth behind this model: “works do not stand still” [Eggert 2009, 13]. As MacNeil notes, while this formulation of authenticity is controversial in the field of archives and preservation, where practitioners are dedicated to stabilizing cultural records, it has been fully naturalized over the last 15–20 years in textual scholarship, a branch of literary studies that is concerned with the transmission of texts over time and that can productively be viewed as a sister discipline to archival science [MacNeil and Mak 2007, 33–38] [MacNeil 2005, 270]. If we plot these two views of authenticity along what Eggert calls the “production-consumption spectrum of the life of the work,” it becomes evident that the principle of fixity, which privileges authorial agency, falls on the production end of the spectrum [Eggert 2009, 18], while the principle of transformation, which privileges user agency, falls on the consumption end.[29] In the first case, the authenticating function is vested in the author; in the second, in the user.

It would be a mistake, however, to seize on the apparent oppositions. The two approaches converge in their shared commitment to the continuity of the intellectual object; where they diverge is primarily a function of how much variance each is willing to tolerate in the different manifestations and expressions of the work. Moreover, the two value systems, and the preservation strategies through which they are realized, often work together rather than at cross-purposes. The player community not only reprograms and modifies classic games but also develops emulators for them, allowing users to experience the games in an environment that mimics an obsolete platform or operating system and in a form that clones the structure and contents of the original software data files. For this reason the community may be said to be Janus-faced, looking forward to new incarnations of influential or obscure titles while at the same time looking back to canonical versions and official releases. The availability of Mystery House both as reverse-engineered source code written in a modern programming language and as a disk image of the original executable program (a byte-exact copy that can be run in an Apple II emulator) testifies to this basic duality, a duality that need not imply incompatibility.

Patterns of Interaction Across Communities

These inter-preservational approaches occur not only synchronically within a single community but also diachronically across communities. The patterns of interaction often resemble those found in architectural preservation, where there is a clear reciprocity between the residents of buildings, on the one hand, and preservationists, on the other. Writing about the functions and roles that each of these stakeholder groups assumes, Paul Eggert summarizes the dynamic that unfolds over time: “only adaptive reuse [by generations of inhabitants] will have put very old buildings in a position to be proposed for professional conservation and curation in the present” [Eggert 2009, 22–23]. In digital game preservation, an analogous relationship exists between user modification and institutional appraisal: players augment and extend the cultural relevance of computer games by modifying them for successive generations of users, and these adaptations in turn increase the likelihood that professional archivists will eventually judge the games worthy of digital preservation.

A good example is Colossal Cave Adventure, created by Will Crowther c. 1975, which has the distinction of being the first documented computer text-adventure game. Inspired by Kentucky’s Mammoth Cave system, the game inaugurated many of the conventions and player behaviors now associated with the genre, such as solving puzzles, collecting treasure, and interacting with the simulated fantasy world via short text commands [Jerz 2007, par. 1 and passim].[30] Originally written in FORTRAN, the code has been repeatedly ported and expanded by hackers, fans, and programmers over the years, most influentially by Don Woods (c. 1977), who, among other things, introduced a scoring system and player inventory [Jerz 2007, pars. 10, 22, and 36]. The versions of Adventure are legion, and their number continues to grow; players map the evolution of the game using tree-like structures that show patterns of inheritance and variation.[31] In 2008, the Preserving Virtual Worlds (PVW) project team selected Adventure as part of its archiving case set. After 34 years of continuous transformation under the stewardship of the user community, Adventure (in one or more of its protean forms) has now been ingested into an institutional repository, where it will undergo periodic integrity checks to ensure the inviolability of its bitstream over time. Professional preservation of Adventure will not supplant player preservation; rather, the two will coexist. But it is fair to say that the PVW team would not have intervened in the absence of the transformational history that has kept Adventure alive for more than three decades.
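Periodic integrity checks of this kind are typically implemented as fixity checks: a checksum is recorded for each file at ingest and recomputed later, and any difference signals that the bitstream has changed. The sketch below assumes SHA-256 and a simple path-to-digest manifest; we do not know which algorithm or software the Preserving Virtual Worlds repository actually uses, and the function names are hypothetical.

```python
import hashlib
import os


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large disk images need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def make_manifest(paths: list[str]) -> dict[str, str]:
    """Record a reference checksum for each file at ingest time."""
    return {p: sha256_of(p) for p in paths}


def audit(manifest: dict[str, str]) -> dict[str, str]:
    """Re-hash each file and report anything missing or changed since ingest."""
    problems = {}
    for path, expected in manifest.items():
        if not os.path.exists(path):
            problems[path] = "missing"
        elif sha256_of(path) != expected:
            problems[path] = "checksum mismatch"
    return problems
```

An empty result from `audit` means every file still matches its ingest-time digest. Because the check compares digests rather than contents, the manifest itself has to be stored and protected separately; a manifest kept alongside the files it describes can be damaged along with them.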

Most of the game modifications discussed thus far are the product of what Alan Liu would call “smart constraints” [Liu 2006]: they are aesthetic, stylistic, and ludic in nature, encompassing changes to levels, characters, textures, descriptions, objects, and storylines. Generally speaking, they are creative hacks, designed within the parameters of a given genre, that are intended to enhance game play. Dumb constraints, by contrast, involve various concessions to technology; they are the unavoidable consequence of living in a material world where limited computer memory, storage, and bandwidth compromise, or at least modulate, artistic vision. While the distinction between smart and dumb constraints is by no means absolute, its utility lies in allowing us to generalize, at a sufficiently high level, about two variables affecting game mods.

Although primarily focused on the role of smart constraints in the development of Adventure, Dennis Jerz also pays attention to dumb constraints in his groundbreaking article on the game. He quotes M. Kraley, a former colleague of Crowther’s, who recounts the first time his friend introduced him to Adventure: “[O]ne day, a few of us wandered into [Crowther's] office so he could show off his program. It was very crude in many respects – Will was always parsimonious of memory – but surprisingly sophisticated. We all had a blast playing it, offering suggestions, finding bugs, and so forth” (my emphasis) [Jerz 2007, par. 20]. Here, Kraley captures with a few swift strokes the long-standing tension between code size and program performance, a tension that would have been particularly pronounced in an era when computer memory was measured in kilobytes rather than gigabytes. By the time Crowther was modeling the “twisty little passages” of the Kentucky cave system in his text-based world, the principle of parsimony was already axiomatic. Steven Levy locates its origins in the mainframe computer culture of the 1950s and 1960s, when “program bumming,” or “the practice of taking a computer program and trying to cut off instructions without affecting the outcome,” emerged as a distinct aesthetic. It was forged in the crucible of limited physical memory and punchcard media, whose minimal storage capacity gave programmers a strong incentive to make their code as efficient as possible and thereby reduce the size of the program deck [Levy 1984, 26]. What began as a consequence of mundane technological circumstance quickly evolved into a competitive art form, and a hacker eager to prove his programming chops could do worse than find a bloated program to compress into fewer lines of code. “Some programs were bummed to the fewest lines so artfully,” Levy notes, “that the author's peers would look at it and almost melt with awe” [Levy 1984, 44].
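The idea behind bumming, cutting work out of a program without changing what it computes, can be illustrated with a modern example of our own choosing; it is not one of the programs Levy describes. Both Python functions below count the 1-bits in a non-negative integer below 2**32. The second relies on the well-known trick that `x & (x - 1)` clears the lowest set bit, so its loop runs once per set bit rather than once per bit position.

```python
def popcount_naive(x: int, width: int = 32) -> int:
    """Count 1-bits by testing every bit position: always `width` loop iterations."""
    count = 0
    for i in range(width):
        if x & (1 << i):
            count += 1
    return count


def popcount_bummed(x: int) -> int:
    """Same result for 0 <= x < 2**32, but the loop runs only once per 1-bit,
    because x & (x - 1) clears the lowest set bit of x."""
    count = 0
    while x:
        x &= x - 1
        count += 1
    return count


# The "bummed" version must not change the outcome.
for value in (0, 0b1011, 0x80000000, 0xFFFFFFFF):
    assert popcount_naive(value) == popcount_bummed(value)
print(popcount_bummed(0b1011))  # 3
```

For a value such as 0x80000000, the naive version still iterates 32 times while the bummed one iterates once: the outcome is unchanged and only the work shrinks.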

Parsimony is as much a gamer creed as it is a hacker creed. It is evident in the

subculture of

speedruns: recorded demos of players (or “runners”)

who complete a video game as quickly as possible by optimizing the play-through with

no reversals, mistakes, or wasted effort.

[32]

Like code bums, speedruns represent a trend toward increasing efficiency,

rationalization, and speed. They may not, however, involve the same degree of

capitulation to dumb constraints, notwithstanding the clever exploitation of glitches

in the game system to improve a run.

[33]

The migration of parsimony from the code level to the game level is discernible in other ways as well. The concept implies not only efficiency but also economy of expression, a “less is more” ethos. It is this dimension of parsimony that game mods based on dumb constraints often reflect. One sees it, for example, in the readiness of an earlier generation of software pirates to sacrifice seemingly significant game properties for the sake of low-bandwidth “warez” distribution. The resulting game rips, which stripped the digital objects of high-end features such as audio or video,[34] recall Kraley’s association of parsimony with crudity. But crudity, as Matthew Kirschenbaum has observed, is a core tenet of hackerdom, not to be confused with lack of skill [Kirschenbaum 2008, 226–227]. It is consistent with a set of software design maxims that fly under the banner of pragmatism and simplicity, with names such as “Worse is Better,” “Keep It Simple, Stupid,” and “The Principle of Good Enough.”[35] Taken together, these maxims imply a coherent approach to the problem of significant properties.

As part of the CAMiLEON emulation project, Margaret Hedstrom and Christopher A. Lee have defined significant properties within the context of digital preservation as “those properties of digital objects that affect their quality, usability, rendering, and behaviour” [Hedstrom and Lee 2002, 218]. Similarly, the authors of the InSPECT report describe them as essential characteristics “that must be preserved over time in order to ensure the continued accessibility, usability, and meaning of the objects” [Wilson 2007, 8]. Despite the availability of these definitions, the InSPECT authors lament that “most of those who use the term would be hard-pressed to define it or say why it is important” [Wilson 2007, 6], adding that “to date, little research has been undertaken on the practical application of the concept and approach. It is therefore widely recognized that there is a pressing need for practical research in this area, to develop a methodology, and begin identifying quantifiable sets of significant properties for specific classes of digital object[s]” [Wilson 2007, 7].

The convictions of the hacker community offer an interesting counterpoint to such ambivalence. The manifesto-like intensity with which hackers revere the principle that “all information should be free” [Levy 1984, 40], along with its corollaries, the freedom to tinker and share, means that reproducibility functions as a kind of acid test for significant properties. Outwardly, there is nothing particularly remarkable about this: the relationship between the sustainability of a creative object and the iterability of its constituent parts is widely recognized. The subject receives what is perhaps its fullest treatment in Nelson Goodman’s Languages of Art, which remains one of the most formidable works on semiotics ever published. Goodman states that “the study of such engineering matters,” including the “duplicability,” “clarity,” “legibility,” “maneuverability,” and “performability” of the individual units that comprise a notational system, is “fascinating and [intellectually] profitable” [Goodman 1976, 154].

But while reproducibility is likely to inform any conversation about significant properties, it assumes pride of place in the context of software hacking. This is not to dismiss other criteria: one need only invoke the notorious failure of the 1982 Atari VCS port of Pac-Man to realize that once a cultural artifact achieves iconic status, it becomes much harder to weigh the trade-offs between paying homage to the original and yielding to the technical exigencies of reimplementation on a different platform. Still, the siren call of adaptive reproduction frequently outweighs all other considerations. Indeed, a recurring theme “that runs throughout the history of the Atari VCS platform,” according to Nick Montfort and Ian Bogost in Racing the Beam, is “the transformative port,” especially of arcade games [Montfort and Bogost 2009a, 23]. In this context, what counts as “significant” is whatever the target platform can accommodate; properties that resist being shoehorned into the new system end up as collateral damage. In the case of the Pac-Man port, these included such venerable features as the paku-paku sound effects and the distinctive visual attributes of the ghosts, bonus fruits, and food pellets [Montfort and Bogost 2009a, 77–79]. The logic of adaptive reproduction, however, means that many properties that are casualties of an initial port can be reintegrated into subsequent ones. As Montfort and Bogost note, “later VCS Pac-Man hacks and rebuilds” reintroduced “credible arcade sounds, revised colors, better sprite graphics, and colored fruit” [Montfort and Bogost 2009a, 77]. Thus a relatively crude rendition of the game paved the way for more faithful renditions down the road, an ongoing process that demonstrates how systems of semiotic relevance can be recalibrated over time in response to changing techno-cultural circumstances.[36]

Although a work of variable media art rather than a video game, the history of

William Gibson’s

Agrippa illustrates another model of

digital preservation that relies on asynchronous collaboration between different

communities of practice, in this case hackers and scholars.

[37] As with

Adventure,

the transmission and reception of

Agrippa dramatizes the

interaction between preservation-as-adaptation and preservation-as-reproduction, with

the difference that dumb constraints play an even more decisive role in the fate of

Agrippa. The crux of the challenge for hackers in

1992 was how to take a complex, auto-destructive, and cross-media work of art stored

on a 3.5” floppy; copy it; and reformat it for online distribution prior to the era

of the graphical web browser and at a time when ASCII was still the predominant

currency of the internet. The crude hack they devised allowed

Agrippa to survive as a text-only fragment on the network for sixteen

years, creating the pre-conditions for its eventual emulation by Matthew

Kirschenbaum, Doug Reside, and Alan Liu in late 2008.

[38]

Agrippa was co-authored by the writer William Gibson and

the artist Dennis Ashbaugh, and published by Kevin Begos, Jr. in 1992. Described in

contemporary press releases as a “multi-unit artwork,” it is difficult to classify, both physically and

generically [Gibson et al 1992, introduction]. It was originally sold in

two versions: the deluxe version came wrapped in a shroud, its cover artificially

aged and its pages scorched – “time-burned,” like the photo album described in

Gibson’s poem, which functions as the central node of the work. Inside are etchings

by Ashbaugh and double columns of DNA that ostensibly encode the genome of a fruit fly [Kirschenbaum 2008, xi]. Nestled in the center of the book is a

3 ½ inch floppy disk that contains a poem by Gibson, a meditation on time, memory,

and decay. Its governing metaphor is that of the mechanism, “a trope,” notes Matthew Kirschenbaum, “that manifests itself as a photograph album, a Kodak camera, a pistol, and a traffic light, as well as in less literal configurations” [Kirschenbaum 2008, ix].

Agrippa,

among other things, is about our misplaced faith in the permanence and objectivity of

media. Like human memories, media distort, invent, and erase the very objects they’re

designed to preserve: handwriting fades and becomes illegible, photographs break the

fourth wall by constantly reminding us of the world that lies just outside the

picture frame, and inert technological artifacts put up no resistance when new ones

come along to replace or destroy them. Paradoxically, the speaker of the poem has recourse to his own recollections to supplement the incomplete records of the past,

records that were originally intended to compensate for the limitations of memory. By

such a process, he tries to recapture, for instance, the smells of the sawmill once owned by his father, whose “tumbled boards and offcuts” are pictured in an old photograph [Gibson et al 1992].

Drawing on a synaesthetic imagination, he uses the visual stimulus to prompt an

olfactory memory. This complex interplay between mechanism and memory structures the

poem as a whole and shapes its manifold meanings.

Agrippa’s core themes are expressed through form as well

as content: some of Ashbaugh’s etchings are overlaid with images printed in uncured toner, which inevitably smudges when the pages are turned, distorting the images beneath and facing them [Kirschenbaum 2008, xi]. More stunningly, as has often been described, Gibson’s electronic poem is encrypted and programmed to scroll automatically down the screen once before being irrevocably lost, its text disappearing after a single reading.

Agrippa is

therefore subjected, like all material objects, to the forces of decay, but here

those forces are manufactured rather than natural, causing the work to disintegrate

at an accelerated rate. Ashbaugh, in particular, took considerable delight in

anticipating how this volatility would confound librarians, archivists, and

conservators: as Gavin Edwards explains, to register the book’s copyright, Ashbaugh “would need to send two copies to the Library of Congress. To classify it, they . . . [would] have to read it, and to read it, they . . . [would have to] destroy it” [Edwards 1992]. Significantly, however, it was not the librarians who

found a workaround to the problem, but the pirates. On December 9, 1992, a group of

New York University students, including one using the pseudonym “Rosehammer” and another using “Templar,” secretly video-recorded a live public performance of Agrippa at The Americas Society, an art gallery and experimental performance space in New York City. After transcribing the poem, they uploaded it as a plain ASCII text file to MindVox, a notorious New York City bulletin board system (“the Hells Angels of Cyberspace,” according to Wikipedia), where it was readily available for download and quickly proliferated across the internet.

The story of “The Hack,” as the incident has come to be called, is recounted with the hard-boiled suspense of a detective novel by Matthew Kirschenbaum, who uncovered the details while working on his award-winning book Mechanisms: New Media and the Forensic Imagination. For Kirschenbaum, the creation of the surreptitious recording marked a fork in the road for the NYU students: “What,” he asks, “were Rosehammer and Templar to do with their bootleg video?” [my emphasis] [Kirschenbaum et al 2008].

In 1992, of course, uploading the actual footage to

the Internet would have been impossible to contemplate from the standpoint of

storage and bandwidth (let alone with available means of playback). Therefore,

just the text of Gibson’s poem was manually transcribed and posted to the MindVox

BBS as a plain-text ASCII file, which allowed it to propagate rapidly across

bulletin boards, listservs, newsgroups, and FTP sites. Today, however, the footage

would have undoubtedly been posted direct to YouTube, Google Video . . . or some

other streaming media site, eliminating the need for scribal mediation. It is

worth remarking, then, that the brilliant act of low-tech, manual, and analog

transcription through which Rosehammer and Templar accomplished their “hack”

of Agrippa (analog video copied by hand as text)

ineluctably dates Agrippa as the product of a certain

technological moment at the cusp between old and new media [Kirschenbaum et al 2008].

In the final analysis, network throughput served as the arbiter of significant

properties, forcing the hackers to attempt to distill

Agrippa down to its essence: a static 305-line

“semi-autobiographical” poem. If this austere version seems wholly incapable

of functioning as a proper surrogate for the original, then it’s worth underscoring

the role it played in bringing about the revival of

Agrippa through high-fidelity emulation of the software program.

Reflecting on the web-based “Agrippa Files” archive where

the disk image of the program now lives, Kirschenbaum explains its significance:

These materials . . . offer a

kind of closure to anyone who, like me, has ever stumbled across the text of

Agrippa on the open net and wondered, but how did

it get there? Those mechanisms are now known and documented. So we take

satisfaction in the release of these new “Agrippa

files” (as it were) to scholars and fans alike, and we marvel that after

sixteen years in the digital wild a frail trellis of electromagnetic code once

designed to disappear continues to persist and to perform. [Kirschenbaum et al 2008]

The example of Agrippa reminds us that preservation

sometimes courts moderate (and even extreme) loss as a hedge against total extinctive

loss. It also indirectly shows us the importance of distributing the burden of

preservation among the past, present, and future, with posterity enlisted as a

preservation partner. However weak the information signal we send down the conductor

of history, it may one day be amplified by a later age.

Recommendations

[39]

Memory institutions interested in establishing video game repositories need to support policy positions and offer services that are better aligned with the preservation and use practices of the game community, not only because of the potential for integrating the members of this community into the larger preservation network, but also because their attitudes and values may well influence those of professional archivists in years to come. With their deep

curatorial investment in games, players have adopted a versatile set of approaches

for collecting, managing, and providing long-term access to these cultural

artifacts. While bitstream preservation and emulation are an essential part of the

overall picture to which gamers have made enormous contributions, so too are

re-releases, remakes, demakes, ports, mods, and ROM hacks.

[40]

As a result, game archives in the wild often reflect notions of authenticity that

are different from those of memory institutions.

[41] While the dominant orientation of archivists is toward the stabilizing of

cultural records, gamers can tolerate, and indeed embrace, greater variability in

the objects with which they interact.

[42] The Mario Brothers franchise, for example, has persevered largely because

of the consumer appetite for new and altered game levels, powerups, graphics,

characters, and items. The “softer” view of authenticity that underlies these

strategies operates at what Seamus Ross would call a “lower threshold of verisimilitude” [Ross 2010].

What policy initiatives and preservation services might be adopted in response to

the needs, practices, and perspectives of players and player-archivists? We

propose the following:

Digital Preservation Services: Comparative Methods and Stemmatics

In addition to providing authenticated capture, ingest, hashing, and storage

services for archival copies of games, digital repositories might also offer

appropriate services for access copies of games in the wild. Because these copies are often modified rather than fixed representations, and in keeping with the “softer” canons of authenticity mentioned previously, repositories could provide users and player-archivists with the means to analyze, document, and measure the inter-relationships among them using similarity metrics and other approaches.
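As a minimal sketch of one such similarity metric, assuming two copies of a game are available as raw byte strings (for example, two ROM dumps), the Jaccard index over overlapping byte n-grams gives a rough score of how much content the copies share. The function names and the default n-gram length of 4 are illustrative assumptions, not features of any existing repository service.

```python
def byte_ngrams(data: bytes, n: int = 4) -> set:
    """Return the set of overlapping n-byte substrings of data."""
    return {data[i:i + n] for i in range(len(data) - n + 1)}


def jaccard_similarity(a: bytes, b: bytes, n: int = 4) -> float:
    """Jaccard index |A & B| / |A | B| of the two byte n-gram sets.

    1.0 means the n-gram sets are identical; 0.0 means they share nothing.
    """
    grams_a, grams_b = byte_ngrams(a, n), byte_ngrams(b, n)
    union = grams_a | grams_b
    if not union:
        # Both inputs are shorter than n bytes, so compare them directly.
        return 1.0 if a == b else 0.0
    return len(grams_a & grams_b) / len(union)


# Hypothetical example: a "ROM hack" that changes a single byte.
original = bytes(range(64))
hacked = bytearray(original)
hacked[10] = 0xFF
print(round(jaccard_similarity(original, bytes(hacked)), 3))  # 0.877
```

A single changed byte alters only the four n-grams that overlap it, so the score drops slightly. A purely byte-level measure like this says nothing about whether a change is cosmetic or substantive, which is one reason repositories would likely combine several metrics.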

Applying the techniques of digital stemmatics, archivists could help users

visualize and interpret these patterns in sophisticated ways. Developed in the

19th century, stemmatics codified a set of methods for analyzing the filiation

of literary manuscripts. Significantly, the tree structures representing these

relationships have parallel importance in evolutionary biology and historical

linguistics, where they are used to group genomes or languages into families;

show how they relate to one another in genealogical terms; and reconstruct lost archetypes [Kraus 2009]. Speculating on the role of digital

stemmatics (or phylogenetics, as the comparative method is called in biology)

in the context of personal digital archives, Jeremy John of the British Library

has postulated that “future

researchers will be able to create phylogenetic networks or trees from

extant personal digital archives, and to determine the likely composition of

ancestral personal archives and the ancestral state of the personal digital

objects themselves” [John et al. 2010, 134].
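The tree-building step can be sketched with UPGMA (unweighted pair-group method with arithmetic mean), one of the simplest distance-based clustering methods from phylogenetics. It is a stand-in chosen for brevity, not the method used by the researchers cited above, and it assumes roughly uniform rates of change, which real game variants need not obey. The version labels and distances in the example are invented for illustration; in practice a distance might be one minus a similarity score between two copies.

```python
def upgma(labels, matrix):
    """Build a rooted tree (nested tuples) from a symmetric distance matrix.

    labels: non-empty list of leaf names.
    matrix: list of lists, matrix[i][j] = distance between labels i and j.
    """
    trees = list(labels)
    sizes = [1] * len(labels)
    dist = [list(row) for row in matrix]  # copy so the caller's matrix is untouched

    while len(trees) > 1:
        n = len(trees)
        # Closest pair (i < j); min() keeps the first pair on ties.
        i, j = min(
            ((a, b) for a in range(n) for b in range(a + 1, n)),
            key=lambda pair: dist[pair[0]][pair[1]],
        )
        merged_tree = (trees[i], trees[j])
        merged_size = sizes[i] + sizes[j]
        # Size-weighted average distance from the new cluster to each other cluster.
        new_row = [
            (dist[i][k] * sizes[i] + dist[j][k] * sizes[j]) / merged_size
            for k in range(n)
            if k not in (i, j)
        ]
        # Delete the higher index first so the lower index stays valid.
        for idx in (j, i):
            del trees[idx]
            del sizes[idx]
            del dist[idx]
            for row in dist:
                del row[idx]
        # Append the merged cluster as the last row and column.
        for row, d in zip(dist, new_row):
            row.append(d)
        dist.append(new_row + [0.0])
        trees.append(merged_tree)
        sizes.append(merged_size)

    return trees[0]


# Hypothetical variants of one game and invented pairwise distances.
labels = ["arcade", "vcs_1982", "hack_a", "hack_b"]
matrix = [
    [0.0, 0.9, 0.7, 0.6],
    [0.9, 0.0, 0.3, 0.4],
    [0.7, 0.3, 0.0, 0.2],
    [0.6, 0.4, 0.2, 0.0],
]
print(upgma(labels, matrix))  # ('arcade', ('vcs_1982', ('hack_a', 'hack_b')))
```

The two hacks, being closest, are grouped first, then joined to the 1982 port they descend from, with the arcade original as the outgroup. That is the kind of filiation a stemma is meant to make visible.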

Stemmatic methods have already been applied to board games: Joseph Needham, a

pioneering historian of East Asian science, technology, and culture, published

a family tree of board games connecting divination, liubo, and chess through a

long line of ancestry and descent [

Needham 1962, 331]; and

biologist Alex Kraaijeveld has applied phylogenetics to variants of chess to

help determine its place of origin [

Kraaijeveld 2000, 39–50]. The methodology therefore shows great promise for the study of video and

computer games in the wild, where variability rather than fixity of

representation is often the norm.

Two other tools cited recently by Jeremy John are also relevant in this

context:

- ccHost – an open-source content management system developed under the auspices of Creative Commons that can be used to track and document how media content is used, reused, and transformed on the web. (ccMixter, the popular music site for remixing and sharing audio samples, is powered by ccHost.)[43]

- Comparator – a tool developed by Planets (Preservation and Long-term Access through Networked Services), a four-year project funded in part by the European Union. Comparator is designed to measure degrees of similarity between different versions of a digital object.[44]

Digital Preservation Services: Calculating Trust in Fan-Run Game Repositories

Because game archives in the wild cannot usually be authenticated according to

standard integrity checks, an alternative method for evaluating the

authenticity of their holdings might involve the application of trust-based

information. Jennifer Golbeck, for example, has demonstrated how the trust

relationships expressed in web-based social networks can be calculated and used

to develop end-user services, such as film recommendations and email filtering [Golbeck 2005]. Applying Golbeck’s insights, archivists could

leverage the trust values in online game communities as the basis for judgments

about the authority or utility of relevant user-run repositories, such as

abandonware sites and game catalogs. Under this scenario, authenticity is a

function of community trust in the content being provided. One consequence of

this approach is that authenticity and mutability need not be considered

mutually exclusive terms; on the contrary, fan-run game repositories that make

provisions for transformational use of game assets — such as altering the

appearance of avatars or inventory items — might in many instances increase

trust ratings.
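The idea of deriving a repository's authority from a community's ratings can be sketched as a recursive, trust-weighted average. This is a deliberately simplified illustration, not Golbeck's TidalTrust algorithm, which adds refinements such as considering only the shortest and strongest trust paths. The graph layout, the 0-to-1 rating scale, the depth limit, and every name in the example (including the repository `romsite_x`) are hypothetical.

```python
def inferred_trust(graph, source, sink, max_depth=3, visited=frozenset()):
    """Estimate how much `source` should trust `sink`, on a 0.0-1.0 scale.

    graph maps each node to a dict {neighbour: direct trust rating}.
    A direct rating from source to sink is used as-is; otherwise the result
    is the average of the neighbours' inferred trust in sink, weighted by
    source's own trust in each neighbour. Returns None if sink cannot be
    reached through at most max_depth intermediaries.
    """
    ratings = graph.get(source, {})
    if sink in ratings:
        return ratings[sink]
    if max_depth == 0:
        return None
    visited = visited | {source}  # avoid walking around cycles
    weighted_sum = total_weight = 0.0
    for neighbour, weight in ratings.items():
        if neighbour in visited:
            continue
        trust = inferred_trust(graph, neighbour, sink, max_depth - 1, visited)
        if trust is not None:
            weighted_sum += weight * trust
            total_weight += weight
    return weighted_sum / total_weight if total_weight > 0 else None


# Hypothetical community: an archivist trusts two players, who rate a fan site.
community = {
    "archivist": {"alice": 0.9, "bob": 0.4},
    "alice": {"romsite_x": 0.8},
    "bob": {"romsite_x": 0.2},
}
print(round(inferred_trust(community, "archivist", "romsite_x"), 3))  # 0.615
```

Because the archivist trusts Alice more than Bob, Alice's favorable rating of the site counts for more than Bob's skeptical one, yielding a moderate inferred score.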

Coda

In her book

Reality Is Broken: Why Games Make Us Better and How They Can Change the World, veteran game designer Jane McGonigal argues that far from “jeopardizing our future,” as author Mark Bauerlein would have it [Bauerlein 2009], video and massively multiplayer online games have

the potential to help us solve some of the world’s most pressing challenges, from

energy crises to climate change to global hunger. “Game design isn’t just technological craft,”

she says, “it’s a

twenty-first-century way” of collaborating, thinking, problem-solving,

and changing attitudes and behavior [McGonigal 2011, 13]. As a

proof of concept, McGonigal has spent the last several years developing and

implementing a number of highly influential Alternate Reality Games – including

World Without Oil, , and

Evoke – whose collective purpose is to arrive at “creative solutions to our most urgent social

problems”

“through planetary-scale

collaboration”

[45]. Although the games have spawned a gripping counterfactual record of convincing scenarios of, and antidotes to, near-future epidemics and calamities, “none of them,” McGonigal has remarked, referring to the entire universe of MMO and video games, not just her own, “have saved the real world yet” [McGonigal 2010].

Or have they?

Vincent Joguin, an expert in computer emulation, was an avid gamer and demoscene

programmer in the 1990s, producing so-called “intros” to

cracked games, organizing demoscene parties, and later helping to develop a number

of widely used emulators for classic home computer and console systems, including

the Atari ST.

[46] In 2009, Joguin and his collaborators secured external funding for an

ambitious project to create emulation-based preservation services and technologies

in partnership with a number of renowned European institutions and the already

extant PLANETS initiative.

[47] One component of the system is Dioscuri, an emulator that is modular in

design: “modularity” in this context means that a range of standard hardware

components have each been individually emulated, creating a collection of

mini-emulators that in theory can be combined and extended in myriad ways to mimic

almost any imaginable computer architecture. Joguin’s signature contribution,

however, is a virtual computer small and simple enough to be continuously ported

to new platforms. One virtue of Olonys, as the system is called, is that it in

effect liberates emulators such as Dioscuri from hardware-specific environments,

allowing them to execute on any computer. It thus addresses one of the great

paradoxes of preservation through emulation: the need to create meta-emulators to

run already existing emulators, whose host systems will themselves expire in

time.
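Why a deliberately small virtual computer helps can be sketched with a toy example. The hypothetical five-instruction stack machine below is not Olonys, whose instruction set is not described here; it only illustrates the general principle that software written once for a tiny, fixed virtual machine keeps running wherever someone re-implements its short interpreter loop, so moving to a new host means porting a few dozen lines rather than every program.

```python
# Hypothetical opcodes for a toy stack machine (illustration only).
PUSH, ADD, MUL, PRINT, HALT = range(5)


def run(program):
    """Interpret a flat list of opcodes and operands; return printed values."""
    stack, output, pc = [], [], 0
    while True:
        op = program[pc]
        pc += 1
        if op == PUSH:  # the next cell holds a literal operand
            stack.append(program[pc])
            pc += 1
        elif op == ADD:
            right, left = stack.pop(), stack.pop()
            stack.append(left + right)
        elif op == MUL:
            right, left = stack.pop(), stack.pop()
            stack.append(left * right)
        elif op == PRINT:
            output.append(stack.pop())
        elif op == HALT:
            return output
        else:
            raise ValueError(f"unknown opcode {op} at position {pc - 1}")


# (2 + 3) * 7, written once for the virtual machine rather than for any host.
program = [PUSH, 2, PUSH, 3, ADD, PUSH, 7, MUL, PRINT, HALT]
print(run(program))  # [35]
```

Porting this machine to a new platform would mean re-implementing only `run`; the `program` list, standing in for a preserved emulator, would not change.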

[48]

In its broad strokes, the trajectory of Joguin’s career – from video game

enthusiast to programmer hobbyist to dedicated digital preservationist – is

recapitulated in the trajectories of countless players, who over the

last two decades or more have been modeling community-driven practices and

solutions to collecting, documenting, accessing, and rendering video games,

arguably the most culturally resonant digital artifacts of our time. As we have

seen, there is no shortage of preservation challenges with which to contend: bit rot; the scourge of technological obsolescence; the parasitic reliance of software programs on code libraries supplied by the operating system and other software packages; and the growing number of digital collections sitting on “shifting foundations of silicon, rust, and plastic,” as one writer has ominously expressed it [Bergeron 2002]. Complex interactive computer games represent the

limit case of what we can do with digital preservation. If we can

figure out how to keep a classic first-person shooter alive, we’ll have a better

idea of how to preserve computational simulations of genetic evolution, climate

change, or the galactic behavior of star systems. Just as importantly, we’ll also

have the technical and social knowledge to maintain the billions of quotidian

electronic records — emails, memos, still images, sound files, videos, e-books,

instant messages — that constitute the collective memory of our civilization.

To speak of the contribution of gamers exclusively in the future tense, however,

is to fail to do justice to the scope and ingenuity of their accomplishments thus

far, as demonstrated in this paper. “The

internet is populated with legions of amateur digital archivists,

archaeologists, and resurrectionists,” wrote Stewart Brand of the Long

Now Foundation more than a decade ago [Brand 1999, 91]. He went

on to elaborate:

They track down the

original code for lost treasures such as Space

Invaders (1978), Pac-Man (1980), and

Frogger (1981), and they collaborate on

devising emulation software that lets the primordial programs play on

contemporary machines. The . . . techniques pioneered by such vernacular

programmers are at present the most promising path to a long-term platform

migration solution . . . such massively distributed research can convene

enormous power. (emphasis added) [Brand 1999, 91].

It is no accident that this tribute to the heroic efforts of the player community

comes at the conclusion of a chapter entitled “Ending the

Digital Dark Ages.” For Brand, video games serve as a skeleton key for

unlocking the complexities of digital preservation, empowering us to prevent an

epochal “digital black hole.” The import of his message is clear: for love of

space invaders and bonus fruits, of rescuing the girl and fighting and defeating

the boss; for love of treasure, power-ups, and sprites; for love of all these

things, players have been inspired to save our video game inheritance. But in the

process of salvaging these fragile 8-bit worlds, they have also helped save some

of the real world. Like the technology trees found in popular strategy games,

where the acquisition of one technology is dependent on that of another, the

solution to any of the superthreats conjured by McGonigal, from pandemics to fuel

shortages to mass exile, is predicated on methods for reliably transmitting

legacies of knowledge from one generation to the next. When those legacies are

encoded as bitstreams, it is video game players who have been in the vanguard of figuring out how to transmit them, bolstering McGonigal’s assertion that gamers are a precious “human resource that we can use to do ‘real-world’ work” [McGonigal 2010].

“Games can save the world,” Jane McGonigal

emphatically tells us.

Jane, they already have.