Abstract

The aim of PO.EX: A Digital Archive of Portuguese Experimental Literature (http://po-ex.net/) is to

represent the intermedia and performative textuality of a large corpus of

experimental works and practices in an electronic database, including some early

instances of digital literature. This article describes the multimodal editing of

experimental works in terms of a hypertext rationale, and then demonstrates the

performative nature of the remediation, emulation, and recreation involved in digital

transcoding and archiving. Preservation, classification, and networked distribution

of artifacts are discussed as representational problems within the current

algorithmic and database aesthetics in knowledge production.

1. Digital Editing for Experimental Texts

The performative dimension of experimental literature challenges our archival

practices in ways that draw attention to the performative nature of digital archiving

itself.

[1] Decisions about standards for digital encoding, metadata fields, database

model, and querying methods impose their particular ontologies and structures on

collections of heterogeneous objects derived from historical practices that emphasized

the eventive nature of signification as an interactive process of production. Instead

of a transparent remediation of an original autograph object in its digital surrogate

– a visual effect of the digital facsimile experience that is frequent in scholarly

archives – multiplicity of media and versions, as well as the programmatic focus on

live performance as poetic action, call for a self-conscious engagement with the

differentials of inscription technologies and, ultimately, with the strangeness of

digital codes as an expression of the performativity of the archive. The PO.EX

project provided both a context for this heightened awareness of digital archiving as

an act of transcoding, and an environment for experimenting with forms of archiving

that attempted to respond to that self-consciousness about archival intervention.

This article contains an overview of the project and offers an account of how the

PO.EX Archive has wrestled with the difficult theoretical questions raised by

the progress of our research.

The name “PO.EX” contains the first syllables of the words

“POesia EXperimental” and it has been used as a general

acronym for those and subsequent experimental practices since the first retrospective

exhibition of the movement, which was held at the National Gallery of Art, Lisbon, in

1980. A second major retrospective was organized by the Serralves Museum of

Contemporary Art, Porto, in 1999.

[2] The PO.EX project

involved a multidisciplinary team of 13 researchers, with expertise in literary

studies, communication and information sciences, contemporary art, and computer

science. At various stages, the project also benefited from the collaboration of 6

research assistants. A significant part of the work consisted of locating sources,

digitizing, and classifying them. The PO.EX Digital Archive holds c. 5000 items in multiple formats (text, image, audio, and video files) and is scheduled for online publication at the end of 2013.

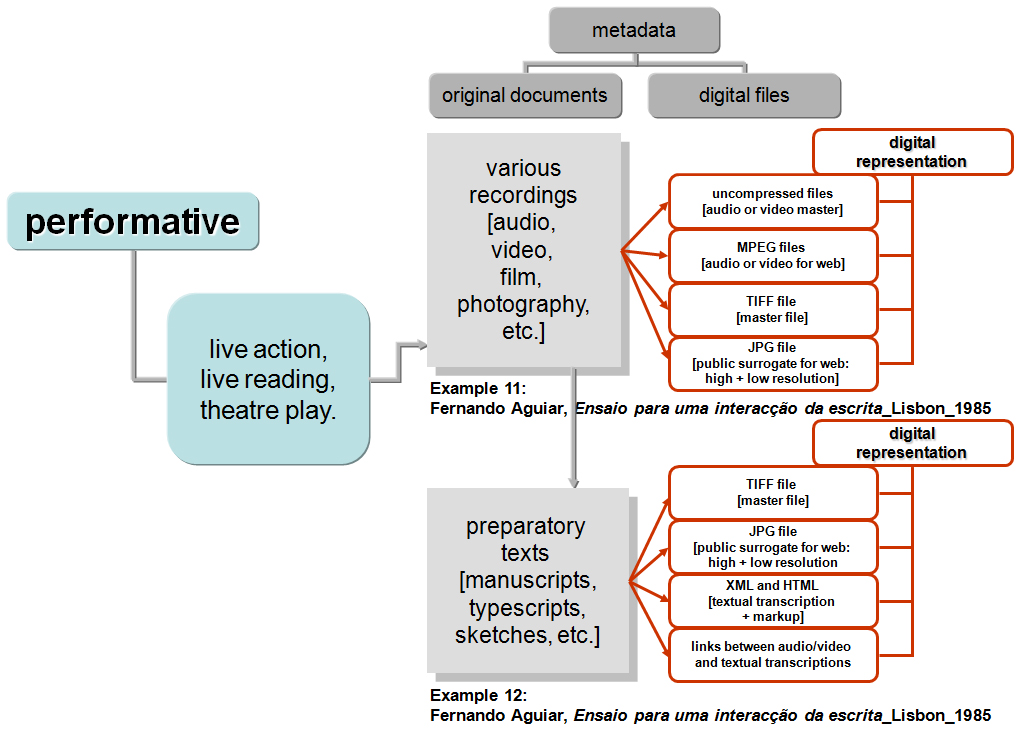

[3] The recently finished second stage of the project (PO.EX ’70-80, under development 2010-2013) digitized works from the 1970s and 1980s. It was preceded by an earlier project (PO.EX ’60, under development 2005-2008) that digitized works from the 1960s, namely the magazines and

exhibition catalogues of the Experimental Poetry movement. The result of the first

stage was published in 2008 both as a web site and a CD-ROM. In its present

instantiation PO.EX will be published as an open repository using DSpace.

Portuguese experimental literature of the 1960s, 1970s, and 1980s includes visual

poetry, sound poetry, video-poetry, performance poetry, computer poetry, and several

other forms of experimental writing. Experimental literary objects, practices, and

events often consist of an interaction between notational forms on paper (or other

forms of media inscription) and site-specific live performances. In experimental

practices, the eventuality of literary meaning is dramatically foregrounded by

turning the text into a script for an act whose performance co-constitutes the work.

Such performative practices are poorly documented and yet they may exist in several

media and in multiple versions. Multiplication of versions across media is another

aspect of the laboratorial dimension of experimental aesthetics: a visual poem may

have a version for gallery exhibition, and another for a book collection; its live

reading may have been sound recorded or it may have become a script for a film or

computer animation. Fragility and ephemerality of materials also characterize works

that may have been produced in very small editions or in a single exhibition version.

The aim of PO.EX: A Digital Archive of Portuguese Experimental Literature is to represent this intermedia and procedural textuality in a

relational database and to explore the research and communicative potential of this

new archival space.

The multimedia affordances of hypermedia textuality seem particularly adequate for

representing those intermedia and performative dimensions of literary practices, and

also for preserving the ephemeral nature of inscriptional traces that have taken

multiple forms, including paper and book formats, video and sound recordings, live

performances and public installations, computer codes and screen displays. The

aggregation, structuring, and marking up of digital surrogates of this large

multimodal corpus have interpretative implications that challenge our representations

of experimental works and practices, and our database imagination for digital

possibilities. Whether taking the form of facsimiles of books, photographs of

installations, sound recordings of readings, videos of performances, or emulations of

early digital poems, digital remediation re-performs the works for the current

techno-social context. Editing intermedia texts for a digital environment forces us

to address a number of specific questions related to documents, methods, contexts,

and uses.

The materials included in the PO.EX Digital Archive are, in many ways, similar to those we may find in archives such as UbuWeb, particularly in its early versions, which were focused on visual, concrete, and sound poetry. [4] UbuWeb, however, has grown as an open and decentered

hypertext without explicit editing principles or scholarly methodology that would

control the metadata about original sources and their digital remediations. Its

emphasis falls on collaborative non-institutional construction and on a rationale of

open access that uses the networked reproduction and distribution of files to provide

global access to digital versions of rare materials in multiple media (print, sound,

video, film, radio) that often exist only in limited copies or in inaccessible

archives. Digital republication, by itself, contributes to a redefinition of the

cultural and social history of creative practices, since many of these

experimentations and communities of practice were generally absent from institutional

print-based narratives of artistic and literary invention.

UbuWeb’s inclusive approach has created a vast repository of art

practices, media, genres, and periods. This approach has produced a new context for

understanding the nature and history of experimental forms in English (and, to a much

lesser extent, in a few other languages and cultures) simply as a result of their

hypertextual contiguity and networked availability. A similar effect may result from

the PO.EX project: the mere aggregation of dispersed works and textual witnesses in

various media – some of which were unaware of each other and entirely absent from

mainstream accounts of contemporary literature and contemporary art – will produce a

new perception of Portuguese experimental literature both for inside and outside

observers. The following section briefly sketches some of the questions raised by the

PO.EX Digital Archive, and describes its infrastructure and content.

2. Material and Textual Dynamics in Multimodal Remediation

Given that a significant part of the materials are rare, and in some cases exist only

in a single instance, one of the declared aims of the PO.EX project is to collect and

preserve our artistic and cultural heritage through digital remediation. This is

especially true of (1) visual texts, collages and other ephemeral works of which

there is only the original object, (2) sound and video recordings that have never

been published or distributed before, and (3) computer works that cannot be run in

current digital environments (see Torres 2010). A survey of published and unpublished

materials housed in public and private collections, and sometimes in the writers’

archives, revealed the existence of a large body of work in multiple media.

[5] Three features are

common to PO.EX authors: openness to experimentation in different technological media

(such as printing techniques, audiotape, film, video, and computers); willingness to

participate in the public sphere and engage in social and political debates

(producing works for television, for instance, or works of public art); and a general

inter-art sensibility that places verbal language in an intermedia tension with

visual art, video art, installation, performance, theatre, and music. Representing

the polymedia and polytextual dynamics of these works – within a network that

includes, in some cases, preparatory documents and multiple versions, and also

authorial and non-authorial critical texts – became the main theoretical and

technical challenge of the project.

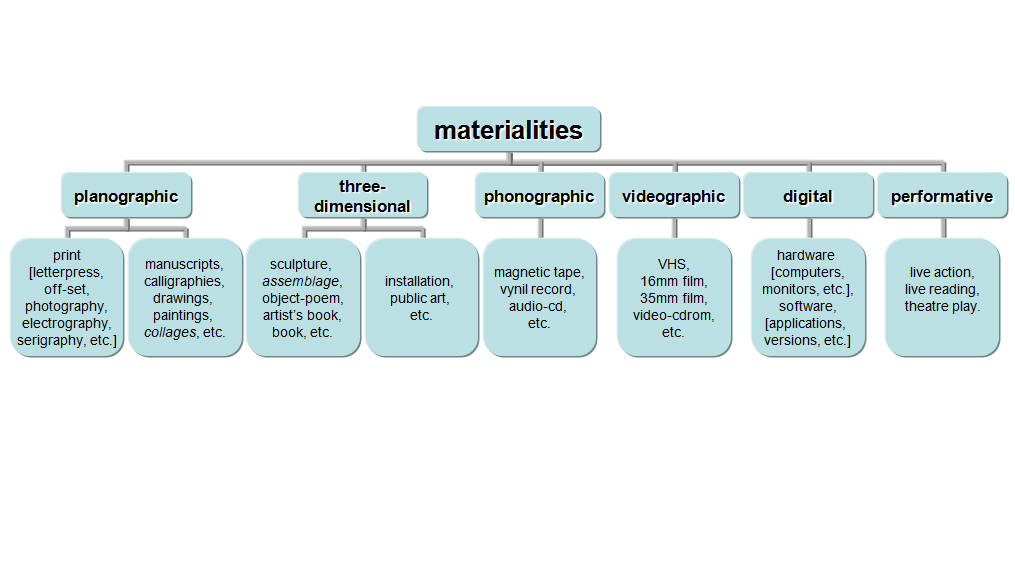

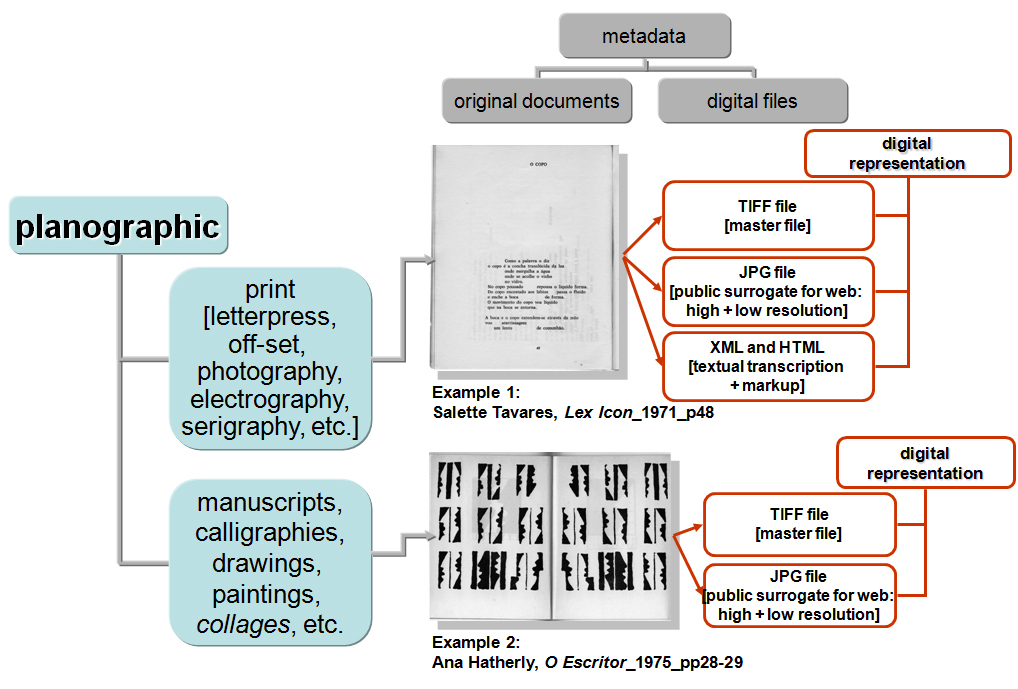

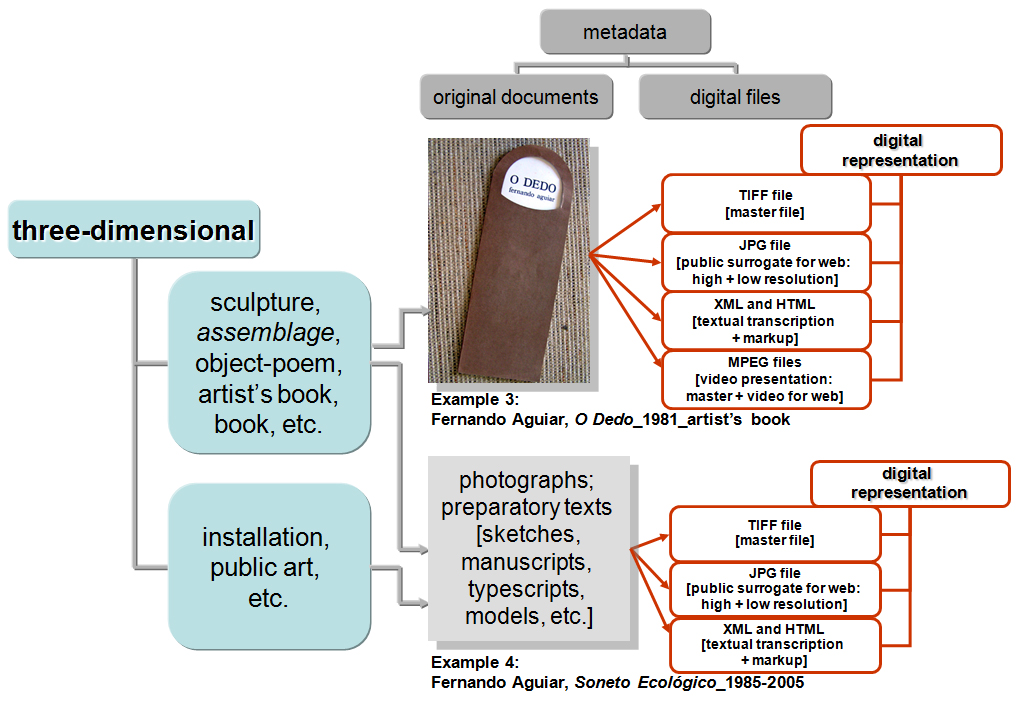

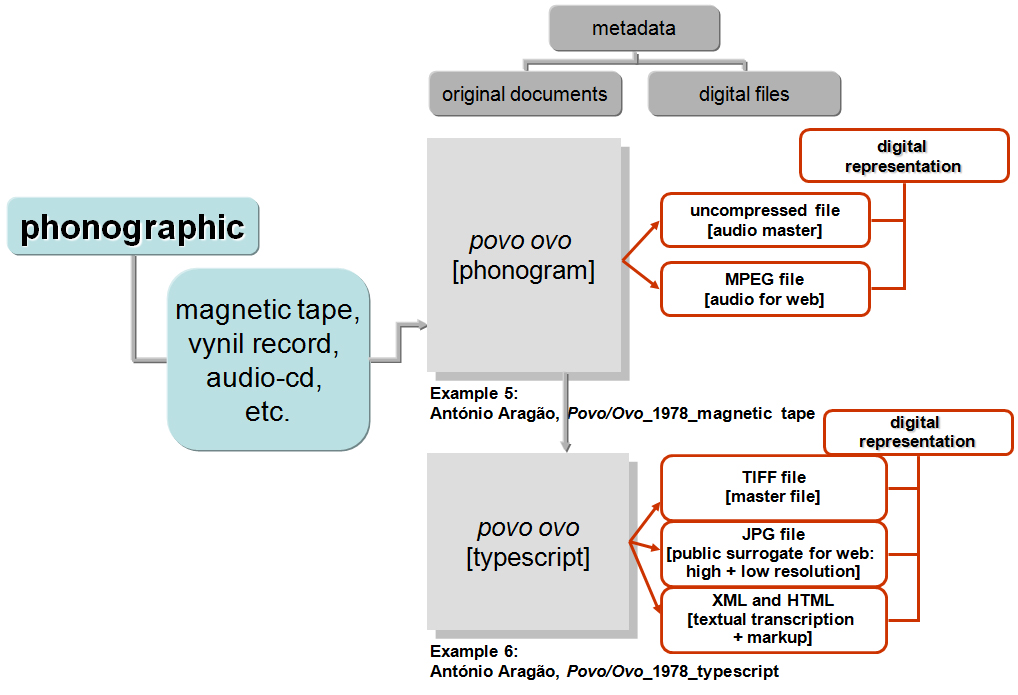

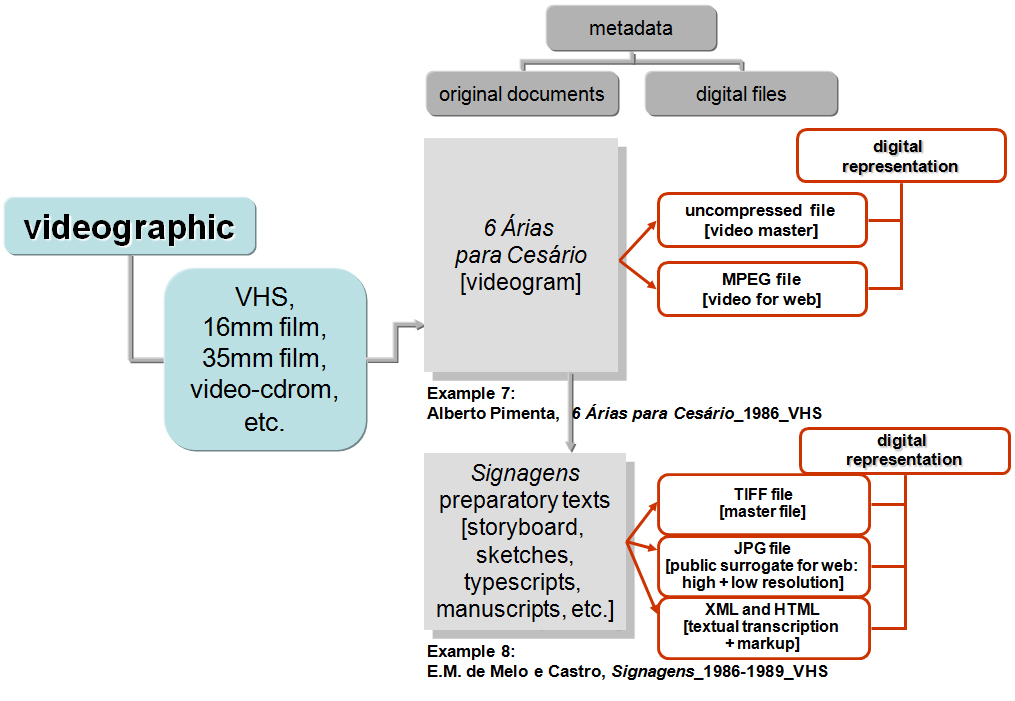

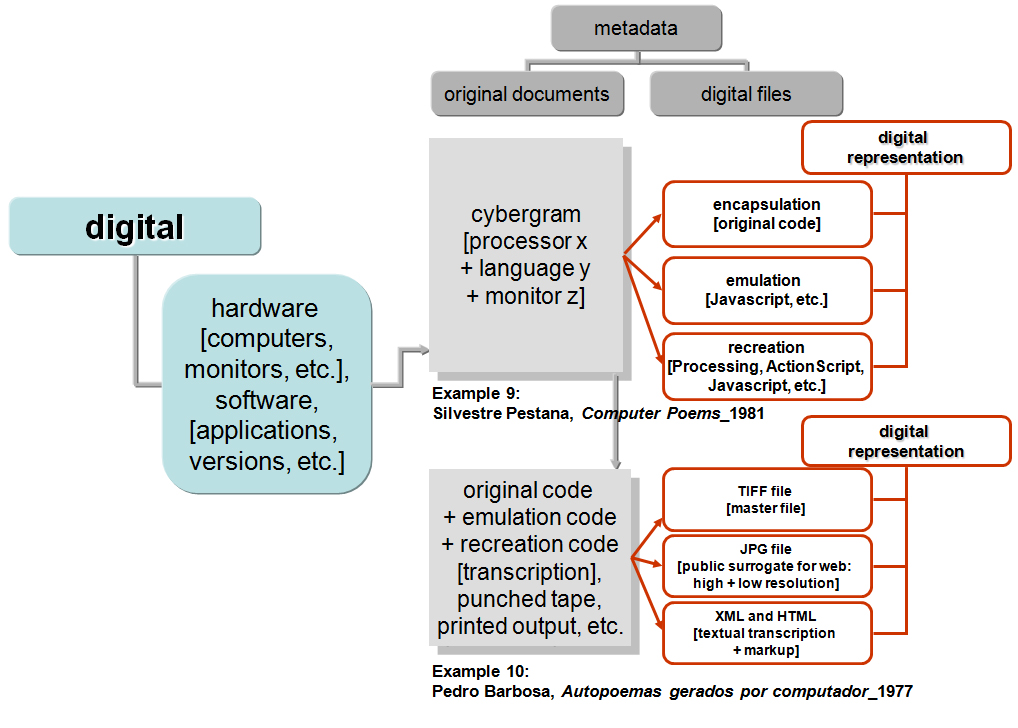

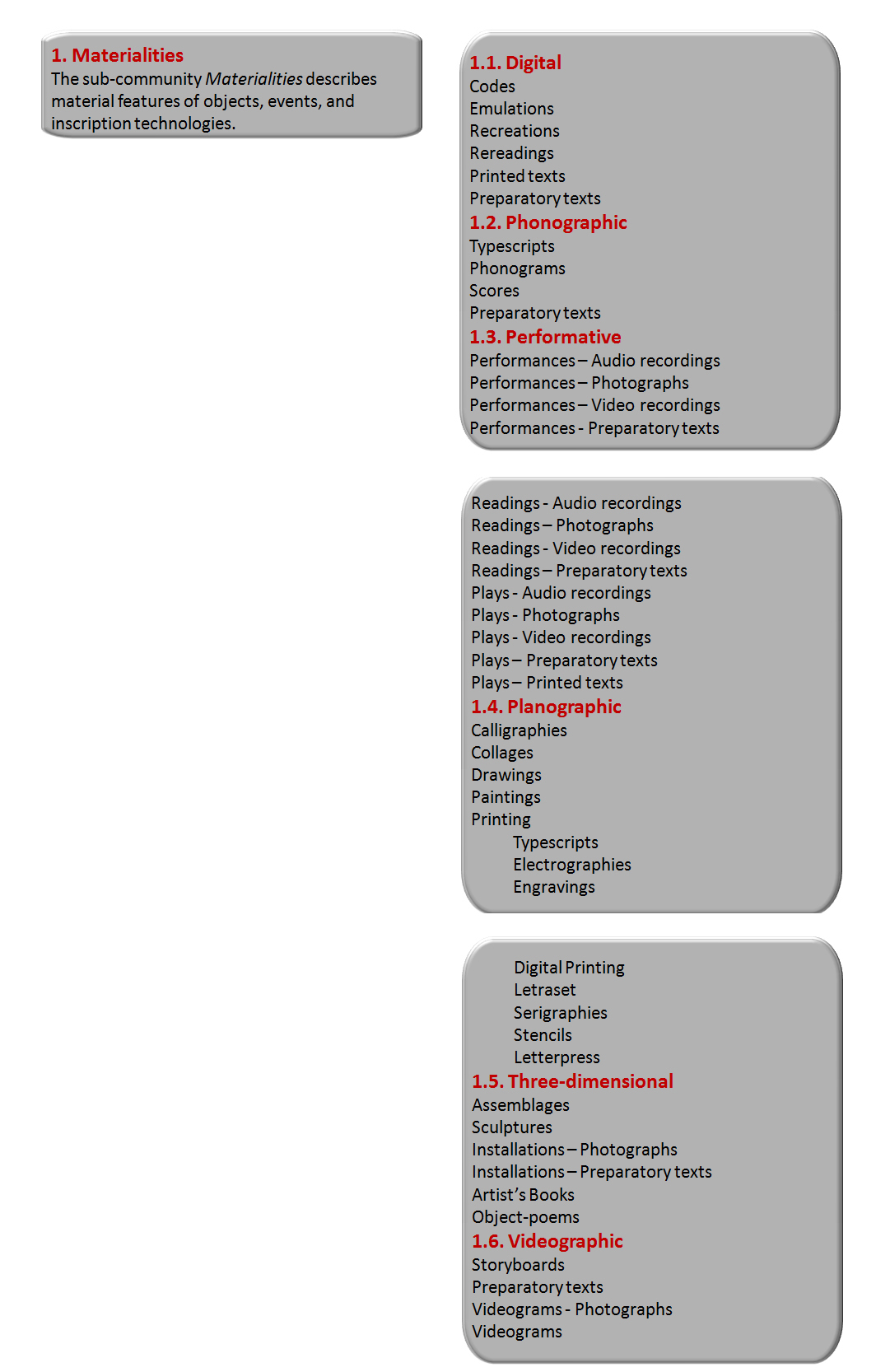

Our analysis and description of the collected materials recognized six types of

materialities that were to be digitally represented in the archive: planographic,

three-dimensional, phonographic, videographic, digital, and performative [see Figure 1]. Materiality, a category that subsumes the

artistic medium and production techniques of the originals, was also conceived in

terms of a differential relation to the code-based medium of digital reinscription

with its screen-based interface for perceptual experience. Thus the mode of original

technical inscription and of its particular perceptual experience, rather than the

artistic discipline or genre, became the basis for categorizing the work’s

materiality. For each item a digital surrogate would be generated through specific

encoding procedures and formats, and its metadata would contain fields describing the

original objects as well as fields describing the surrogates. The following diagrams

explain the remediating dynamics for different kinds of objects: printed pages of

text, including visual poems [see Figure 2], artists’ books and public art [see Figure 3], audiotaped sound poems [see Figure 4], video works [see Figure 5], early computer works [see Figure 6], and performance-based practices [see Figure 7]. Two examples are given for each type of remediation.

[6]
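The split between fields describing the original object and fields describing its digital surrogates can be pictured with a minimal sketch. It is not the archive's actual schema: the class names, field names, and file name below are hypothetical, and classifying "Soneto Ecológico" as three-dimensional is an assumption made only for illustration. Only the six materiality categories come from the description above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Materiality(Enum):
    # The six materialities named in the text.
    PLANOGRAPHIC = "planographic"
    THREE_DIMENSIONAL = "three-dimensional"
    PHONOGRAPHIC = "phonographic"
    VIDEOGRAPHIC = "videographic"
    DIGITAL = "digital"
    PERFORMATIVE = "performative"


@dataclass
class SourceObject:
    # Hypothetical fields describing the original object.
    title: str
    author: str
    year: int
    materiality: Materiality


@dataclass
class Surrogate:
    # Hypothetical fields describing one digital surrogate.
    file_name: str
    file_format: str


@dataclass
class ArchiveItem:
    # One record keeps both descriptions side by side.
    source: SourceObject
    surrogates: List[Surrogate] = field(default_factory=list)


# Illustrative record; the materiality assignment is an assumption.
item = ArchiveItem(
    source=SourceObject(
        title="Soneto Ecológico",
        author="Fernando Aguiar",
        year=1985,
        materiality=Materiality.THREE_DIMENSIONAL,
    )
)
item.surrogates.append(Surrogate("model-photo-01.jpg", "image/jpeg"))
```

Keeping the source description and the list of surrogates in separate structures reflects the point that categorization follows the mode of original inscription, while each surrogate carries its own encoding details.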

The multiple material and textual mediations in a digital archive of multimodal works

can be illustrated with “Soneto Ecológico” [“Ecological Sonnet”] (1985, 2005), by Fernando Aguiar. This

work is a public park in which seventy trees of ten different species (autochthonous

from Western Iberia) have been planted according to line and rhyme patterns of

Portuguese classical sonnets (14 lines, abab cdcd efe fef). The work was originally

created as a three-dimensional model and blueprint for the park, and was exhibited

several times in this form. Twenty years after its first presentation, the projected

park was finally created in the town of Matosinhos [see Figure 8]. Digital representation of this work in the archive is achieved

by means of a network of files: photographs of the original 3D model, digitized

images of textual descriptions, plans and diagrams, as well as photographs and videos

of the actual park. These are further complemented with an interview of the author,

and several reception documents. The archive will thus generate three contexts for

reading this work: the context of its own textual and material history; the context

of parodies of the sonnet form in the PO.EX movement; and the context of ecological

awareness in national policies for forestation and protection of local tree

species.

3. Problems of Remediation and Theoretical Frameworks

Remediation of this multimodal corpus raises two clusters of theoretical and

technical problems. The first cluster of problems originates at the level of the source materials for the archive: it involves selecting the documentary evidence (in multiple media) that will come to represent a given body of

works and, in some cases, live performances of works. Selected works and documents

can be transcoded according to several technical protocols. Digital representation of

the source objects generally follows a hypertext rationale, as established and

formalized by scholarly electronic editions of literary and artistic works published

since the mid-1990s. This means producing digital facsimiles and transcriptions,

marking up variations and versions, and creating elaborate metadata both about the

digital surrogates and their source objects. The second cluster of problems derives

from issues posed by the new archival medium. We need to work with a model of

electronic space that takes full advantage of its processing, aggregative, and

collaborative functionalities as a new space for using the archived materials in new

contexts, including teaching, research, and other creative practices. In this

section, I discuss issues of remediation related to print, audio, and born-digital

artifacts, and I place the archive within theoretical frameworks useful for

thinking about editorial questions raised by the multimodal and experimental nature

of its content.

3.1. Remediating Print: The Hypertext Rationale as a Model for E-Space

The first problem concerns the re/presentation of texts and books in ways that

embody current principles for electronic textual editing [Schreibman 2002]; [Renear 2004]; [Vanhoutte 2004]; [Siemens 2005]; [Burnard 2006]; [Deegan 2009]. Major scholarly

electronic textual editing projects of the late 1990s and early 2000s have adopted

Jerome McGann’s “hypertext rationale” (2001[1996]). McGann

conceived of hypertext as a metacritical tool that would allow editors to move

beyond the codex rationale, liberating texts from their hierarchical confinement

in the inscriptional space of the book. Once texts were remediated in digital

form, bibliographic codes could be more thoroughly apprehended and investigated,

while the genetic and social dynamics of textual production could be marked and

digitally represented as a network of historically situated inscriptions:

The exigencies of the book form forced editorial scholars to

develop fixed points of relation – the “definitive text”,

“copy text”, “ideal text”, “Ur

text”, “standard text”, and so forth – in order to

conduct a book-bound navigation (by coded forms) through large bodies of

documentary materials. Such fixed points no longer have to govern the ordering of

the documents. As with the nodes on the Internet, every documentary moment in the

hypertext is absolute with respect to the archive as a whole, or with respect to

any subarchive that may have been (arbitrarily) defined within the archive. In

this sense, computerized environments have established the new Rationale of HyperText. [McGann 2001, 73, 74]

Every material instantiation is a new textual instantiation that can be

represented as an item in a database of electronic files. Peter L. Shillingsburg

sums up this view of the material incommensurability of each textual instantiation

through the concept of “script acts” as networks of genetic and

social documents: “By script acts I do not mean just those acts involved in

writing or creating scripts; I mean every sort of act conducted in relation

to written and printed texts, including every act of reproduction and every

act of reading.”

[Shillingsburg 2006, 40]. Once encoded and marked up as electronic texts, past textual iterations

are reiterated as machine-processable forms. The “hypertext

rationale” implies digitizing and structuring materials in ways that

give a meta-representational function to the process of de-centering and

re-constellating their textual modularities in digital formats. Texts are not just

pluralized in their various authorial and editorial forms but they are also

re-networked within large ensembles of production and reception documents.

Hypertext became a research tool for understanding the multidimensional dynamics

of text, and for testing social editing as a theory of textuality. In the electronic

medium, the scholarly edition is reconfigured as an archive that attempts to make

explicit the editorial frames that have produced each textual instance in its past

bibliographic materiality. Self-awareness of textual transmission and intertextual

dependence, coupled with a hypertext rationale for electronic editing, resulted in a general movement away from the discrete book-like digital edition to a radial and fragmented all-inclusive archive.

This hypertext rationale for electronic editing of print (and manuscript) works

has been tested and embodied in several literary archives. That is the case, for

example, of text-and-image digital archives such as the Rossetti Archive (1993-2008), The William Blake Archive (1996-present), Radical Scatters: Emily Dickinson's Late Fragments and Related Texts, 1870-1886 (1999), Artists’ Books Online (2006) and, more recently, the Samuel Beckett Digital Manuscript Project (2011-present). All of them can be described as experiments in critical

editing and metatextual representation that use the aggregative and simulative

affordances of the medium for a heightened perception of the materiality of

inscriptions, and for an open production of context as a reconfigurable network of

textual relations. This networked reframing of textual production and reception

through hypertextual remediation is often expressed in terms of documentary

inclusiveness and descriptive exhaustiveness:

The Rossetti Archive aims to include high-quality digital images

of every surviving documentary state of DGR's works: all the manuscripts, proofs,

and original editions, as well as the drawings, paintings, and designs of various

kinds, including his collaborative photographic and craft works. These primary

materials are transacted with a substantial body of editorial commentary, notes,

and glosses.[7] (Rossetti Archive, 2008)

The core of ABsOnline is the presentation of artists' books in

digital format. Books are represented by descriptive information, or metadata,

that follows a three-level structure taken from the field of bibliographical

studies: work, edition, and object. An additional level, images, provides for

display of the work from cover to cover in a complete series of page images (when

available), or representative images.[8] (Artists Books Online, 2006)
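The three-level structure described in this passage (work, edition, object), with images as an additional level, can be pictured as a nested data structure. The following is only a sketch of that hierarchy, not ABsOnline's actual data model; all class names, field names, and sample values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BookObject:
    # A single physical copy, with its page images in reading order.
    label: str
    images: List[str] = field(default_factory=list)


@dataclass
class Edition:
    label: str
    objects: List[BookObject] = field(default_factory=list)


@dataclass
class Work:
    title: str
    editions: List[Edition] = field(default_factory=list)


def all_images(work: Work) -> List[str]:
    """Collect page images across every edition and object of a work."""
    return [
        image
        for edition in work.editions
        for obj in edition.objects
        for image in obj.images
    ]


# Hypothetical example: one work, one edition, one copy, two page images.
work = Work(
    title="Example Artist's Book",
    editions=[
        Edition(
            label="first edition",
            objects=[BookObject("copy 1", ["cover.jpg", "page-01.jpg"])],
        )
    ],
)
```

Here `all_images(work)` walks the hierarchy from work down to object, which is one way of supporting a cover-to-cover display when a complete series of page images is available.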

The representation of texts and images as a networked, aggregated and socialized

archive is also a way of testing a theoretical approach by exploring specific

features of the electronic writing and reading space. Johanna Drucker has

eloquently argued for the importance of modeling the functionality of e-space in

ways that reflect a thorough understanding of the dynamics of book structures, but

also in ways that go beyond the structures of the codex and take full advantage of

programmable networked media. She highlights the following affordances of digital

materiality: continuous reconfiguration of digital artifacts at the level of code,

the capacity to mark those reconfigurations, the aggregation of documents and data

in integrated environments, and the creation of spaces for collaboration and

intersubjective exchange [Drucker 2009, 173]. Designing a

digital archive depends on the best possible articulation between re/presenting

and remediating the materials and inscribing that remediated representation in the

specifics of the database ontology and algorithmic functionalities of networked

digital materiality [see Figure 9].

3.2. Remediating Audio: Close Listening Texts in E-Space

A second re/presentation problem in the PO.EX Digital Archive derives from the centrality of multimodal forms of

communication for experimental literary practices. Audio, film, video and other

media technologies were creatively explored in various institutional and

non-institutional settings. Verbal and written experimentation extended to a

programmatic exploration of the expressive potential of sound and video recording

or computer processing, for example. These multimedia textualities, which

challenged the codex- and print-centric hegemony of mainstream literary forms,

also raise specific textual problems when it comes to editing. Charles Bernstein’s apologia for poetry’s audiotextuality [Bernstein 1998], which later became a justification for the PennSound archive, is particularly useful in this connection. Bernstein stresses

the historical and poetical value of the recorded human voice reading poetry.

Sound recordings are seen as evidence of the plural existence of literary works.

Each recorded reading is a unique textual instantiation of the work:

The poem, viewed in terms of its multiple performances, or

mutual intertranslatability, has a fundamentally plural existence. This is

most dramatically enunciated when instances of the work are contradictory or

incommensurable, but it is also the case when versions are commensurate. To

speak of the poem in performance is, then, to overthrow the idea of the poem as

a fixed, stable, finite linguistic object; it is to deny the poem its

self-presence and its unity. Thus, while performance emphasizes the material

presence of the poem, and of the performer, it at the same time denies the

unitary presence of the poem, which is to say its metaphysical unity.

[Bernstein 1998, 9]

He also highlights the fact that audiotexts are yet another instance of the

multiple textuality of the poem: “The audio text may be one more generally discounted

destabilizing textual element, an element that undermines our ability to fix

and present any single definitive, or even stable, text of the poem.

Grammaphony is not an alternative to textuality but rather throws us deeper

into its folds.”

[Bernstein 2006, 281]. The availability of sound recording thus creates a historical situation in

which the written text can cease to be identified as the primary literary

instance: “A widely available digital audio poetry archive will have

a pronounced influence on the production of new poetry. In the coming

digital present, it becomes possible to imagine poets preparing and

releasing poems that exist only as sound files, with no written text, or for

which a written text is secondary.”

[Bernstein 2006, 284]

His close listening rationale, as expressed in the PennSound Manifesto (2003), for instance, suggests a combination of bibliographic

principles with the modular and social affordances of the new medium. In this

sense, it parallels McGann’s hypertext rationale as a programmatic intervention

for placing written textual instances in a radiant dynamics that is independent

from their bibliographic origins. Bernstein envisions audio textualities as a

series of discrete, single, and technically standardized files that can be

appropriated and combined by listeners in terms of the modularity of the digital

file and not in terms of their pre-digital media source:

- It must be free and downloadable.

- It must be MP3 or better.

- It must be singles.

- It must be named.

- It must embed bibliographic information in the file.

- It must be indexed.

[Bernstein 2003]: “PennSound Manifesto”
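The six requirements in the list above read almost like an acceptance checklist for audio files, and a short sketch can make that concrete. It is an illustration only, not PennSound's actual tooling: the record fields are hypothetical, the set of formats counted as "MP3 or better" is an assumption, and "named" is approximated here as a non-empty file name.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Assumption: which formats count as "MP3 or better".
ACCEPTED_FORMATS = {"mp3", "flac", "wav"}


@dataclass
class AudioFile:
    # Hypothetical description of one audio file in an archive.
    file_name: str
    file_format: str
    free_download: bool
    is_single: bool  # one poem per file, not a whole reading
    embedded_metadata: Dict[str, str] = field(default_factory=dict)
    indexed: bool = False


def unmet_requirements(audio: AudioFile) -> List[str]:
    """Return the manifesto requirements that a file record fails."""
    problems = []
    if not audio.free_download:
        problems.append("free and downloadable")
    if audio.file_format.lower() not in ACCEPTED_FORMATS:
        problems.append("MP3 or better")
    if not audio.is_single:
        problems.append("singles")
    if not audio.file_name.strip():
        problems.append("named")
    if not audio.embedded_metadata:
        problems.append("embedded bibliographic information")
    if not audio.indexed:
        problems.append("indexed")
    return problems


# Hypothetical records for illustration.
good = AudioFile(
    file_name="author_poem-title_1985.mp3",
    file_format="mp3",
    free_download=True,
    is_single=True,
    embedded_metadata={"title": "Poem Title", "artist": "Author"},
    indexed=True,
)
bad = AudioFile(
    file_name="",
    file_format="ra",
    free_download=True,
    is_single=False,
)
```

In this sketch `unmet_requirements(good)` returns an empty list, while the second record fails every requirement except free download. The point is that each requirement concerns the digital file itself, independent of the medium the recording came from.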

A de-centered downloadable audiotext archive would encourage a renewed aural

perception of poetry, which could then be released from its grammacentric frame of

perception. Bernstein wants the sound archive to foster live encounters with

audiotexts perceived as literary experiences and not merely as a collection of

historical documents. Networked distribution of artistic and cultural forms has

transformative implications for our politics of cultural transmission.

The PO.EX Digital Archive includes a series of sound and video recorded readings that highlight the presence of audiotextuality and performativity as an intrinsic layer of textual practices [see Figure 10]. These readings were commissioned specifically for inclusion

in the archive, and they are part of a critical intervention in the presentation

of the materials that explores remediation as an opportunity for creative

appropriations beyond the mere phonographic archaeological reconstitution. This

particular mode of presentation follows an audiotext rationale that offers users

non-hierarchical access to multiple pairs of visual text and audio clips. In

several instances, recordings contain performances of visual texts that have never

been read aloud before. Vocalization of their visual patterns opens up their

notational strategies to new sound appropriations. The audio is producing the

visual anew and cannot be perceived as a mere record from the past. The

documentary function of the digital facsimile as a surrogate of a printed visual

poem has been displaced by a reading intervention that calls attention to itself

and to the archive as a performance space.

3.3. Remediating the Digital: Reconstructing Born-Digital Artifacts

A third set of problems originates in the republication of early digital works

for which it is no longer possible to reconstitute the original hardware and

software environment. Information and library science protocols and standards for

the preservation of digital information have been defined, and various

institutional initiatives have addressed specific problems posed by digital media

artworks.

[9] Matthew G. Kirschenbaum and others [Kirschenbaum 2009a] [Kirschenbaum 2009b] have developed the concept of “computers as complete environments” in the context of preserving writers’ archives that contain born-digital artifacts. [10] They argue for the evidentiary value of hardware and storage media, the importance of imaging hard drives and other disk media, the use of forensic recovery techniques, and the documentation of the original physical settings in which the writers’ computers were used [Kirschenbaum 2009b, 111, 112].

The Electronic Literature Organization started a directory and repository of

electronic literature in 1999, and its “Preservation,

Archiving, and Dissemination” (PAD) initiative produced two significant

reports outlining strategies for preserving present and past forms of electronic

literature [Montfort 2004]; [Liu 2005]. The 2004

report by Montfort and Wardrip-Fruin makes a set of recommendations for keeping

electronic literature alive, that is, readable and accessible. The authors

describe four strategies for the preservation of digital information: “ 1) Old Hardware Is Preserved to Run Old Systems; 2) Old Programs

Are Emulated or Interpreted on New Hardware; 3) Old Programs and Media Are

Migrated to New Systems; 4) Systems Are Documented Along with Instructions for

Recreating Them”. They further summarize their ideas in 13 principles

for long-lasting electronic literature [Montfort 2004]. Early works

were often poorly documented and authors were generally not concerned whether

their programs and texts survived technological changes, making recovery and

reconstruction a difficult task.

Recognizing that current e-literature communities are already investing in

strategies for preserving digital information, Liu et al. emphasize that born-digital artifacts are characterized by having “dynamic, interactive, or networked behaviors and other

experimental features — including, but not limited to, works making use of

hypertext, reader collaboration, other kinds of interaction, animated text

or graphics, generated text, and game structures.”

[Liu 2005]

The authors consider preservation as part of a generic migration process and they

highlight two major strategies: the first involves interpreting and emulating

electronic literature so that works that are now difficult or impossible to read

can be tested again “in a form as functionally like the original as

possible”

[Liu 2005]; the second migration strategy is to describe or represent work in a format

that can later be moved to alternative formats and software: “[t]his representational method may not always be able to

maintain all the functions of the original work. But even so, it has the

advantage of being standardized (for interoperability); and it can

supplement or enhance the workings of the original.”

[Liu 2005]

The PO.EX Digital Archive contains examples of

computer works by three pioneers of digital literature: Pedro Barbosa, Silvestre

Pestana, and E.M. de Melo e Castro. In most cases, the possibility of

reconstructing the code for their early works is limited by lack of specific

documentation. In the case of Pedro Barbosa our archive includes handwritten notes

and diagrams describing iterations and transformations, and also printed outputs

of generative texts from the mid to late 1970s. Melo e Castro produced a series of

animated poems between 1986 and 1989 in collaboration with the Open University in

Lisbon, but they were recorded as VHS videos, and little technical information

exists about the specifics of software and hardware used for generating and

animating those texts. In both cases, any code recovery would have to work back

from the effects observed in the printed output, in one case, or in the videos, in

the other, and try to infer the codes and processes for the observable visual and

textual operations. Recovery would imply some kind of interpretive emulation that

translates paper output or video output back into some form of input code

compatible with current systems, or perhaps some kind of media archaeology

approach capable of identifying their original machines and rewriting their

programs. In these two cases, users of the archive will be able to see facsimiles

of the original handwritten notes and punched tapes as well as the videopoems, but

the texts will not be generated or animated by code.

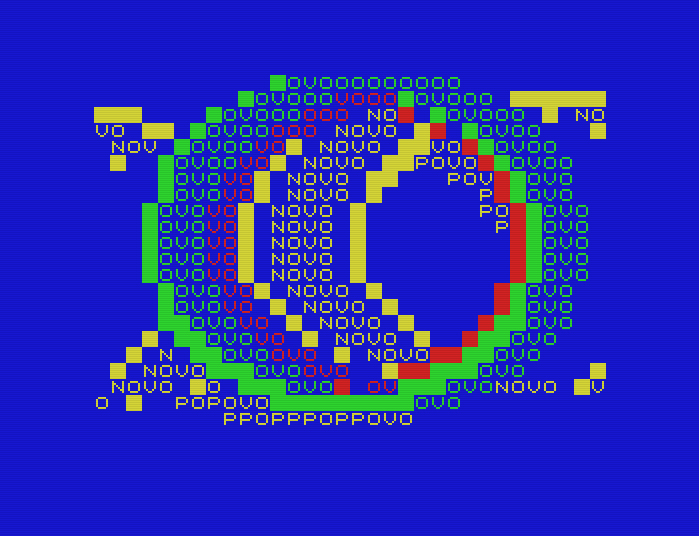

The work of artist Silvestre Pestana provides another example of how PO.EX has

addressed the problems of migrating born-digital work.

Computer poetry is the title of a series of poems he produced in 1981 [see Figure 11]. At the time, Pestana purchased a

Spectrum personal computer with which he made the first three versions of his

monochrome visual computer poetry: the first is dedicated to E. M. de Melo e

Castro, the second to Henri Chopin, and the third to Julian Beck. Despite being

the only instance of such work in Portugal in 1981 (Pedro Barbosa, active since

1975, was working mainly with text generation), this work of dynamic and

generative visual poetry still offers important clues for understanding the

evolution of computer-animated visual poetry and its relation to the printed

visual poetry of the experimental poets. The work itself was never published, but its code (with some errors recently identified and corrected by the author) was published in the book Poemografias, an anthology of visual poetry co-edited by Pestana [Aguiar 1985, 214, 215, 216]. In

this case, Pestana’s early “Computer Poems” can be

emulated for the current networked environment, since the community of Spectrum

users has made available code forms that run in a Java environment. Although

unable to recover the material environment of its original execution, this

migration resulted in a version that approximates the functionality of the

original.

4. Representation, Simulation, Interaction

Shillingsburg has formalized a working model for electronic scholarly editions [

Shillingsburg 1996] and [

Shillingsburg 2006]. Based on his

observation of the achievements and shortcomings of editions and archives developed

during the first two decades of electronic scholarly editing, Shillingsburg proposed

four sets of questions that must be answered by the infrastructure and

functionalities of the edition or archive [

Shillingsburg 2006, 92, 93]. The first group of questions concerns the relations between

different documentary states of the text and how these relations are made visible for

readers. The second set deals with methodological issues about how editorial

interventions are made clear. The third group addresses the ways in which a context

for the primary texts is produced in the archive through both autograph and allograph

materials. Finally, the fourth set of questions details the uses of the edition or

archive, including interactions such as making personal collections and annotations

on the materials.

Although some of those questions may be more relevant for historical materials for

which there are multiple print and handwritten witnesses, most of them can be applied

to a project devoted to contemporary experimental literature. Contemporary practices

take place in a multimedia environment in which versions are also a function of their

multiple media inscriptions and not necessarily of later transmission or

remediation processes. Because of their programmatic emphasis on process and

performance, experimental practices tend to generate multiple textual instances of

any given work: a sound poem, for instance, will often have one or several written

notations (a performance manuscript or typescript, sometimes a published version),

and one or more sound or video recordings of live performances. This combination of

ephemerality and multiplicity, of scarcity and abundance, poses challenges for any

editing and archival project of late 20th-century experimental literature in a

digital environment that are similar to problems raised by documentary and critical

editing of historical material of earlier periods.

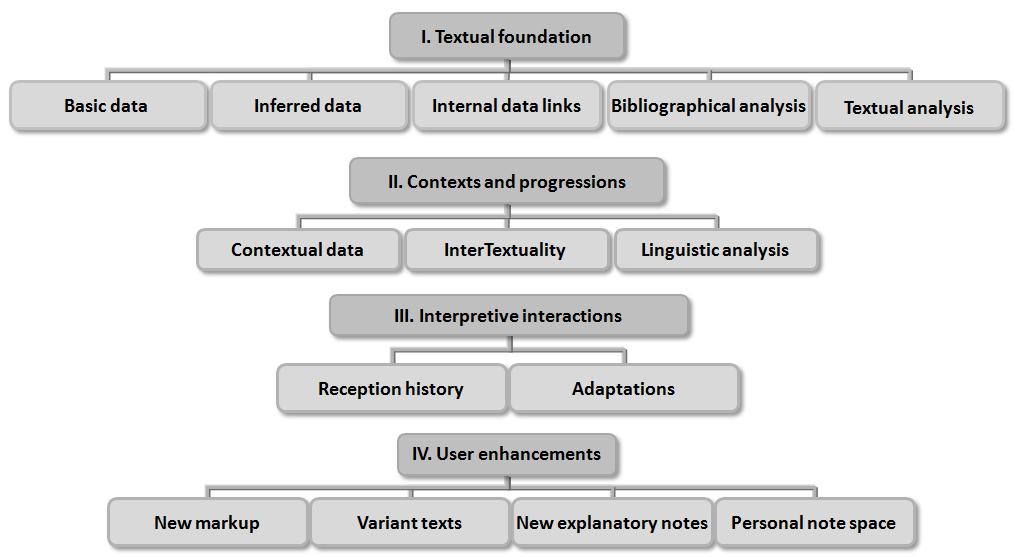

By means of the concept of electronic edition or archive as “knowledge site”, Shillingsburg has tried to capture a set of textual,

contextual, interpretive, and interactive features that any literary edition or

archive must provide for current and future users. These features imply a synthesis

between documentary and critical edition that takes advantage of the affordances of

the medium. We could say that his model, based on electronic editing projects

developed in the 1990s and early 2000s, is an attempt to invent the digital medium

for editing purposes. Shillingsburg has structured his “electronic infrastructure for script acts” into four levels: textual

foundations; contexts and progressions; interpretive interactions; and user

enhancements [see

Figure 12]. “Textual foundations” refers to basic data, inferred data, internal data

links, bibliographic analysis, and textual analysis; “contexts

and progressions” includes contextualized data (for each stage of textual

existence), intertextuality, and linguistic analysis; “interpretive interactions” refers to reception history and adaptations;

and “user enhancements” considers the possibility of users

adding new markup, creating new variant texts, and writing commentary and explanatory

and personal notes [

Shillingsburg 2006, 101, 102].

Shillingsburg’s model for electronic editing helped us to make explicit our options

for document selection and for digital representation, including database structure,

bibliographic and semantic metadata, and interface form. Despite its critical and

theoretical sophistication, this model is still insufficient for the specific nature

of this project. On the one hand, textual, methodological, and contextual principles

need to be adapted to the multimedia textuality of this particular corpus of

experimental literature; on the other hand, we want to experiment with creative

possibilities for digital remediation of the collected materials. Theoretical and

technical issues triggered by ongoing research also impact on the possible uses of

the archive. Especially important in this regard are the social and academic uses

that we want and expect this archive to support and encourage. The gathering of rare,

dispersed, and often inaccessible materials, and the creation of a user-friendly

interface to a relational multimedia database should be equally productive for

general online reading/viewing/listening practices (including access through mobile

devices) and for specialized teaching and research purposes.

Although the computational implementation of all aspects identified by Shillingsburg

is more complex, time consuming, and costly than what we could hope to accomplish in

a three-year project, we tried to explore them at least in their theoretical

implications. The aim would be for this archive to fruitfully combine the

preservation, repositorial, and dissemination functions, with the creation of a

research resource capable of generating new knowledge in the future. This seems to be

the best way to maximize the simulation and interactive capabilities of digital

environments and digital tools as knowledge sites. Even if we had to limit the

structure and functionality of the archive to what was computationally feasible

within our resources, we have tried to think through the electronic infrastructure of

PO.EX Digital Archive with our best theoretical and

technical imagination.

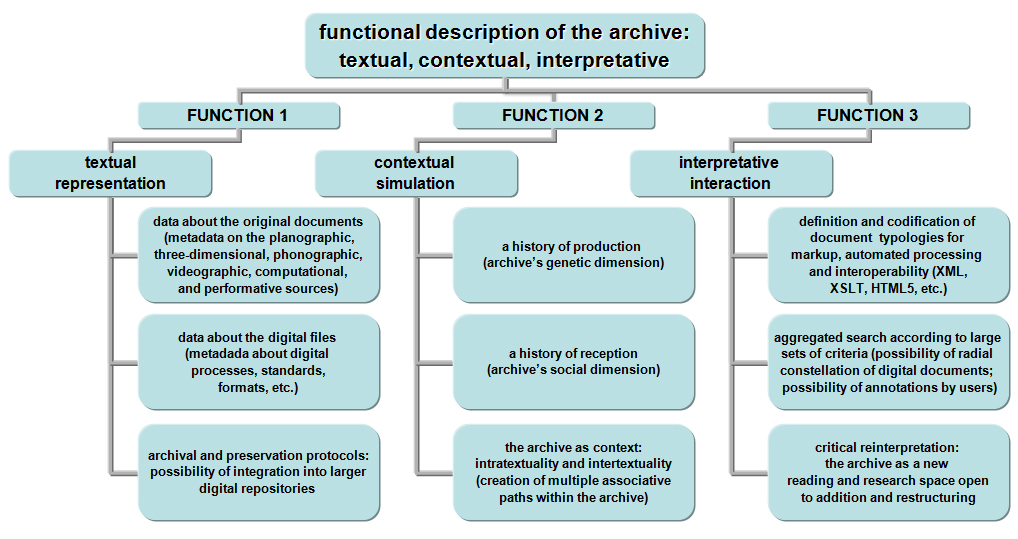

As a multimodal archive of experimental literature it should be both an aggregate of

digital surrogates of various media works (and related documentation) and an

investigation into the possibilities of digital representation and database structure

that creatively explores the materiality of the medium and of its codes. The

possibility of creating a fluid environment – open to addition and restructuring as a

result of ongoing and future critical interactions between users and objects – is one

example of the kind of remodeling and progressive categorization inherent in the

flexibility of digital representation as reconfigurable and self-documenting data.

Reconceived as a remediated critical environment, the digital archive should be able

to sustain and encourage reflexive feedbacks between textual representation,

contextual simulation, and interpretative interaction [see Figure 13].

5. Performing the Digital Archive

Current modes of knowledge production participate in the general database aesthetics

of digital culture. Situated between information science protocols and digital

humanities research projects, “digital archives” have become the

current historical form of an institutional desire for structured, aggregated,

displayable, and manipulable representations. If archival meaning is codetermined in

advance by the structure that archives, the order of the archive – its politics of

representation – coproduces the writings and readings by means of specific

preservation and presentation strategies. Inclusion and exclusion, as well as the

conceptual and interpretative apparatus expressed in structure and interaction

design, frame the archive also (1) as an archive of its own editorial theory, (2) as

an instantiation of its technological affordances and institutional settings, and (3)

as a program for perception and use. The following sections describe the digital

archive as a performance of its contents. Performative transcodification in the PO.EX

Archive can be seen in digitization and metadata, on the one hand, and in the

recreation of works, on the other.

5.1. Intermediation, Translation, Classification

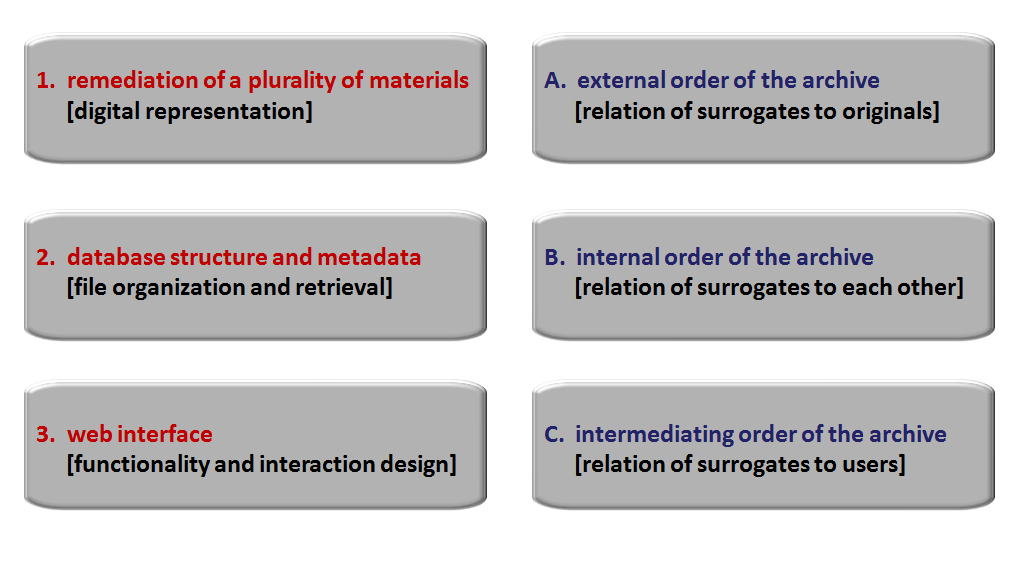

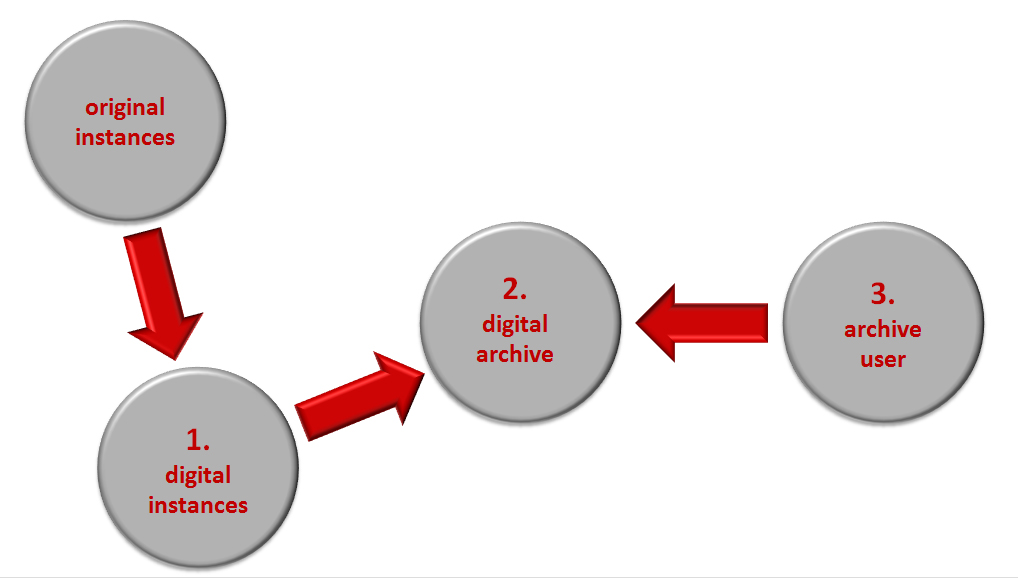

We can distinguish three levels of performativity in digital archiving, each of

them involving one cluster of problems: (1) the first level relates to problems of

digital representation such as selection of objects, digitization protocols, and

file formatting – the archive performs the relation of digital surrogates or

versions to their originals through the remediation of a plurality of materials;

(2) the second level relates to problems of organization and retrieval of digital

objects, including database structure, document modeling, and metadata – at this

level, the archive performs the relations of digital objects to themselves and to

each other, according to category-building processes; (3) the third level relates

to problems of functionality and interaction design, such as search algorithms,

display, and web interface – the archive performs the relations of digital objects

to subjects as users and readers [see Figure 14].

When these three levels are fully articulated, we can say that the archive

functions as an instance of intermediation between prior material and media forms

and their digital remediation, on the one hand, and also as an instance of

intermediation between those digital representations and certain practices of

reading and use, on the other [see

Figure 15].

Considered in its digitally mediated condition, the archive (a) models

handwritten, print, audio, video, and digital documents through specific data

formats and markup, (b) makes them searchable and retrievable through algorithmic

processes, (c) displays them as a network of navigable related items, and (d)

aggregates them in a collaborative space for further analysis and manipulation.

Through those functions, the archive expresses the database aesthetics of digital

culture, and engages the encyclopedic, procedural, spatial, and participatory

affordances of the medium, as described by Janet Murray [

Murray 2012, 51–80].

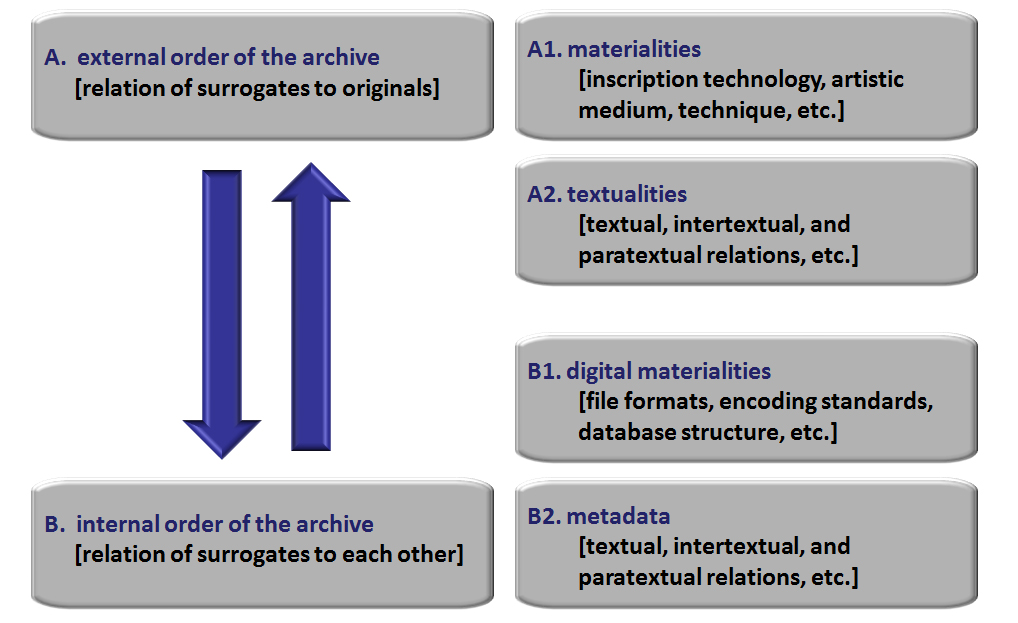

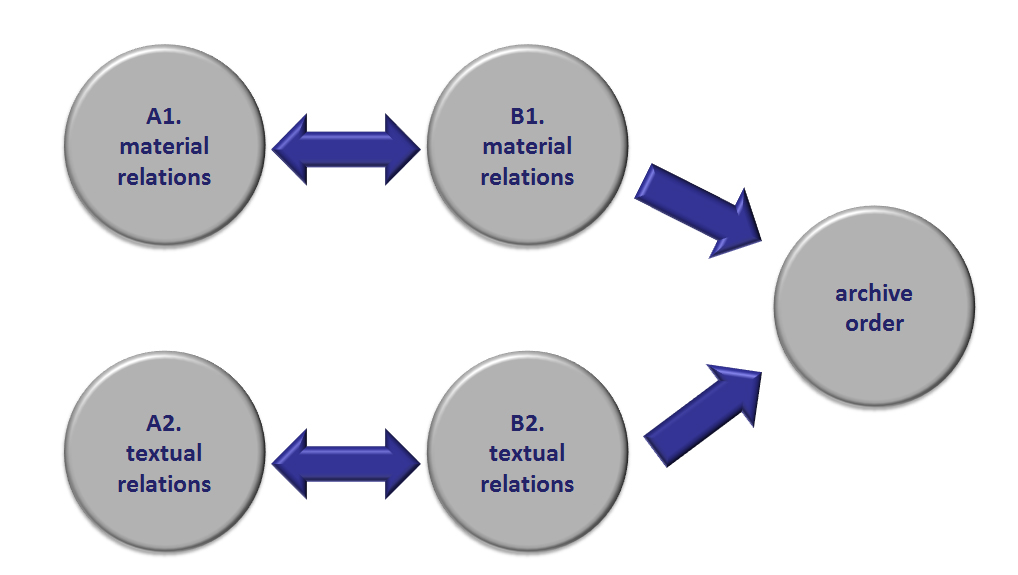

Another way of understanding the material and textual performativity inherent in

digital archiving is to think of the digital archive as a process of translation

[see Figures 16 and 17]. The transcoding of objects in digital format is based on

an assessment of material and textual features of the source objects and on

decisions about the best way to represent those features in digitized form –

facsimile images, textual transcriptions, standards for audio and video encoding,

transcoding between digital formats. Remediation creates object-to-object

correspondences across media, while submitting the surrogates to constraints and

affordances of the new medium, such as modularity and processibility. Material and

textual relations are reconstituted within the archival order also as a network of

interrelations whose internal order is in tension with an external order.

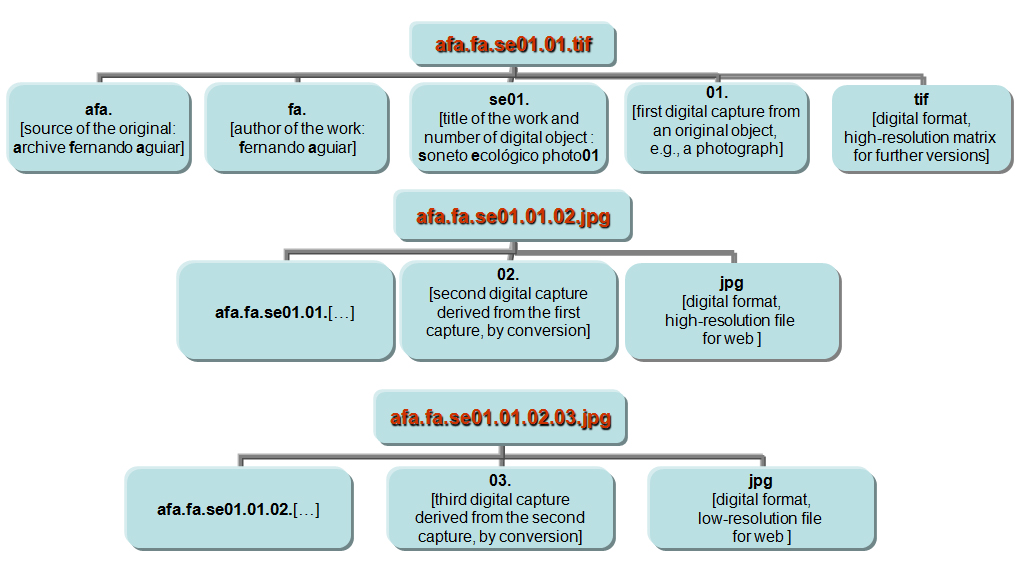

This process of media translation can be illustrated with the naming system for

files in the archive [see

Figure 18]. The creation

of a digital archive implies the cumulative production of a large quantity of

files that maintain a network of relationships among themselves and between them

and their sources. Descriptive metadata (that identify the contents and technical

data of the file) and administrative metadata (that identify creators and editors

of the file) enable producers and users to assess the authenticity, integrity, and

quality of the data. In addition to this self-description associated with each

file, it is also important that the overall organization of the archive and the

adopted remediation methodology be reflected in the directory structure and

file-naming conventions, which should be clear first to

all project participants and then to users. These conventions should allow not

only a clear understanding of relations between files that derive from each other,

but also their relationship with the objects, collections, and repositories of

origin. Naming conventions should also allow the continuous addition of new

elements within their scheme of hierarchies and associations (see [

Pitti 2004]).
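The requirements just described (derivation between files, traceability to object, collection, and repository, and room for new elements) can be sketched in code. The following Python fragment is only an illustration under stated assumptions: the pattern `<repository>_<collection>_<object>[_<derivative>].<ext>`, the class `ArchiveFileName`, and the function `parse_name` are hypothetical and do not reproduce the actual PO.EX naming scheme.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical pattern, assumed for illustration only (not the PO.EX scheme):
#   <repository>_<collection>_<object>[_<derivative>].<ext>


@dataclass(frozen=True)
class ArchiveFileName:
    repository: str            # repository of origin
    collection: str            # collection within that repository
    object_id: str             # source object within the collection
    derivative: Optional[str]  # None for a master file, a label for a derived file
    extension: str

    def render(self) -> str:
        """Build the file name from its parts."""
        parts = [self.repository, self.collection, self.object_id]
        if self.derivative:
            parts.append(self.derivative)
        return "_".join(parts) + "." + self.extension

    def source_name(self, master_extension: str) -> str:
        """Name of the master file that a derivative was made from."""
        master = ArchiveFileName(
            self.repository, self.collection, self.object_id, None, master_extension
        )
        return master.render()


def parse_name(filename: str) -> ArchiveFileName:
    """Recover repository, collection, object, and derivative from a file name."""
    stem, _, extension = filename.rpartition(".")
    parts = stem.split("_")
    if not stem or len(parts) not in (3, 4) or not all(parts):
        raise ValueError(f"not a valid archive file name: {filename!r}")
    derivative = parts[3] if len(parts) == 4 else None
    return ArchiveFileName(parts[0], parts[1], parts[2], derivative, extension)
```

Under these assumptions, a name such as `afa_poemografias_0214_web.jpg` (an invented example) can be read back into its repository, collection, and object, and the name of its master file can be derived from it; new derivatives can be added by introducing new labels without renaming existing files.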

Archival performance takes place also at the level of database organization and

search algorithms as expressions of a classification system. Metadata will define

the hierarchical and networked relations between items, determining the

representation of the collections and the associative retrieval of items.

Overlapping hierarchies and cross-relations establish the ontological

representation of the archive as an aggregation of explicitly related and

searchable items. Machine performance is dependent upon a category-building

process that gives form and structure to a collection of items. We have made some

effort to keep our system relatively flexible since we recognized the limitations

of current taxonomies and systems of classification for forms of literature that

are defined essentially by intermediality, and by the combination of literary and

artistic genres, conventions, and techniques. As in the case of electronic

literature (see [

Tabbi 2007]), there is no set of agreed semantic

operators for describing many of the works in this archive.

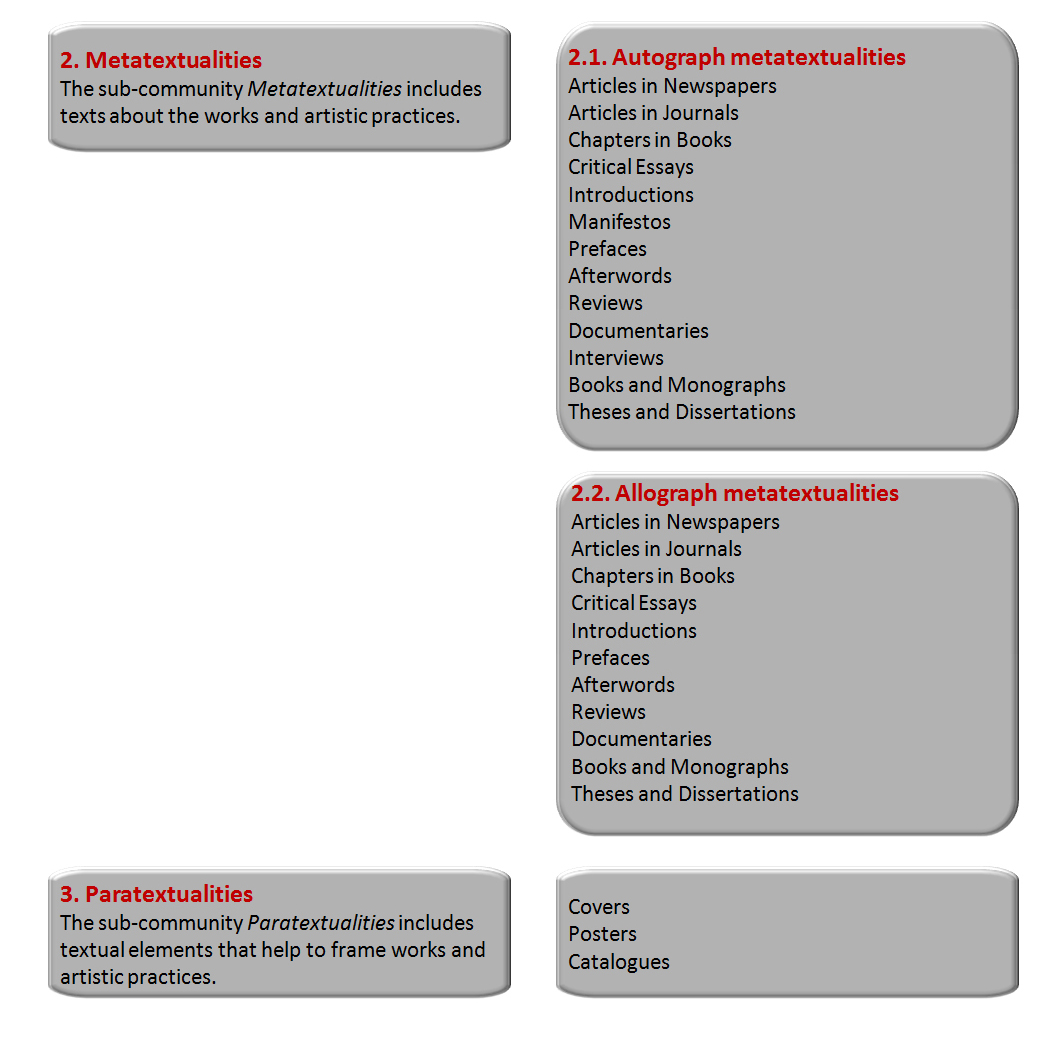

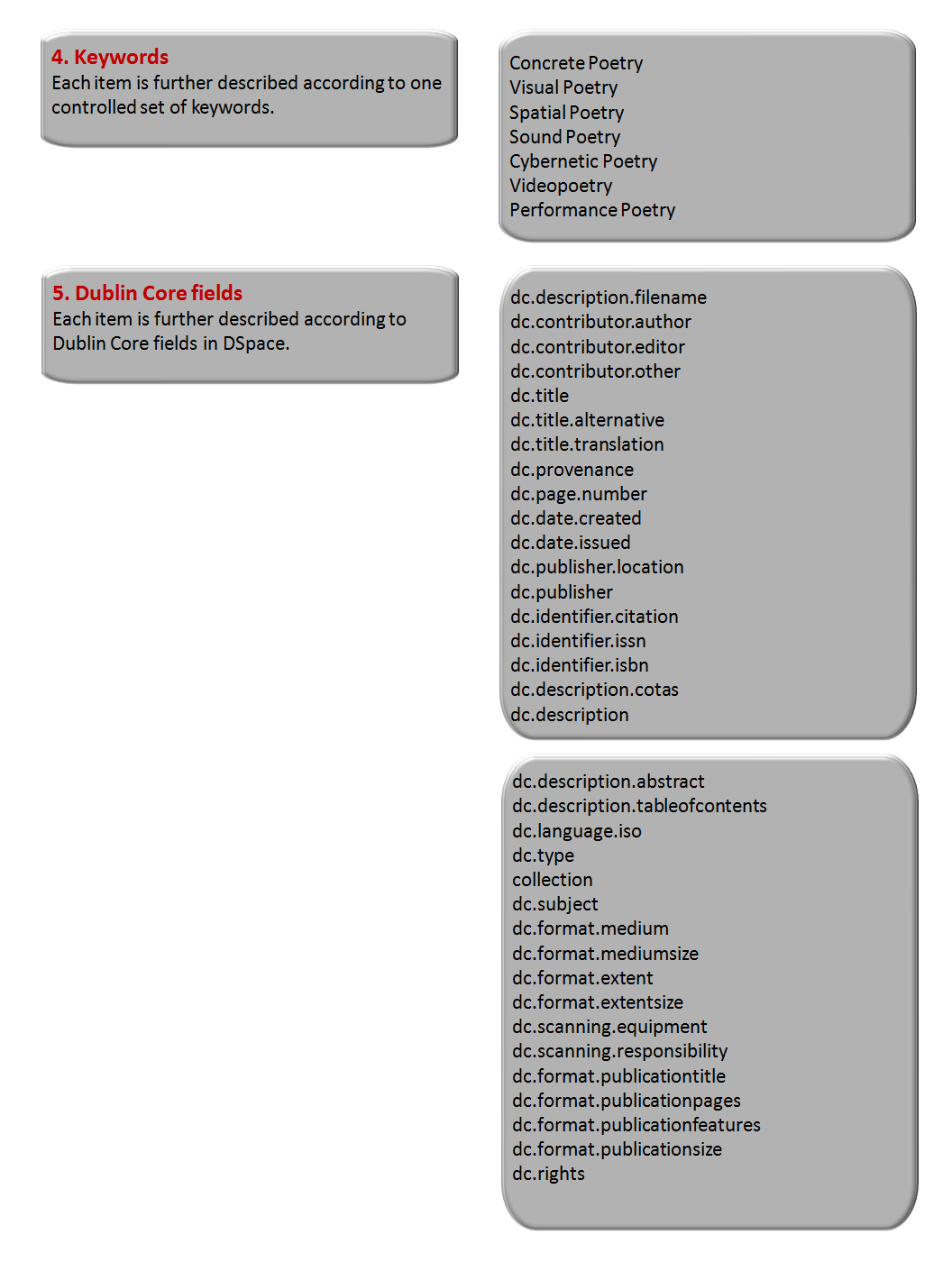

Our taxonomy uses three overlapping systems of classification in an attempt to

combine our own analysis of the corpus with the historical vocabulary of the

practitioners (keywords) and also with standard Dublin Core descriptors.

[11] I highlight one particular aspect of the proposed

taxonomy, which is the interaction between the description of material, media, and

technique (which we have called

materialities) with the description

of transtextual relations (which we have called

metatextualities and

paratextualities) [see Figures 19, 20 and 21]. We tried to strike

a balance between two desirable aims: the need to bring into the database

structure some of the vocabulary and categories that reflect the original

communities of practice, with their particular intentions and contexts; and the

need to provide a critical and classificatory perspective that uses taxonomies

validated by scientific and academic communities. Although the taxonomies have

been generated through close observation of the specifics of the selected corpus,

they were also subject to the requirements of higher-level descriptions that allow

them to be interoperable with other databases [

Torres 2014].
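The coexistence of three classification systems on a single item can be sketched as a data structure. The Python fragment below is a minimal illustration, not the PO.EX database model: the class `ArchiveItem`, the function `find_items`, the sample records, and the specific taxonomy terms and keywords are assumptions made for the example. Only the Dublin Core element names (`title`, `creator`, `date`) and the facet name `materialities` come from the vocabularies mentioned above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class ArchiveItem:
    identifier: str
    # Standard descriptors, keyed by Dublin Core element name.
    dublin_core: Dict[str, str]
    # Historical vocabulary of the practitioners.
    keywords: Set[str] = field(default_factory=set)
    # Project taxonomy: facet name -> terms (for example "materialities").
    taxonomy: Dict[str, Set[str]] = field(default_factory=dict)


# Illustrative records only; terms and identifiers are hypothetical.
SAMPLE_ITEMS = [
    ArchiveItem(
        identifier="item-001",
        dublin_core={"title": "Computer Poetry", "creator": "Silvestre Pestana", "date": "1981"},
        keywords={"computer poetry"},
        taxonomy={"materialities": {"digital"}},
    ),
    ArchiveItem(
        identifier="item-002",
        dublin_core={"title": "Poemas Encontrados", "creator": "António Aragão", "date": "1964"},
        keywords={"found poem"},
        taxonomy={"materialities": {"collage"}},
    ),
]


def find_items(
    items: List[ArchiveItem],
    facet: Optional[str] = None,
    term: Optional[str] = None,
    keyword: Optional[str] = None,
    creator: Optional[str] = None,
) -> List[ArchiveItem]:
    """Return the items that satisfy every criterion that was supplied."""
    if (facet is None) != (term is None):
        raise ValueError("facet and term must be given together")
    results = []
    for item in items:
        if facet is not None and term not in item.taxonomy.get(facet, set()):
            continue
        if keyword is not None and keyword not in item.keywords:
            continue
        if creator is not None and item.dublin_core.get("creator") != creator:
            continue
        results.append(item)
    return results
```

In this sketch a query can cross the three systems at once, for instance by combining a `materialities` term with a Dublin Core creator, which is one simple way of picturing how overlapping descriptions support associative retrieval.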

Finally, the interface itself – including graphical design, navigation structure,

and search capabilities – becomes another layer in the performance of the archive,

producing certain kinds of display and modes of access to digital surrogates.

Functionalities embedded in the interface establish a program of use, influencing

users’ perception of the holdings and structure of the archive. Working as a frame

of reference for the entire database, the interface co-performs the content for

the user. At the same time, through an unanticipated choreography of interactions,

which will evolve over time, the archive can re-imagine itself.

5.2. Rereading, Rewriting, Recreating

Remediation is also occasion for re-imagination. An example of this re-imagination

of the remediating dynamics is one strategy we have called

“releitura” [“rereading”]. Here the

digital facsimile approach has been substituted by a formal intervention that uses

the original work as a program for further textual instantiations that can be

developed through the use of code. Remediation becomes a creative translation that

rewrites the text with scripts that explore its signifying potential within the

new medium. Digital representation has become an occasion for a media translation

that plays with the ratios of the various intersemiotic textual levels in the

source works. In this anti-archival approach remediation is an open exploration of

the signifying potential contained in visual and permutational texts. This form of

reading as rewriting has been applied to a selection of paper-based works of the

1960s that have been treated as projects for new works ([

Portela 2009]; [

Torres 2012b]).

One example can be seen in the “rereading” of the work

Poemas Encontrados [“Found Poems”] (1964) by

António Aragão. Two digital recreations of that work adopt different strategies,

both of which stress the timed and temporal condition of writing in the periodical

press. The randomized combination of printed headlines on the pages of periodicals

is performed, in one case, by means of animation (using Actionscript code by Jared

Tarbel) on a set of pre-defined words and phrases. Typographical differences in

face, size, and style, as well as the progressive overlapping of white letters on

black background, across different areas of the screen, emulate the indiscriminate

collage of newspaper headlines in the original paper collage. Instead of digitally

recreating the original typographic forms and phrases, what is recreated is the

compositional and procedural principle of aleatoric combination.

[12] In the

second instance, the Actionscript code by Tarbel works in conjunction with PHP

programming by Nuno Ferreira, and with RSS feed in real time from online editions

of several newspapers and sites – Público (Portugal), Jornal Folha de São Paulo (Brazil), Google News Brazil, New York Times (U.S.A.), Jornal Folha de São Paulo (Brazil) - v. 2, Jornal Expresso (Portugal), and Jornal La Vanguardia (Spain).

The combinatorial collage of newspapers’ headlines has been applied to the

current online press, using RSS tools and the language of web pages to build a

mechanism for real-time digital collage – a device that is able to produce

“found poems” through an algorithmic procedure. By

displacing the particular historical content and historical reference of the

original collage, this digital recoding de-contextualizes and breaks the chains of

meaning that bind text and context, a move comparable to what happened in the

original. Indeed, this is one of the main effects of the collage by António

Aragão: original sentences and references have been abolished, or they remain only

as a distant echo, since the poem has broken the markers of discursive cohesion

and coherence that ensured their pragmatic function in the newspaper context. Its

signifying emptiness, that is, its potential for meaning, is embodied in the

arbitrary network of relationships between words and sentence fragments, which

continuously overlap and repeat in different scales and at various points of the

screen, resembling statistical clouds of occurrences.
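The compositional principle of aleatoric combination can be illustrated with a short sketch. The recreations discussed above were written in Actionscript and PHP and draw on live RSS feeds; the Python fragment below does not reproduce that code. It assumes that headlines have already been retrieved as plain strings, and the functions `split_fragments` and `found_poem`, as well as their parameters, are hypothetical names introduced for this example.

```python
import random
from typing import List, Optional


def split_fragments(headline: str, max_words: int = 3) -> List[str]:
    """Cut a headline into consecutive fragments of at most max_words words."""
    words = headline.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def found_poem(
    headlines: List[str],
    lines: int = 4,
    fragments_per_line: int = 2,
    seed: Optional[int] = None,
) -> List[str]:
    """Recombine headline fragments at random into the lines of a 'found poem'."""
    rng = random.Random(seed)  # a fixed seed makes one particular collage repeatable
    pool = [fragment for headline in headlines for fragment in split_fragments(headline)]
    if not pool:
        raise ValueError("no headline fragments to combine")
    return [
        " ".join(rng.choice(pool) for _ in range(fragments_per_line))
        for _ in range(lines)
    ]
```

Because fragments are drawn with repetition from a shared pool, the same words can recur across lines, which loosely corresponds to the overlapping and repeating fragments described above; spatial layout, scale, and typography, which are central to the screen versions, are left out of this sketch.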

6. The Archive as Open Experiment

The fetishist eroticism of detail is common to many digital archives that establish

their authority on the basis of exhaustiveness in listing, describing, and marking

details. Scholarly literary archives of autograph materials embody this contradiction

between the desire for exhaustive markup of a never-ending set of material features

and the resistance of textual instances to any fixed encoding system. This is one of

the heuristic consequences of digital archives: our obsessive attempt to represent

objects confronts us not just with what we don’t know, but also with the limits of

knowledge as representation. Digital modeling seems to be, in this sense, a new way

of experiencing the failure of representation. Inclusiveness, detailism, and

exhaustiveness become ever-present and ever-unresolved issues.

The medium’s simulatory affordances raise our awareness about the signifying

potential of a whole new level of material differences. Once you begin to pay

attention to all the minutiae of paper and ink, script and layout, cancellations and

erasure, folds and cut-outs, preliminary and preparatory, software and hardware,

materiality and textuality expand to all possible inscriptional variations. Writing

acts assume a gestural and bodily dimension, as if read through an abstract

expressionist code. Digital tools and environments show themselves as reading and

interpretative devices rather than mere instrumental techniques for textual

reproduction and simulation. The interpretative nature of editing and archiving as an

intervention in the material instantiation of a specific textual field dispels

empiricist illusions about the possibility of objective and definitive textual

reconstitutions. Any digital representation will depend on the granularity of its

description and on the politics of its constraints. The PO.EX Archive showed us the

limits of our digital models for the artifacts that we were trying to preserve,

classify, recreate, and network. These multiple transcodings performed the artifacts

as particular products of the database aesthetics of knowledge production.

This may well be one of the theoretical and methodological achievements of the

current digital archiving obsession: editing and archiving can be critically used for

acts of reinterpretation that fully engage the complexity of the various textual and

media materialities of literary practices. Denaturalized and reframed by digital

codes and forms, the dynamics between bibliographic, linguistic, spatial, visual,

sonic, cinematic, and performative dimensions is rendered more explicit – a dynamics that can be

programmatically used for reexamining the material production of meaning and for

reimagining the archive itself as a representational intervention. Ultimately, the

remediation of manuscripts, books, collages, photographs, audiotapes, videotapes, and

programming codes as an intermedia network of digital objects gives us the

opportunity for a reflexive exploration of the performative nature of digital

simulation itself. Decentred and decontextualized by the technical affordances of the

medium, digital surrogates enter a new space for material, textual, and conceptual

experiments.

Notes

[1] This article

contains a revised and remixed version of three unpublished papers: (a) “Digital Editing for Experimental Texts” (originally

presented at “Texts Worth Editing”, The Seventh

International Conference of the European Society for Textual Scholarship, 25-27

November 2010, Pisa and Florence, co-sponsored by Consiglio Nazionale delle

Ricerche, Pisa, and Società Dantesca Italiana, Florence); (b) “PO.EX 70-80: The Electronic Multimodal Repository” (co-written with Rui

Torres, and originally presented at “E-Poetry 2011:

International Emerging Literatures, Media Arts & Digital Culture

Festival”, State University of New York, Buffalo, 18-21 May 2011); and

(c) “Performing the Digital Archive: Remediation, Emulation,

Recreation” (originally presented at the Electronic Literature

Organization conference “ELO 2012: Electrifying Literature:

Affordances and Constraints”, West Virginia University, Morgantown, WV,

20-23 June, 2012). I want to express my gratitude to the organizers of those

conferences, particularly to Andrea Bozzi and Peter Robinson, Loss Pequeño

Glazier, and Sandy Baldwin. I also want to thank my colleague and friend Rui

Torres for a three-year intellectual exchange about the problems of collecting,

digitizing, editing, and recreating experimental works of literature. The final

version has benefited from insights, comments, and suggestions by the anonymous

reviewers of DHQ. This article is part of PO.EX: A Digital Archive of Portuguese Experimental

Literature, a research project funded by FCT-Fundação para a Ciência e

a Tecnologia and by FEDER and COMPETE of the European Union (Ref.

PTDC/CLE-LLI/098270/2008).

[2] For a brief account of the evolution of

experimental literary practices in the period 1960-1990 see [Torres 2012a]; for a detailed bibliography of experimental literary

works see [Torres and Portela 2012].

[3] Not

all digitized materials will be made available. Most authors (or their executors)

granted permission for including reproductions of their works, but we could not

clear copyright authorization for all digitized items.

[5] One

of the major sources for this project has been the Archive

Fernando Aguiar. Fernando Aguiar, one of the authors of the group and

organizer of several national and international exhibitions and festivals since

the mid-1980s, has been collecting works and related documentation for more than

three decades. The PO.EX Digital Archive includes

works by the following authors: Abílio [Abílio-José Santos] (1926-1992); Alberto

Pimenta (1937-); Américo Rodrigues (1961-); Ana Hatherly (1929-); Antero de Alda

(1961-); António Aragão (1921-2008); António Barros (1953-); António Dantas

(1954-); António Nelos (1949-); Armando Macatrão (1957-); César Figueiredo

(1954-); Emerenciano (1946-); E.M. de Melo e Castro (1932-); Fernando Aguiar

(1956-); Gabriel Rui Silva (1956-); Jorge dos Reis (1971-); José-Alberto Marques

(1939-); Liberto Cruz [Álvaro Neto] (1935-); Pedro Barbosa (1948-); Salette

Tavares (1922-1994); and Silvestre Pestana (1949-).

[6] Most printed texts (including manifestos, critical essays, and

reception documents) are remediated as digital facsimiles in image formats. They

are not transcribed as alphanumeric text. Although this option is justifiable

because of the constellated and visual character of many works, it was also

determined by our limited resources in time and money. Works and documents in

standard typographic layout should also have been transcribed into digital text

formats. This would enable complex queries in the textual body. One of the

consequences of this limitation is that most of the critical dimension of the

archive depends on the taxonomies used for associating metadata with each

particular item.

[9] See, for instance, the Digital

Formats Web Site (2004-2011), which is part of the National Digital

Information Infrastructure and Preservation Program of the Library of Congress.

In the domain of new media and internet art, see the research project and

symposium Archiving the Avant Garde: Documenting and Preserving Digital/Media Art

(2007, UC Berkeley). Archiving the Avant

Garde is a consortium project of the University of California,

Berkeley Art Museum, and Pacific Film Archive (with the Solomon R. Guggenheim

Museum, Cleveland Performance Art Festival and Archive, Franklin Furnace

Archive, and Rhizome.org).

[10] Their

case studies include (1) computers and disks of authors such as Michael Joyce,

Norman Mailer, Terrence McNally, and Arnold Wesker, housed at The Harry Ransom

Center at The University of Texas at Austin; (2) computers and disks of Salman

Rushdie held at The Emory University Libraries; and (3) hardware, software, and

other collectible material from Deena Larsen, acquired by the Maryland

Institute for Technology in the Humanities [Kirschenbaum 2009b].

[12] Although

not made in the context of a digital archive, similar approaches with random

permutations can be seen in programmed versions of Samuel Beckett’s Lessness in Possible

Lessnesses by Elizabeth Drew and Mads Haahr [Drew 2002] and Raymond Queneau’s Cent mille milliards de

poèmes by Magnus Bodin [Bodin 1997].

Works Cited

Aguiar 1985 Aguiar, Fernando, and Silvestre Pestana, eds. Poemografias: Perspectivas da Poesia Visual

Portuguesa. Lisboa: Ulmeiro, 1985.

Bernstein 1998 Bernstein, Charles. “Introduction”. In Charles Bernstein, ed., Close Listening: Poetry and the Performed Word. Oxford and New York: Oxford University Press, 1998. pp. 3-28.

Bernstein 2006 Bernstein, Charles. “Making Audio Visible: The Lessons of Visual Language for the

Textualization of Sound”. Text 16 (2006), pp. 277-289.

Deegan 2009 Deegan, Marilyn, and Kathryn Sutherland, eds. Text Editing, Print and the Digital World. Farnham: Ashgate, 2009.

Drew 2002 Drew, Elizabeth, and Mads Haahr. “Lessness: Randomness, Consciousness and Meaning”. Presented at

Consciousness Reframed: The 4th International CAiiA-STAR Research Conference, sponsored by (2002).

http://www.random.org/lessness/paper/.

Drucker 2006b Drucker, Johanna, ed.

Artists’ Books Online: An Online Repository of Facsimiles, Metadata,

and Criticism. University of Virginia (2006-2009) and University of California-Los Angeles (2010-present), 2006.

http://www.artistsbooksonline.org/.

Drucker 2009 Drucker, Johanna. “Modeling Functionality: From Codex to e-Book”. In SpecLab: Digital Aesthetics and Projects in Speculative

Computing. Chicago: University of Chicago Press, 2009. pp. 165-174.

Eaves 1996 Eaves, Morris, Robert Essick and Joseph Viscomi, eds.

The William Blake Archive. Institute for Advanced Technology in the Humanities, University of Virginia, 1996-present.

http://www.blakearchive.org/.

Filreis 2004 Filreis, Al, and Charles Bernstein, eds.

PennSound. Center for Programs in Contemporary Writing, University of Pennsylvania, 2004-present.

http://writing.upenn.edu/pennsound/.

Kirschenbaum 2009a Kirschenbaum, Matthew G., Erika L. Farr, Kari M. Kraus, Naomi Nelson, Catherine Stollar Peters, Gabriela Redwine and Doug Reside.

Approaches to Managing and Collecting

Born-Digital Literary Materials for Scholarly Use.

White Paper to the NEH Office of Digital Humanities. 2009.

http://drum.lib.umd.edu/handle/1903/9787.

Kirschenbaum 2009b Kirschenbaum, Matthew G., Erika L. Farr, Kari M. Kraus, Naomi Nelson, Catherine Stollar Peters, Gabriela Redwine and Doug Reside. “Digital Materiality: Preserving Access to

Computers as Complete Environments”. Presented at

iPRES 2009: the Sixth International Conference on Preservation

of Digital Objects, sponsored by California Digital Library, University of California (2009).

http://escholarship.org/uc/item/7d3465vg.

Liu 2005 Liu, Alan, David Durand, Nick Montfort, Merrilee Proffitt, Liam R.E. Quinn, Jean-Hughes Réty and Noah Wardrip-Fruin.

Born-Again Bits: A Framework for Migrating Electronic

Literature.

Electronic Literature Organization. 2005.

http://www.eliterature.org/pad/bab.html.

McGann 2001 McGann, Jerome. “The Rationale of Hypertext”. In Radiant Textuality:

Literature after the World Wide Web. New York: Palgrave Macmillan, 2001. pp. 53-74.

Montfort 2004 Montfort, Nick, and Noah Wardrip-Fruin.

Acid-Free Bits: Recommendations for Long-Lasting Electronic

Literature.

Electronic Literature

Organization. 2004.

http://www.eliterature.org/pad/afb.html.

Murray 2012 Murray, Janet H. Inventing the Medium: Principles of Interaction Design as Cultural

Practice. Cambridge: MIT Press, 2012.

Pitti 2004 Pitti, Daniel V. “Designing Sustainable Projects and Publications”. In Susan Schreibman, Ray Siemens, and John Unsworth, eds.,

A Companion to Digital

Humanities. Oxford: Blackwell, 2004. pp. 471-487.

http://www.digitalhumanities.org/companion/.

Portela 2009 Portela, Manuel. “Flash Script Poex: A Recodificação Digital do Poema Experimental”. Cibertextualidades 3 (2009), pp. 43-57.

Renear 2004 Renear, Allen H. “Text

Encoding”. In Susan Schreibman, Ray Siemens, and John Unsworth, eds., A Companion to Digital

Humanities. Oxford: Blackwell, 2004. pp. 218-239.

Schreibman 2002 Schreibman, Susan. “The Text Ported”. Literary and

Linguistic Computing 17: 1 (2002), pp. 77-87.

Shillingsburg 1996 Shillingsburg, Peter. Scholarly Editing in the Computer Age. Ann Arbor: University of Michigan Press, 1996.

Shillingsburg 2006 Shillingsburg, Peter L. From Gutenberg to Google: Electronic Representations of Literary Texts. Cambridge: Cambridge University Press, 2006.

Siemens 2005 Siemens, Ray. “Text Analysis and the Dynamic Edition? A Working Paper, Briefly Articulating Some

Concerns with an Algorithmic Approach to the Electronic Scholarly Edition”.

Text Technology 14 (2005), pp. 91-98.

http://texttechnology.mcmaster.ca/pdf/vol14_1_09.pdf.

Tabbi 2007 Tabbi, Joseph.

Toward

a Semantic Literary Web: Setting a Direction for the Electronic Literature

Organization's Directory.

Electronic Literature Organization. 2007.

http://eliterature.org/pad/slw.html.

Torres 2010 Torres, Rui. “Preservación y diseminación de la literatura electrónica: por un archivo digital

de literatura experimental”. Arizona Journal of

Hispanic Cultural Studies 14 (2010), pp. 281-298.

Torres 2012a Torres, Rui. “Visuality and Material Expressiveness in Portuguese Experimental Poetry”. Journal of Artists' Books 32 (2012), pp. 9-20.

Torres 2013 Torres, Rui, ed.

PO.EX: A Digital Archive of Portuguese Experimental Literature. Porto: Universidade Fernando Pessoa, 2013.

http://www.po-ex.net/.

Torres 2014 Torres, Rui, Manuel Portela, and Maria do Carmo Castelo Branco. “Justificação metodológica da taxonomia do Arquivo Digital da Literatura Experimental Portuguesa”. Poesia Experimental Portuguesa: Contextos, Estudos, Entrevistas, Metodologias. Porto: Edições Universidade Fernando Pessoa [forthcoming].

Torres and Portela 2012 Torres, Rui, and Manuel Portela. “A Bibliography of Portuguese

Experimental Poetry”. Journal of Artists'

Books 32 (2012), pp. 32-35.

Van Hulle and Nixon 2011 Van Hulle, Dirk, and Mark Nixon, eds.

Samuel Beckett Digital Manuscript Project. Centre for Manuscript Genetics (University of Antwerp), the Beckett International Foundation (University of Reading), the Harry Ransom Humanities Research Center (Austin, Texas) and the Estate of Samuel Beckett, 2011-present.

http://www.beckettarchive.org/.

Vanhoutte 2004 Vanhoutte, Edward. “An Introduction to the TEI and the TEI Consortium”. Literary and Linguistic Computing 19: 1 (2004), pp. 9-16.