Abstract

This essay discusses agent-based modeling (ABM) and its

potential as a technique for studying history, including

literary history. How can a computer simulation tell us

anything about the past? This essay has three distinct

goals. The first is simply to introduce agent-based modeling

as a computational practice to an audience of digital

humanists, for whom it remains largely unfamiliar despite

signs of increasing interest. The second is to introduce one

possible application for social simulation by comparing it

to conventional, print-based models of the history of book

publishing. Third, and most importantly, I’ll sketch out a

theory and preliminary method for incorporating social

simulation into an on-going program of humanities

research.

Introduction

This essay will discuss agent-based modeling (ABM) and its

potential as a technique for studying history, including

literary history. When confronted by historical simulations,

scholars first notice their unusual ontological commitments:

a computer model of social life creates a simulated world

and then subjects that world to analysis. On the surface,

computational modeling has many of the trappings of science,

but at their core simulations seem like elaborate fictions:

the epistemological opposite of science or history.

Historical simulation thus straddles two very different

scholarly practices. On the one hand are the generally

accepted practices of empirical research, which look to the

archive for evidence and then generalize based on that

evidence. On the other hand is the new and in many ways

idiosyncratic practice of simulation, which thinks in the

opposite direction. ABM begins with a theory about a real

system and then creates a functional replica of that system.

When confronted with agent-based models, historians often

respond with a knee-jerk (and in many ways, justified)

skepticism about the applicability and usefulness of

artificial worlds. How can a computer simulation tell us

anything about the past? The difficulty of this question

should not be understated. Nonetheless, I will propose that

these forms of intellectual inquiry can productively

coincide, and I’ll map out a research program for historians

curious about the possibilities opened by this new

technique.

This essay has three distinct goals. The first is simply to

introduce agent-based modeling as a computational practice

to an audience of digital humanists, for whom it remains

largely unfamiliar despite signs of increasing interest. The

second goal is to introduce one possible application for

social simulation by comparing it to conventional,

print-based models of the history of book publishing. Third,

and most importantly, I’ll sketch out a theory and

preliminary method for incorporating social simulation into

an on-going program of humanities research.

I: Playing with complexity

Agent-based modeling, sometimes called individual-based

modeling, is a comparatively new method of computational

analysis.

[1] Unlike equilibrium-based

modeling, which uses differential equations to track

relationships among statistically generated aggregate

phenomena — like the effect of interest rates on GDP, for

example — ABM simulates a field of interacting entities

(agents) whose simple individual behaviors collectively

cause larger emergent phenomena. In the same regard, ABM

differs significantly from other kinds of computational

analysis prevalent in the digital humanities. Unlike text

mining, topic modeling, and social-network analysis, which

apply quantitative analysis to already existing text corpora

or databases, ABM creates a simulated environment and

measures the interactions of individual agents within that

environment. According to Steven F. Railsback and Volker

Grimm, ABMs are “models where

individuals or agents are described as unique and

autonomous entities that usually interact with each

other and their environment locally”

[Railsback 2012, 10]. These local

interactions generate collective patterns, and the

intellectual work of ABM centers on identifying the

relationships among individual rules of behavior and the

larger cultural trends they might cause.
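To make the idea of local rules and collective patterns concrete, here is a minimal sketch in Python. It is not drawn from Railsback and Grimm or from any published model; the rule, the function names, and the parameter values are all assumptions invented for illustration. Agents sit in a ring, each holding one of two opinions, and a randomly chosen agent adopts whatever opinion is held by the majority of itself and its two immediate neighbors. The only thing measured is a system-level pattern: how many boundaries separate neighbors who disagree.

```python
import random


def count_boundaries(opinions):
    """Count adjacent pairs of agents (on a ring) who hold different opinions."""
    n = len(opinions)
    return sum(opinions[i] != opinions[(i + 1) % n] for i in range(n))


def run_local_majority(n_agents=60, steps=2000, seed=1):
    """Hypothetical toy model: agents copy the local majority opinion.

    Returns the number of disagreement boundaries before and after the run.
    """
    rng = random.Random(seed)
    # Each agent starts with a random binary opinion.
    opinions = [rng.choice([0, 1]) for _ in range(n_agents)]
    start = count_boundaries(opinions)
    for _ in range(steps):
        i = rng.randrange(n_agents)  # pick one agent at random
        left = opinions[(i - 1) % n_agents]
        right = opinions[(i + 1) % n_agents]
        votes = left + opinions[i] + right
        # Local rule: adopt the majority of self and two neighbors.
        opinions[i] = 1 if votes >= 2 else 0
    return start, count_boundaries(opinions)


if __name__ == "__main__":
    before, after = run_local_majority()
    print("boundaries before:", before, "after:", after)
```

No agent in this sketch knows anything about the ring as a whole, yet isolated dissenters disappear and blocks of agreement form. That gap between the simplicity of the rule and the shape of the aggregate outcome is the kind of relationship the paragraph above describes.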

In this way, agent-based modeling is closely associated with

complex-systems theory, and models are designed to simulate

adaptation and emergence. In the fields of ecology,

economics, and political science, ABM has been used to show

how the behaviors of individual entities — microbes,

consumers, and voters — emerge into new collective

wholes.

[2]

John Miller and Scott Page describe complex systems: “The remarkable thing about social

worlds is how quickly [individual] connections and

change can lead to complexity. Social agents must

predict and react to the actions and predictions of

other agents. The various connections inherent in

social systems exacerbate these actions as agents

become closely coupled to one another. The result of

such a system is that agent interactions become

highly nonlinear, the system becomes difficult to

decompose, and complexity ensues”

[Miller 2007, 10]. At the center of complexity thus rests an underlying

simplicity: the great heterogeneous mass of culture in which

we live becomes reconfigured as an emergent effect of the

smaller, describable choices individuals tend to make. The

intellectual pay-off of social simulation comes when

scholars identify and replicate this surprising disjunction.

As Joshua Epstein and Robert Axtell argue, “it is not the emergent macroscopic

object per se that is surprising, but the generative

sufficiency of the simple local rules”

[Epstein 1996, 52]. In this formulation, to study complex systems is to

wield the procedural operation of computers like Occam’s

Razor — by showing that simple procedures are sufficient to

cause complex phenomena within artificial societies, one

raises at least the possibility that such procedures are

“all that is really

happening” in actual systems [Epstein 1996, 52].

Humanists will be hesitant to accept the value of this (and

should be, I think), and I will return to the notion of

“generative sufficiency”

later. For now I mean only to point out the way

complex-systems theory elevates the local and the simple at

the level of interpretation: to know about the world under

this paradigm is to generate computer simulations that look

in their larger patterns more or less like reality but which

at the level of code are dictated by artificially simple

underlying processes.

The basic work of agent-based modeling involves writing the

algorithms that dictate these processes. Agent-oriented

programs can be written and executed from scratch in any

object-oriented programming language, including Python and

R. However, scholars looking to incorporate agent-based

simulations into their research often rely on out-of-the-box

software packages. Some, like AnyLogic, are proprietary

toolkits designed for commercial applications, but many are

open source. In their comprehensive survey of available

packages, Cynthia Nikolai and Gregory Madey point out that

“different groups of users prefer

different and sometimes conflicting aspects of a

toolkit”

[Nikolai 2009, 1.1]. Social scientists

and humanities-based researchers, they argue, tend to favor

easy-to-learn interfaces that require fewer programming

skills, while computer scientists prefer packages that can

be modified and repurposed.

[3] Without presuming to recommend (even implicitly) one toolkit

over another, and in the hope that my discussion will be

applicable across platforms, I will focus on examples drawn

from NetLogo. NetLogo is a descendent of the Logo

programming language, which was first designed in the 1960s

and became popular in primary and secondary education [

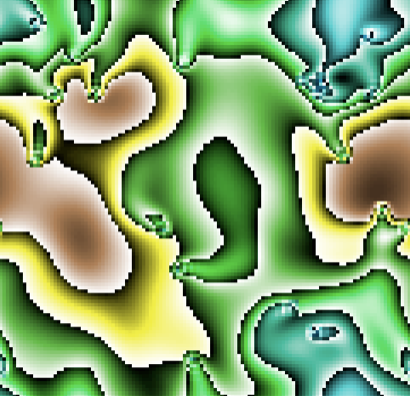

Harvey 1997]. Like its ancestor, NetLogo

creates sprites called “turtles” and moves them

according to unit operations called “procedures”. The

turtles circulate in an open field of “patches,” small

squares which can be assigned variables that change over

time. Like Logo, NetLogo can generate beautiful and

intricate visual displays from comparatively simple

commands. (See Figure 1.)

This ability to visualize the behaviors of many turtles as

they execute their individually determined procedures is

what makes the Logo family of programming languages

particularly suited for agent-based simulations. (RePast

Simphony has adopted a similar vocabulary, called ReLogo,

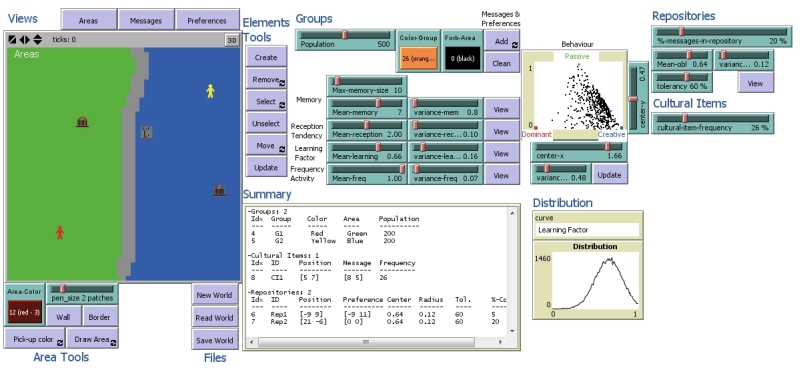

for novice users.) Researchers Juan-Luis Suárez and Fernando

Sancho, inspired by their primary research on the Spanish

baroque as a transatlantic intellectual phenomenon, created

the

Virtual Culture Laboratory

(VCL) to model international cultural transmission.

The VCL (

Figure 2) creates a

field of individual agents that circulate among each other

as they cross artificially abstract cultural boundaries. The

world Suárez and Sancho envision is divided geographically

and demographically: a green nation is separated from a blue

nation, and both are home to agents with a mix of dominant

(red) and creative or passive (yellow) personalities. As the

agents circulate in these regions, they trade messages and

learn from each other. Measuring the agents’ performance

under different conditions allows the researchers to test

theories of intercultural exchange. Suárez and Sancho write,

“Taking the baroque as a cultural

system enables us to observe individual works and their

interactions with the human beings who create,

contemplate and use them, to examine the emergence of

‘cultural’ patterns from these interactions,

and to determine the diverse states of the resulting

culture”

[Suárez and Sancho 2011, 1.11]. In this way,

graphically simple programming environments like NetLogo

allow researchers to create analytically rigorous

representations of complex social systems.

[4]

Of all the genres of computational expression across the

digital humanities, agent-based models might share most in

common with games, in particular what are called

“serious games.” Like games, models

simulate rule-bound behaviors and generate outcomes based on

those rules. Ian Bogost has described games’ intellectual

function: “Games represent how real and

imagined systems work, and they invite players to

interact with those systems and form judgments about

them”

[Bogost 2007, vii]. In this sense, games

involve what Noah Wardrip-Fruin has called

“expressive processing.” He explains:

“When I play a simulation game,

author-crafted processes determine the operations of the

virtual economy. There is authorial expression in what

these rules make possible”

[Wardrip-Fruin 2009, 3–4]. Like designing

serious games, modeling is a form of authorial expression

that uses procedural code to confront complex social and

intellectual problems.

ABM differs from gaming in three key respects, however.

First, it does not usually depend on direct human

interaction, at least not in the same sense as games in

which players move through a navigable space toward a

definite goal [Manovich 2001]. There is no

boss to fight at the end of a social simulation. You do not

play an agent-based model. Instead, you

play with a model, tinkering with its

procedures and changing its variables to test how the code

influences agent behavior.

[5] I sometimes

describe social simulation this way: Imagine a

Sims game in which the player

writes all the behaviors, controls all the variables, and

then sets the system to run on autopilot thousands of times,

keeping statistics of everything it does. This suggests the

second key difference between ABMs and games. With

researcher-generated simulations, the researcher is in

control of the processes and can adjust their constraints

according to her or his interests.

[6] A

fundamental characteristic of social simulations is that

designers can alter the parameters of the model’s function

and thereby generate different, often unexpected patterns of

emergence.

[7]

“While, of course, a model can never go

beyond the bounds of its initial framework,”

Miller and Page write, “this does not imply that it cannot

go beyond the bounds of our initial

understanding”

[Miller 2007, 69]. By pushing against their designers’ expectations,

simulated environments are analogous to experimental

laboratories where hypotheses are tested, confirmed, and

rejected. They join an ongoing process of intellectual

inquiry. “Simulation practices have

their own lives,” philosopher Eric Winsberg

explains. “They evolve and mature over the

course of a long period of use, and they are

‘retooled’ as new applications demand more

and more reliable and precise techniques and

algorithms”

[Winsberg 2010, 45]. Here, then, is the third and most important

difference that separates simulations from games: they

participate in disciplined traditions of scholarly inquiry,

and their results are meant to contribute to research

agendas that exist outside themselves.
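The practice described above, in which the researcher writes the behaviors, sets the parameters, and lets the system run on autopilot many times while keeping statistics, can be sketched in a few lines of Python. Everything here is a hypothetical illustration rather than a model from the literature: the toy “rumor” model, the parameter name `share_prob`, and the default values are assumptions chosen only to show the workflow of repeated runs and parameter variation.

```python
import random
from statistics import mean


def run_once(share_prob, n_agents=50, ticks=30, seed=0):
    """One run of a toy rumor model (an assumption made for illustration).

    Returns the share of agents who have heard the news after `ticks` steps.
    """
    rng = random.Random(seed)
    informed = [False] * n_agents
    informed[0] = True  # a single agent starts out informed
    for _ in range(ticks):
        snapshot = informed[:]  # agents react to the previous tick's state
        for i in range(n_agents):
            if not snapshot[i]:
                partner = rng.randrange(n_agents)  # meet a random agent
                if snapshot[partner] and rng.random() < share_prob:
                    informed[i] = True
    return sum(informed) / n_agents


def sweep(share_probs, runs=200):
    """Run the model `runs` times per parameter value and summarize outcomes."""
    results = {}
    for p in share_probs:
        outcomes = [run_once(p, seed=s) for s in range(runs)]
        results[p] = {
            "mean": mean(outcomes),
            "min": min(outcomes),
            "max": max(outcomes),
        }
    return results


if __name__ == "__main__":
    for p, stats in sweep([0.0, 0.1, 0.5, 1.0]).items():
        print(p, stats)
```

The point of the sketch is the outer loop, not the rumor model: the same code is executed under different settings and different random seeds, and the researcher studies the distribution of outcomes instead of any single run.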

II: Models: epistemology and ontology

However, if we are to simulate responsibly, the above

discussion raises a number of epistemological and

ontological issues that must be acknowledged and dealt with.

These issues can be stated as a pair of questions: What is a

model? How can models be used as instruments of learning? In

the field of humanities computing, Willard McCarty has been

a leading voice.

[8]

Historians have debated the relationship between implicit

models of general human behavior and their larger narratives

that describe the causes of particular historical events.

[9] Outside the humanities, especially

in economics and physics, modeling has a long tradition of

contested commentary [Morrison 1999]. Against

this large and still-growing body of scholarship, it may

seem presumptuous or tedious to offer yet another discussion

of the philosophy of modeling, but I’ve found that a very

particular understanding of the term is useful when thinking

about how computational models can be incorporated into

historical research. The thesis I’ll argue for, in a

nutshell, is that

models don’t represent the world —

they represent ideas. The corollary to this

claim, as it pertains to history, is that

models don’t

represent the past — they represent our ideas about the

past. To begin to show what I mean here and why it might matter for

the practice of historical simulation, allow me to back up

and survey some of the more common uses of the word. In

Languages of Art (1976),

Nelson Goodman describes models in a usefully comprehensive

way: “Few terms are used in popular and

scientific discourse more promiscuously than

‘model.’ A model is something to be admired or

emulated, a pattern, a case in point, a type, a

prototype, a specimen, a mock-up, a mathematical

description — almost anything from a naked blonde to a

quadratic equation — and may bear to what it models

almost any relation of symbolization.”

[10] Goodman

is skeptical that a general theory of modeling is possible,

but if we work through these examples, some general patterns

emerge.

Consider fashion models. It doesn’t seem right to say they

represent people. Models don’t seem to represent actual

human bodies. They represent instead normative ideas about

how the human body should look, as well as, perhaps, ideas

about sexuality and capitalism. Compare fashion models with

model organisms, like fruit flies and lab rats, used by

geneticists and biologists to study living systems. Mice

don’t represent human bodies any more than fashion models

do, but in the field of medical research they serve as

analogues, representatives of mammalian systems in general,

including humans. Like beauty, mammalianism is a concept we

use to categorize bodies. Theoretical models of the kind

used in microphysics represent particles, true, but those

particles are usually not directly observable, and in some

cases they might not even exist [Morgan 1999a].

[11]

Mathematical models common in economics are meant to

represent real economic activity, but, as Kevin Brine and

Mary Poovey have recently argued, economic models remain at

several ontological removes from the world they purport to

describe [Poovey 2013].

[12] Even

mimetic objects like physical scale models, such as the

papier-mâché volcano, serve to illustrate and visualize

ideas about causal forces in geological systems [de Chadarevian 2004].

These different forms of symbolization may have more in

common than Goodman acknowledged. They all share a condition

of exemplarity. None stand in for reality, exactly. None

refer in a straightforward way to the phenomena they purport

to describe; rather, they exemplify the formal

characteristics of those phenomena. If models represent

anything, they describe generic types, categories, theories,

and other structures of relation. This is what I mean when I

say that models represent ideas rather than things.

[13] Models describe the world

analogically by representing their underlying theory

mimetically. In

Science Without Laws:

Model Systems, Cases, and Exemplary Narratives

(2007), editors Angela Creager, Elizabeth Lunbeck,

and M. Norton Wise argue that “model

systems do not directly represent [phenomena] as models

of them. Rather, they serve as exemplars or analogues

that are probed and manipulated in the search for

generic (and genetic) relationships”

[Creager 2007, 2]. As the editors make

clear, those generic and genetic relationships — those kinds

and causes — are not really intrinsic to anything; rather,

they are the concepts and theories that researchers bring to

bear, subject to inquiry, and portray as their

“conclusions.”

In the case of 3D mechanical replicas or computer

simulations, this process of abstraction isolates key

characteristics and behaviors — simple things — that can be

shown to generate more complex, dynamic structures. For

example, the economist and inventor Irving Fisher

popularized the use of mathematical equilibrium-based models

in economics [Morgan 1999b]; [Morgan 2004]; [Poovey 2013]. He

began in the late nineteenth century by building an actual

physical machine designed to represent currency flow. Small

hydraulic presses drove water in and out of the machine

through different tubes — rising and falling water levels

represented the influx or drain of valuable metals in an

economic territory whose currency was still tied to the gold

standard. Fisher had composed a handful of equations that,

he believed, described the flow of currency through an

economy, and he built the hydraulic machine to represent

that theory.

[14] Half

a century later, another economist, A. W. (Bill) Phillips,

built a similar machine called the MONIAC which he

understood as a pedagogical tool [Colander 2011]. By visualizing accepted economic theories of currency

flow, the operation of the machine had an analogical

relationship to actual economic activity. In Mary Poovey’s

and Kevin Brine’s words, “The analog-machine method could

only represent economic processes analogically —

only, that is, by producing a simulation that

reproduced the theoretical assumptions formulated as

equilibrium theory”

[Poovey 2013, 72].

Despite their critical and skeptical tone, Poovey and Brine

point directly to the value of replicas, whether mechanical

or digital. In Science without

Laws, the editors compare generative models to

lab rats and fruit flies. Unlike putatively simple, naive

observation, in which the observer passively receives

information about the external world, the process of

selecting or building models involves replicating one’s own

a priori ideas about how

the world does or might work and then subjecting a

functional representation of those ideas to close

scrutiny.

Building from these general observations, I use the word

“model” in two closely related ways to describe

both the replica or example and the theoretical assumptions

that motivate its creation or selection. At the abstract

level, a model is

any framework of interpretation used

to categorize real phenomena. It might be

specified to the point of being a theory, but it might refer

more generally to the categories, structures, and processes

thought to drive historical change.

[15] In literary theory, model in this sense

relates most closely to ideas of form and genre. At the more

particular level, a model is

any object used to

represent that framework. Such objects might

include a representation, a simulation, a replica, a

case-study, or simply an example.

The advantage of this definition is that it frees models

from the never-realizable expectation that they ought to

represent the world empirically. To return to the example of

laboratory mice, we can see that they represent human bodies

only provisionally and analogically.

[16] Their purpose is to represent an

interpretive framework — a conceptual model of mammalianism

— which is impossible to observe except through particular

cases. Like novels, models are fact-generating machines.

[17] In Bill Phillips’s MONIAC, the waters

rise and fall to measurable levels, and those changes are

real facts, in much the same way that it’s a fact that

Elizabeth Bennet married Mr. Darcy. However, artificial data

like these are true or false only with respect to their

procedural contexts. Out here in the real world, there never

was a real Elizabeth Bennet or Mr. Darcy, and if the blue

water rises in Bill Phillips’s machine, we haven’t

experienced inflation out here in the real world. Nothing

that happens in a simulation ever happens outside the

simulation. What happens in a model stays in a model, so to

speak. Artificial societies exist on their own terms while

providing analogues to the world beyond.

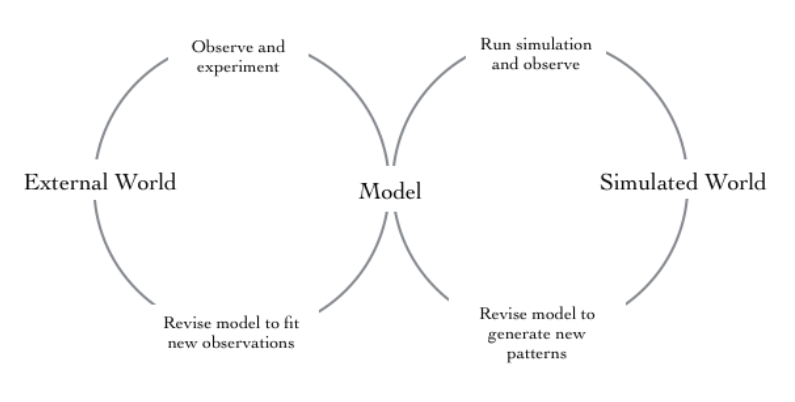

[18] So what does this have to do with history? Generative

modeling strips away the empirical apparatus of

document-based research and creates new facts. It flips and

mirrors the hermeneutic circle such that the whole thing

looks like a figure-8. (See Figure 3.) In the traditional hermeneutic circle the

researcher starts with a theory or model, sometimes

specified to the point of being a hypothesis, and from there

makes observations and experiments out in the real world.

The model is then revised to account for those new

observations. A researcher who builds simulations begins in

more or less the same place, but instead of digging into the

archives or querying Google n-grams, builds a simulated

world that works or doesn’t work according to expectations.

The patterns of behavior within the simulation either match

or fail to match what the designer predicts, and the model

is adjusted accordingly. As a guide to intellectual labor,

the hermeneutic figure-8 presupposes a researcher willing to

traverse all the contours of this line.

III: Models in Literary History

Literary historians use many kinds of models to support many

different kinds of claims. The simplest are classification

concepts like genre, nation, and period, which provide an

interpretive framework against which individual cases are

tested. Other models in literary history are more

complicated. Biographical contextualization creates a model

of some past “context,” usually in the form of

narrative description. Contexts are executed when scholars

use them to speculate about how people in the past might

have interpreted some text or event.

[19] These complex models often

deploy simpler submodels. For example, Michel Foucault

pointed out long ago that “the author” functions as an

interpretive model, and much the same could be said about

“the reader” as imagined in reader-response theory

and book history. The literary canon is itself a great,

capacious representative model. Though often compared to the

canons of scripture, in practice canonical literature has

more in common with the canonical organisms of biomedical

research. Rarely is the literary canon read with

prescriptive veneration, and never with the authority of

law. Instead of “great works” we have a

testing ground where new theories are subject to

examination. Though journalists and graduate students often

wonder what more there is to say about William Shakespeare,

that’s like asking what more there is to know about mice or

fruit flies.

Robinson Crusoe is

canonical in the same way that slime mold is canonical.

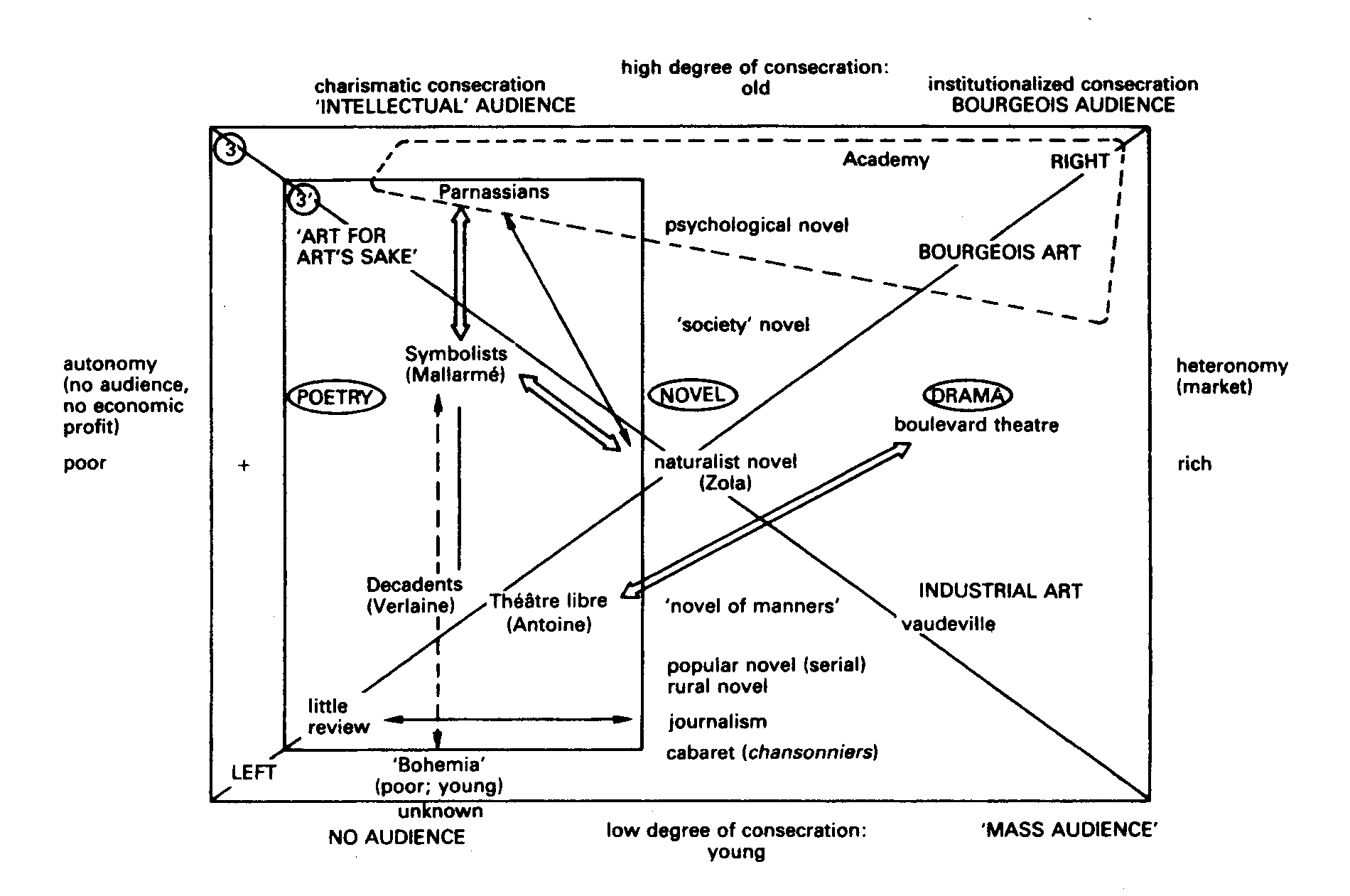

Some of these humanistic models are more amenable to

agent-based computation than others. Perhaps most promising

are those used to describe patterns of social formation,

processes of change, and systems of causation. Such models

usually appear as two-dimensional diagrams. Pierre

Bourdieu’s idea of “the field of

cultural production” advanced a highly abstract,

schematic picture of art, commerce, and politics. (See

Figure 4.)

Agents within these overlapping and competing

fields jostled for prestige, creating a dynamic and adaptive

system highly responsive to the choices made by individual

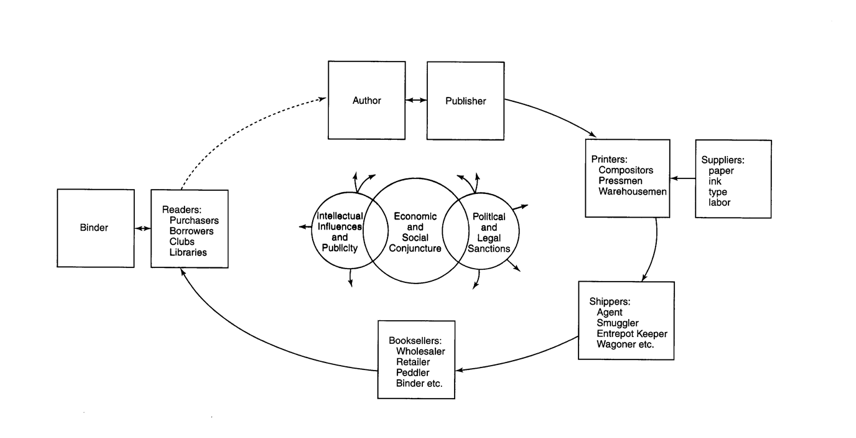

participants. Similarly, Robert Darnton’s model of the

communications circuit identified structural relationships

within the book trade. Ideas and books move throughout nodes

in an always-changing network. (See

Figure 5).

Such diagrams identify kinds of people and a

framework that binds them together. These frameworks — the

field, the circuit — visualize a web of forces that

motivated individual behaviors and caused systems to change

over time.

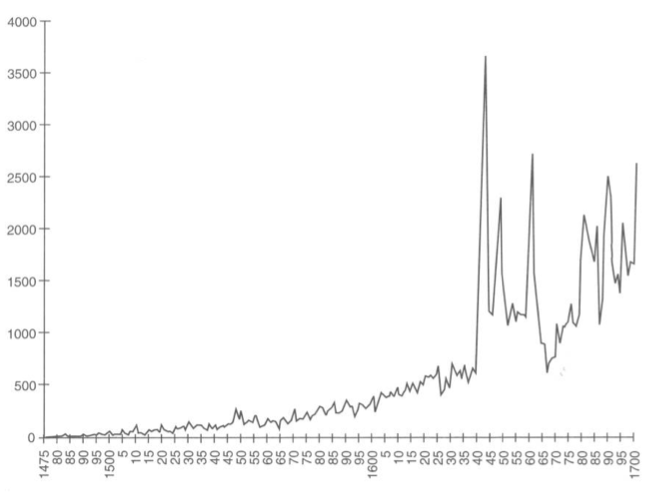

Book historians have also collected a large amount of data

in the form of statistics, although that data tends to be

disconnected and resistant to comparative analysis. It’s

difficult to aggregate much of the historical record

because of inconsistencies and gaps, but some system-level

statistics are available. For example, scholars have

tabulated the number of new books produced in England

annually from the earliest years of the hand-press era. (See

Figure 6.)

For the first 150 years, book production followed

a fairly stable arc of growth. However, this equilibrium was

shattered during the civil wars of the 1640s, when the print

marketplace exploded with political and religious debates.

Historian Nigel Smith has described the event as a “media revolution,” and scholars

often point to the English Civil War as a key early moment

in the development of the free, democratic press [Smith 1994, 24]. On the one hand, the

scandal of war and regicide led to a heightened interest

among readers and seems to have increased demand for printed

books. On the other hand, political instability loosened the

stranglehold that state and commercial monopolies had long

exerted over the book trade.

What relation is there between diagrammatic models like

Bourdieu’s and Darnton’s and aggregate statistics like

these? It turns out, very little. Although economic and

political factors appear as forces in both diagrams, the

models don’t attempt to specify them. Darnton is a prominent

practitioner of “microhistory,” a technique of

historical explanation that performs close analysis of

individuals and “everyday life”; in Darnton’s case,

this means close study of individual members of the book

trade [Brewer 2010]; [Darnton 1984]. In microhistory, these often

overlooked figures are chosen to exemplify how the print

marketplace worked at the local level. Darnton’s model is

thus designed, not to explain large macrolevel patterns, but

instead to provide a heuristic tool for interpreting

particular historical events and persons, who then stand in

as model exemplars for those larger patterns. The

diagrammatic model operates in the service of a bias toward

the individual, particular, and contingent event. A model

like Darnton’s is validated — if “validated” is even

the right word — insofar as it helps scholars describe

particular pieces of evidence found in the archive.

In this respect, the diagrammatic models often drawn by

historians are validated very differently than simulations

like those popular among complex-systems theorists. Within

the field of complexity science, explanation is

“validated” by the model’s ability to replicate

large system-level patterns within simulated worlds.

[20]

If the model can produce visual patterns similar to patterns

produced by the observed record, the possibility is raised

that the moving parts of the model bear some meaningful

analogical relationship to the moving parts of real

processes. Epstein and Axtell argue that “the ability to grow [artificial societies]

— greatly facilitated by modern object-oriented

programming … holds out the prospect of a new,

generative, kind of social science”

[Epstein 1996, 20]. This newness takes

form, not merely as a novel genre of cultural

representation, but as a mode of inquiry that fundamentally

transforms what we think of as historical explanation.

Epstein and Axtell ask, “What

constitutes an explanation of an observed social

phenomenon? Perhaps one day people will interpret the

question, ‘Can you explain it?’ as asking ‘Can

you grow it?’”

I will qualify Epstein’s and Axtell’s dictum below, and I

find the notion that agent-based models can be

“validated” to be highly dubious, but it’s worth

pausing over the radicalism of their anti-historical vision.

The intellectual mandate to “grow” artificial societies

places a wildly different set of demands on models like

Bourdieu’s and Darnton’s. Whereas static models are used as

heuristics for interpreting historical records, simulations

are designed to mimic macroscopic patterns. A generative

model becomes explanatory, they argue, when the simple,

local rules that dictate agent behavior can be shown to

result in complex patterns: to know something as a

complex-systems theorist is to identify this meaningful

disjunction. However, such simple rules can never mimic real

behavior at the microlevel. Converting static models to

dynamic simulations seems to shift the target of explanation

away from particular examples and towards system-level

statistics. Models are useful, according to complexity

theory, not for interpreting particular events but for

generating patterns that look like aggregations of things

that really happened; modeling complexity thus obviates the

need for attention to particulars. Simulations allow us to

see through the mystifying complications of historical

evidence and see in their place the simple processes that

underpin complex systems. Simplicity and complexity are

real, and simulations allow us to see them clearly by

stripping away the illusory contingencies of actuality. Such

is, at least, how I interpret the challenge complex-systems

theory poses to historical explanation.

In order for generative models to contribute to a larger

practice of historical explanation, scholars will need to

reject this theory, I think. The value of modeling will need

to be placed elsewhere. As a group, historians will never

concede that simplicity and complexity are more interesting

than nuance, complication, and ambiguity. Nor should they.

I’ll conclude this essay by arguing that dynamic simulations

work much like heuristic models at the level of historical

interpretation (but better). We can use agent-based models

without taking on board all of complexity theory’s

ontological commitments. For now though, I want to leave

Epstein’s and Axtell’s challenge suspended in the air, like

an unexpected admonition, or like an interdisciplinary dare:

Can we take our ideas, written out in regular academic prose

or drawn as diagrams, and paraphrase them into functional

computer code? Can we generate simulations that behave how

the historical record

we created predicts? If

not, maybe we don’t know what we think we know.

[21]

IV: Growing the communications circuit in a digital petri dish

Following through on this dare requires a new historical

practice. Generative modeling is very different from

archival work (that’s obvious enough), but it’s also very

different from topic modeling and other statistical

techniques. Historical simulation completely sets aside the

basic empirical project of gathering and analyzing documents

from the past. Instead, simulation points back to the

theoretical model itself.

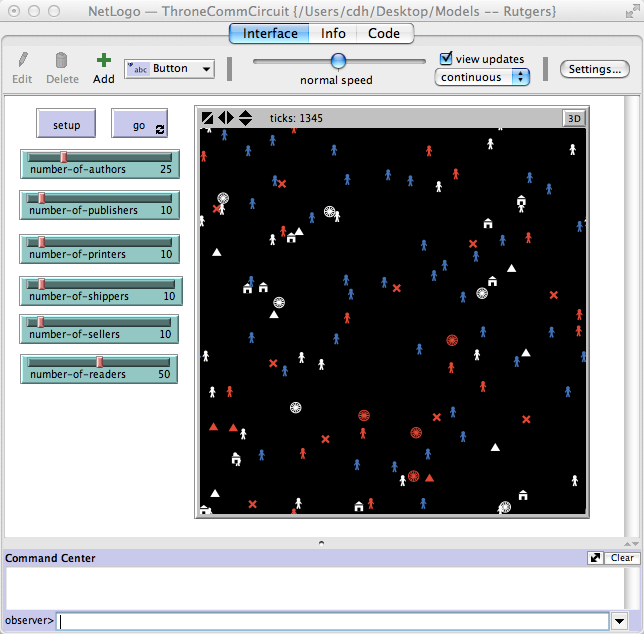

The task of converting Darnton’s model into a functioning

agent-based simulation has already been started by literary

scholar Jeremy Throne, who breaks the model down to six

turtle-types (called “breeds” in NetLogo parlance):

authors, publishers, printers, shippers, sellers, and

readers. Throne created variables to control how many of

each breed are included, and at initialization these turtles

are distributed randomly across a standard-sized field of

patches. At each tick, the turtles move about randomly, and

whenever they bump into each other they perform

transactions: authors present manuscripts to publishers,

who pass them to printers as “jobs”; shippers pick up the

printed books and deposit them with sellers. Readers

purchase the books and complete the circuit by giving

authors encouragement to create more manuscripts. Like

clockwork automata, the now-moving parts of Darnton’s model

create a uniformly bustling field of exchange. (See

Figure 7.)

Throne concedes that “the homogeneous

nature of these businesses is admittedly

unrealistic,” and indeed the immediate reaction

that historians often have when first exposed to agent-based

models is to be taken aback by their obvious, even comical

artificiality [Throne 2011]. In part this is

simply because of the crudity of NetLogo’s animations: the

figures bounce around the field like chunks of exploded

asteroids in the arcade game Asteroids. More

deeply, though, the artificiality of the simulation can be

traced in the code itself; the procedures that dictate

turtle behaviors are radically simplified. For example, here

is Throne’s code that simulates the activity of book-buying:

    to buy-book
      if any? sellers-here with [ inventory > 0 ]         ;; if the reader meets a seller with books to sell
      [ ask one-of sellers-here with [ inventory > 0 ]
        [ set inventory inventory - 1                     ;; take a book from the seller
          if inventory <= 0                               ;; if the seller is out of books
          [ set color white ]                             ;; show that the inventory is gone
        ]
        ask one-of readers-here
        [ set books books + 1                             ;; increase the books a reader has read by one
          set color blue ]
      ]
    end

These twelve lines of code reduce an enormously complicated

cluster of practices surrounding reading to a single

procedure. As “readers” move around the patches

randomly, they’re constantly called upon to

“buy-book.” Book buying in this context means

checking to see if a “seller” turtle happens to sit on

the same patch, verifying that the seller has inventory, and

then taking that inventory.

[22] Already my prose description is more

complicated than the procedure itself. In fact, books as

such don’t exist in the simulation at all. Rather, they

appear only as numerical variables owned by turtles. In the

above code books exist as “inventory” (for sellers) and

“books” (for readers).

[23]

When a reader bumps into a seller, a seller’s

“inventory” score is reduced by one and a reader’s

“books” score is increased by one. That’s all that

happens.

Given its level of abstraction, what relation does this

procedure have to actual book buying or real reading? Throne

describes the procedure as an activity “that may be thought of as reading, purchasing, or

borrowing” — a telling phrase that captures well

his model’s breadth of imaginative application

[Throne 2011]. I say “imaginative” because

there isn’t anything that existed in history that his

readers do, really. They don’t read or purchase or borrow:

their activities

may be thought of as those

things because they are analogues for those things. Throne’s

model is less interested in accounting for the

particularities of the behaviors imagined here than in

demonstrating their function within a larger system of

exchange and circulation. That function can be summed up

like this: Purchasing, sharing, and reading happen when

people encounter media providers, and those activities move

content from providers to the reading populace, and these

transfers, broadly and abstractly construed, bind consumers

to producers in a chain of cultural production.

[24] Such

abstract ideas require a comparably abstract form of

representation. While Darnton’s diagram simplifies this

process down to an arrow, the NetLogo model represents it as

an algorithmic procedure. So, while both models seem

unrealistic and over-simplified when compared to actual

behavior, if we take the idea to be the models’ real

subject, rather than the past

per se, then

neither is reductive at all. The code and the diagram are

neither less realistic nor less valid than generalizations

in prose. Agent-based models don’t reduce life to

abstractions; they bring abstractions to life.

The most salient difference between agent-based simulations

and more traditional forms of historical modeling is not,

then, that simulations are peculiarly abstract, artificial,

or otherwise disconnected from the past. Rather,

conventional forms of historical explanation depend on the

spatialized logic of print, whether in the form of diagrams,

graphs, charts, or simply sequential prose. In such models,

sequence and spatial juxtaposition carry much of the

explanatory load. Agent-based models use algorithmic

processes instead. Alexander Galloway’s comment about video

games applies to ABM as well: like games, simulations are an

“action-based medium” [Galloway 2006]. This means that simulations

like Throne’s are able to point in both directions of what

I’ve called the hermeneutic figure-8. They retain the

capacity to facilitate historical research and explanation,

like any static model, but they also can be activated to

generate behaviors in simulated worlds — behaviors that may

or may not replicate patterns observed in the historical

record or predicted by the underlying assumptions. As

Willard McCarty has argued, models “comprise a practical means of playing out the

consequences of an idea” [McCarty 2008]. In his initial

experiments Throne found, for example, that printers and

shippers were the surprising bottleneck and that the

functioning of his world was more sensitive to disruptions

in production and shipment

than he’d anticipated.

[25] The challenge then becomes one of reconciling these

disruptions with observed patterns in the historical record,

and it is at this point in the explanatory process that

statistics begin to play a valuable role. Statistics do not

“validate” the model, if by validate one means

“prove,” but they facilitate interpretation by identifying

where the model does and does not replicate observed

macroscopic patterns [Dixon 2012].

[26] To return to Throne’s

original, what’s striking is the lack of change over time:

once the turtles are apportioned at initialization, the

system runs without constraint or growth. Without feedback

loops that alter agent behavior, the communications circuit

operates here like a complex system, but not like a complex

adaptive system, and thus its production,

once set, operates at an unrealistically consistent

equilibrium.

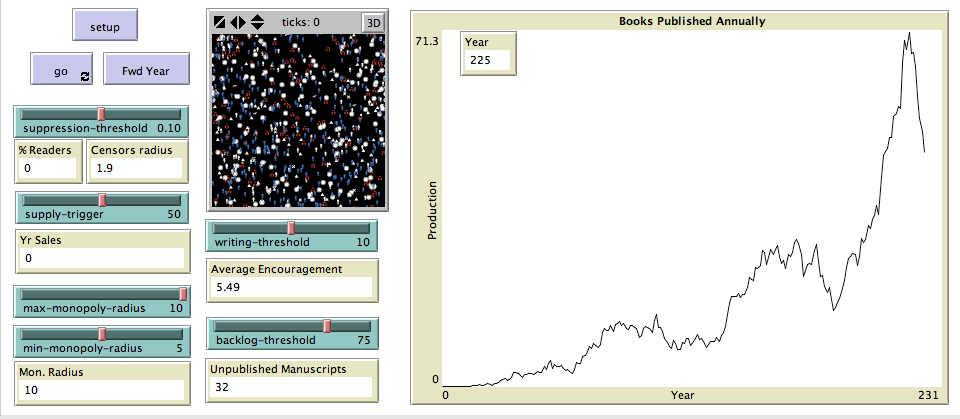

Happily, agent-based models are easy to modify and extend

(surprisingly easy, in fact, to any digital humanist

comfortable with basic coding), and gaps in a model can be

addressed to facilitate new experiments that ask new

questions. In my revision of Throne’s original, I wondered

how economic growth, state suppression, and the exertion of

commercial monopolies might impact book production. My

version argues that growth in demand instigates pressure

from the state and from commercial interests to restrict

production, but that if such restrictions result in too much

pent-up demand, constraints break down in moments of crisis.

The result is a pattern of book production that largely

follows economic growth but much more closely matches the

punctuated equilibrium observed across the hand-press era.

Compare, for example, the pattern of book production

observed over a 225-year period in my version (see

Figure 8) with annual book production in England from 1475

to 1700 (see Figure 6). “Playing with the model” is another

term for “sensitivity analysis,” and it means adjusting the

settings to find out which ones replicate observed cultural

patterns and which cause the system effectively to crash,

and then trying to figure out why. However, the output of a

model will never match the historical data

exactly, nor should it. The purpose of historical simulation

is not to recreate the past but to subject our general ideas

about historical causation to scrutiny and

experimentation.

[27] Readers curious about the model itself are encouraged to

download the file and play with its variables to see how its

constraints and feedback loops modify agent behavior (

http://modelingcommons.org/browse/one_model/4004).
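To make the logic of that argument concrete, here is a deliberately small sketch in Python. It is not the NetLogo model linked above, and none of its names or numbers come from that model: `simulate`, `sweep`, `growth`, `threshold`, and `start_demand` are hypothetical, chosen only to illustrate the mechanism. Demand grows steadily, a cap on production stands in for state and commercial restriction, unmet demand accumulates, and when the backlog exceeds a multiple of the cap the constraint collapses and re-forms at a higher level.

```python
def simulate(years=225, growth=0.01, threshold=2.0, start_demand=100.0):
    """Return (annual output, years in which constraints broke down).

    All parameters are illustrative assumptions, not historical estimates.
    """
    demand = start_demand
    cap = start_demand      # production permitted under restriction
    pent_up = 0.0           # accumulated demand that went unmet
    output, crises = [], []
    for year in range(years):
        demand *= 1 + growth
        produced = min(demand, cap)
        pent_up += demand - produced
        if pent_up > threshold * cap:
            produced = demand   # constraints collapse; output meets demand
            cap = demand        # restriction re-forms at the new level
            pent_up = 0.0
            crises.append(year)
        output.append(produced)
    return output, crises


def sweep(thresholds):
    """A crude sensitivity analysis: count breakdowns for each threshold."""
    return {t: len(simulate(threshold=t)[1]) for t in thresholds}


if __name__ == "__main__":
    output, crises = simulate()
    print("breakdowns at years:", crises)
    print("breakdowns by threshold:", sweep((0.5, 2.0, 8.0)))
```

Output in this sketch rises in steps: long plateaus at the cap, broken by sudden jumps when the backlog forces a breakdown. Raising `threshold` makes the constraints more durable and the jumps rarer, which is the kind of adjustment that “playing with the model” involves.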

As with any new genre of writing, the best way to learn

about it is to compare and contrast texts that tackle

similar questions and problems. Readers are also encouraged

to examine two other models of the historical book trade

that I’ve created. “Bookshops” focuses closely on the

finance of seventeenth- and eighteenth-century book-selling

businesses to explore how changes in demand and costs might

have affected publishers’ decisions about price,

republication, and edition size (

http://modelingcommons.org/browse/one_model/4002).

“The Paranoid Imaginarium of Roger

L’Estrange” is more subjective and speculative:

it attempts to create a working model of how

seventeenth-century state censors imagined a print

marketplace of scurrilous and seditious publication, where

voraciously scandal-mongering readers threaten to disrupt

the populace and therefore require strict policing to

prevent social breakdown (

http://modelingcommons.org/browse/one_model/4003).

NetLogo models can also be used to address questions of

literary form. For example, Graham Sack has created two

models of interest: one that simulates the fictional social

networks of nineteenth-century novels and another that

adapts models of biological evolution to simulate the

evolution of literary genres [Sack 2011]; [Sack 2013].

Surveying these examples, as well

as models publicly available at

http://modelingcommons.org or the sample library

included with NetLogo, may provide scholars a glimpse of

this nascent genre’s potential as a tool of historical

explanation.

Humanistic problems that could be tackled with agent-based

modeling include (but are not limited to):

History of commerce. As the examples of book

history above suggest, agent-based computation is

well-suited to model production and distribution networks.

Indeed, the most important commercial applications of ABM

look at systems dynamics and logistics, and there’s no

reason why ABM couldn’t be used to study historical systems.

How did communication and transportation networks evolve?

How did new technologies (telegraph, railroad) affect

commerce, and at what points were those networks most

vulnerable? What factors were most important to their

development?

Political and military history. In the social

sciences, ABM is often used to examine phenomena like voter

affiliation. Applied to historical cases, it could be used

to answer a wide range of questions. What caused the

emergence of partisan politics in the eighteenth century?

How did the consolidation of nation-states in the nineteenth

century lay the conditions for the global wars of the

twentieth? How did social movements form and deform? What

conditions were needed for twentieth-century political

advocacy to manifest as social change?

History of literature and philosophy. The

material concerns of politics and commerce matter for

literature as well. How did competition between publishers,

theaters, film companies, authors, editors, unions,

typesetters, and other stakeholders affect the production of

books, plays, and movies? More abstractly, scholars might

use ABM to model interpretive difficulties. Through what

process do genres devolve into parody? What is

“originality,” when is it recognized,

and under what conditions is it valued? What factors are the

most important drivers of “paradigm shift”? How do ideas

change over time?

In all of these cases, agent-based models will never be able

to establish definitively what happened in the past.

However, they could be used in each case to specify

scholars’ ideas about historical processes while subjecting

those ideas to a challenging form of scrutiny.

In conclusion, three points are worth emphasizing. First,

simulation does not and will never replace document-based

research as the historian’s primary activity. As hermeneutic

tools, agent-based models work much like traditional

diagrams: they articulate a cluster of general assumptions

and make those assumptions available as a guide to

interpretation.

[28] However, second,

simulation is an action-based medium that makes those

assumptions more explicit and enables experiments to test

their internal consistency. When agents don’t behave how the

designer expects them to, debugging, expanding, or otherwise

modifying the model becomes a process of intellectual

inquiry that subjects the designer’s ideas to a frustrating

but invigorating process of reformulation. Third,

statistical comparison is an important tool for testing that

consistency, but such comparisons don’t suggest (pace

complex-systems theory) that simple processes are “all that is really happening.”

Instead, statistical confirmation and its breakdown identify

moments of analogy between the model and the past, as well

as (just as usefully) moments of dissimilarity between

them.

This last point suggests that any historical simulation’s

success will not be determined by its verisimilitude. Any

model that was sophisticated and complicated enough to

represent faithfully the multitudinous totality of the past

would be every bit as inscrutable as that past. Rather,

models should be judged by their capacity to facilitate

interpretation and explanation. In practical terms, this

means that ABMs targeted toward an audience of historians

will need to be thesis-driven and richly documented with

primary and secondary sources, demonstrating both the

model’s macrolevel similarity with historical patterns and

its value as a heuristic device for explaining particular

events or interpreting historical texts. Ultimately,

agent-based models don’t need to tell us something new, but

they should help us say something new.

Works Cited

Adams and Barker 2006 Adams,

Thomas R. and Nicolas Barker. “A New

Model for the Study of the Book,” in The Book History Reader, 2nd ed.,

ed. David Finkelstein and Alistair McCleery (New York:

Routledge, 2006).

Barnard and McKenzie 2002 Barnard, John and D. F. McKenzie, eds. with the assistance

of Maureen Bell. The Cambridge History

of the Book in Britain: vol. 4. (Cambridge:

Cambridge University Press, 2002).

Bogost 2007 Bogost, Ian.

Persuasive Games: The Expressive

Power of Videogames (MIT Press, 2007),

vii.

Bonnett 2007 Bonnett, John.

“Charting a New Aesthetics for

History: 3D, Scenarios, and the Future of the

Historian’s Craft,”

L’histoire Sociale / Social

History 40, 79 (May 2007): 169–208.

Bourdieu 1993 Bourdieu,

Pierre. The Field of Cultural

Production: Essays on Art and Literature (New

York: Columbia University Press, 1993).

Brewer 2010 Brewer, John.

“Microhistory and the Histories of

Everyday Life,” CAS

eSeries 5 (2010).

Bunzl 1997 Bunzl, Martin.

Real History: Reflections on

Historical Practice (London and New York:

Routledge, 1997).

Carmichael 2013 Carmichael, Ted and Mirsad Hadzikadic. “Emergent Features in a General Food Web Simulation:

Lotka-Volterra, Gause’s Law, and the Paradox of

Enrichment,”

Advances in Complex Systems

(April 2013).

Colander 2011 Colander,

David. “The MONIAC, Modeling, and

Macroeconomics,” Economia

Politica 28, 1 (2011): 63–82.

Creager 2011 Creager, Angela

N. H., Elizabeth Lunbeck, and M. Norton Wise, eds. Science Without Laws: Model Systems,

Cases, Exemplary Narratives, Science and Cultural

Theory (Durham: Duke University Press,

2007).

Darnton 1984 Darnton, Robert. The Great Cat Massacre and Other Episodes

in French Cultural History (New York: Vintage

Books, 1984).

Darnton 2006 Darnton, Robert.

“What is the History of

Books?” in The Book History

Reader, 2nd ed., ed. David Finkelstein and

Alistair McCleery (New York: Routledge, 2006).

Dean 2000 Dean, Jeffrey S.,

George J. Gumerman, Joshua M. Epstein, Robert L. Axtell,

Alan C. Swedlund, Miles T. Parker, and Stephen McCarroll,

“Understanding Anasazi Culture

Through Agent-based Modeling,” in Dynamics in Human and Primate Societies:

Agent-Based Modeling of Social and Spatial

Processes, ed. Timothy A. Kohler and

George J. Gumerman (Oxford: Oxford University Press, 2000),

179–206.

Dixon 2012 Dixon, Dan. “Analysis Tool or Research Methodology? Is

There an Epistemology for Patterns?” in Understanding Digital Humanities,

ed. David Berry (Basingstoke; New York: Palgrave

Macmillan, 2012), 191-209.

Düring 2011 Düring, Marten.

“The Potential of Agent-Based

Modeling for Historical Research,” forthcoming in

Complex Adaptive Systems: Energy,

Information and Intelligence: Papers from the 2011 AAAI

Fall Symposium.

Epstein 1996 Epstein, Joshua

and Robert Axtell, Growing Artificial

Societies: Social Science from the Bottom Up

(Cambridge: MIT Press, 1996).

Gallagher 2007 Gallagher,

Catherine. “The Rise of

Fictionality,” in The Novel, ed. Franco Moretti,

vol. 1, 2 vols. (Princeton: Princeton University Press,

2007).

Galloway 2006 Galloway,

Alexander. Gaming: Essays on

Algorithmic Culture (Minneapolis: University of

Minnesota Press, 2006).

Griere 1999 Giere, Ronald N.

Science Without Laws

(Chicago: University of Chicago Press, 1999).

Griesemer 2004 Griesemer,

James. “3-D Models in Philosophical

Perspective,” in Models: The

Third Dimension of Science, ed. Soraya de

Chadarevian and Nick Hopwood (Stanford: Stanford University

Press, 2004).

Harvey 1997 Harvey, Brian.

Computer Science Logo Style:

Symbolic Computing, 2nd ed. (MIT Press,

1997).

Hubbard 2007 Hubbard, E. Jane

Albert. “Model Organisms as Powerful

Tools for Biomedical Research,” in Science Without Laws, ed. Angela

N. H. Creager, Elizabeth Lunbeck, and M. Norton Wise

(Durham: Duke University Press, 2007).

Korb 2013 Korb, Kevin, Nicholas

Geard, and Alan Dorin. “A Bayesian

Approach to the Validation of Agent-Based

Models,” in Ontology,

Epistemology, and Teleology for Modeling and

Simulation, ed. Andreas Tolk (Berlin: Springer,

2013).

Liu 2013 Liu, Alan. “The Meaning of the Digital

Humanities,”

PMLA 128, 2 (2013).

Lytinen 2012 Lytinen, Steven

L. and Steven F. Railsback. “The

Evolution of Agent-based Simulation Platforms: A Review

of NetLogo 5.0 and ReLogo,”

European Meetings on Cybernetics and

Systems Research (2012).

Manovich 2001 Manovich, Lev.

The Language of New Media

(Cambridge: MIT Press, 2001).

McCarty 2005 McCarty,

Willard, Humanities Computing

(Basingstoke; New York: Palgrave Macmillan, 2005).

Miller 2007 Miller, John H.

and Scott E. Page, Complex Adaptive

Systems: An Introduction to Computational Models of

Social Life (Princeton: Princeton University

Press, 2007).

Morgan 1999a Morgan, Mary S.

“Learning from Models,” in

Models as Mediators: Perspectives

on Natural and Social Sciences, ed. Mary S.

Morgan and Margaret Morrison (Cambridge: Cambridge

University Press, 1999), 347–88.

Morgan 1999b Morgan, Mary S.

and Margaret Morrison, eds., Models as

Mediators: Perspectives on Natural and Social Sciences,

Ideas in Context (Cambridge; New York: Cambridge

University Press, 1999).

Morgan 2004 Morgan, Mary S.

and Marcel Boumans, “Secrets Hidden by

Two-Dimensionality: The Economy as a Hydraulic

Machine,” in Models: The

Third Dimension of Science, ed. Soraya de

Chadarevian and Nick Hopwood (Stanford: Stanford University

Press, 2004).

Morrison 1999 Morrison,

Margaret. “Models as Autonomous

Agents,” in Models as

Mediators: Perspectives on Natural and Social Sciences,

Ideas in Context, ed. Mary S. Morgan and

Margaret Morrison (Cambridge; New York: Cambridge University

Press, 1999).

Nikolai 2009 Nikolai, Cynthia

and Madey, Gregory, “Tools of the Trade:

A Survey of Various Agent Based Modeling

Platforms,”

Journal of Artificial Societies and

Social Simulation 12, 2 (2009).

http://jasss.soc.surrey.ac.uk/12/2/2.html

Poovey 2013 Poovey, Mary and

Kevin R. Brine, “From Measuring Desire

to Quantifying Expectations: A Late Nineteenth-Century

Effort to Marry Economic Theory and Data,” in

“Raw Data” is an

Oxymoron, ed. Lisa Gitelman (MIT Press, 2013),

61–75.

Railsback 2012 Railsback,

Steven F. and Volker Grimm, Agent-based

and Individual-based Modeling: A Practical

Introduction (Princeton: Princeton University

Press, 2012).

Ramsay 2003 Ramsay, Stephen.

“Toward an Algorithmic

Criticism,”

Literary and Linguistic Computing

18, 2 (2003).

Robertson 2005 Robertson,

Duncan, “Agent-Based Modeling

Toolkits,”

Academy of Management Learning and

Education 4, 4 (2005): 525–27.

Sack 2011 Sack, Graham. “Simulating Plot: Towards a Generative

Model of Narrative Structure,” in Complex Adaptive Systems: Energy,

Information and Intelligence: Papers from the 2011 AAAI

Fall Symposium.

Sack 2013 Sack, Graham. “Character Networks for Narrative

Generation: Structural Balance Theory and the Emergence

of Proto-Narratives.”

Proceedings of 2013 Workshop on

Computational Models of Narrative (CMN 2013),

ed. Mark A. Finlayson, Bernhard Fisseni, Benedikt Löwe,

and Jan Christoph Meister. August 4–6, 2013.

Schell 2008 Schell, Jesse.

The Art of Game Design: A Book of

Lenses (Taylor & Francis US, 2008).

Smith 1994 Smith, Nigel. Literature and Revolution in England,

1640-1660 (New Haven: Yale University Press,

1994).

Suárez and Sancho 2011 Juan-Luis

Suárez and Fernando Sancho, “A Virtual

Laboratory for the Study of History and Cultural

Dynamics,”

Journal of Artificial Societies and

Social Simulation 14, 4 (2011).

http://jasss.soc.surrey.ac.uk/14/4/19.html

Throne 2011 Throne, Jeremy.

“Modeling the Communications

Circuit: An Agent-based Approach to Reading in

‘N-Dimensions’,” forthcoming in Complex Adaptive Systems: Energy,

Information and Intelligence: Papers from the 2011 AAAI

Fall Symposium.

Waldrop 1993 Waldrop,

Mitchell M. Complexity: The Emerging

Science at the Edge of Order and Chaos (New

York: Simon & Schuster, 1993).

Wardrip-Fruin 2009 Wardrip-Fruin,

Noah. Expressive Processing: Digital

Fictions, Computer Games, and Software Studies

(MIT Press, 2009).

Weingart Weingart, Scott. “Abduction, Falsifiability, and Parsimony:

When is ‘good enough’ good enough for simulating

history?” (unpublished draft).

Winsberg 2010 Winsberg, Eric. Science in the Age of Computer

Simulation (Chicago: University of Chicago Press,

2010).

de Chadarevian 2004 de

Chadarevian, Soraya and Nick Hopwood, eds., Models: The Third Dimension of

Science (Stanford: Stanford University Press,

2004).