Abstract

Computer-aided research in the humanities has been inhibited by the prevailing

paradigm of software design in humanities computing, namely, the document

paradigm. This article discusses the limitations of the document paradigm and

contrasts it with the database paradigm. It describes a database-oriented

approach that provides a better way to create digital representations of

scholarly knowledge, allowing individual observations and interpretations to be

shared more widely, analyzed more effectively, and preserved indefinitely.

Introduction

Computer-aided research in the humanities has been inhibited by the prevailing

paradigm of software design in humanities computing (or, as it is now called,

digital humanities). The prevailing paradigm is so pervasive and has been

entrenched for so long that many people have difficulty imagining an

alternative. We are referring to the document paradigm of software design, in

which the structure of pre-digital documents is the basis for the data

structures in which information is represented digitally. In the document

paradigm, the digital representation of information depends on the relative

position of units of information in one or two dimensions. Information is

represented by linear character strings or by tables consisting of rows and

columns, as on a flat printed page.

These position-dependent data structures are familiar to people who have been

trained to read books, which accounts for their popularity. But they fail to

meet the needs of scholars if they are used, not simply as intuitive display

formats, but as the basic organizing structures for the digital representation

of scholarly information. We pay a steep price for clinging to convenient

analogies like “line,”

“ledger,”

“page,”

“book,” and “library” insofar as we treat them as basic structures in

our software rather than merely convenient ways to present information to

end-users. By organizing information within position-dependent data structures

limited to one or two dimensions, such as strings and tables, we fail to

represent the full range of scholarly observations and interpretations in a

predictable and semantically rich digital form that permits powerful automated

comparisons and analyses.

[1]For example, in the document paradigm a text will be represented as a long

sequence of numeric codes in which each code represents a character in a writing

system (e.g., the standard ASCII numbers used for characters in the Latin

alphabet). Some of the characters may constitute annotations or “markup

tags” interspersed among characters that represent the text itself, but

both the text and its markup will be represented by a single string of character

codes. A character string with embedded markup tags can be interpreted as a

hierarchical “tree” of textual components, yielding a more complex data

structure. However, the semantic potential of the tree structure will be limited

by the fact that the markup tags occur at particular positions within what is,

ultimately, just a one-dimensional sequence of character codes.

This widely used method of text encoding is sufficient for many purposes in daily

life and in research outside the humanities. But it has been borrowed

inappropriately from non-scholarly domains to be used for literary and

historical texts that are objects of study in their own right. Some kinds of

scholarly work can be done using this method, but it imposes unnecessary limits

if it is used as the primary means of representing a text. It deprives scholars

of the power to express in digital form many of the conceptual distinctions they

employ routinely in the course of their work. They end up imitating the

position-dependent structure of a pre-digital medium rather than exploiting the

potential of the digital medium to represent their information in a more

effective way. As a result, they fail to capture in an explicit, searchable form

the different ways a given text has been read and annotated, making it difficult

to use computational methods to compare and analyze the various interpretations.

And it is precisely those texts which are open to many different readings that

are of greatest interest to scholars in the humanities, who spend considerable

time tracing the history of textual interpretation and the interconnections

within and among texts — tasks that could be greatly facilitated by the

appropriate digital tools.

The alternative to the document paradigm is the database paradigm, which is

characterized by data structures that transcend the position-dependent structure

of pre-digital documents. Database systems make use of unique

“keys” and internal indexes to retrieve and recombine

atomized units of information in a flexible manner. The database paradigm has

been wrongly neglected in the humanities because it is thought to be unsuitable

for free-form texts. Databases are thought to be suitable only for highly

structured tables of data, which is what many people think of when they hear the

word “database.” But there is a great deal of predictable structure both

within texts themselves and within scholarly analyses of them — structure of a

kind that is best represented digitally by means of a properly atomized and

keyed database.

Moreover, a data table is itself a document and not a database. Even if we were

to aggregate separate tables into a collection of tables, we would not thereby

create a database, properly speaking, regardless of whether we use database

software to manage the various tables. According to the document-versus-database

distinction employed in this article, a collection of tables would constitute a

database only if the tables were linked via key fields and were

“normalized” to minimize the duplication of information,

necessitating “joins” among two or more tables to produce a

dynamic view of the atomized information in response to a particular

query.

[2] Thus, the

distinction between documents and databases is not a distinction between

unstructured textual information and structured tables. Rather, it is a

distinction between position-dependent data structures that mimic pre-digital

documents — be they one-dimensional character strings or two-dimensional tables

— and the atomized, flexible, and hence multi-dimensional structure of a true

database.

Unfortunately, because of the pervasiveness of the document paradigm, which has

become a deeply engrained tradition in humanities computing, scholarly software

development has focused on digital documents. There are a few exceptions but on

the whole there has been very little effort expended to develop database systems

for research in the humanities, even though there has been no insuperable

technical barrier to doing so in the past forty years, since the advent of the

relational data model and general-purpose database querying languages. Moreover,

in the last ten years, non-relational data models and querying languages have

emerged that make possible the creation of powerful database systems that

preserve the best features of relational systems but can more easily accommodate

texts.

[3] It is

time to embrace the database paradigm in digital humanities and invest more

effort in developing software within that paradigm instead of within the

document paradigm. This is the best way to create digital representations of

scholarly knowledge that can store individual observations and interpretations

in a manner that allows them to be shared more widely, analyzed more

effectively, and preserved indefinitely.

In the remainder of this six-part article we will explain in more detail why the

database paradigm is better for digital research in the humanities than the

document paradigm. We will start in Part One by discussing the nature of digital

texts and the “problem of overlapping hierarchies” that

plagues the widely used linear-character-sequence method of digitizing texts.

This text-encoding method conforms to the document paradigm but it cannot cope

with multiple readings of the same text, which are best represented digitally as

overlapping hierarchies consisting of the same textual components. The inability

of this method to represent overlapping hierarchies limits its usefulness for

scholarly research.

In Part Two, we examine how it was that the document paradigm came to dominate

software design in the humanities, despite its limitations. We will summarize

the parallel histories of the document paradigm and the database paradigm since

they emerged in the 1960s in order to understand the difference between them.

And we will see how, in recent years, the document paradigm has enriched the

database paradigm and made it even more suitable for computational work in the

humanities.

In Part Three, looking forward, we discuss in general terms how to transcend the

document paradigm and work within the database paradigm in a way that enables

scholars to represent all the entities, properties, and relationships of

interest to them, including multiple readings of the same text that are

represented by means of overlapping hierarchies. This requires an

“item-based” atomization of information. A suitably

designed database system that implements an item-based ontology

[4] can

allow us to represent scholarly knowledge in a highly flexible but still

predictable form — a digital form of knowledge whose schema is sufficiently

rich, semantically, to permit efficient automated searches, while still making

it easy for researchers to reconfigure their data and to integrate it tightly

both within and across individual research projects.

In Part Four, we describe a specific example of how this atomized item-based

ontology has been implemented in a working database system in use at the

University of Chicago. This is a multi-user, multi-project, and highly scalable

non-relational database system that takes advantage of recent innovations in

database software. It relies on a standardized data format (XML) and

corresponding querying language (XQuery) that have been incorporated into

high-performance database software over the past decade as an alternative to

relational tables and the SQL querying language. We have therefore been able to

borrow many of the best features of older relational database systems while

working more easily with the complex overlapping hierarchies that characterize

data in the humanities.

In Part Five, we explain how a hierarchical, item-based ontology implemented in a

database system allows us to go beyond isolated data silos to a sustainable

online research environment that encompasses and integrates a vast array of

information of interest to scholars — information that can be rigorously

searched and analyzed, in the spirit of the Semantic Web, overcoming the

limitations of the traditional document-oriented (and semantically impoverished)

World Wide Web. Texts and the many ways of reading them are only one example of

the information we need to search and analyze. The same generic ontology can be

used for other kinds of information pertaining to persons, places, artifacts,

and events. This enables interoperability and economies of scale among a wide

range of users. To realize the full benefits of digitization in the humanities,

our software tools should allow us to capture explicitly, in a reproducible

digital form, all the distinctions and relationships we wish to make, not just

in textual studies but also in archaeology, history, and many other cultural and

social disciplines. This raises the important issue of “data

integration” and how it can best be achieved, which is dealt with

in Part Six.

In Part Six of this article, we conclude by exploring the implications of the

digital ontology we are advocating with respect to the question of whether

digitization necessarily forces us to standardize our modes of description or

whether we can obtain the benefits of structured data and powerful automated

queries without conceding semantic authority to the designers and sponsors of

the software we use. We argue that standardization of terminologies and

classifications is not necessary for data integration and large-scale querying.

A suitably designed item-based system allows us to safeguard the ontological

heterogeneity that is the hallmark of critical scholarship in the humanities.

Overlapping textual hierarchies are just one example of this heterogeneity,

which permeates scholarly work and should not be suppressed by standardized

document-tagging schemes or rigid database table formats.

1. The problem of overlapping hierarchies

In order to understand why the document paradigm leads to inadequate digital

representations of scholarly texts, we must first define what we mean by a

“digital text.” One way of digitizing a text is to make a

facsimile image of it — for example, scanning it to produce a bitmapped

photograph — for the purpose of displaying it to human readers. However, a great

deal of work in digital humanities is based, not on facsimile images, but on the

digitizing of texts in a form that allows automated searching and analysis of

their contents. Yet, when we go beyond making a visual facsimile of a text and

try to represent its meaningful content, then what we are actually digitizing is

not the text per se but a particular reading of it.

The standard method of creating a digital text from a non-digital original

involves the character-by-character encoding of the text as a sequence of

numbers using one number per character. But this kind of encoding represents

just one potentially debatable interpretation of the physical marks on an

inscribed medium. Even in the simplest character-by-character encoding of a

text, without any editorial annotations in the form of embedded markup, choices

are being made about how to map the marks of inscription onto standard numeric

codes that represent characters in a particular writing system. Moreover, all

character-encoding schemes, such as ASCII and Unicode, themselves embody prior

interpretive choices about how to represent a given writing system and,

crucially, what to leave out of the representation. These choices were not

inevitable but must be understood historically with respect to particular

individuals and institutional settings. There is a historically contingent

tradition of encoding characters electronically that began long ago with Samuel

Morse and the telegraph, with the long and short signals of the Morse

code.

[5] When

we speak of digital texts we should remember that every encoding of a

non-digital work is a reductive sampling of a more complex phenomenon. The

original work is represented by sequences of binary digits according to some

humanly produced and potentially debatable encoding scheme.

[6]For example, the Unicode Consortium makes debatable choices about what to include

in its now ubiquitous character-encoding standard [

http://www.unicode.org]. Even though

it gives codes for many thousands of characters, it does not attempt to capture

every graphic variant, or allograph, of every character in every human writing

system. This is understandable as a practical matter but it creates problems for

researchers for whom such variations are themselves an important object of study

because they indicate scribal style, networks of education and cultural

influence, diachronic trends, and so on. So we should start by acknowledging

that digitally representing a text as a sequence of standard character codes

really represents just one possible reading of the text; and, furthermore, this

representation is encoded in a way that reflects a particular interpretation of

the writing system used to create the text in the first place.

The problem is that many texts are open to multiple readings, both on the

epigraphic level and on the grammatical or discourse level, and yet the dominant

text-encoding method in use today, which is characterized by linear

character-code sequences with embedded markup, is not capable of representing

multiple readings of the same text in a workable manner. This is a serious

problem for scholars in the humanities, in particular, for whom the whole point

of studying a text is to come up with a new or improved interpretation of it. It

is an especially pressing concern in fields of research in which divergent

readings occur at quite low levels of grammatical interpretation or epigraphic

decipherment. For example, when working with writing systems that do not have

word dividers, as is common in ancient texts, we often face ambiguous word

segmentations. And many texts have suffered physical damage or are inscribed in

an ambiguous way, giving rise not only to debatable word segmentations on the

grammatical level but also to debatable epigraphic readings.

The problem is perhaps obvious in the case of philological work on manuscripts,

in which we need to record the decisions made by editors and commentators in

such a way that we can efficiently display and compare different readings of a

text whose physical condition, language, or writing system make it difficult to

understand. However, the problem arises also with modern printed texts and even

with “born digital” texts. Useful work on such texts can of

course be done with simple linear character encodings (with or without markup

tags) by means of string-matching searches and other software manipulations that

conform to the document paradigm. But a one-dimensional encoding cannot capture,

in a way that is amenable to automated querying, the decisions made by different

scholars as they come up with different ways of analyzing a text and of relating

its components to one another or to another text entirely.

With the wealth of software tools at our disposal today, scholars should not have

to settle for a method of digitizing texts that cannot easily accommodate

multiple readings of the same text. They ought to be able to represent in

digital form various ways of reading a text while capturing the fact that these

are interpretations of the same text. Computers should help scholars debate

their different readings by making it easy to compare variations without

suppressing the very diversity of readings that motivates their scholarly work.

But the only way to do this is by abandoning the deeply engrained tradition of

treating digital texts as documents, that is, as data objects which are

configured in one or two dimensions. Scholarly texts should instead be

represented in a multi-dimensional fashion by means of a suitably designed

database system.

To understand the limitations of the document-oriented approach, it is worth

examining the scholarly markup standard promulgated by the Text Encoding

Initiative (TEI), an international consortium founded in 1987 (see

http://www.tei-c.org/index.xml; [

Cummings 2007]). As we

have said, the dominant method of text-encoding for scholarly purposes is not

capable of representing multiple readings of the same text in a workable manner.

The TEI markup scheme is the best-known example of this method. We cite it here

simply as one example among others, noting that most other encoding schemes for

textual corpora work in a similar way and have the same limitations. We note

also that a number of scholars involved in the TEI consortium are well aware of

these limitations and do not claim to have overcome them.

A digital text representation that follows the TEI standard contains not just

character codes that comprise the text itself but also codes that represent

markup tags interspersed within the text. The creators of the TEI scheme and

similar text-encoding schemes have assumed that embedding annotations within

character sequences in the form of markup tags yields a text-representation that

is rich enough for scholarly work. However, as Dino Buzzetti has emphasized, the

markup method has significant disadvantages [

Buzzetti 2002]

[

Buzzetti 2009]

[

Buzzetti and McGann 2006]. As Buzzetti says, drawing on the Danish linguist

Louis Hjelmslev’s distinction between “expression” and “content,” and

citing Jerome McGann’s book

The Textual Condition

[

McGann 1991]:

From a semiotic point of view the

text is intrinsically and primarily an indeterminate system. To put it

briefly, there are many ways of expressing the same content just as

there are many ways of assigning content to the same expression.

Synonymy and polysemy are two well-known and mutually related linguistic

phenomena.

[Buzzetti 2009, 50]

Thus, in an essay that Buzzetti co-authored with McGann, the following

question is raised:

Since text is dynamic and mobile

and textual structures are essentially indeterminate, how can markup

properly deal with the phenomena of structural instability? Neither the

expression nor the content of a text are given once and for all. Text is

not self-identical. The structure of its content very much depends on

some act of interpretation by an interpreter, nor is its expression

absolutely stable. Textual variants are not simply the result of faulty

textual transmission. Text is unsteady, and both its content and

expression keep constantly quivering.

[Buzzetti and McGann 2006, 64]

The same point could no doubt be expressed in a less structuralist way,

but we are not concerned here with the particular literary theory that underlies

this way of stating the problem. Regardless of how they express it, Buzzetti and

McGann are surely right to say that the difficulties encountered in representing

multiple readings of the same text constitute a major deficiency in the

markup-based technique commonly used to digitize scholarly texts (see also [

McGann 2004]).

Moreover, as they acknowledge, this deficiency was recognized very early in the

history of the Text Encoding Initiative and has been discussed repeatedly over

the years (see, e.g., [

Barnard et al. 1988]; [

Renear et al. 1993]). Almost twenty years ago, Claus Huitfeldt published a perceptive and

philosophically well-informed critique of the TEI encoding method, entitled

“Multi-Dimensional Texts in a One-Dimensional

Medium”

[

Huitfeldt 1995]. He described the conventional method of

text-encoding in which “a computer represents a text as

a long string of characters, which in turn will be represented by a

series of numbers, which in turn will be represented by a series of

binary digits, which in turn will be represented by variations in the

physical properties of the data carrier”

[

Huitfeldt 1995, 236] as an attempt to represent multi-dimensional texts in a one-dimensional

medium.

However, despite his accurate diagnosis of the problems inherent in reducing many

dimensions to just one, for some reason Huitfeldt did not challenge the basic

assumption that “a long string of

characters” is the only data structure available for the digital

representation of texts. As he no doubt knew, computer programmers are not

limited to using one-dimensional structures. The fact that all digital data is

ultimately represented by one-dimensional sequences of binary digits is

irrelevant in this context. We are dealing here with the logical data structures

used by application programmers and by high-level programming languages, not the

underlying structures dealt with by operating systems and compilers (a compiler

is a computer program that translates source code written in a high-level

programming language into lower level “machine code”). As a

computer scientist would put it, the power of digital computers stems from the

fact that a “Turing machine,” defined mathematically in terms of primitive

computations on a one-dimensional sequence of binary digits, is capable of

emulating more complex data structures and algorithms that can represent and

manipulate information in many different dimensions.

Thus, in spite of the deeply entrenched habit of encoding texts as long character

strings, scholars should pay more attention to the fact that a digital computer

is not necessarily a one-dimensional medium when it comes to the representation

of texts — indeed, they will not obtain the full benefits of digitization until

they take advantage of this fact. The failure to adopt a multi-dimensional data

model explains why, after the passage of more than twenty-five years, no widely

accepted way of representing multiple readings of the same text has emerged in

the scholarly text-encoding community. By and large, even the sharpest critics

of the TEI markup scheme and its limitations have themselves remained within the

document paradigm. They have not abandoned the one-dimensional character-string

method of representing scholarly texts in favor of the multi-dimensional

structures available in a database system.

To understand why no workable way of representing multiple readings has emerged,

we must examine more closely the TEI Consortium’s text-encoding method. The TEI

markup scheme encodes an “ordered

hierarchy of content objects” (OHCO), where a “content object” is a textual component defined

with respect to some mode of analysis at the level either of textual expression

or of textual content (see [

Renear 2004, 224–225]). Quite

rightly, the OHCO model exploits the power of hierarchies to represent

efficiently the relationships of parts to a larger whole. For example, a text

might be broken down into pages, paragraphs, lines, and words, in a descending

hierarchy, or it might be broken down into component parts in some other way,

depending on the mode of analysis being employed. Regardless of how they are

defined, a text’s components can be separated from one another within a long

sequence of characters and related to one another in a hierarchical fashion by

means of markup tags.

The problem with this method is that any one digital text is limited to a single

hierarchy, that is, one primary configuration of the text’s components. Using

standardized markup techniques, a linear sequence of characters can easily

represent a single hierarchy but it cannot easily represent multiple overlapping

hierarchies that reflect different ways of reading the same text. The TEI

Consortium itself provides some simple examples to illustrate this problem in

its

Guidelines for Electronic Text Encoding and

Interchange, including the following excerpt from the poem “Scorn not the sonnet” by William Wordsworth [

TEI P5 2013, Chapter 20]

[7]:

Scorn not the sonnet; critic, you have frowned,

Mindless of its just honours; with this key

Shakespeare unlocked his heart; the melody

Of this small lute gave ease to Petrarch’s wound.

This poem could be represented by a hierarchy based on its metrical

features, with components that represent the poem’s lines, stanzas, and so on.

But the same poem could equally well be represented by a hierarchy based on its

grammatical features, with components that represent words, phrases, clauses,

and sentences.

If we survey literary, linguistic, and philological scholarship more broadly, we

find many different ways of constructing hierarchical representations of texts,

each of which might be useful for computer-aided research on textual corpora.

Our different ways of analyzing texts produce different logical hierarchies and

we cannot claim that any one hierarchy is dominant, especially if we are trying

to create a digital representation of the text that can be used in many

different ways.

[8] The choice of which analytic hierarchy to use and how

the hierarchy should be constructed will depend on the kinds of questions we are

asking about a text. For this reason, a fully adequate digital representation

would allow us to capture many different ways of reading a text without losing

sight of the fact that they are all readings of the same text. Moreover, a fully

adequate digital representation would make full use of the expressive power of

hierarchies wherever appropriate, but it would not be limited to hierarchies and

it would also allow non-hierarchical configurations of the same textual

components, without duplicating any textual content.

A brute-force solution to the “problem of overlapping

hierarchies,” as it has been called, is to maintain multiple

copies of identical textual content, tagging each copy in a different way. Here

is a TEI encoding of the metrical view of the Wordsworth excerpt using the

<l> tag (one of the standard TEI tags) for each metrical line and

using the

<lg> tag to indicate a “line group”:

<lg>

<l>Scorn not the sonnet; critic, you have

frowned,</l>

<l>Mindless of its just honours; with this

key</l>

<l>Shakespeare unlocked his heart; the

melody</l>

<l>Of this small lute gave ease to Petrarch's

wound.</l>

</lg>

The grammatical view of the same text would be encoded by

replacing the metrical tags with tags that indicate its sentence structure,

using the

<p> tag to indicate a paragraph and the

<seg> tag for

grammatical “segments”:

<p>

<seg>Scorn not the sonnet;</seg>

<seg>critic, you have frowned, Mindless of its just

honours;</seg>

<seg>with this key Shakespeare unlocked his

heart;</seg>

<seg>the melody Of this small lute gave ease to Petrarch's

wound.</seg>

</p>

However, as the authors of the TEI Guidelines point

out, maintaining multiple copies of the same textual content is an invitation to

inconsistency and error. What is worse, there is no way of indicating that the

various copies are related to one other, so it is impossible to combine in a

single framework the different views of a text that are contained in its various

copies — for example, if one wanted to use an automated algorithm to examine the

interplay between a poem’s metrical and grammatical structures in comparison to

many other poems.

Three other solutions to the problem of overlapping hierarchies are suggested in

the TEI

Guidelines but they are all quite difficult

to implement by means of textual markup using the standards-based software tools

currently available for processing linear character strings. In addition to

“redundant encoding of information in

multiple forms,” as in the example given above, the following

solutions are discussed:

- “Boundary marking with empty

elements,” which “involves marking the start

and end points of the non-nesting material. . . . The

disadvantage of this method is that no single XML element

represents the non-nesting material and, as a result, processing

with XML technologies is significantly more difficult”

[TEI P5 2013, 631–634]. A further disadvantage of this method — indeed, a crippling

limitation — is that it cannot cope with analytical hierarchies in which

textual components are ordered differently within different hierarchies,

as often happens in linguistic analyses.

- “Fragmentation and reconstitution of

virtual elements,” which “involves breaking what might be considered a single logical (but

non-nesting) element into multiple smaller structural elements that

fit within the dominant hierarchy but can be reconstituted

virtually.” However, this creates problems that “can make automatic analysis

of the fragmented features difficult”

[TEI P5 2013, 634–638].

- “Stand-off markup,” which “separates the text and the

elements used to describe it . . . It establishes a new

hierarchy by building a new tree whose nodes are XML elements

that do not contain textual content but rather links to another

layer: a node in another XML document or a span of text”

[TEI P5 2013, 638–639].

The last option, stand-off markup, is the most elegant solution but it is not

easy to implement using generally available software based on current markup

standards — and it is not, in fact, included in the TEI’s official encoding

method but requires an extension of it. Many people who are troubled by the

problem of overlapping hierarchies favor some form of stand-off markup instead

of ordinary “embedded” or “in-line”

markup. However, stand-off markup deviates so much from the original markup

metaphor that it no longer belongs within the document paradigm at all and is

best implemented within the database paradigm. Stand-off markup involves the

digital representation of multiple readings of a text by means of separate data

objects, one for each reading, with a system of pointers that explicitly connect

the various readings to the text’s components. But this amounts to a database

solution to the problem. The best way to implement this solution is to abandon

the use of a single long character sequence to represent a scholarly text — the

document approach — in order to take advantage of the atomized data models and

querying languages characteristic of database systems. Otherwise, the complex

linkages among separate data objects must be laboriously managed without the

benefit of the software tools that are best suited to the task. The database

approach we describe below is functionally equivalent to stand-off markup but is

implemented by means of an atomized and keyed database.

[9]Other solutions to the problem of overlapping hierarchies continue to be

proposed, some of them quite sophisticated.

[10] However,

the complex relationships among distinct data objects that underlie these

proposed solutions can best be implemented in a database system that does not

represent a text by means of a single character string. Such strings should be

secondary and ephemeral rather than primary and permanent. Nothing is lost by

adopting a database approach because a properly designed database system will

allow the automated conversion of its internal text representation to and from

linear character sequences, which can be dynamically generated from the database

as needed.

With respect to the limitations of the TEI encoding method, some have argued that

the fault lies with the Extensible Markup Language (XML) standard that

prescribes how markup tags may be formatted [

http://www.w3.org/standards/xml]. The TEI method conforms to the XML

standard so that XML-based software tools, of which there are many, can be used

with TEI-encoded texts. But there is a good reason why standardized markup

grammars like XML allow only one strict hierarchy of nested textual components.

Accommodating overlapping pairs of markup tags within a single character string

would create a more complicated standard that demands more complicated software,

losing the main benefit provided by the markup technique, which is that it is

intuitive and easy to use, even by people who have modest programming skills. If

we are going to work with more complex data structures and software, it would be

better to abandon the single-character-string representation of a text

altogether in favor of a more atomized, and hence more flexible, database

representation.

[11]Thus, in our view, the TEI markup scheme and other similar text-encoding schemes

are useful as secondary formats for communicating a particular reading of a text

that has been generated from a richer underlying representation, but they are

not suitable for the primary digital representation of a text. For this one

needs a suitably designed database system, keeping in mind that with the right

kind of internal database representation it is a simple matter to import or

export a “flattened” representation of a given text — or,

more precisely, of one reading of the text — as a single character string in a

form that can be used by markup-based text-processing software. An existing

text-reading stored in digital form as a single sequence of characters (with or

without markup tags) can be imported by automatically parsing it in order to

populate the database’s internal structures. In the other direction, a character

string that represents a particular reading can easily be generated when needed

from internal database structures, incorporating whatever kind of markup may be

desired in a particular context (e.g., TEI tags or HTML formatting tags).

In summary, we argue that document-markup solutions to the problem of overlapping

textual hierarchies are awkward workarounds that are at odds with the basic

markup model of a single hierarchy of textual components. Solving the problem

will require abandoning a digital representation of texts in the form of long

linear character sequences. That approach may suffice for many kinds of digital

texts, especially in non-academic domains, but it is not the best method for the

primary representation of texts that are objects of scholarly study and will be

read in different ways. Digital documents do not transcend the structure of the

flat printed page but rely on relative position in one or two dimensions to

distinguish entities and to represent their relationships to one another,

instead of making those distinctions and relationships explicit in

multi-dimensional structures of the kind found in a well-designed database. What

is needed is a database that can explicitly distinguish each entity and

relationship of interest, enabling us to capture all the conceptual distinctions

and particular observations that scholars may wish to record and discuss.

2. The history of the document paradigm versus the database paradigm

In light of the limitations imposed by the document-markup method of scholarly

text encoding, we may ask why this method took root and became so widely

accepted instead of the database alternative. To answer this question, it is

worth examining the history of the document paradigm of software design in

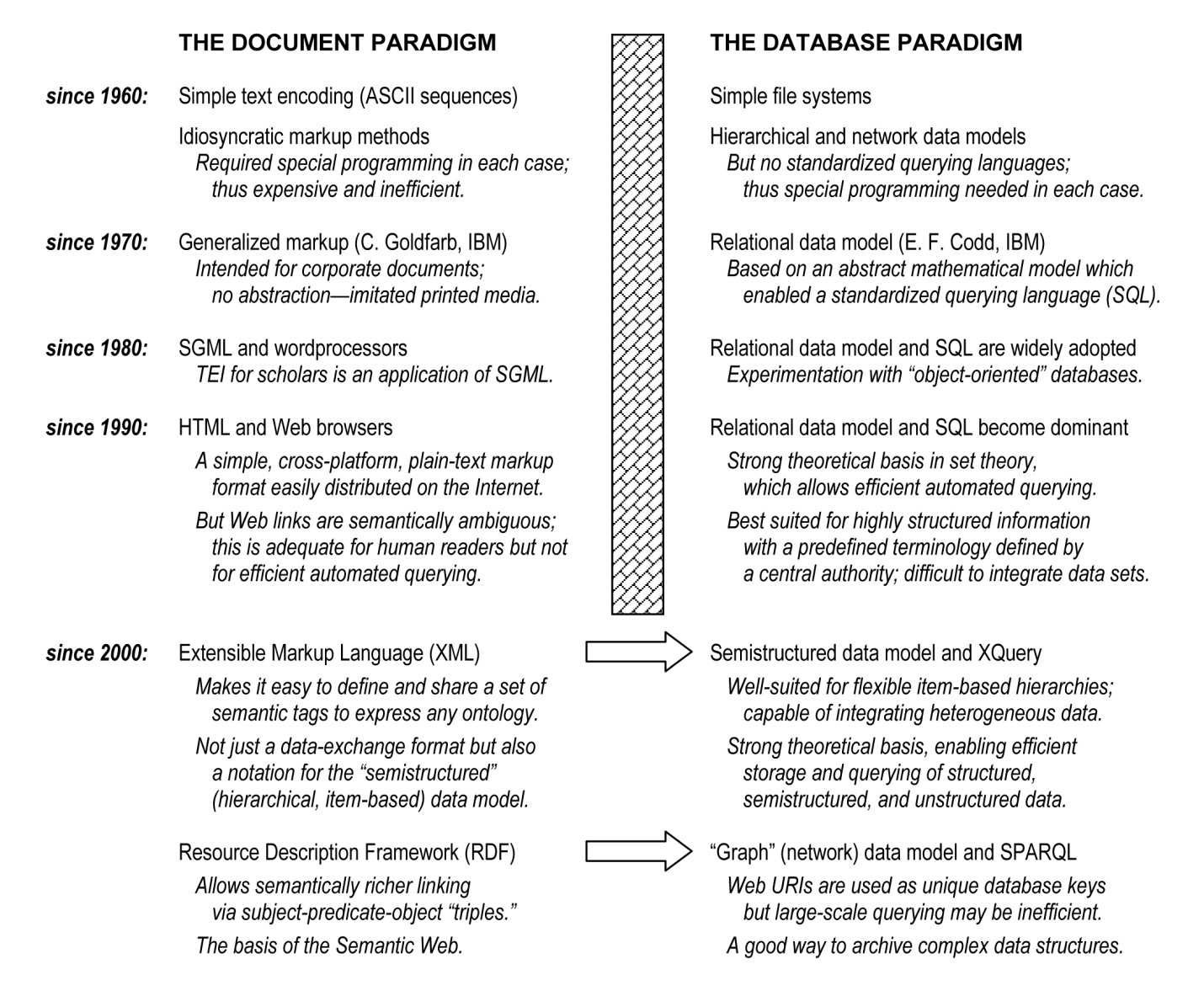

contrast to the parallel history of the database paradigm. The diagram below

summarizes the key developments in the emergence of these two paradigms, leading

up to the software environment in which we work today.

It is remarkable that current approaches to software design for both documents

and databases emerged in the United States in the same year, 1969, and in the

same institution, namely the International Business Machines Corporation (IBM),

although on opposite sides of the country. At the IBM lab in Cambridge,

Massachusetts, Charles Goldfarb and his colleagues came up with the first widely

adopted markup method for digital texts. This was eventually canonized as SGML,

the Standard Generalized Markup Language, which became an ISO standard in 1986

and a few years later gave birth to HTML, the HyperText Markup Language, and

thus to the World Wide Web (see [

Goldfarb 1990] on SGML; the

relationship between SGML and HTML, and subsequently XML, is described in [

DuCharme 1999, 3–24]).

Meanwhile, at the IBM lab in San Jose, California, an Englishman named Ted Codd

was writing a paper entitled “A Relational Model of Data for

Large Shared Data Banks”

[

Codd 1970], which changed the world of databases forever. Codd’s

relational data model subsequently became the basis for the majority of database

systems in use today. Beyond the relational model itself, the approach to

database design that flowed from Codd’s conceptual innovations informs many

aspects of recent non-relational systems and querying languages.

The intellectual contrast between these two IBM employees could not have been

greater. Codd was a mathematician who received a Ph.D. from the University of

Michigan in 1965 with a thesis that focused on a difficult problem in the theory

of computation (self-replication in cellular automata). Goldfarb was an attorney

who graduated from Harvard Law School in 1964 and then practiced law in Boston.

He did not join IBM until November 1967, at which point, as he says in a memoir,

he knew nothing about computers (see [

Goldfarb 1996]). He was

given the task of applying computers to the needs of law firms, in order to help

IBM expand its presence in that market. In particular, he was asked to work with

IBM programmers to integrate a simple text-editing program with an

information-retrieval system and a text-formatting program to make it easier to

manage and distribute legal documents and other structured documents used in

business enterprises and governmental settings, such as catalogues and

procedural manuals.

Given his background, it is not surprising that Goldfarb’s solution to the

problem was non-theoretical and ad hoc. Quite understandably, he decided to

imitate what he knew about the preparation of printed documents and he latched

onto the concept of markup, as many others were doing around the same time,

transferring the blue pencil-marks of pre-digital editors to special sequences

of digitally encoded characters embedded in the main text. These embedded markup

“tags” could provide formatting instructions that told a

computer program how to print or display a text, or they could provide semantic

information about the structure and contents of the text. Goldfarb’s innovation

was to come up with a standard method for defining new markup tags in a

generalized way, allowing anyone to create a set of tags suitable for a

particular kind of document and making it easy to write software that could work

with a wide range of tagging schemes. His Standard Generalized Markup Language

is, strictly speaking, not itself a markup language (i.e., a document tagging

scheme) but rather a formal grammar for the design and specification of markup

languages.

In addition to this standard tagging mechanism, the writing of general-purpose

software for text processing was greatly aided by adopting a simple hierarchical

model in which tags were used to group textual components into larger

configurations in a nested fashion, so that lines of text were grouped within

paragraphs, and paragraphs within sections, and sections within chapters, and so

on. This simple and intuitive approach to digital documents has proved to be

very popular, precisely because of its simplicity. For example, the HyperText

Markup Language on which the World Wide Web is based is one such tagging scheme,

and it took off like wildfire in the early 1990s in part because it required

very little technical expertise to use it.

But Goldfarb made a fateful limiting assumption about the nature of texts that

has affected digital text-processing ever since. He assumed that the structure

of any text that was being digitally encoded would be determined in advance by

assigning it to a particular predefined genre (“document

type”). This would determine which markup tags could be used and

the way in which separately tagged components of the text could be nested within

one another to form a single hierarchy. In other words, Goldfarb assumed that

someone would decide in advance how the text ought to be read and this way of

reading would be fundamental to the text’s digital representation. This limiting

assumption is not usually a problem in the corporate world in which digital

markup emerged, where textual structures are determined from the top down by a

central semantic authority. However, this assumption creates severe problems for

scholars who lack a central semantic authority and thus work with texts in a

different way.

[12]Turning now to the database paradigm, we can contrast the ad hoc and

non-theoretical character of Goldfarb’s approach with Codd’s theoretically

sophisticated approach to the digital representation of information. Codd’s

abstract relational model was initially resisted by IBM itself, his own

employer, which had recently adopted a quite different model.

[13] But the relational model eventually won out and displaced

all its rivals. It did so because it is firmly rooted in mathematical theory,

enabling the creation of general-purpose software that can search and manipulate

relationally organized information with great efficiency. As is often the case

in computing, abstract theoretical elegance yielded optimal real-world

performance in the long run.

Codd’s solution to the problem of managing digital information involved a leap of

abstraction to the mathematical concepts of “set” and “relation” and

the algebraic operations that can be performed on relations (for a concise

introduction to the relational data model and relational algebra, see [

Garcia-Molina et al. 2009, 17–65]). Codd gave a mathematical basis to the

idea of database “keys,” that is, pieces of information that

uniquely identify other pieces of information and can be used to mix and match

the information in a database in any number of different ways. By finding the

right level of abstraction, he also guaranteed universality, meaning that

digital information of all kinds, regardless of which conceptual ontology is

used to organize it, can be manipulated and retrieved in a relational database

system.

Goldfarb failed to make a similar leap of abstraction with the consequence that

his method of text representation is not universal but only partial and does not

meet the needs of textual scholars in particular. His digital texts mimic the

structure of pre-digital documents, conveying information implicitly by means of

sequential position, as in a printed work, which conveys information by the

relative placement of characters in a line, of lines in a page, and of pages in

a book. The information is implicit in the positioning of discrete entities — in

their before-and-after juxtapositions with other entities — rather than being

made explicit in independently addressable entities that can be related to one

another in any number of different ways, as in a keyed and indexed database.

In other words, if we rely on data structures that conform to the document

paradigm, such as linear sequences of character codes, then our information is

imprisoned in the straitjacket of sequential position rather than being free to

be configured flexibly in many different dimensions, as can be done in a modern

database. This would not matter if all we were trying to do is display a

predetermined view of the text to a human reader. But the whole point of the

kind of digitization we are talking about is to encode information in a way that

enables not just a visual display of the text but automated analyses of its

contents. And, as we have seen, what we are actually encoding in that case is

not the text per se but one or more interpretations of it. So, if what we want

to represent digitally are the ways that one or more readers have understood the

text from different perspectives, we must transcend sequential position, which

limits our ability to configure a text’s components, and adopt a more flexible,

multi-dimensional method of representing a text.

It is true that the markup tags embedded in a Goldfarbian digital text allow us

to go beyond a representation of the text as a simple unmarked sequence of

characters by making explicit some aspects of a reader’s interpretation of the

text. But markup tags are limited in what they can do by the very fact that they

are embedded in a specific location within a sequence of characters just as

pre-digital markup written in blue pencil exists at a certain place on the

page.

[14] This

limitation is tolerable when the structure of the text and thus the possible

ways of analyzing it are ordained in advance, as in the world of business and

government. But that is not the case in the world of scholarship.

The lesson here is that humanists should not be parasitic on the impoverished

ontology of digital texts that flourishes in an environment of top-down semantic

authority but should draw upon the intellectual tradition of critical

scholarship to devise their own richer and more effective ontology. It is

unfortunate, in our view, that scholars became reconciled to the limitations of

the document-markup approach and neglected the database alternative. However, to

be fair, we concede that the relational database software that dominated in the

1980s and 1990s presented some practical barriers for textual applications

(e.g., the need for many inefficient table joins). It is only in recent years

that these barriers have been eliminated with the emergence of non-relational

database systems and querying languages, which themselves have borrowed

substantially from SGML and document-processing techniques while remaining

within the database paradigm. Thanks to these enhancements, we are at the point

where we can stop trying to solve what is actually a database problem by means

of a document-oriented method that lacks the powerful techniques implemented in

database systems. We can solve the problem of overlapping hierarchies by

breaking free of the constraints of the document paradigm and moving firmly to

the database paradigm. And we can do so while bringing with us the best features

of the document paradigm, which in recent years has greatly enriched the

database paradigm and has made it more capable of dealing with relatively

unstructured texts.

3. Transcending the document paradigm via item-based atomization of

information

We turn now from the document paradigm and its history to a database approach

that can transcend the limitations of digital documents. But first we must

emphasize that the problem we are trying to solve goes beyond the representation

of texts, so the best solution will be one that is applicable to other kinds of

scholarly data and permits both relatively unstructured texts and more highly

structured information to be stored, managed, and queried in the same way. By

generalizing the problem, we can reach the right level of abstraction and

develop a common computational framework for many kinds of scholarly research.

This allows the same database structure and software to be used for different

purposes, yielding financial economies of scale. And the motivation to create a

common computational framework is not simply pragmatic. It is also intellectual,

because the problem of overlapping hierarchies is not confined to textual

studies but emerges in many kinds of research in which multiple configurations

and interpretations of the same data must be represented and managed

efficiently.

The problem we are trying to solve goes beyond text-encoding because the

pervasive document paradigm has fostered analogous limitations in humanities

software applications developed to manage structured data. Structured data is

usually displayed, not as lines of text, but in tabular form, with one row for

each entity of interest and one column for each property of those entities.

Tables provide a convenient way to display structured data but are not the best

way to organize scholarly information in its primary digital representation. A

rigid tabular structure imposes predetermined limits on what can be recorded and

how the information can be analyzed. In the humanities, there is often a high

degree of variability in what needs to be described and a lack of agreement

about the terms to be used and how entities should be classified. Software

developers who try to cope with this variability by using tables as primary

structures will end up creating many idiosyncratic tables which require equally

idiosyncratic (and unsustainable) software to be written for each research

project, and with no way to integrate information derived from different

projects. The alternative we advocate is to exploit the ability of an atomized

keyed-and-indexed database system to represent the full range of scholarly

observations and conceptual distinctions in a more flexible manner, allowing

many different views of a large body of shared information to be generated from

a common underlying database structure that does not predetermine what can be

recorded and how it can be analyzed.

To do this, we must embrace a high degree of atomization in our database design.

We can design data objects and the linkages among them in a way that is less

atomized or more atomized, depending on the nature of the data and how it will

be used. In many database applications, compromises are made to reduce the

number of linkages required between distinct data objects (e.g., to reduce the

number of table joins needed in a relational system). Information about a class

of entities and their properties will often be embedded in a single table (e.g.,

data about the customers of a business enterprise). This kind of database table

therefore resembles a digital document, even though the entities in the table

may be linked to other entities in the database via unique keys, allowing the

database to transcend the document paradigm, at least in some respects. Many

commercial databases are “class-based” in this way; in other

words, their table structures depend on a predetermined classification of the

entities of interest. A class-based database uses two-dimensional tables to

represent predefined classes of entities that have predefined common properties

(i.e., predefined by the database designer). Typically, each class of entities

is represented by a single table; each entity in the class is represented by a

row in the table; and each property of that class of entities is represented by

a column in the table.

In an academic domain such as the humanities, however, a class-based database is

often inadequate. A higher degree of atomization is needed to permit the

flexibility in description and analysis that critical scholarship demands. The

alternative to a class-based database is a highly atomized

“item-based” database in which not just each entity of

interest but each property of an entity and each value of a property is

represented as a separately addressable data object. This enables many different

dynamically generated classifications of entities, which are determined by the

end-users of the system and are not predetermined by the database designer. It

is important to note that even though relational databases use tables, they are

not necessarily class-based but can be designed to be item-based. Likewise,

non-relational databases can be item-based or class-based, depending on their

design.

[15]A highly atomized item-based database of the kind we advocate does not eliminate

the need for strings and tables as a means of presenting information to

end-users. Computer programmers distinguish the secondary presentation of

information to human beings from the primary representation of this information

within a computer. One-dimensional character strings displayed as lines of text

on a computer screen are necessary for presenting information to human readers,

and there is no question that a two-dimensional table is an effective way of

presenting highly structured information. But one-dimensional strings and

two-dimensional tables are not the best structures to use internally for the

primary digital representation of scholarly information. These structures make

it difficult to manage a large number of distinct but interrelated units of

information — in our case, units that reflect the wide range of entities,

properties, and relationships distinguished by scholars — in a way that allows

the information to be easily combined in new configurations.

[16]We contend that a highly atomized item-based database can provide a common

solution for scholarly work both with unstructured texts and with more highly

structured data, allowing the same underlying database structure and algorithms

to be used for both kinds of data. In such a database, individual data objects

may be linked together by end-users in different ways without imposing a single

standardized terminology or classification scheme. By virtue of being highly

atomized and readily reconfigurable, data objects that represent individual

entities and properties and the relationships among them are able to represent

the many idiosyncratic interpretations that characterize critical scholarship

much better than traditional digital documents. They can do so because there is

no requirement that the entities and properties of interest be defined in

advance or grouped together structurally in a way that inhibits other ways of

configuring them.

[17]With respect to texts, in particular, we can solve the problem of overlapping

hierarchies by using an item-based database to separate the representation of

each hierarchy of textual components from the representation of those components

themselves, while also separating the representation of each textual component

from the representations of all the others. If we separate the representation of

a hierarchy of textual components from the representations of each individual

component we obtain an atomized structure in which each textual component is

stored and retrieved separately from the hierarchies in which it participates,

no matter how small it may be and no matter how it may be defined. For example,

a database item may represent a unit of analysis on the epigraphic level, like a

character or line; or it may represent a unit of analysis on the linguistic or

discourse level, like a morpheme, word, clause, or sentence. It is up to the

end-user to define the scope of the items that make up a text and how they are

related to one another hierarchically and non-hierarchically.

We call this an item-based database because it treats each textual component as

an individually addressable item of information. It is also a hierarchical

database, insofar as it makes full use of the expressive power of hierarchies —

as many as are needed — to represent efficiently the relationships of parts to a

larger whole. Indeed, each hierarchy is itself a database item. And because this

kind of database is highly atomized and item-based, it readily allows

non-hierarchical configurations of items, if those are necessary to express the

relationships of interest. This database design has been implemented in a

working system in use at the University of Chicago [

Schloen and Schloen 2012],

which is described below in more detail. The same database structure has proved

to be applicable to many kinds of scholarly information other than texts.

We are aware that this way of thinking about texts as databases and not as

documents will seem strange to many people. Some will no doubt react by

insisting that a text is intrinsically a sequential phenomenon with a beginning,

middle, and end, and is therefore well suited to digital representation by means

of a linear character sequence (i.e., a document). It is true, of course, that a

text is sequential. However, as we noted above, what we are representing is not

the text per se but various readers’ understandings of the text; and anyone who

understands what he or she is reading forms a mental representation that

encompasses many parts of the text at once. A human reader’s way of

comprehending (literally, “grasping together”) the

sequentially inscribed components of a text will necessarily transcend the

sequence itself. Thus, we need digital data structures that preserve the text’s

linearity while also being able to capture the many ways scholarly readers might

conceptualize the text’s components and simultaneously relate them to one

another.

This way of thinking about texts requires a leap of abstraction of a kind

familiar to database designers. It is a matter of going beyond the concrete form

that information may take in a particular situation in order to discern

fundamental entities and relationships that a database system can work with to

generate many different (and perhaps unexpected) views of the data, depending on

the questions being asked, without error-prone duplication of information. Some

may object that operating at this level of abstraction — abandoning the simple

and intuitive document paradigm in favor of the more complex database paradigm —

creates obstacles for software development. It is true that it calls for

properly trained programmers rather than do-it-yourself coding, but this is

hardly an argument against developing more powerful systems that can more

effectively meet the needs of researchers. Scholars rely on complex

professionally written software every day for all sorts of data management and

retrieval (e.g., for social media, shopping, banking, etc.). We should not be

surprised if they need similarly complex software for their own research data.

As end-users they do not need to see or understand the underlying software and

data structures as long as the database entities, relationships, and algorithms

they are working with are understood at a conceptual level. Thus the complexity

of the software should be irrelevant to them.

Moreover, poverty is not an excuse for failing to develop suitable software for

the humanities, whose practitioners usually have little or no money to hire

programmers. To the extent that a database system meets common needs within a

research community whose members use similar methods and materials (literary,

historical, etc.), the cost of developing and maintaining the software can be

spread over many researchers. Front-end user interfaces for the same system can

be customized for different projects without losing economies of scale, which

depend on having a single codebase for the back-end database software, where the

complexity lies. Indeed, if some of the resources expended over the years on

document-oriented software for the humanities had been spent on designing and

building suitable back-end database software, we would have better tools at our

disposal today. In our view, universities can support digital humanities in a

better and more cost-effective way by discouraging the development of

idiosyncratic project-specific tools and giving researchers and students access

to larger shared systems that are professionally developed and maintained,

together with the necessary training and technical support.

4. XML, the CHOIR ontology, and the OCHRE database system

We need now to give some indication of how, in practice, one can work within the

database paradigm using currently available software techniques to overcome the

limitations of the document paradigm. For a long time, the barrier between

textual scholarship and database systems seemed insurmountable because it was

cumbersome (though not impossible) to represent free-form texts and their

flexible hierarchies within relational databases, which have been the dominant

type of database since the 1980s. For this reason, in spite of its limitations,

the document-markup approach often seemed to be the best way to handle digital

texts. However, in the last ten years the database paradigm has itself been

greatly enriched by the document paradigm. This has enabled the creation of

non-relational database systems that can more easily handle flexible textual

hierarchies as well as highly structured data.

This came about because of the Extensible Markup Language (XML), which was

adopted as a universal data-formatting standard by the World Wide Web Consortium

in 1998 and was followed in subsequent years by other widely used standards,

such as XQuery, a querying language specifically designed to work with XML

documents, and the Resource Description Framework (RDF) and its SPARQL querying

language, which are the basis of the so-called Semantic Web.

[18] XML is

itself simply a streamlined and Web-oriented version of SGML that was developed

to remedy the semantic ambiguity of the HyperText Markup Language (HTML) on

which the Web is based. XML makes it easy to define semantic tagging schemes and

share them on the Internet.

[19]Database specialists quickly realized that XML transcends the world of document

markup and provides a formal notation for what has been called the “semistructured data model,” in distinction

from Codd’s relational data model. XML accordingly became the basis of a new

kind of non-relational database system that can work equally well with

structured tables and more loosely structured texts.

[20] It provides

a bridge between the document paradigm and the database paradigm. The keyed and

indexed data objects in an XML database conform to the XML markup standard and

thus may be considered documents, but they function quite differently from

traditional marked-up documents within the document paradigm. Like any other XML

documents, the data objects in an XML database each consist of a sequence of

character codes and so can be displayed as plain text, but they are not

necessarily correlated one-for-one to ordinary documents or texts in the real

world. Instead, they function as digital representations of potentially quite

atomized pieces of interlinked information, as in any other database

system.

[21]For our purposes, the most important feature of the semistructured data model is

its close congruence to item-based ontologies characterized by recursive

hierarchies of unpredictable depth, for which the relational data model is not

well suited. As we have said, an item-based ontology defined at the right level

of abstraction can represent overlapping textual hierarchies without duplicating

any textual content, enabling digital text representations that capture

different ways of reading a text without losing sight of the fact that they are

all readings of the same text. And the same ontology can represent highly

structured information without embedding units of scholarly analysis in

two-dimensional tables of the kind prescribed by the document paradigm. Instead,

each entity of interest, and each property of an entity, can be stored as a

separately addressable unit of information that can be combined with other units

and presented in many different ways.

We have demonstrated this in practice by developing and testing an item-based

ontology we have called CHOIR, which stands for “Comprehensive Hierarchical

Ontology for Integrative Research.” But an ontology is not itself a

working data-retrieval system; it is just a conceptual description of entities

and relationships in a given domain of knowledge. To demonstrate its utility for

scholarly research, we have implemented the CHOIR ontology in an XML database

system called OCHRE, which stands for “Online Cultural and Historical Research

Environment.” This multi-user system has been extensively tested for the

past several years by twenty academic projects in different branches of textual

study, archaeology, and history. In 2012 it was made more widely available via

the University of Chicago’s OCHRE Data Service [

http://ochre.uchicago.edu].

[22]Although we have implemented the CHOIR ontology in an XML database system, we are

not using XML documents as a vehicle for marked-up texts in the manner

prescribed by the TEI text-encoding method. In an OCHRE database, XML documents

do not correspond to real-world documents but are highly atomized and

interlinked data objects, analogous to the “tuples” (table

rows) that are the basic structural units in a relational database. Each XML

document represents an individual entity or a property of an entity, however

these may be defined in a given scholarly project; or an XML document might

represent a particular configuration of entities or properties in a hierarchy or

set. For example, in the case of a text, each textual component, down to the

level of graphemes and morphemes if desired, would be represented by a different

XML document, and each hierarchical analysis of the text would be represented by

its own XML document that would contain pointers to the various textual

components.

In contrast to conventional text-encoding methods, OCHRE’s representation of a

text is not limited to a single hierarchy of textual components. If overlapping

hierarchies are needed to represent alternate ways of reading the same text,

OCHRE does not duplicate textual content within multiple, disconnected

hierarchies. Instead, the same textual components can be reused in any number of

quite different hierarchical configurations, none of which is structurally

primary. Moreover, an OCHRE hierarchy that represents an overall mode of textual

analysis (e.g., metrical, grammatical, topical, etc.) can accommodate smaller

textual or editorial variants within the same mode of analysis as overlapping

branches in the same hierarchy. OCHRE also permits non-hierarchical

configurations of textual components, if these are needed.

In terms of Hjelmslev’s expression-versus-content distinction, which was

mentioned above in connection with Dino Buzzetti’s critique of embedded markup,

a text is represented in OCHRE by means of recursive hierarchies of uniquely

keyed text-expression items (physical epigraphic units) and separate recursive

hierarchies of uniquely keyed text-content items (linguistically meaningful

discourse units). There is one hierarchy for each epigraphic analysis and one

hierarchy for each discourse analysis. To connect the hierarchies that make up

the text, each epigraphic unit is linked in a cross-hierarchical fashion to one

or more discourse units that represent interpretations of the epigraphic signs

by one or more editors. Each epigraphic unit and each discourse unit is a

separately addressable database item that can have its own descriptive

properties and can in turn contain other epigraphic units or discourse units,

respectively, allowing for recursion within each hierarchy.

A recursive hierarchy is one in which the same structure is repeated at

successive levels of hierarchical nesting. Many linguists, following Noam

Chomsky, believe that recursion is a fundamental property of human language and,

indeed, is what distinguishes human language from other kinds of animal

communication (see [

Hauser et al. 2002]; [

Nevins et al. 2009];

[

Corballis 2011]). Whether or not this is true, it is clear

that recursive hierarchies are easily understood by most people and serve as an

intuitive mechanism for organizing information, as well as being easy to work

with computationally because they lend themselves to an iterative

“divide and conquer” approach. For this reason, recursion

plays a major role in the CHOIR ontology and the OCHRE database system that

implements it.

The units of analysis within a given recursive hierarchy are defined by the

text’s editor according to his or her chosen method of analysis and they are

ramified to whatever level of detail is required. For example, a scholar might

distinguish pages, sections, lines, and individual signs, in the case of

epigraphic-expression hierarchies, and might distinguish paragraphs, sentences,

clauses, phrases, words, and morphemes, in the case of discourse-content

hierarchies. But note that the nature of the textual components and the degree

of nesting in each hierarchy are not predetermined by the software; they are

determined by the scholars who are using the software.

Finally, OCHRE is not dependent on existing character-encoding standards for its

representation of writing systems. These standards may be inadequate for

scholarly research in certain cases. As we observed at the beginning of this

paper, the Unicode character-encoding standard, large as it is, does not include

every allograph of every sign in every human writing system. It embodies someone

else’s prior choices about how to encode characters and what to leave out of the

encoding. With this in mind, OCHRE safeguards the semantic authority of the

individual scholar, even at this most basic level, by abstracting the digital

representation of texts in a way that allows the explicit representation, not

just of texts themselves, but also of the writing systems that are instantiated

in the texts. If necessary, a scholar can go beyond existing standardized

character codes and can represent the components and structure of a particular

writing system in terms of hierarchies of idealized signs constituted by one or

more allographs (e.g., the first letter in the Latin alphabet is constituted by

the allographs “A” and “a” and various other ways of writing this

sign). The allographs of a writing system can then be linked individually,

usually in an automated fashion, to a text’s epigraphic-expression units, and

the text can be displayed visually via the drawings or images associated with

the allographs.

In other words, scholars are able to make explicit in the database not just their

interpretations of a text but also their understandings of the writing system

used to inscribe the text. The epigraphic expression of a text can be

represented as a particular configuration of individually addressable allographs

that constitute signs in one or more writing systems — or, from another

perspective, a writing system can be represented as a configuration of ideal

signs derived from each sign’s allographic instantiations in a corpus of texts.

This is implemented internally by means of pointers from database items that

represent the text’s epigraphic units to database items that represent signs in

a writing system. Moreover, overlapping hierarchies can be used to represent

different understandings of a given writing system, reconfiguring the same signs

in different ways, just as overlapping hierarchies represent different readings

of a given text, reconfiguring the same textual components in different

ways.

We can now say with some confidence, having developed and tested the CHOIR

ontology over a period of more than ten years in consultation with a diverse

group of researchers, that this ontology is an effective solution to complex

problems of scholarly data representation and analysis, including the

longstanding problem of overlapping hierarchies. To be sure, writing the

software to implement this ontology in a working database system such as OCHRE

requires a high level of programming skill and a considerable investment of time

and effort. However, the generic design of the underlying ontology ensures that

the same software can be used by many people for a wide range of projects,

making the system financially sustainable.

Professionally maintained and well-supported multi-project systems like this are

much too scarce in the humanities, which have tended toward idiosyncratic

project-specific software that is not adequately documented, supported, or

updated over time. This tendency is not simply caused by a lack of resources. At

its heart, the problem is ontological, so to speak. Scholars have lacked a