Tool development in the Digital Humanities has been the subject of numerous articles and conference presentations.[1] While the purpose and direction of tools and tool development for the Digital Humanities have been debated in various forums, the value of tool development as a scholarly activity has seen little discussion. This may be, in part, because of the perception that tools are developed to aid and abet scholarship, but that their development is not necessarily considered scholarship in and of itself.

This perception, widely held among tenure review boards, dissertation committees, and our peers, may be an impediment to the development of the field of digital humanities. Indeed, as our survey results indicate, some tool developers also subscribe to this view. A majority of respondents, however, consider tool development to be positively linked to more traditional scholarly pursuits. As one respondent put it:

I develop a tool as a specific means to an end, and the end is always pertinent to some literary question. Tool development is deeply informed by the research agenda and thus the tool development might be seen as analogous to other “research” activities. Archival research is one way of obtaining data. To get that data one must employ a methodology etc. The development of a tool is akin to this.

Respondents also considered tool development, as a methodological approach, no less rigorous or scholarly than other approaches:

My field is the digital humanities, and some part of my research is on how computing affects (positively and negatively) scholarly activity. Building the tool — which expresses a particular intellectual stance on certain issues — is meant to be a research activity.

Several major recent reports urge the academic community (particularly in the humanities) to consider tool development a scholarly pursuit and, as such, to build it into our system of academic rewards. The clearest statement of such a shift in thinking came from the recommendations of the ACLS Commission on Cyberinfrastructure for the Humanities and Social Sciences, which not only called for “policies for tenure and promotion that recognize and reward digital scholarship and scholarly communication” but also stated that “recognition should be given not only to scholarship that uses the humanities and social science cyberinfrastructure but also to scholarship that contributes to its design, construction and growth.”

The hurdles we might expect in seeing these recommendations implemented are complicated by a parallel but distinct issue noted by the MLA Report on Evaluating Scholarship for Tenure and Promotion: namely, that a majority of departments have little to no experience evaluating refereed articles and monographs in electronic format. In these departments, the prospects for evaluating tool development as scholarship appear dim, at least in the near term. However, given the more optimistic recommendations of the ACLS report, as well as the MLA Report’s finding that the evaluation of work in digital form is gaining ground, acceptance of tool development as a scholarly activity may not be far behind.

In 2005, scholars from the humanities, the social sciences, and computer science met in Charlottesville, Virginia, for a Summit on Digital Tools for the Humanities. While the summit itself focused primarily on the use of digital resources and digital tools for scholarship, the Report on Summit Accomplishments that followed touched on development, concluding that “the development of tools for the interpretation of digital evidence is itself research in the arts and humanities.”

The present study was thus undertaken in response to some of the questions and conclusions that came out of the Digital Tools summit, and also as a follow-up to our own experience of conducting an earlier survey, in the spring of 2007, on the perceived value of The Versioning Machine. One of the intriguing results of The Versioning Machine survey, which was presented as a poster at the 2007 Digital Humanities conference [Schreibman et al. 2007b], concerned value. The vast majority of respondents found it valuable as a means to advance scholarship even though they themselves did not use it, or at least did not use it in the ways its developers had envisioned. As a result of feedback during and after the poster session, the authors decided to conduct a survey focusing on tool development as a scholarly activity.

The authors developed the survey to meet, in one small way, John Unsworth’s challenge, made at the 2007 Digital Humanities Centers Summit, “to make our difficulties, the shortcomings of our tools, the challenges we haven't yet overcome, something that we actually talk about, analyze, and explicitly learn from.” There were many ways to approach this study: by surveying the community for whom the tools were developed; by surveying digital humanities centers, where much (but certainly not all) of the tool development takes place; or by questioning department chairs or tenure committee heads about their perceptions of tool development as a scholarly activity and how it fits within the academic reward system.

In the end, we decided to focus the study on developers of digital humanities tools: their perceptions of their work, how it fits into a structure of academic rewards, and the value of tool development as a scholarly pursuit. Rather than invite selected respondents to take the survey, we allowed the field of respondents to self-select. Notices of the survey were sent to mailing lists such as Humanist, the TEI list, XML4Lib, Code4Lib, and Centernet. Additionally, we sent invitations to about two dozen people whom we knew to be tool developers.

An initial set of questions was drawn up in autumn 2007 and circulated to several prominent tool developers for feedback. The survey was refined on the basis of their feedback and issued to mailing lists in December 2007. By March 2008, when the survey closed, 54 individuals had completed it. Survey questions were grouped into four main categories: Demographics, Tool Development, Specific Tools, and Value. These categories reflected the main emphases of the survey: what kinds of tools were being developed and why, and, specifically, whether developers considered the process of developing tools to have value, particularly with respect to career development and scholarship.

To allow developers to comment on their experiences with more than one tool, the survey collected demographic information once and linked it to any number of tools developed by an individual. We constructed the survey in this way because we were curious whether developers had different experiences with particular tools, or whether perceptions of value would be consistent regardless of the type of tool developed. For the most part, developers who described experiences with more than one tool had similar perceptions of value regardless of the tool. There were, however, some differences, including one developer who felt that the development of a tool early in his career could not be categorized as a scholarly activity, while the development of two others could. The “non-scholarly” tool was singled out as being “merely a response to needs for workable data management, even though quite a lot of the conceptual work was reused later [in the development of another tool].” The two tools that this developer considered scholarly conferred benefits on his individual research agenda and raised his profile in his field.
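
The survey design just described, with demographics collected once and linked to any number of tool descriptions, is a one-to-many relationship. The Python sketch below is a hypothetical model of that structure, not the survey software actually used; the class names, field names, and the `has_consistent_perception` helper are illustrative assumptions. It shows how linking every tool to a single respondent makes it possible to ask whether that person's judgement of value holds across tools.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToolRecord:
    """One tool described by a respondent (hypothetical fields)."""
    description: str
    considered_scholarly: bool


@dataclass
class Respondent:
    """A respondent whose demographics are recorded once (hypothetical fields)."""
    affiliation: str
    # Any number of tool descriptions can be linked to the same person.
    tools: List[ToolRecord] = field(default_factory=list)

    def add_tool(self, tool: ToolRecord) -> None:
        self.tools.append(tool)


def has_consistent_perception(person: Respondent) -> bool:
    """True if the respondent judged every tool they described the same way."""
    return len({t.considered_scholarly for t in person.tools}) <= 1


# Modelled loosely on the developer discussed above: one early
# data-management tool judged non-scholarly, two others judged scholarly.
developer = Respondent(affiliation="unspecified")
developer.add_tool(ToolRecord("early data-management tool", considered_scholarly=False))
developer.add_tool(ToolRecord("second tool", considered_scholarly=True))
developer.add_tool(ToolRecord("third tool", considered_scholarly=True))

print(len(developer.tools))                  # 3
print(has_consistent_perception(developer))  # False
```

Keeping tools as a list on the respondent, rather than repeating demographic answers for each tool, mirrors the survey's decision to ask the demographic questions only once.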

We were impressed with the thoroughness with which the majority of respondents completed the survey. Most respondents took the time to provide lengthy answers to questions that demanded more than a quantifiable response (such as yes/no/maybe). As returns came in, however, we realized that there were several questions we had not asked but wished we had. One concerned the geographic location of respondents. We were able to infer some of this information from IP addresses, but the result is cruder than we would have liked: approximately half of the respondents (51%) were in the United States; 27% were in Europe, nearly half of them in the U.K., which alone accounted for 13% of all respondents; and 7% were in Canada. Another question we regretted not asking concerned the respondent’s career stage (tenured, tenure-track, non-tenure, etc.), although we could ascertain whether respondents were graduate students. Lastly, respondents were not asked their gender.

We approached the survey with several assumptions. The results of The Versioning Machine survey had indicated that users found tools valuable even when they did not use them in the ways their developers intended. We also assumed that developers might be less optimistic about the value of tools that were not widely adopted.

Survey responses did show that developers felt low adoption rates hurt the value of their tools, but this was not their biggest concern: level of adoption ranked last among four potential measures of a tool’s success. We had also expected a more negative response regarding tool development and career advancement. While our survey did not reveal an academy suddenly receptive to tool development as a scholarly activity, we were surprised by the relatively positive response, as well as by the range of ways in which developers articulated the scholarly value of tool development.

Analysis of Data

Basic Demographics

There were 108 responses to the survey. Of those, 63 were complete responses, in which every question in the survey was answered. This figure includes several individuals who returned multiple times to describe different tools; in total, 54 individuals completed the survey. Of the four respondents who filled out the survey multiple times, one described six tools, two described three tools each, and one described two tools.

We kept partially completed surveys that were incomplete because the respondent was returning to discuss a second or third tool, or because the respondent had skipped a question that was not relevant. We discarded partially completed surveys in which the respondent had filled out only the consent form and the demographic information.
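
The retention rule above amounts to a single test: a partial response is kept if it contains answers about at least one tool. The Python sketch below expresses that reading; the `Response` fields, the `keep_response` function, and the example records are hypothetical and invented for illustration, not survey data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Response:
    """A submitted survey response (hypothetical fields, for illustration)."""
    respondent_id: str
    complete: bool     # every applicable question answered
    tool_answers: int  # number of tool-related questions answered


def keep_response(r: Response) -> bool:
    """Apply the retention rule as described in the text."""
    if r.complete:
        return True
    # Partial responses are kept when they contain answers about a tool,
    # e.g. a returning respondent describing a further tool (demographics
    # already on file) or someone who skipped an irrelevant question.
    # Responses holding only consent and demographics have no tool
    # answers and are discarded.
    return r.tool_answers > 0


responses: List[Response] = [
    Response("A", complete=True, tool_answers=20),
    Response("A", complete=False, tool_answers=18),  # returning for a second tool
    Response("B", complete=False, tool_answers=19),  # skipped an irrelevant question
    Response("C", complete=False, tool_answers=0),   # consent + demographics only
]

kept = [r for r in responses if keep_response(r)]
print(len(kept))  # 3
```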

Forty-eight respondents answered the multiple-choice question about their role: 32 respondents (67%) identified themselves as Faculty (teaching). The next largest group was Programmer/Developer, with 6 respondents (13%). Libraries (faculty or non-faculty) came third, with 4 respondents (8%). Departmental affiliations were entered as free text (i.e., there was no controlled vocabulary): English departments were the most represented (11 respondents); Libraries and Information Studies came next (8 respondents); and 6 respondents identified their primary affiliation as a Humanities Center.

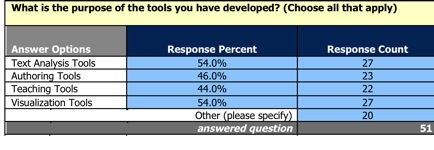

Tools were categorized into four broad areas: text analysis, authoring, teaching, and visualization. Interestingly, the share of tools developed in each category was remarkably similar.

Other categories that were not captured by these four but that received more than one response were database management tools, indexing tools, and archiving tools.[2]

Many of the tools developed by practitioners in the digital humanities community are represented in the survey. These include tools with “brand” names many might recognize: Hyperpo, Image Markup Tool, Ivanhoe, Collex, Justa, nora, Monk, Tact, TactWeb, Tamarind, Tapor, Taporware, teiPublisher, TokenX, Versioning Machine, and Zotero. It was clear that more than one participant in a project frequently filled out the survey. Equally, many respondents had developed “unbranded” tools, describing them simply as scripting tools, Perl routines, TEI stylesheets, or exercise authoring tools.

This latter category seemed to us the less visible side of tool development. While branded tools frequently gain public recognition through their websites, announcements on mailing lists, conference presentations, and published articles, tools developed to perform particular routines, or to make work easier for a particular developer or project, are less frequently considered in discussions of academic rewards, particularly for those in tenure or tenure-track lines.

There does appear to be some correlation between branding tools and the perception that tool development has contributed to career advancement. There were twenty-two “yes” answers to the question, “Has your tool development counted towards career advancement (i.e., it has counted towards tenure or promotion)?” Of those 22, it was possible to match 18 with the degree of collaboration on tool development and the name or description of the tool developed. Of those 18 individuals, 8 reported that they had developed tools with “brand” names. Compare these numbers with those for respondents who answered “no” to the career advancement question: of 11 who felt that tool development had not helped advance their careers, only 2 reported working on tools with “brand” names.
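
The comparison above can be made concrete by expressing the counts as proportions. The working below uses only the figures reported in this paragraph; with groups of 18 and 11 respondents, it points to a pattern worth further study rather than a statistically tested result.

$$
\begin{aligned}
\text{``yes'' group with branded tools:}\quad & \frac{8}{18} \approx 0.44 \\
\text{``no'' group with branded tools:}\quad & \frac{2}{11} \approx 0.18 \\
\text{ratio of the two shares:}\quad & \frac{8/18}{2/11} = \frac{88}{36} \approx 2.4
\end{aligned}
$$

In other words, about 44% of the matched “yes” respondents worked on branded tools, compared with about 18% of the “no” respondents.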

Our presumption going into the survey was that tool development in the humanities is an inherently collaborative activity. The results bore this out: 50.8% of respondents described their collaboration as extensive. Interestingly, 15.3% rated their collaboration between not at all and moderate (1 to 3 on a five-point scale). As for collaborators, 85% of respondents collaborated with programmers, and nearly 80% with colleagues in the humanities. In one way the first figure is not surprising: most humanities scholars probably need to collaborate with programmers in tool development. What was unexpected was that nearly as many respondents indicated that their collaboration involved humanities scholars. As only about one in eight respondents self-identified as programmers/developers, it seems reasonable to infer that tool development often takes place within teams that include more than one humanities scholar.

Value and Success

Success of Tool Development Activities

Overall, survey respondents were positive about the success of the tools they had developed. Fifty-four of 58 respondents (93%) said that the tool they had developed still fulfilled its original purpose. A lower percentage, though still a majority, reported that they considered their tool development endeavors successful: 33 of 54 (61%) answered “yes” and 21 (39%) answered “somewhat”; interestingly, not one respondent ticked the “no” box.

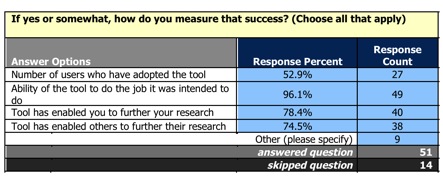

Respondents who felt their tools were successful chose how they measured that success from a controlled list. The highest-ranked answer was the “ability of the tool to do the job it was intended to do” (chosen by 96% of these respondents). Next was that the “tool enabled the respondent to further their research” (78.4%). The lowest-ranked option, though still chosen by a majority (52%), was the “number of users who have adopted the tool.” Nine respondents chose “other”; their answers tended to focus on tool development as an activity that enables further research.

Twenty-one respondents rated their tool development “somewhat successful.” The reasons they gave fit, by and large, into three categories: lack of resources or funding (5 answers); the tool being too early in development to judge its success (5 answers); and the tool being in some way unsuitable for users, either because few users adopted it or because it was less useful than intended (5 answers).

Relationship to Scholarship

Beyond the value developers placed on their own tools, we were interested in how valuable they felt tool development was to their own research, and in how they perceived the value placed on these activities in terms of scholarship, promotion, and tenure. All of the respondents answered the question, “Do you consider tool development a scholarly activity?” Fifty-one respondents, or 94%, said yes. The three respondents who answered no gave these reasons:

- one did not want to check “yes” or “no,” considering tool development more a service activity than a scholarly activity;
- one focused on their particular tool, which they said was only a “response to needs for workable data management”;
- the third wrote that “it does not in itself advance scholarly knowledge; it is implicit in the word ‘tool’ that its just a tool which other use to do scholarly activity.”

More interesting, however, were the detailed answers from those who said they derived scholarly benefit from tool development. Respondents cited benefits such as a better understanding of source materials or processes (11 of 51, or 22%); the creation of traditional intellectual output, such as publications and conference papers (6 of 51, or 12%); and cognitive benefits, such as a better understanding of analytical methods, systematic reasoning, and “the problem space” (3 of 51, or 6%). Several responses clustered around creativity. One respondent described the tool as a community/public artwork/creative work that enables interdisciplinary collaboration, while another said that tool development furthered the relations between objects, making it a playground for innovative user interfaces and browsers with visualization capacity.

Two responses described deferred benefits. One simply replied, “Awaiting benefits.” Another tied the deferred benefit to traditional publication: “None so far but we will publish in journals later.” One respondent’s experience of the scholarly benefits of tool development was clearly mixed. “None,” was the reply: “Was never promoted and lost salary, but was amply rewarded by the sales of the product.”

Relationship to Career Development

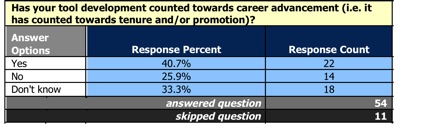

When asked whether tool development had counted toward career advancement, respondents were less certain (although more confident than we had assumed going into the survey). All of the respondents answered this question: 22 (41%) ticked “yes”; 18 (33%) responded “don’t know”; and 14 (26%) ticked “no.” We compared these responses with respondents’ departmental affiliations to see whether patterns emerged. Of the two most represented departments, respondents in English gave six “don’t know” answers, three “yes,” and only two “no.” Library and Information Science was similar, with four “don’t know,” two “yes,” and two “no.” Those who identified a Humanities Center as their primary affiliation were the most confident as a group, with four “yes” and one each for “don’t know” and “no.”

Several themes recurred among the 51 respondents who elaborated on their answers. The most frequently cited positive effects included raising one’s profile in the field; winning grant money; increasing avenues for publication and presentations; gains in salary; and new job opportunities. One respondent concluded that it must not have hurt, since “I continue to be gainfully employed.” Another was more positive: “It was an important part of my tenure package and understood well in my department.”

However, at least four respondents felt that, despite whatever other rewards they had gained, promotion or tenure was less likely because tenure committees were unable to evaluate tool development properly as a scholarly activity. Other respondents were even more succinct about the negative impact the activity had on their careers: one wrote, “It derailed it”; another answered, “For whatever reason, the University never rewarded this activity.”

Other responses hint at the grey area in which many practitioners work, not knowing whether their development activities hinder or help their careers: “I keep including tools in my professional reports, but I doubt the time, intellectual investment, and impact are considered. I keep hoping for a turning point . . .”

Another grey area emerged from a closer examination of the degree of collaboration reported in the survey. The average degree of collaboration was somewhat higher among respondents who reported that tool development had advanced their careers than among those who reported that it had not. On a scale of 1 to 5 (with 5 the highest level of collaboration), the combined average for the “no” group was 3.09, while that for the “yes” group was 3.68. In the “yes” group, 9 of 19 respondents reported a “5,” the highest level of collaboration, and only 2 reported a “1,” or essentially no collaboration. In the “no” group, 4 of 11 respondents reported a “5,” and 3 reported a “1.”
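
The counts in the preceding paragraph can likewise be turned into shares of each group. This is a worked illustration using only the reported figures; the averages of 3.09 and 3.68 cannot be recomputed from them, because the distribution of ratings 2 to 4 is not given.

$$
\begin{aligned}
\text{``yes'' group } (n = 19):\quad & \tfrac{9}{19} \approx 0.47 \text{ rated 5}, \qquad \tfrac{2}{19} \approx 0.11 \text{ rated 1} \\
\text{``no'' group } (n = 11):\quad & \tfrac{4}{11} \approx 0.36 \text{ rated 5}, \qquad \tfrac{3}{11} \approx 0.27 \text{ rated 1}
\end{aligned}
$$

Both ends of the scale point the same way: a larger share of the “yes” group reported the highest level of collaboration and a smaller share reported none, although both groups are small.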

What our survey cannot tell us, however (and this may be a question for future research), is whether those who reported both a high degree of collaboration and career advancement felt that their advancement stemmed from the collaborative development of the tools themselves, or from single-author papers and presentations that resulted from their work. In other words, is collaborative, cross-disciplinary work beginning to be better recognized and rewarded in disciplines that value single-author works, or are the secondary outputs of that work (i.e., articles and presentations) being rewarded instead?

Distribution

We were also interested to know what avenues were used to publicize tools and what percentage of tools were developed for public use. The vast majority, 84% (48 responses), said that their tools were made available to others. When it came to obtaining feedback on a tool’s usefulness, however, tool developers as a whole were less systematic. Most respondents (86%) indicated that their main mechanism for feedback was asking colleagues, while 47% obtained feedback via the tool’s website. Fewer than a third of responses (31%) indicated that usability studies had been conducted, and fewer still (14%) used surveys. Mailing lists, and more specifically project mailing lists, were frequently cited as a means of obtaining feedback.

Dissemination of tools followed traditional scholarly lines, with 75% of respondents making their tools known through conference presentations. This was closely followed by the project website (71%), with word-of-mouth dissemination at conferences coming third at 64%. Not surprisingly, Humanist was the most frequently cited mailing list, with TEI-L second.

Conclusion

Clearly, more research is needed in this area, from surveying department chairs or tenure committee chairs about the obstacles to considering tool development a scholarly activity, to surveying the secondary scholarship that results from tool development.

It is equally clear that the survey supports the findings of the reports mentioned at the outset of this article: as a discipline, we have considerable work to do before tool development is rewarded on a par with more traditional scholarly outputs such as articles, monographs, and conference presentations.

We found that tool developers, by and large, derived both personal satisfaction and professional recognition from their work. Sometimes this recognition translated into academic rewards such as promotion and tenure. More frequently, however, respondents wrote about the intellectual insights derived from their work: new methodologies, a deeper understanding of their area of study, and new models and analytical methods.

Equally, many respondents indicated that tool development led to more traditional scholarly outputs: conference papers and journal articles (both peer-reviewed and non-peer-reviewed). If the tool development itself was not rewarded, these secondary products were.

The overwhelming majority of respondents (94%) considered tool development a scholarly activity, although the range of responses to this question made it clear that many departments and institutions do not. Digital Humanities as a field has been pushing the boundaries of what is considered scholarship, from the creation of thematic research collections to e-literature. New tools that foster new insights into the ever-increasing amount of digital data available to us are not a luxury but a necessity: who better to develop them than humanists who have knowledge both of the content domain and of the content as data?