Abstract

In 1995 in the midst of the first widespread wave of digitization, the Modern Language

Association issued a Statement on the Significance of Primary Records in order to assert

the importance of retaining books and other physical artifacts even after they have been

microfilmed or scanned for general consumption. “A primary

record,” the MLA told us then, “can appropriately

be defined as a physical object produced or used at the particular past time that one is

concerned with in a given instance” (27). Today, the conceit of a “primary record” can no longer be assumed to be

coterminous with that of a “physical object.”

Electronic texts, files, feeds, and transmissions of all sorts are also now, indisputably,

primary records. In the specific domain of the literary, a writer working today will not

and cannot be studied in the future in the same way as writers of the past, because the

basic material evidence of their authorial activity — manuscripts and drafts, working

notes, correspondence, journals — is, like all textual production, increasingly migrating

to the electronic realm. This essay therefore seeks to locate and triangulate the

emergence of a .txtual condition — I am of course remediating Jerome McGann’s influential

notion of a “textual condition” — amid our contemporary constructions of the

“literary,” along with the changing nature of literary archives, and

lastly activities in the digital humanities as that enterprise is now construed. In

particular, I will use the example of the Maryland Institute for Technology in the

Humanities (MITH) at the University of Maryland as a means of illustrating the kinds of

resources and expertise a working digital humanities center can bring to the table when

confronted with the range of materials that archives and manuscript repositories will

increasingly be receiving.

I.

In April 2011 American literature scholars were buzzing with the news of Ken Price’s

discovery of thousands of new papers written in Walt Whitman’s own hand at the National

Archives of the United States. Price, a distinguished University of Nebraska English

professor and founding co-editor of the digital Walt Whitman Archive, had followed a hunch

and gone to the Archives II campus in College Park looking for federal government

documents — red tape, essentially — that might have been produced by the Good Gray Poet

during Whitman’s tenure in Washington DC as one of those much-maligned federal bureaucrats

during the tumultuous years 1863-1873. Price’s instincts were correct: to date he has

located and identified some 3000 official documents originating from Whitman, and believes

there are still more to come. Yet the documents have value not just or perhaps not even

primarily prima facie, because of what Whitman actually wrote. Collectively,

they also constitute what Friedrich Kittler once called a discourse network, a way of

aligning or rectifying (think maps) Whitman’s activities in other domains through

correlation with the carefully dated entries in the official records. “We can now pinpoint to the exact day when he was thinking about

certain issues,” Price is quoted as saying in the Chronicle of Higher Education [Howard 2011a].

Put another way, the official government documents written in Whitman’s hand are

important to us not just as data, but as metadata. They are a reminder that the human

life-world all around us bears the ineluctable marks and traces of our passing, mundane

more often than not, and that these marks and tracings often take the form of written and

inscribed documents, some of which survive, some of which do not, some of which are

displayed in helium- and water-vapor filled cases, and some of which lie forgotten in

archives, or brushed under beds, or glued beneath the end papers of books. Today such

documents are as likely to be digital, or more precisely born-digital, as

they are to be physical artifacts. A computer is, as Jay David Bolter observed quite some

time ago, a writing space — one which models, by way of numerous telling metaphors, a

complete working environment, including desktop, file cabinets, even wallpaper. Access to

someone else’s computer is like finding a key to their house, with the means to open up

the cabinets and cupboards, look inside the desk drawers, peek at the family photos, see

what’s playing on the stereo or TV, even sift through what’s been left behind in the

trash.

The category of the born-digital, I will argue, is an essential one for what this issue

of DHQ names as the literary. Yet it is still

not well-understood. In 1995, in the midst of the first widespread wave of digitization,

the Modern Language Association felt it urgent to issue a Statement on the Significance of

Primary Records in order to assert the importance of retaining books and other physical

artifacts even after they have been microfilmed or scanned for general consumption. “A primary record,” the MLA tells us, “can appropriately be defined as a physical object

produced or used at the particular past time that one is concerned with in a given

instance” [MLA 1995, 27]. Though the retention of such materials is still by no

means a given, as recent commentators such as Andy Stauffer have shown, the situation in

which we find ourselves today is even more complex. Today, the conceit of a “primary

record” can no longer be assumed to be coterminous with that of a “physical

object.” Electronic texts, files, feeds, and transmissions of all sorts are also now,

indisputably, primary records (for proof one need look no further than Twitter hashtags

such as #Egypt or #Obama). This is the “.txtual condition” of my title. In the

specific domain of the literary, a writer working today will not and cannot be studied in

the future in the same way as writers of the past, because the basic material evidence of

their authorial activity — manuscripts and drafts, working notes, correspondence, journals

— is, like all textual production, increasingly migrating to the electronic realm. Indeed,

as I was finishing my first draft of this essay the British Library opened its J. G.

Ballard papers for public access; it is, as one commentator opines, likely to be “the last solely non-digital literary archive of

this stature,” since Ballard never owned a computer. Consider by contrast Oprah enfant terrible Jonathan Franzen who, according to

Time Magazine, writes with a “heavy, obsolete Dell laptop from which he has scoured any trace of hearts and

solitaire, down to the level of the operating system” [Grossman 2010]. Someday an archivist may have to contend with this rough

beast, along with Franzen’s other computers and hard drives and USB sticks and floppy

diskettes in shoeboxes.

In fact a number of significant writers already have material in major archives in

born-digital form. The list of notables includes Ralph Ellison, Norman Mailer, John

Updike, Alice Walker, Jonathan Larson (composer of the musical RENT), and many others. Perhaps the most compelling example to date is the

groundbreaking work that’s been done at Emory University Library, which has four of Salman

Rushdie’s personal computers in their collection among the rest of his “papers.” One

of these, his first — a Macintosh Performa — is currently available as a complete virtual

emulation on a dedicated workstation in the reading room. Patrons can browse Rushdie’s

desktop file system, seeing, for example, which documents he stored together in the same

folder; they can examine born-digital manuscripts for Midnight’s Children, and other works; they can even take a look at the games on the machine

(yes, Rushdie is a gamer — as his most recent work in

Luka and the Fire of Life confirms). Other writers, however, have been more hesitant. Science

fiction pioneer Bruce Sterling, not exactly a luddite, recently turned over fifteen boxes

of personal papers and cyberpunk memorabilia to the Harry Ransom Center at the University

of Texas but flatly refused to consider giving over any of his electronic records, despite

the fact that the Ransom Center is among the places leading the way in processing

born-digital collections. “I’ve never believed

in the stability of electronic archives, so I really haven’t committed to that

stuff,” he’s quoted as saying [Howard 2011b].

We don’t really know then to what extent discoveries on the order of Ken Price’s will

remain possible with the large-scale migration to electronic documents and records. The

obstacles are not only technical but also legal and societal. Sterling’s brand of

techno-fatalism is widespread, and it is not difficult to find jeremiads warning of the

coming of the digital dark ages, with vast swaths of the human record obliterated by

obsolescent storage. And hard drives and floppy disks are actually easy in the sense that

at least an archivist has hands-on access to the original storage media. The accelerating

shift to Web 2.0-based services and so-called cloud computing means that much of our data

now resides in undisclosed locations inside the enclaves of corporate server farms, on

disk arrays we will never even see or know the whereabouts of.

For several years I’ve argued this context has become equally essential for thinking

about what we now call the digital humanities, especially if it is to engage the objects

and artifacts of its own contemporary moment. Yet the massive challenges facing the

professional custodians of the records of history, science, government, and cultural

heritage in the roughly three and a half decades since the advent of personal computing

have been left largely unengaged by the digital humanities, perhaps due to the field’s

overwhelming orientation toward things past, especially things in the public domain

pastures on the far side of the year 1923 B©, before copyright. The purpose of this essay,

therefore, is to locate and triangulate the emergence of the .txtual condition — I am of

course remediating Jerome McGann’s influential notion of a “textual condition” — amid

our contemporary constructions of the “literary,” along with the changing nature of

literary archives, and lastly activities in the digital humanities as that enterprise is

now construed. In particular, I will use the example of the Maryland Institute for

Technology in the Humanities (MITH) at the University of Maryland as a means of

illustrating the kinds of resources and expertise a working digital humanities center can

bring to the table when confronted with the range of materials that archives and

manuscript repositories will increasingly be receiving.

II.

Jacques Derrida famously diagnosed the contemporary fixation on archives as a malady, an

“archive fever”: “Nothing is less reliable, nothing is less clear today than the word ‘archive,’” he tells us [Derrida 1995, 90]. In one sense that is

indisputable: recent, so-called “postmodern” archives thinkers and

practitioners such as Brien Brothman, Terry Cook, Carolyn Heald, and Heather MacNeil have

all (as John Ridener has convincingly shown [Ridener 2009]) laid stress on

core archival concepts, including appraisal, original order, provenance, and the very

nature of the record. The archives profession has thus witnessed a lively debate about

evidence, authenticity, and authority in the pages of its leading journals and

proceedings. Derrida would also remind us of the etymological ancestry of archives in the

original Greek Arkhē, which does double duty as a form of

both custodianship and authority; yet as a modern and self-consciously theorized

avocation, the archives profession is in fact relatively young. The first consolidated

statement of major methodological precepts, the so-called Dutch Manual, was published in

1898. In the United States, the National Archives and Records Administration that is the

repository of the Whitman papers recovered by Ken Price did not even exist as a public

institution until 1934; and the American archives community would not have a coherent set

of guidelines until the 1957 publication of Theodore Roosevelt Schellenberg’s Modern Archives: Principles and Techniques. (There Schellenberg

staked out a philosophy of practice for American archives in the face of a massive influx

of records from rapidly expanding post-war federal agencies.)

Today’s changes and transitions in the archives profession are brought about not only by

the rise of the born-digital but also the popularization and distribution — arguably, the

democratization — of various archival functions. Archival missions and sensibilities are

now claimed by activist groups like Jason Scott’s Archive Team, which springs into

action, guerilla fashion, to download endangered data and redistribute it through rogue

torrent sites, or else the International Amateur Scanning League and the Internet Archive,

as well as the signature Citizen Archivist initiative of Archivist of the United States

David Ferriero. The public at large seems increasingly aware of the issues around digital

preservation, as people’s personal digital mementos — their Flickr photographs and

Facebook profiles, their email, their school papers, and whatever else — are now regarded

as assets and heirlooms, to be preserved and passed down. Organizations such as the

National Digital Information Infrastructure and Preservation Program (NDIIPP) in the

United States and the European WePreserve coalition have each gone to great lengths to

build public awareness and engage in outreach and training. Still, the personal

information landscape is only becoming more complex. Typical users today have multiple

network identities, some mutually associating and some not, some anonymous some not, some

secret some not, all collectively distributed across dozens of different sites and

services, each with their own sometimes mutually exclusive and competing end user license

agreements and terms of service, each with their own set of provisions for rights

ownership and transfer of assets to next of kin, each with their own separate dependencies

on corporate stability and commitments to maintaining a given service or site. For many

now their born-digital footprint begins literally in utero, with an ultrasound JPEG passed

from Facebook or Flickr to family and friends, soon to be augmented by vastly more voluminous

digital representations as online identity grows, matures, proliferates, and becomes a

life-long asset, not unlike financial credit or social security, and perhaps one day

destined for Legacy Locker or one of the other digital afterlife data curation services

now becoming popular.

At the same time, however, the definition of archives has been codified to an

unprecedented degree as a formal model or ontology, expressed and embodied in the Open

Archival Information System, a product of the Consultative Committee for Space Data

Systems adopted as ISO Standard 14721 in the year 2002. This point is worth reinforcing:

over the last decade the OAIS has become the canonical authority for modeling

workflows around ingest, processing, and provision of access in an archives; it

establishes, at the level of both people and systems, what any archives, digital or

traditional, must do in order to act as a guarantor of authenticity:

The reference model addresses a full range of archival information

preservation functions including ingest, archival storage, data management, access, and

dissemination. It also addresses the migration of digital information to new media and

forms, the data models used to represent the information, the role of software in

information preservation, and the exchange of digital information among archives. It

identifies both internal and external interfaces to the archive functions, and it

identifies a number of high-level services at these interfaces. It provides various

illustrative examples and some “best practice” recommendations. It defines a

minimal set of responsibilities for an archive to be called an OAIS, and it also defines

a maximal archive to provide a broad set of useful terms and concepts. [Consultative Committee for Space Data Systems 2002]

The contrast between the fixity of the concept of archives as stipulated in the OAIS

Reference Model and the fluid nature of its popular usage is likewise manifest in the

migration of “archive” from noun to verb. The verb form of archive is largely a

twentieth-century construction, due in no small measure to the influence of computers and

information technology. To archive in the realm of computation originally meant to take

something offline, to relegate it to media which are not accessible or indexical via

random access storage. It has come to do double duty with the act of copying,

so archiving is coterminous with duplication and redundancy. In the arena of digital

networking, an archive connotes a mirror or reflector site, emulating content at remote

hosts to reduce the physical distance information packets have to travel. In order to

function as a reliable element of network architecture, however, the content at each

archive site must be guaranteed as identical. This notion of the archive as mirror finds

its most coherent expression in the initiative now known as LOCKSS, or Lots of Copies Keep

Stuff Safe, the recipient of multiple multi-million dollar grants and awards from public

and private funding sources.
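The requirement that mirrored copies be guaranteed identical can be illustrated with a minimal sketch. It assumes a simple majority comparison of SHA-256 digests; it is not the LOCKSS protocol itself, and the site names and file contents are hypothetical.

```python
import hashlib
from collections import Counter


def digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def find_divergent_copies(copies: dict[str, bytes]) -> list[str]:
    """Return the names of sites whose copy disagrees with the majority digest."""
    digests = {site: digest(data) for site, data in copies.items()}
    # The most common digest is treated as the reference copy (an assumption).
    majority_digest, _ = Counter(digests.values()).most_common(1)[0]
    return sorted(site for site, d in digests.items() if d != majority_digest)


# Hypothetical mirror sites holding what should be the same file.
mirrors = {
    "site_a": b"Marble Springs, draft 3",
    "site_b": b"Marble Springs, draft 3",
    "site_c": b"Marble Springs, draft 3 (corrupted)",
}

divergent = find_divergent_copies(mirrors)
```

Here `divergent` names only the site whose bytes differ from the other two. The point of the sketch is that identity among digital copies is asserted by computation over the bits, not by inspection of any one artifact.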

An archives as a noun (and a plural one at that) was the very antithesis of such notions.

Not lots of copies, but one unique artifact or record to keep safe. Unique

and irreplaceable; or “records of enduring

value,” as the Society of American Archivists says in its definition. But no

digital object is ever truly unique, and in fact our best practices rely on the assumption

that they never can be. As Luciana Duranti has put it, “In the digital realm, we can only preserve our ability to

reconstruct or reproduce a document, not the document itself.” There is a real

sense, then, in which the idea of archiving something digitally is a contradictory

proposition, not only or primarily because of the putative instability of the underlying

medium but also because of fundamentally different understandings of what archiving

actually entails. Digital memory is, as the German media theorist Wolfgang Ernst has it in

his brilliant reading of archives as “agencies of cultural feedback” [Ernst 2002], a simulation and a “semantic archaism.”

“What we call memory,” he continues, “is nothing but information scattered on hard

or floppy disks, waiting to be activated and recollected into the system of data

processing” [Ernst 2002, 109]. In the digital realm there is a real sense, a

material sense, in which archive can only ever be a verb,

marking the latent potential for reconstruction and reconstitution.

This palpable sense of difference with regard to the digital has given rise to a series

of discourses which struggle to articulate its paradoxical distance and disconnect with

the ultimately unavoidable truth that nothing in this world is ever truly immaterial. Nick

Montfort and Ian Bogost have promoted “platform studies” as a rubric for attention to

the physical constraints embodied in the hardware technologies which support computation;

in Europe and the UK, “media archaeology” (of which Ernst is but one exemplar) has

emerged as the heir to the first-wave German media theory pioneered by Friedrich Kittler;

Bill Brown, meanwhile, has given us “thing theory”; Kate Hayles, “media-specific

analysis”; Wendy Chun favors the conceit of the “enduring ephemeral”; I myself

have suggested the dualism of forensic and formal materiality, and I have seen references

in the critical literature to continuous materiality, vital materiality, liminal

materiality, and weird materiality as well. All of these notions find particular resonance

in the realm of archives, since an archives acts as a focalizer for, as Bill Brown might

put it, the thingness of things, that is the artifactual aura that attends

even the most commonplace objects. The radical reorientation of subjectivity and

objectivity implicit in this world view is also the ground taken up by the rapidly rising

conversation in object-oriented ontology (OOO), including Bogost (again) in Alien Phenomenology, as well as various strains of post-humanism,

and the new materialism of political philosophy thinkers like Diana Coole and Samantha

Frost.

Clearly, then, questions about objects, materiality, and things are once again at the

center of a vibrant interdisciplinary conversation, driven in no small measure by the

obvious sense in which digital objects can, demonstrably, function as “primary

records” (in the MLA’s parlance), thereby forcing a confrontation between our

established notions of fixity and authenticity and the unique ontologies of data,

networks, and computation. Abby Smith puts it this way:

When all data are recorded as 0’s and 1’s, there is, essentially, no object that exists

outside of the act of retrieval. The demand for access creates the “object,” that

is, the act of retrieval precipitates the temporary reassembling of 0’s and 1’s into a

meaningful sequence that can be decoded by software and hardware. A digital

art-exhibition catalog, digital comic books, or digital pornography all present

themselves as the same, all are literally indistinguishable one from another during the

storage, unlike, say, a book on a shelf. [Smith 1998]

The OAIS reference model handles this Borgesian logic paradox through a concept known as

Representation Information, which means that it is incumbent upon the archivist to

document all of the systems and software required to recreate or reconstitute a digital

object at some future point in time — a literal object lesson in the sort of unit

operations Bogost now advocates to express the relationships between “things.”

“[I]n order to preserve a digital object,” writes

Kenneth Thibodeau, Director of Electronic Records Programs at the National Archives and

Records Administration, “we must be able to identify and

retrieve all its digital components.”
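A minimal sketch may make the role of Representation Information concrete. It assumes a toy record that pairs a bitstream with a label saying how to interpret it; the class, field names, and labels are hypothetical illustrations and are not drawn from the OAIS specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoredObject:
    """A bitstream plus a note on how to interpret it (hypothetical fields)."""
    bits: bytes
    representation: str  # e.g. "text/ascii" or "uint16/big-endian"


def render(obj: StoredObject):
    """Reconstitute a meaningful object from stored bits, or fail without guidance."""
    if obj.representation == "text/ascii":
        return obj.bits.decode("ascii")
    if obj.representation == "uint16/big-endian":
        return int.from_bytes(obj.bits, "big")
    raise ValueError(f"no representation information for {obj.representation!r}")


raw = b"Hi"  # the same two bytes in both cases
as_text = render(StoredObject(raw, "text/ascii"))
as_number = render(StoredObject(raw, "uint16/big-endian"))
```

The same two stored bytes yield the string `"Hi"` under one description and the integer 18537 under the other. In storage they are indistinguishable; only the accompanying description of how to decode them turns the bits into an object.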

Here I want to go a step further and suggest that the preservation of digital objects is

logically inseparable from the act of their creation — the lag between

creation and preservation collapses completely, since a digital object may only ever be

said to be preserved if it is accessible, and each individual access creates

the object anew. One can, in a very literal sense, never access the

“same” electronic file twice, since each and every access constitutes a distinct

instance of the file that will be addressed and stored in a unique location in computer

memory. The analogy as it is sometimes given is if one had to build a Gutenberg press from

scratch, set the type (after first casting it from molds, which themselves would need to

be fabricated), and print and bind a fresh copy of a book (first having made the paper,

spun the sewing thread, mixed the glue, etc.) each and every time one wanted to open one.

In the terms I put forth in Mechanisms, each access engenders

a new logical entity that is forensically individuated at the level of its physical

representation on some storage medium. Access is thus duplication, duplication is

preservation, and preservation is creation — and recreation. That is the

catechism of the .txtual condition, condensed and consolidated in operational terms by the

click of a mouse button or the touch of a key.
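The claim that one never accesses the “same” file twice can be sketched at the level of program memory. This is an analogy only, assuming CPython's behavior, where each read allocates a fresh bytes object; it does not model the forensic level of the storage medium, and the file contents are hypothetical.

```python
import os
import tempfile


def read_twice(path: str) -> tuple[bytes, bytes]:
    """Open and read the same file two separate times."""
    with open(path, "rb") as f:
        first = f.read()
    with open(path, "rb") as f:
        second = f.read()
    return first, second


def demo() -> tuple[bool, bool]:
    """Return (contents are equal, both reads are the very same object)."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"a .txtual condition")
        path = tmp.name
    try:
        first, second = read_twice(path)
    finally:
        os.remove(path)  # clean up the temporary file
    return first == second, first is second


same_content, same_object = demo()
```

The two reads compare equal byte for byte, yet they are distinct objects occupying distinct locations in memory: each access has produced a new instance.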

III.

In May of 2007 the Maryland Institute for Technology in the Humanities (MITH) at the

University of Maryland acquired a substantial collection of computer hardware, storage

media, hard-copy manuscripts, and memorabilia from the author, editor, and educator Deena

Larsen. MITH is not an archives in any formal institutional sense: rather, it is a working

digital humanities center, with a focus on research, technical innovation, and supporting

new modes of teaching, scholarship and public engagement. While this poses some obvious

challenges in terms of our responsibilities to the Larsen Collection, there are also some

unique opportunities. Without suggesting that digital humanities centers summarily assume

the functions of archives, I want to describe some of the ways in which MITH was

positioned to embrace the opportunity to receive a large complex assemblage of

born-digital materials, how the availability of these materials has furthered a digital

humanities research agenda, and how that research agenda is in turn creating new models

for the stewardship of such a collection.

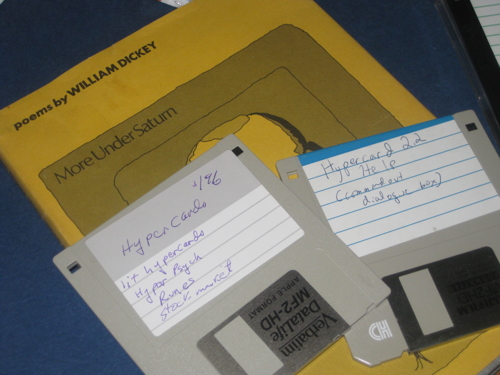

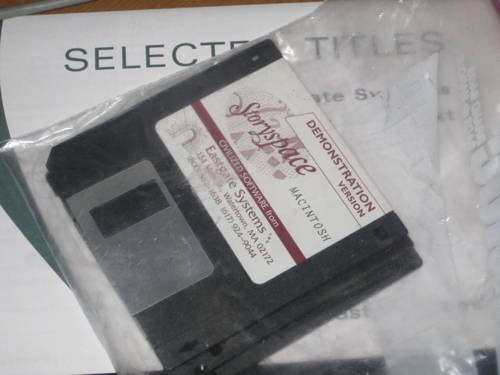

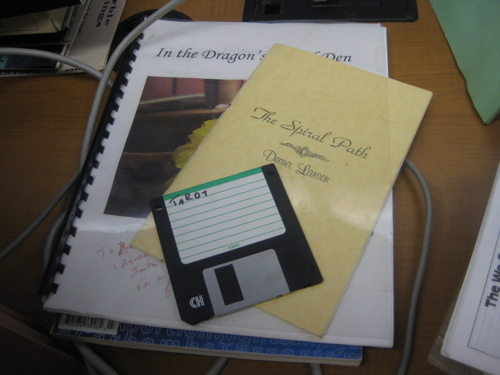

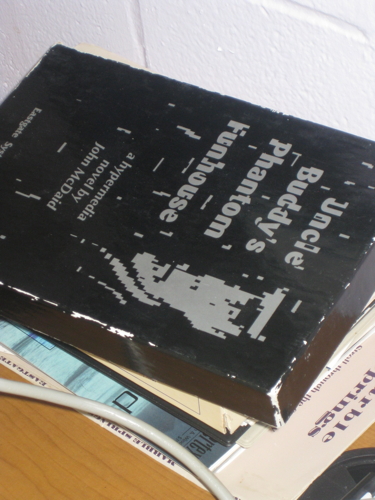

Deena Larsen (b. 1964) has been an active member of the creative electronic writing

community since the mid-1980s. She has published two pieces of highly-regarded hypertext

fiction with Eastgate Systems,

Marble Springs (1993) and

Samplers (1997), as well as additional works in a variety

of outlets on the Web. A federal employee, she lives in Denver and is a frequent attendee

at the annual ACM Hypertext conference, the meetings of the Electronic Literature

Organization (where she has served on the Board of Directors), and kindred venues.

Crucially, Larsen is also what we might nowadays term a “community organizer”: that

is, she has an extensive network of correspondents, regularly reads drafts and work in

progress for other writers, and is the architect of some landmark events, chief amongst

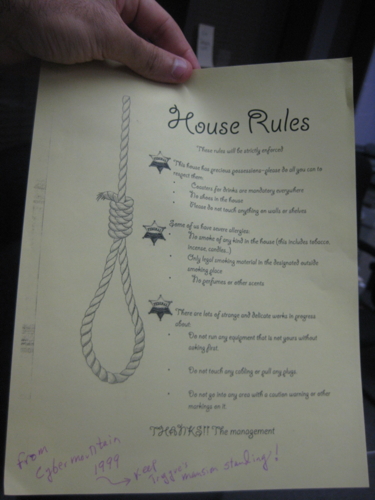

them the CyberMountain writers’ workshop held outside of Denver in 1999. As she herself

states:

I conducted writers workshops at these conferences

and even online. We worked together to develop critiques — but more importantly methods

of critiques — of hypertext, as well as collections of schools of epoetry, lists and

groups. And thus I have been lucky enough to receive and view texts in their infancy —

during the days when we thought floppy disks would live forever. And thus I amassed this

chaotic, and perhaps misinformed treasure trove that I could bequeath to those

interested in finding the Old West goldmines of the early internet days. [Larsen 2009]

What this means for the collection at MITH is that in addition to her own writing and

creative output, Larsen also possessed a broad array of material by other electronic

literature authors, some of it unpublished, unavailable, or believed otherwise lost,

effectively making her collection a cross-section of the electronic writing community

during its key formative years (roughly 1985-2000). The files contain multiple versions of

nationally recognized poet William Dickey’s electronic works, Dickey’s student work,

nationally recognized poet Stephanie Strickland’s works, M.D. Coverley’s works, Kathryn

Cramer’s works, If Monks had Macs, the Black Mark (a HyperCard stack developed at the 1993 ACM Hypertext conference),

Izme Pass, Chris Willerton’s works, Mikael And’s works, Jim

Rosenberg’s works, Michael Joyce and Carolyn Guyer’s works that were in progress, Stuart

Moulthrop’s works, George Landow’s works and working notes, textual games from Nick

Montfort, Coloring the Sky (a collaborative work from Brown

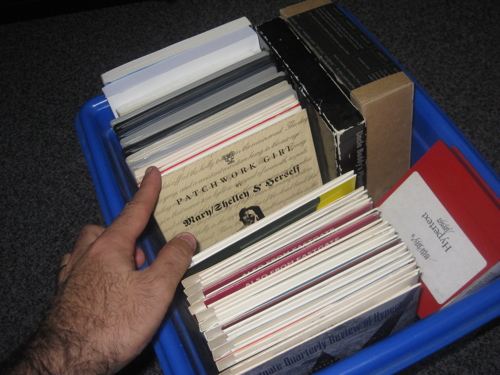

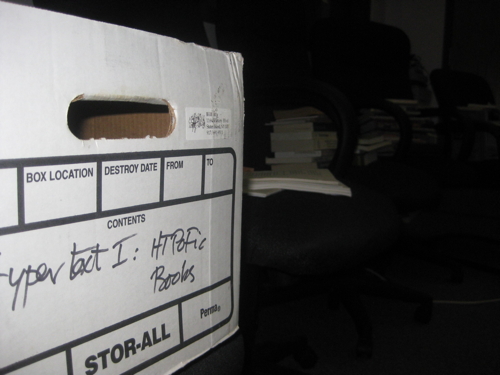

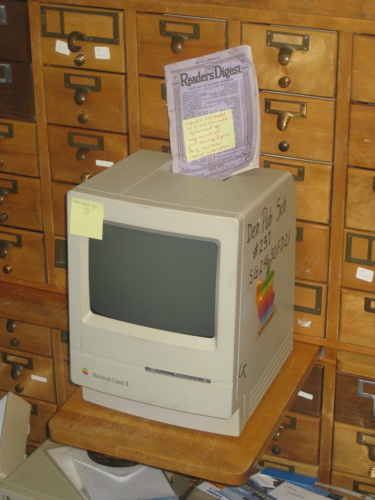

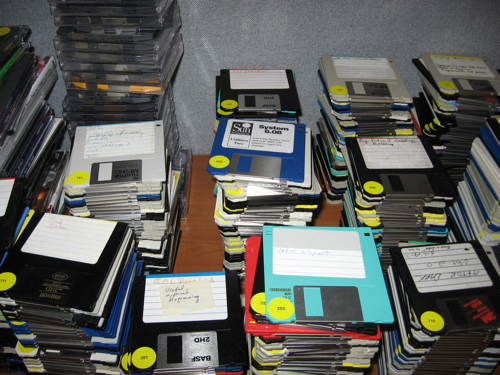

in 1992-94), and Tom Trelogan’s logic game. Most of the data are on 3½-inch diskettes

(over 800 of them) or CD-ROMs. The Deena Larsen Collection also includes a small number of

Zip disks, and even some audiovisual media such as VHS tapes and dictation cassettes. The

hardware at present consists of about a dozen Macintosh Classics, an SE, and a Mac Plus

(these were all machines that Deena used to install instances of her Marble Springs), as well as a PowerBook laptop that was one of her personal

authoring platforms. All of this is augmented by a non-trivial amount of analog archival

materials, including hard-copy manuscripts, newspaper clippings, books, comics, operating

manuals, notebooks (some written in Larsen’s encrypted invented personal alphabet),

syllabi, catalogs, brochures, posters, conference proceedings, ephemera, and yes, even a

shower curtain, about which more below.

Some may wonder what led Larsen to MITH in the first place. The circumstances are

particularized and not necessarily easily duplicated even if that were desirable. First,

Deena Larsen and I had a friendly working relationship; we had met some years ago on the

conference circuit, and I had corresponded with her about her work (still unfinished)

editing William Dickey’s HyperCard poetry. MITH was also, at the time, the institutional

home of the Electronic Literature Organization, and our support for ELO signaled our

interest in engaging contemporary creative forms of digital production as well as the

more traditional cultural heritage that is the mainstay of digital humanities activities.

The Electronic Literature Organization, for its part, had a record of raising questions

about the long-term preservation and accessibility of the work of its membership: at a

UCLA conference in 2001, N. Katherine Hayles challenged writers, scholars, and

technologists to acknowledge the contradictions of canon formation and curriculum

development, to say nothing of more casual readership, in a body of literature that is

obsolescing with each new operating system and software release. The ELO responded with an

initiative known as PAD which produced the widely-disseminated pamphlet Acid-Free Bits that furnished writers with practical steps they

could take to begin ensuring the longevity of their work, as well as a longer, more

technical and ambitious white paper, “Born Again Bits,” which

outlined a theoretical and methodological paradigm based on an XML schema and a

“variable media” approach to the representation and reimplementation of electronic

literary works. Given ELO’s residency at MITH, and MITH’s own emerging research agenda in

these areas (in addition to the ELO work, the Preserving Virtual Worlds project had just

begun), Larsen concluded that MITH was well positioned to assume custody of her

collection.

From MITH’s perspective, the Deena Larsen Collection was an excellent fit with our

mission and sense of purpose as a digital humanities center. Our emerging research agendas

in digital preservation argued in favor of having such a collection in-house, one that

could function as a testbed and teaching resource. Our connections at the University of

Maryland’s Information School, which includes an archives program, further encouraged us

in this regard. Finally and most frankly, MITH was in a position to operate free of some

of the limitations and constraints that a library special collections unit would face if

it acquired the same material. Because our institutional mandate does not

formally entail the stewardship of records of enduring value, we enjoy the privilege of

picking and choosing the things we think will be interesting to work on. There are no

deadlines with regard to processing the collection nor is it competing with other

processing tasks. We were free to create our own workflows and milestones, which was

essential given the experimental nature of much of the material. We are free to experiment

and take risks and, even, dare I say it, mess up.

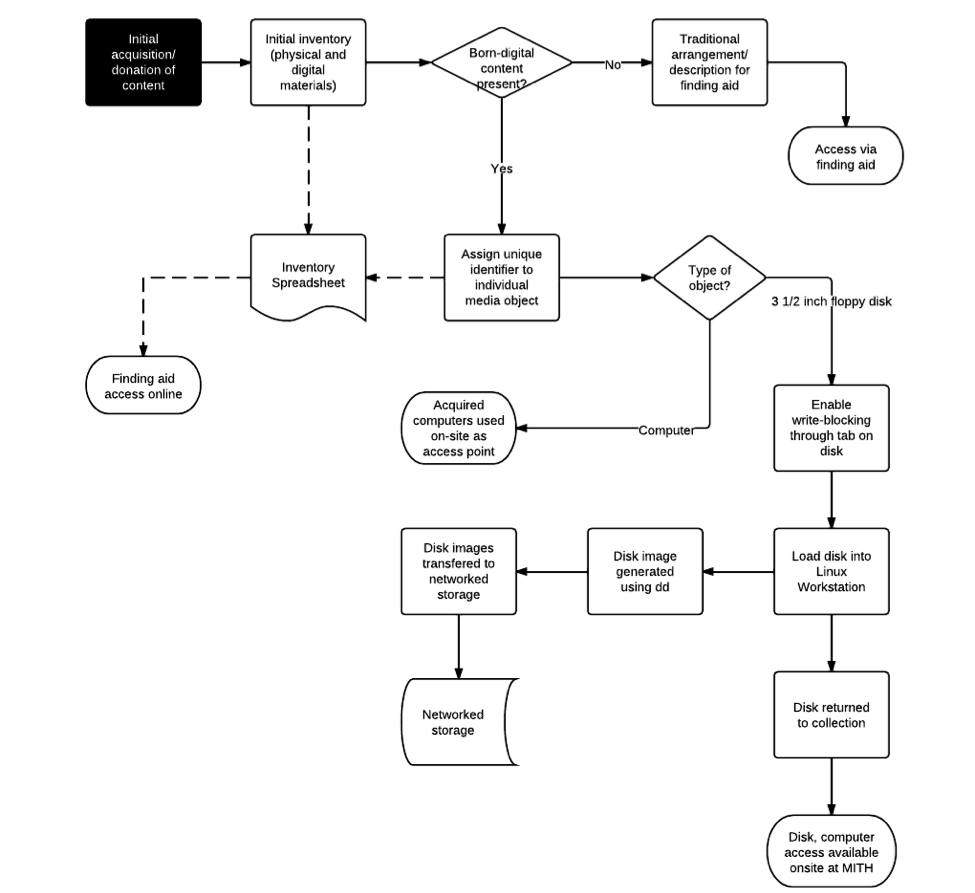

Professional archives create workflows to manage the many steps involved in the

appraisal, accession, arrangement, description, and preservation of the documents and

records entrusted to their care. Once a deed of gift had been signed, our initial efforts

consisted in what an archivist would term arrangement and description, eventually

resulting in a pair of Excel spreadsheets which function as finding aids (see Figure 1,

below, for a schematic of our complete workflow). No effort was made to respect the

original order of the materials, an archival donnée that makes sense

for the records of a large organization but seemed of little relevance for an

idiosyncratic body of materials we knew to have been assembled and packed in haste.

Several things about the collection immediately became apparent to everyone who worked on

it. First and most obviously, it was a hybrid entity: both digital and analog materials

co-existed, the digital files themselves were stored on different kinds of media and

exhibited a multiplicity of different formats, and most importantly of all, the digital

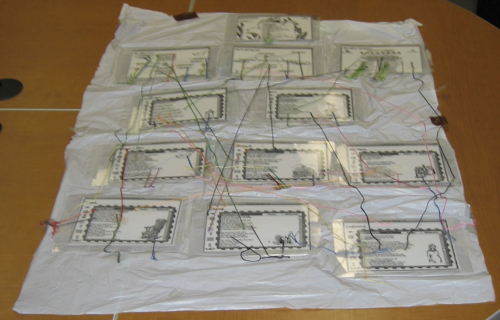

and analog materials manifested a complex skein of relationships and dependencies. Marble Springs alone (or portions thereof) exists in multiple

digital versions and states on several different kinds of source media, as well as in

bound drafts of Larsen’s MA thesis, in notes and commentary in her journals, and as a

sequence of laminated screenshots mounted on a vinyl shower curtain and connected by

colored yarn to map the affective relations between the nodes. Second, as noted above, the

Collection is not solely the fonds of Deena Larsen herself; it includes

numerous works by other individuals, as well as third party products such as software,

some of it licensed and some of it not, some of it freeware, abandonware, and greyware.

(This alone would likely have proved prohibitive had special collections here on campus

sought to acquire this material; while the deed of gift makes no warrant as to copyright,

the provisions of the Digital Millennium Copyright Act raise questions about the legality

of transferring software without appropriate licensing, a contradiction that becomes a

paralyzing constraint when dealing with a large, largely obsolesced collection such as

this.) The Larsen Collection is thus a social entity, not a single author’s

papers. Finally, of course, the nature of the material is itself highly ambitious,

experimental, and avant garde. Larsen and the other writers in her circle used software

that was outside of the mainstream, and indeed sometimes they invented their own software

and tools; their works often acknowledge and include the interface and hardware as

integral elements, thus making it difficult to conceive of a satisfactory preservation

program based on migration alone; and much of what they did sought to push the boundaries

of the medium and raise questions about memory, representation, and archiving in overtly

self-reflexive ways.

None of this, I hasten to add, is new or unique to the Deena Larsen Collection in and of

itself. All archival collections are “hybrid” and “social” to greater or lesser

degrees, and all of them present challenges with regard to their materiality and their own

status as records. But the Larsen Collection dramatizes and foregrounds these

considerations in ways that other collections perhaps do not, and thus provides a vehicle

for their hands-on exploration in a setting (MITH) that thrives on technical innovation

and intellectual challenges.

In late 2011, Bill Bly, also an Eastgate author, agreed to consign his own considerable

collection of electronic literature and author’s papers to MITH, where it now joins

Deena’s materials. The two collections thus constitute a very substantial resource for

those interested in the formative years of the electronic writing community. In time, we

also hope to build functional connections to other archival collections of writers in

Larsen and Bly’s circle, notably the Michael Joyce papers at the Harry Ransom Center and

the papers of Judy Malloy and Stephanie Strickland at Duke University Library.

The Deena Larsen Collection was one of several collections surveyed in a

multi-institutional NEH-funded report on “Approaches to Managing and

Collecting Born-Digital Literary Content for Scholarly Use,” and is currently

being used as a testbed for the BitCurator environment under development by MITH and a

team from the University of North Carolina at Chapel Hill’s School of Information and

Library Science. Other users have included graduate and undergraduate students at the

University of Maryland. Recently we have also begun seeing external researchers seek

access to the collection, requests which we are happy to accommodate. Leighton

Christiansen, a Master’s student in the Graduate School of Library and Information Science

at the University of Illinois, spent several days at MITH in April 2011 researching the

Marble Springs materials as part of his thesis on how to

preserve the work. In May 2011, Deena herself visited the collection, for a two-week

residence we immediately dubbed “Deenapalooza.” The MITH

conference room was converted to something resembling a cross between a conservator’s lab

and a digital ER, as Deena, first with Bill Bly and then with Leighton (who returned),

worked to create a collaborative timeline of early electronic literature, restoring

several key works to functionality in the process, including Deena’s never before seen 2nd

edition of Marble Springs. A visit from a team of ACLS-funded

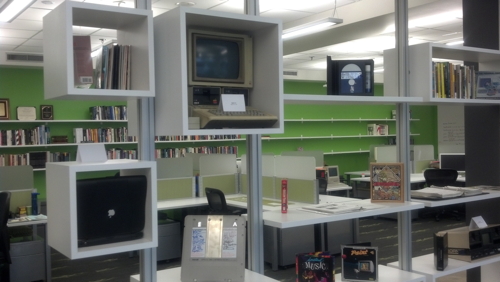

scholars working on early electronic literature is planned for the coming year. The image

gallery below documents some of the holdings in the University of Maryland's Larsen and

Bly Collection — consider it a proleptic archaeology of a future literary.

IV.

My first computers were Apples. I had a IIe and a IIc growing up, and, at my father’s

insistence, both remained boxed at the back of a closet long after I had moved on to other

hardware (and away from home). When my parents sold their house, however, I had to take

custody of the machines or see them in a dumpster. I decided to relocate them to MITH,

along with hundreds of 5¼-inch diskettes and a stack of manuals and early programming

books. Not long afterwards Doug Reside arrived with his collection of Commodores, early

software (including a complete run of Infocom’s interactive fiction titles), and old

computer magazines. Visitors to MITH began noticing our vintage gear. The calls and emails

began. “I have a TRS-80 Model 100… are you interested?”

“What about this old Osborne?”

“You know, I just found some Apple III disks…”

Initially the old computers were just interesting things to have around the office.

Texture. Color. Conversation pieces. As MITH’s research agenda around born-digital

collections took shape, however, we began to realize that the machines served a dual role:

on the one hand objects of preservation in and of themselves, artifacts that we sought to

sustain and curate; but on the other hand, the vintage systems served as functional

instruments, invaluable assets to aid us in retrieving data from obsolescent media and

understanding the material affordances of early computer systems. This dual-use model

bears directly on the OAIS mandate that information be retained in an “independently

understandable” form. While the Reference Model acknowledges that such criteria will

be “in general, quite subjective,” the essential concept here is that of

Representation Information, as introduced in section II above. Best practices for

provision of Representation Information for complex born-digital objects, including

software, are still very much evolving; one set of examples has been provided by the

Preserving Virtual Worlds project, which has documented and implemented a complete OWL

schema for its content packaging, though that kind of high-end curatorial attention is

unlikely to scale. Indeed, in the case of the Larsen Collection, limited resources have

meant limited measures. We knew that one early priority had to be migrating data from the

original storage media, and so we have made an initial pass at imaging the roughly 800

3½-inch diskettes, with around an 80% success rate; however, the resulting images do not

have any associated metadata (“Preservation Description Information” in OAIS

parlance), and in practice we have maintained them merely as a “dark archive,” that

is, an offline repository with no provision for general access.
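To make the missing metadata concrete: fixity information is one of the categories that OAIS groups under Preservation Description Information, and it can be generated for a directory of disk images with a few lines of code. The following is a minimal sketch in Python, not a description of MITH's actual workflow; the function names, the `*.img` file extension, and the layout of the manifest records are all assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Return the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(image_dir: Path, pattern: str = "*.img") -> list[dict]:
    """Collect name, size, and checksum for each disk image in a directory.

    The "*.img" pattern is a hypothetical naming convention, not one
    documented for the collection.
    """
    records = []
    for image in sorted(image_dir.glob(pattern)):
        records.append({
            "file": image.name,
            "size_bytes": image.stat().st_size,
            "sha256": sha256_of(image),
        })
    return records
```

Calling `build_manifest(Path("images"))` on a hypothetical `images` directory would return one record per disk image. Checksums of this kind record only fixity; they say nothing about provenance or context, so a manifest like this would close only part of the gap described above.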

Our best Representation Information is, one could argue, embodied in the

working hardware we maintain for access to the original media. In other words, we rely

largely on the vintage computers in the Larsen Collection for access to data in legacy

formats. Bill Bly, when he visited MITH, spoke unabashedly about the joy he felt in firing

up the old hardware, the dimensions and scale of his real-world surroundings instantly

contracting and realigning themselves to the 9-inch monochrome display of the Mac Classic

screen, a once familiar focalizer. Nonetheless, the contention that so vital an aspect of

the OAIS as Representation Information can be said to be “embodied” in a piece of

hardware is bound to be controversial, so let me attempt to anchor it with some additional

context.

Wolfgang Ernst, quoted earlier, has emerged as one of the more provocative figures in the

loose affiliation of thinkers self-identifying with media archaeology. As Jussi Parikka

has documented, for Ernst, media archaeology is not merely the excavation of neglected or

obscure bits of the technological past; it is a methodology that assumes the primacy of

machine actors as autonomous agents of representation. Or in Ernst’s own words, rather

more lyrically: “media archeology is both a

method and aesthetics of practicing media criticism […] an awareness of

moments when media themselves, not exclusively human any more, become active

‘archeologists’ of knowledge”

[

Ernst 2011, 239]. What Ernst is getting at is a semiotic broadening

beyond writing in the literary symbolic sense to something more like inscription and what

Parikka characterizes as “a materialism of

processes, flows, and signals”; and the flattening of all data as traces

inscribed on a recording medium which Ernst dubs “archaeography,” the archive writing

itself. What separates digital media and practices of digital archiving radically from the

spatial organization of the conventional archive (embodied as physical repository) is the

so-called “time-criticality” of digital media, the inescapable temporality that

accounts for observations such as Duranti’s about the errant ontology of digital

documents. Indeed, while the chronological scope of the Larsen Collection is easily

circumscribed, it resists our instinct toward any linear temporal trajectory. On the one

hand, the avant garde nature of the material is such that traditional procedures of

appraisal, arrangement and description, and access must be challenged and sometimes even

overruled. On the other hand, however, the media and data objects that constitute the

born-digital elements of the Collection are themselves obsolescing at a frightening rate.

This ongoing oscillation between obsolescence and novelty, which manifests itself

constantly in the workflows and routines we are evolving, is characteristic of the .txtual

condition as I have come to understand it; or as Wolfgang Ernst puts it (2011), seemingly

to confirm Bly’s observations above, “

‘Historic’ media objects are radically present when they still function, even if

their outside world has vanished”

[

Ernst 2011, 241].

While the OAIS never specifically stipulates it, the assumption is that Representation

Information must ultimately take the form of human-readable text. The Reference Model does

incorporate the idea of a “Knowledge Base,” and offers the example of “a person who has a Knowledge Base that includes

an understanding of English will be able to read, and understand, an English

text”

[

Consultative Committee for Space Data Systems 2002]. Lacking such a Knowledge Base, the Model goes on to

suggest, Representation Information would also have to include an English dictionary and

grammar. We have what I would describe as an epistemological positivism, one that assumes

that a given chain of symbolic signification will ultimately (and consistently) resolve to

a stable referent. Thus one might appropriately document a simple text file by including a

pointer to a reference copy of the complete ASCII standard, the supposition being that a

future member of the designated user community will then be able to identify the bitstream

as a representation of ASCII character data, and apply an appropriate software decoder.

Yet Ernst’s archaeography is about precisely displacing human-legible inscriptions as the

ultimate arbiters of signification. The examples he gives, which are clearly reminiscent

of Friedrich Kittler’s writings on the technologies of modernity, include ambient noise

embedded in wax cylinder recordings and random details on a photographic negative, for

instance (and this is my example, not Ernst’s) in the 1966 film

Blow-Up where an image is placed under extreme magnification to reveal a detail

that divulges the identity of a murderer. For Ernst, all of these semiotic events are

flattened by their ontological status as data, or a signal to be processed by digital

decoding technologies that makes no qualitative distinction between, say, a human voice

and background noise. In this way “history” becomes a form of media, and the writing

of the literary — its alphabetic semantics — is replaced by signal flows capturing the

full spectrum of sensory input.
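The ASCII case mentioned earlier in this passage can be made concrete. What follows is a minimal sketch in Python of the step a future user would perform once the bitstream has been identified as ASCII character data; the function names are hypothetical, and the sketch assumes the file really is plain 7-bit ASCII.

```python
def looks_like_ascii(bitstream: bytes) -> bool:
    """Report whether every byte falls within the 7-bit ASCII range."""
    return all(byte < 0x80 for byte in bitstream)


def decode_ascii(bitstream: bytes) -> str:
    """Map each byte to its ASCII character.

    Raises UnicodeDecodeError if any byte lies outside the standard,
    which is the point at which the Representation Information fails.
    """
    return bitstream.decode("ascii")
```

The decoder resolves each byte to a character and nothing more. Whatever falls outside the standard is either rejected or ignored, which is the assumption of a stable referent that the paragraph above describes.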

What becomes dubious from the standpoint of archival practice — affixing the OAIS concept

of Representation Information to actual hardware and devices — thus becomes a legitimate

mode of media archaeology after Ernst, and indeed Ernst has enacted his approach in the

archaeological media lab at the Institute of Media Studies in Berlin, where “old

machines are received, repaired, and made operational…all of the objects are

characterized by the fact that they are operational and hence relational, instead of

collected merely for their metadata or design value”

[

Parikka 2011, 64 [caption]]. A similar approach has been adopted by

Lori Emerson at the University of Colorado at Boulder, where she has established an

Archeological Media Lab: “The Archeological

Media Lab…is propelled equally by the need to maintain access to early works of

electronic literature…and by the need to archive and maintain the computers these works

were created on.” More recently, Nick Montfort at MIT has opened the “Trope

Tank,” a laboratory facility that makes obsolete computer and game systems available

for operative use. Thus, the Deena Larsen Collection, housed within a digital humanities

center, begins to take its place in a constellation of media archaeological practices that

take their cues from both the professionalized vocation of archival practice and

the theoretical precepts of media archaeology, platform studies, and related endeavors.

Media archaeology, which is by no means co-identical with the writings and positions of

Ernst, offers one set of critical tools for coming to terms with the .txtual condition.

Another, of course, is to be found in the methods and theoretical explorations of textual

scholarship, the discipline from which McGann launched his ongoing program to revitalize

literary studies by restoring to it a sense of its roots in philological and documentary

forms of inquiry. As I’ve argued at length elsewhere, the field that offers the most

immediate analog to bibliography and textual criticism in the electronic sphere is

computer forensics, which deals in authenticating, stabilizing, and recovering digital

data. One early commentator is prescient in this regard: “much will be lost, but even when disks become unreadable, they

may well contain information which is ultimately recoverable. Within the next ten years,

a small and elite band of e-paleographers will emerge who will recover data signal by

signal”

[

Morris 1998, 33]. Digital forensics is the point of practice at which

media archaeology and digital humanities intersect. Here then is a brief .txtual tale from

the forensics files.

Paul Zelevansky found me through a mutual contact. I’d like to imagine the scene

beginning with his shadow against my frosted glass door as if in an old private eye movie,

but in truth we just exchanged e-mails. Paul is an educator and artist who in the 1980s

published a highly regarded trilogy of artist’s books entitled The

Case for the Burial of Ancestors. One of these also included a 5¼-inch floppy

disk with a digital production called Swallows. It was

formatted for the same Apple II line of computers I once worked on, and programmed in

Forth-79. For many years, Paul had maintained a vintage Apple that he would occasionally

boot up to revisit Swallows, but when that machine gave up

the ghost he was left with only the disks, their black plastic envelopes containing a thin

circular film of magnetic media that offered no way to decipher or transcribe their

contents. The bits, captured on disks you could hold in your hand, may as well have been

on the moon.

So Paul came to see me, and we spent an afternoon in my office at the University of

Maryland using my old computing gear and a floppy disk controller card to bring

Swallows back to life (see Figure 15).

The actual process was trivial: it succeeded on the first try, yielding a

140-kilobyte “image” file, the same virtual dimensions as the original diskette. We

then installed an Apple II emulator on Paul’s Mac laptop and booted it with the disk

image. (An emulator is a computer program that behaves like an obsolescent computer

system. Gaming enthusiasts cherish them because they can play all the old classics from

the arcades and consoles, like the Atari 2600.) The emulator emitted the strident beep

that a real Apple would have made when starting up. It even mimicked the sounds of the

spinning drive, a seemingly superfluous effect, except that it actually provides crucial

aural feedback — a user could tell from listening to the drive whether the computer was

working or just hung up in an endless loop. After a few moments,

Swallows appeared on the Apple II screen, a Potemkin raster amid the flotsam

and jetsam of a 21st-century desktop display.
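The 140-kilobyte figure follows from the disk geometry. Assuming the common 16-sector Apple II format of 35 tracks, 16 sectors per track, and 256 bytes per sector (values not stated above and supplied here as an assumption), the arithmetic can be sketched in Python as follows. The function names are hypothetical.

```python
from pathlib import Path

# Assumed geometry of a 16-sector Apple II 5.25-inch diskette.
TRACKS = 35
SECTORS_PER_TRACK = 16
BYTES_PER_SECTOR = 256


def expected_image_size() -> int:
    """Size in bytes of a complete sector-by-sector image."""
    return TRACKS * SECTORS_PER_TRACK * BYTES_PER_SECTOR


def is_complete_image(path: Path) -> bool:
    """Check that an image file has exactly the expected size."""
    return path.stat().st_size == expected_image_size()
```

Under these assumptions the product is 143,360 bytes, or exactly 140 kilobytes of 1,024 bytes each. A size check of this kind confirms only that every sector was read, not that the contents are intact.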

In February 2012, Paul released Swallows 2.0, which he

describes as a “reworking” of the original. (The piece relies upon video captures

from the emulation intermixed with new sound effects and motion sequences.) The disk image

of the original, meanwhile, now circulates among the electronic literature community with

his blessing. As satisfying as it was retrieving Swallows,

however, meet-ups arranged through e-mail to recover isolated individual works are not a

broadly reproducible solution. Most individuals must still be their own digital

caretakers. In the 21st century, bibliographers, scholars, archaeologists, and archivists

must be wise in the ways of a past that comes packaged in the strange cant of disk

operating systems and single- and double-density disks.

V.

The work of this essay has been in triangulating among the conditions of the future

literary with the shape of its archives and the emergence of the digital humanities as an

institutionalized field. Yet even as the material foundations of the archival enterprise

are shifting as a result of the transformations wrought by what I have been calling the

.txtual condition, archivists who are actively engaging with the challenges presented by

born-digital materials remain a minority within their profession. The issues here are

manifold: most have to do with limited resources in an era of fiscal scarcity, limited

opportunities for continuing education, and of course a still-emerging consensus around

best practices for processing born-digital collections themselves, as well as the inchoate

nature of many of the tools essential to managing digital archival workflows.

I and others have advocated for increased collaboration between digital archivists and

digital humanities specialists. Yet collisions and scrapes and drive-bys on blogs and

Twitter have also produced some unfortunate misunderstandings, many of them clustering

around careless terminology (chief amongst which is doubtless the word “archive”

itself — digital humanists have busied themselves with the construction of online

collections they’ve dubbed “archives” since the early 1990s and suggestions for

alternatives, such as John Unsworth’s “thematic resource collection,” have never

fully caught on). Kate Theimer is representative of some of these tensions and

frustrations when she insists “I don’t think

it’s unfair to assert that some DHers don’t ‘get’ archives, and by ‘get’ I

mean understand the principles, practice, and terminology in the way that a trained

archivist does.” And, in a like vein, a blog posting from one digital humanist

likewise invoking

Archive Fever drew this stern comment:

“Archival science is a discipline similar

to library science. The[re] are graduate programs in this, and a Society of American

Arch[i]vists, which recently celebrated its 73rd birthday. So people have had these

concerns long before Derrida in 1994”

[

Keathley 2010]. Or finally, as an archivist once said to me, “Yeah, I’ve had those conversations with scholars

who come to me with a certain glimmer in their eye, telling me they’re going to

problematize what I do.”

All three commentators are correct. Most important is not that digital humanists become

digital archivists, but that each community think about how best to leverage whatever

knowledge, insights, tools, and habits it has evolved so as to enter into fruitful joint

collaborations. Over the last few years, because of the projects I’ve chosen to work on,

I’ve been privileged to have an unusual degree of entrée into the deliberations and

conversations within the professional archives community. What it comes down to is this:

collaborating with archivists means collaborating with archivists. It means

inviting them to your meetings and understanding their principles, practices and

terminology, as well as their problems and points of view. It means acknowledging that

they will have expertise you do not, respecting their disciplinary history and its

institutions. Archivists are, as a community, exquisitely sensitized to the partial,

peculiar, often crushingly arbitrary and accidental way that cultural records are actually

preserved. They are trained to think not only about individual objects and artifacts but

also about integrating these items into the infrastructure of a collecting repository that

can ensure access to them over time, act as guarantors of their authenticity and

integrity, perform conservation as required, and ensure continuance of custodianship.

Here then are some specifics I have considered as to how digital humanities might

usefully collaborate with those archivists even now working on born-digital

collections:

- Digital archivists need digital humanities researchers and subject experts to

use born-digital collections. Nothing is more important. If humanities

researchers don’t demand access to born-digital materials then it will be harder to get

those materials processed in a timely fashion, and we know that with the born-digital

every day counts.

- Digital humanists need the long-term perspective on data that archivists have.

Today’s digital humanities projects are, after all, the repository objects of tomorrow’s

born-digital archives. Funders are increasingly (and rightfully) insistent about the

need to have a robust data management and sustainability plan built into project

proposals from the outset. Therefore, there is much opportunity for collaboration and

team-building around not only archiving and preservation, but the complete data curation

cycle. This extends to the need to jointly plan around storage and institutional

infrastructure.

- Digital archivists and digital humanists need common and interoperable digital

tools. Open source community-driven development at the intersection of the needs of

digital archivists, humanities scholars, and even collections’ donors should become an

urgent priority. The BitCurator project MITH has undertaken with the School of

Information and Library Science at UNC Chapel Hill is one example. Platforms like SEASR

and Bamboo have the potential to open up born-digital collections to analysis through

techniques such as data mining, visualization, and GIS, all of which have gained

traction in digital humanities.

- Digital humanists need the collections expertise of digital archivists. Scholars in

more traditional domains have long benefited from the knowledge of archivists and

curators, who can come to know a collection intimately. In the same way, born-digital

collections can be appraised with the assistance of the archivist who processed them,

paving the way for discovery of significant content and interesting problems to work on.

Likewise, archives offer the potential of alternative access models which are perhaps

usefully different from those of the digital humanities community, which often insists

upon open, unlimited access to everything in the here and now.

- Digital archivists need cyberinfrastructure. Here the digital humanities community

has important lessons and insights to share. Many smaller collecting institutions simply

cannot afford to acquire the technical infrastructure or personnel required to process

complex born-digital materials. Fortunately, not every institution needs to duplicate

the capabilities of its neighbors. Solutions here range from developing the means to

share access to vintage equipment to distributed, cloud-based services for digital

collections processing. Digital humanities centers have been particularly strong in

furthering the conversation about arts and humanities cyberinfrastructure, and so there

is the potential for much cross-transfer of knowledge here. Likewise, the various

training initiatives institutionalized within the digital humanities community, such as

the Digital Humanities Summer Institute, have much to offer to archivists.

- Digital archivists and digital humanists both need hands-on retro-tech know-how.

Digital humanities curricula should therefore include hands-on training in retro-tech,

basic digital preservation practices like disk imaging and the use of a hex editor,

basic forensic computing methodologies, exposure to the various hardware solutions for

floppy disk controllers like the Software Preservation Society’s KryoFlux, and

introduction to basic archival tools and metadata standards, not only EAD but emerging

efforts from constituencies like the Variable Media Network to develop schemas to notate

and represent born-digital art and ephemera.

- Finally, digital archivists need the benefit of the kinds of conversations we’ve

been having in digital humanities. I’ve learned, for example, that it’s not obvious at

many collecting institutions whether “digital archivist” should be a

specialization, or whether archivists and personnel at all levels should be trained in

handling digital materials at appropriate points in the archival workflow. This tracks

strongly with conversations in digital humanities about roles and responsibilities

vis-a-vis scholars, digital humanities centers, and programmers. The emergence of the

alt-ac sensibility within digital humanities also offers a powerful model for creating

supportive environments within archival institutions that are undergoing transformations

in personnel and staff cultures.
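As an indication of what hands-on training with a hex editor involves, the view such a tool presents can be approximated in a few lines. This is a minimal sketch in Python, assuming the data has already been read from a disk image; the function name and the 16-byte row width are illustrative choices, not a reference to any particular tool.

```python
def hex_dump(data: bytes, width: int = 16) -> str:
    """Render bytes as rows of offset, hexadecimal values, and printable text."""
    pad = width * 3 - 1  # room for `width` two-digit values plus separators
    lines = []
    for offset in range(0, len(data), width):
        row = data[offset:offset + width]
        hex_part = " ".join(f"{byte:02x}" for byte in row)
        # Non-printable bytes are shown as dots, as hex editors conventionally do.
        text_part = "".join(chr(b) if 32 <= b < 127 else "." for b in row)
        lines.append(f"{offset:08x}  {hex_part:<{pad}}  {text_part}")
    return "\n".join(lines)
```

Applied to the first few hundred bytes of a disk image, a view like this lets a reader see text strings alongside the bytes that a conventional file listing would conceal.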

This then is the research agenda I would put forward to accompany the .txtual condition,

albeit expressed at a very high level. There is plenty of room for refinement and

elaboration. But for those of us who take seriously the notion that the born-digital

materials of today

are the literary of tomorrow, what’s at stake is nothing

less than what Jerome McGann has pointedly termed “the scholar’s art.” This is the

same impulse that sent Ken Price to the National Archives in search of scraps and jottings

from Whitman, the bureaucratic detritus of the poet’s day job. “Scholarship,” McGann reminds us, “is a service vocation. Not only are Sappho and Shakespeare

primary, irreducible concerns for the scholar, so is any least part of our cultural

inheritance that might call for attention. And to the scholarly mind, every smallest

datum of that inheritance has a right to make its call. When the call is heard, the

scholar is obliged to answer it accurately, meticulously, candidly, thoroughly”

[

McGann 2006, ix].

Stirring words, but if we are to continue to answer that call, and if we are to continue

to act in the service of that art, then we must commit ourselves to new forms of curricula

and training, those tangible methods of media archaeology and digital paleography; we must

find new forms of collaboration, such as those that might come into being between digital

humanities centers or media labs and archives and other cultural heritage institutions; we

must fundamentally reimagine our objects of study, to embrace the poetics (and the

science) of signal processing and symbolic logic alongside of alphabetic systems and

signs; and we must learn to form new questions, questions addressable to what our media

now inscribe in the objects and artifacts of the emerging archives of the .txtual

condition — even amid circumstances surely as quotidian as those of Whitman and his

clerkship.

Acknowledgements

A shorter and differently focused version of this essay is forthcoming in a collection

entitled Comparative Textual Media, edited by N. Katherine

Hayles and Jessica Pressman. I am grateful to them for allowing me to work from and

significantly expand that base of prose in my contribution here. The several paragraphs in

section IV concerning Paul Zelevansky’s SWALLOWS originally appeared in slightly different

form in an essay entitled “Bit by Bit” in the Chronicle of Higher Education, March 10, 2012.

Numerous archivists and information professionals have been welcoming and generous in

helping me learn more about their discipline, but in particular I am grateful to Paul

Conway, Bradley Daigle, Rachel Donahue, Luciana Duranti, Erika Farr, Ben Fino-Radin,

Jeremy Leighton John, Leslie Johnston, Kari Kraus, Cal Lee, Henry Lowood, Mark Matienzo,

Jerome McDonough, Donald Mennerich, Courtney Mumma, Trevor Muñoz, Naomi Nelson, Erin

O'Meara, Richard Ovenden, Trevor Owens, Daniel Pitti, Gabriela Redwine, Doug Reside, Jason

Scott, Seth Shaw, Kate Theimer, and Kam Woods. I am also grateful to the anonymous readers

for DHQ, as well as Jessica Pressman and Lisa Swanstrom, for their comments on drafts of

this essay; and to the organizers and audience at the 2011 Digital Humanities Summer

Institute, where portions of this were presented as the Institute Lecture. My dedication

to Jerome McGann (his virtual essence) acknowledges the influence he has had throughout my

thought and career.

Works Cited

Consultative Committee for Space Data Systems 2002 Consultative Committee for Space Data Systems. Reference Model for an Open Archival Information System (OAIS). Washington DC:

CCSDS Secretariat, 2002. Online.

Derrida 1995 Derrida, Jacques. Archive Fever: A Freudian Impression. Trans. Eric Prenowitz.

Chicago: University of Chicago Press, 1995. Print.

Duranti 2010 Duranti, Luciana. “A Framework for Digital Heritage Forensics.” Presentation. Computer Forensics and Born-Digital Content. College Park, MD:

University of Maryland, May 2010. Online.

Ernst 2002 Ernst, Wolfgang. “Agencies of Cultural Feedback: The Infrastructure of Memory.” In

Waste-Site Stories: The Recycling of Memory. Eds. Brian

Neville and Johanne Villeneuve. Albany: SUNY Press, 2002. 107-120. Print.

Ernst 2011 Ernst, Wolfgang. “Media Archaeography: Method and Machine versus History and Narrative of

Media.” In Media Archaeology: Approaches, Applications,

and Implications. Eds. Erkki Huhtamo and Jussi Parikka. Berkeley: University of

California Press, 2011. 239-255. Print.

Grossman 2010 Grossman, Lev. “Jonathan Franzen: Great American Novelist.”

Time. 12 August 2010. Online.

Hall 2011 Hall, Chris. “JG Ballard: Relics of a Red-Hot Mind.”

The Guardian. 3 August 2011. Online.

Howard 2011a Howard, Jennifer. “In Electric Discovery, Scholar Finds Trove of Walt Whitman Documents in

National Archives.”

Chronicle of Higher Education. 12 April 2011. Online.

Howard 2011b Howard, Jennifer. “U. of Texas Snags Archive of ‘Cyberpunk’ Literary Pioneer Bruce

Sterling.”

Chronicle of Higher Education. 8 March 2011. Online.

Keathley 2010 Keathley, Elizabeth.

Weblog comment. 19 January 2010. “The Archive or the Trace: Cultural

Permanence and the Fugitive Text.” SampleReality. 18 January 2010. Online.

Larsen 2009 Larsen, Deena. “Artist’s Statement.”

The Deena Larsen Collection. College Park, MD: MITH, 2009.

Online.

Lavoie 2004 Lavoie, Brian F. “The Open Archival Information System Reference Model: Introductory

Guide.” Dublin, OH: OCLC, 2004. Online.

MLA 1995 Modern Language Association. “Statement on the Significance of Primary Records.” New York: MLA,

1995. Online.

McDonough 2010 McDonough, Jerome,

et al. Preserving Virtual Worlds Final Report. Champaign, IL:

IDEALS, 2010. Online.

McGann 2006 McGann, Jerome. The Scholar’s Art: Literary Studies in a Managed World. Chicago:

University of Chicago Press, 2006. Print.

Morris 1998 Morris, R. J. “Electronic Documents and the History of the Late Twentieth Century: Black

Holes or Warehouses?” In Edward Higgs, ed. History and

Electronic Artefacts. Oxford: Oxford University Press, 1998. 31-48.

Print.

Parikka 2011 Parikka, Jussi. “Operative Media Archaeology: Wolfgang Ernst’s Materialist Media

Diagrammatics.”

Theory, Culture & Society 28.5 (2011): 52-74.

Online.

Ridener 2009 Ridener, John. From Polders to Postmodernism: A Concise History of Archival

Theory. Duluth: Litwin, 2009.

Ross 1999 Ross, Seamus and Ann Gow. Digital Archaeology: Rescuing Neglected and Damaged Data

Resources. Glasgow: Humanities Advanced Technology and Information Institute,

1999. Online.

Smith 1998 Smith, Abby. “Preservation in the Future Tense.”

CLIR Issues 3 (May/June 1998). Online.

Stauffer 2011 Stauffer, Andrew M.

“The Troubled Future of the 19th-Century Book.”

Chronicle of Higher Education. 11 December 2011.

Online.

Theimer 2012 Theimer, Kate. Weblog

comment. 28 March 2012. “Two Meanings of ‘Archival Silences’ and

Their Implications.” ArchivesNext. 27 March 2012. Online.

Thibodeau 2002 Thibodeau, Kenneth

T. “Overview of Technological Approaches to Digital Preservation

and Challenges in Coming Years.” In The State of Digital

Preservation: An International Perspective. Washington DC: Council on Library

and Information Resources, 2002.